We all love a good story. And with so many unknowns, it’s easy to see why artificial intelligence is at the center of so many. In Hollywood, movies like The Terminator, The Matrix, and The Stepford Wives all follow a common trope: AI at odds with humanity, with AI often getting the short end of the stick.

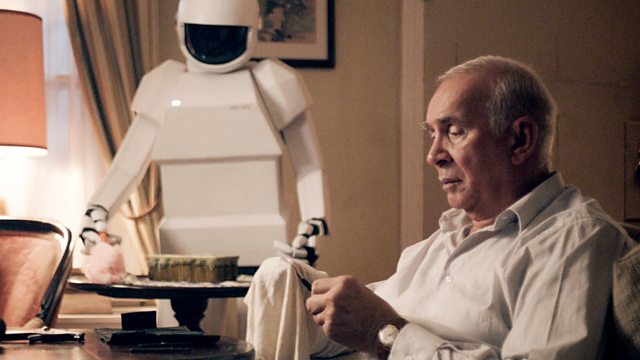

Released in 2012, Robot and Frank offers the most recognizable, even realistic, portrayal of AI I’ve seen. No robot yet can act as independently as the one in the movie, but from folding laundry to monitoring for accidents, today’s AI-powered robots are already assisting the elderly. With AI, seniors can also stay in touch with their loved ones and the professionals who look after them, letting them stay in their own homes or live without full-time care.

Much like in the movie, AI is helping seniors keep their independence while also offering caretakers peace of mind: they no longer have to worry about how the people in their care will get on without their constant presence. Because it is so close to our current world, Robot and Frank offers some key takeaways that could serve us well as we bring AI deeper into our lives.

Synopsis

*SPOILERS AHEAD*

Frank is a gruff old man with two adult children (Hunter and Madison). Suffering from dementia, his memory is deteriorating, causing his children to worry. Since Madison travels for work, Hunter is left alone to make regular 10-hour round trips to see their father, who is (at least outwardly) unmoved by their fretting.

So when Hunter buys Frank “a robot companion” that has been programmed to work as a home health aide, the old man is, to say the least, unimpressed.


Evidently sharing some common concerns about AI, Frank tries to refuse the robot on the grounds that it will kill him in his sleep. Nevertheless, and much to Frank’s irritation, Hunter activates the machine and sends it right to work.

Since Hunter’s alternative to the robot is putting his father in a “memory center,” Frank begrudgingly accepts it, but refuses its suggestions. Later, the robot tells him it can adjust its methods to suit his preferences, so long as the adjustment is consistent with its assigned goal of keeping Frank healthy.

During a trip into town, they visit the small bath shop Frank regularly steals from. These days Frank mostly lifts bath bombs and soap, but we learn that he is a retired master jewel thief (and still very much one in his head). So when he discovers that the robot has taken an item he failed to shoplift, assuming Frank simply forgot it, he is intrigued. It turns out that, despite its high level of intelligence, the robot has no concept of theft. With this knowledge in mind, Frank immediately warms to the robot and gets back to business.

So what can we learn from this movie?

1) We should probably develop AI to actually understand our laws.

While Ex Machina shows us a robot that is Machiavellian by design, Robot and Frank shows us one that is “Machiavellian by omission.” Because its developers taught the robot only the definitions of terms like “stealing,” rather than what those concepts actually entail, it can’t apply that knowledge in a meaningful way.

You may not remember exactly how you learned about stealing, but it’s not something you were born knowing or figured out entirely on your own. At some point, whether we were taught not to do it or, like Frank, to do it discreetly, someone or something taught us about it. Since AI’s “brains” (artificial neural networks, or ANNs) are loosely modeled on our own, and we need to be taught not to steal, it’s reasonable to assume AI is no different.

When AI tackles even the world’s most colossal problems, it does so because it has been trained to. If I train a computer vision model on the concept “dog,” and train it well, the model will learn to recognize dogs with a high level of accuracy. However, if I then upload an image of a cat, a well-made model could tell me “this is not a dog,” but it will not be able to say “this is not a dog, but it is a cat.” Why? Because I never taught it anything about cats or what they look like.
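A minimal sketch of the dog-and-cat point, assuming a toy “dog detector” written as a single logistic unit. The feature names (`floppy_ears`, `barks`, `meows`), the hand-picked weights, and the 0.5 threshold are all hypothetical, chosen for illustration rather than learned from real images. Because the model was only ever given the labels “dog” and “not a dog,” a cat can only come back as “not a dog”:

```python
import math

# Hypothetical hand-picked weights for a toy "dog detector" (not learned from data).
WEIGHTS = {"floppy_ears": 2.0, "barks": 3.0}
BIAS = -2.5
LABELS = ("dog", "not a dog")  # the only concepts this model was ever taught


def dog_probability(features: dict) -> float:
    """Logistic score for 'dog'; any feature the model has no weight for is ignored."""
    score = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-score))


def classify(features: dict, threshold: float = 0.5) -> str:
    """Return one of LABELS; there is no way for this model to answer 'cat'."""
    return LABELS[0] if dog_probability(features) >= threshold else LABELS[1]


print(classify({"floppy_ears": 1.0, "barks": 1.0}))  # prints: dog
print(classify({"meows": 1.0}))  # prints: not a dog ("meows" is simply ignored)
```

Getting the answer “cat” would require adding a new label and examples for it; more dog pictures alone would never supply the concept.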

If Frank’s robot were trained to recognize a “hobby” as any “regular activity that is done for enjoyment” and is good for your health, then unless it is taught that Frank’s preferred pastimes, such as “breaking and entering,” “shoplifting,” and “lock-picking,” are not hobbies, it would plausibly file these activities right alongside the examples it knows.
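To make the hobby example concrete, here is a hypothetical rule-based check that applies the robot’s definition literally. The `Activity` fields and the `excluded` set are assumptions for illustration, not anything the film specifies. Until someone supplies the exclusion, lock-picking passes every test the definition sets:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    name: str
    regular: bool    # done on a regular basis
    enjoyable: bool  # done for enjoyment
    healthy: bool    # judged good for the person's health


def is_hobby(activity: Activity, excluded: frozenset = frozenset()) -> bool:
    """Apply the literal definition, unless the activity was explicitly excluded."""
    if activity.name in excluded:
        return False
    return activity.regular and activity.enjoyable and activity.healthy


gardening = Activity("gardening", regular=True, enjoyable=True, healthy=True)
lock_picking = Activity("lock-picking", regular=True, enjoyable=True, healthy=True)

print(is_hobby(gardening))     # True
print(is_hobby(lock_picking))  # True: nothing in the definition rules it out
print(is_hobby(lock_picking, excluded=frozenset({"lock-picking", "shoplifting"})))  # False
```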

Further, mentally stimulating activities like puzzles have been shown to benefit people with dementia, as they can help improve cognitive functions like perception, reasoning and, of course, remembering. During their lock-picking lesson, Frank describes every lock as a puzzle and reminisces about his career as a jewel thief. Guess what else is considered effective against dementia? (Reminiscence. It’s reminiscence.) As he plans out their heists, Frank’s mental health begins to improve, which makes burglary well aligned with the robot’s foremost goal. So, with its goal being achieved and no concept of legality or morality…

While watching Frank use the robot’s weakness to steal from the (snooty, snotty) rich and give to the poor (in morals) is a fun ride, the real-life implications of such a robot could be far more dangerous. Generally, laws set a standard of conduct and allow civil society to function smoothly. While AI has largely been applied to helping humans protect ourselves and obey laws, its versatility means it could easily be exploited by wrongdoers, particularly given the raw speed of computers. We see this when, upon finding a safe in the house they are robbing, Frank leaves the robot to find the combination. Frank guesses it would take a human weeks to figure out. The robot? “Assuming it's a three-number combination, (it would take) somewhere between four seconds and one hour and 43 minutes.”

To help today’s AI systems focus on their assigned tasks, teaching them to block out whatever is irrelevant to their goals is key. Still, as AI continues to progress, we need to make sure we don’t teach it to ignore what it shouldn’t.

2) We should also teach it to actually take our laws and ethics into account.

Because advanced AI systems can ignore anything that isn’t relevant to their assigned goals, I would argue that even if the developers of Frank’s robot had trained it to understand concepts like “legality” and “burglary,” it would still analyze this information from only one angle: “Is it bad for Frank?”

Storytime: two little girls were (briefly) left home alone and instructed that under no circumstances were they to let anyone into the house. While their parents were out, however, their beloved grandmother stopped by. Given their assigned goal of not letting anyone in, the girls refused to open the door. It didn’t matter that she was somebody they knew well and saw all the time. They were told that nobody should be let in, so nobody was let in.

Like children, AI cannot think critically or decide for itself that a particular circumstance warrants an exception when it clashes with, or falls outside, its assigned task. It has to be explicitly told to do so. For the most part, this is fine. There was no actual harm, for instance, in the little girls making their grandma wait outside. Still, it’s important to anticipate as many scenarios as possible, and building diverse teams with diverse points of view and experiences helps us do that.

Humans might forgo certain advantages to help others avoid harm, but sometimes we act solely in our own self-interest. Maybe the robot’s developers couldn’t have foreseen their invention ending up in the hands of an impulsive old jewel thief with a grudge, but somebody did think to write a whole movie about it, and truth is stranger than fiction.

3) And we should definitely not let it override ethical standards where it suits its goal.

Since Frank’s robot tells him “your health supersedes my other directives,” we can infer that even if its developers had done all of the above, it would forge ahead with the burglaries regardless. Even as narrow AI gets smarter, it cannot look beyond its original goal unless it is trained or instructed to do so.

As developers push the frontiers of AI, they need to ensure that AI knows to abandon its assigned goal if achieving it requires violating a law or ethical standard. Without this constraint, even if Frank’s robot could assess the impact the burglaries would have on his family and on his pompous, gaudy victims, their benefit to Frank’s mental health would take precedence.
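One way to picture that missing constraint, assuming a hypothetical planner that scores candidate activities by their estimated health benefit. The option names and scores below are made up for illustration. With `respect_law=False` the legal and ethical check is simply ignored; with `respect_law=True` it acts as a hard filter applied before the goal is optimized, and if nothing acceptable remains, the planner abandons the goal rather than breaking the rule:

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Option:
    name: str
    health_benefit: float  # hypothetical score for Frank's health
    legal: bool            # stands in for "consistent with law and ethics"


def choose(options: Iterable[Option], respect_law: bool) -> Optional[Option]:
    """Pick the option with the highest health benefit.

    When respect_law is True, illegal options are removed *before* ranking,
    so no health benefit can outweigh them. Returns None (abandon the goal)
    if no acceptable option is left.
    """
    candidates = [o for o in options if o.legal or not respect_law]
    if not candidates:
        return None
    return max(candidates, key=lambda o: o.health_benefit)


burglary = Option("plan a burglary", health_benefit=0.9, legal=False)
puzzle = Option("do a jigsaw puzzle", health_benefit=0.6, legal=True)

print(choose([burglary, puzzle], respect_law=False).name)  # plan a burglary
print(choose([burglary, puzzle], respect_law=True).name)   # do a jigsaw puzzle
print(choose([burglary], respect_law=True))                # None
```

The design choice here is the ordering: legality is checked as a filter rather than added as one more weighted term, because a weighted penalty could still be outweighed by a large enough health benefit.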

All of this being said, the movie confirms something we’ve already seen: AI has the ability to change and improve lives. In the end, when Frank is forced to reset his robot so that he cannot be incriminated for the burglaries, he is reluctant. This is due, at least partly, to the way the robot has changed his life. He exploits its weaknesses, but thanks to the robot’s abilities, he regains the freedom and confidence he had lost to his illness. Though we need to close the kinds of gaps I outlined above, if we do, AI offers opportunities that many humans have lost or never had. With careful and responsible development, there’s much to gain from AI, and not developing the technology would only be our loss.