We've created a module for this tutorial. You can follow these directions to create your own module using the Clarifai template, or just use this module itself on Clarifai Portal.

The advent of large language models (LLMs) such as GPT-3 and GPT-4 has revolutionized the field of artificial intelligence. These models can generate human-like text, answer questions, and even produce persuasive, coherent content. However, LLMs have shortcomings: they rely on information fixed in their training data, which may be outdated or incorrect, and they can produce inconsistent responses. Retrieval-augmented generation (RAG) is designed to close this gap between potential and reliability.

RAG is an AI framework that augments LLMs by grounding them in external knowledge bases that can be kept accurate and up to date. Before generating, RAG retrieves relevant facts and data so that responses are not only convincing but also informed by the latest information. Because the retrieved sources can be shown alongside the answer, RAG can improve response quality and make the generative process more transparent, which builds trust and credibility in AI-powered applications.

RAG refines the conventional LLM output through a multi-step procedure. It starts by organizing the data, splitting large volumes of text into smaller, more digestible chunks. Each chunk is converted into a vector (an embedding): a list of numbers that captures the chunk's meaning, so that passages with similar content end up close to one another. When a query arrives, RAG converts it into a vector as well, searches the vector database for the chunks closest to it, and passes those chunks to the LLM as context. This is akin to handing someone reference material before asking them a question, except that it happens behind the scenes.
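
The "search the vector database" step boils down to comparing the query's vector with every stored vector. Below is a minimal sketch in plain Python, assuming the vectors already exist. The 3-dimensional vectors and sample chunks are made up for illustration, and cosine similarity is one common choice of measure, not necessarily the one Clarifai uses internally.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction, so treat it as dissimilar
    return dot / (norm_a * norm_b)


def top_k_chunks(query_vec: list[float], chunk_vecs: list[list[float]], k: int = 2) -> list[int]:
    """Return the indices of the k chunk vectors most similar to the query vector."""
    scores = [cosine_similarity(query_vec, vec) for vec in chunk_vecs]
    ranked = sorted(range(len(chunk_vecs)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


# Made-up 3-dimensional vectors for three chunks and one query.
chunks = [
    "Refunds are issued within 30 days.",
    "Our office opens at 9am.",
    "Returns require a receipt.",
]
chunk_vecs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.9, 0.1, 0.0]]
query_vec = [1.0, 0.0, 0.0]
for i in top_k_chunks(query_vec, chunk_vecs, k=2):
    print(chunks[i])
```

Here the first and third chunks are returned, because their vectors point in almost the same direction as the query's.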

RAG then presents this enriched prompt, now equipped with current and relevant facts, to the LLM to generate a response. The reply is no longer based only on statistical word associations learned during training; it is grounded in retrieved text that relates directly to the query. Retrieval and generation both happen invisibly, so end users simply receive an answer that is more accurate and easier to verify against its sources.
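
The enriched prompt is ordinary text that places the retrieved chunks ahead of the question. A minimal sketch of assembling one follows; the build_augmented_prompt name and the template wording are assumptions for illustration, not the prompt that LangChain or Clarifai actually sends.

```python
def build_augmented_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine retrieved chunks and the user's question into one prompt string."""
    # Number each chunk so the answer can point back to the passage it used.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(context_chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_augmented_prompt(
    "How long do refunds take?",
    ["Refunds are issued within 30 days of a return.", "Returns require a receipt."],
)
print(prompt)
```

Telling the model to admit when the context lacks an answer is one common way to discourage it from falling back on guesses from its training data.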

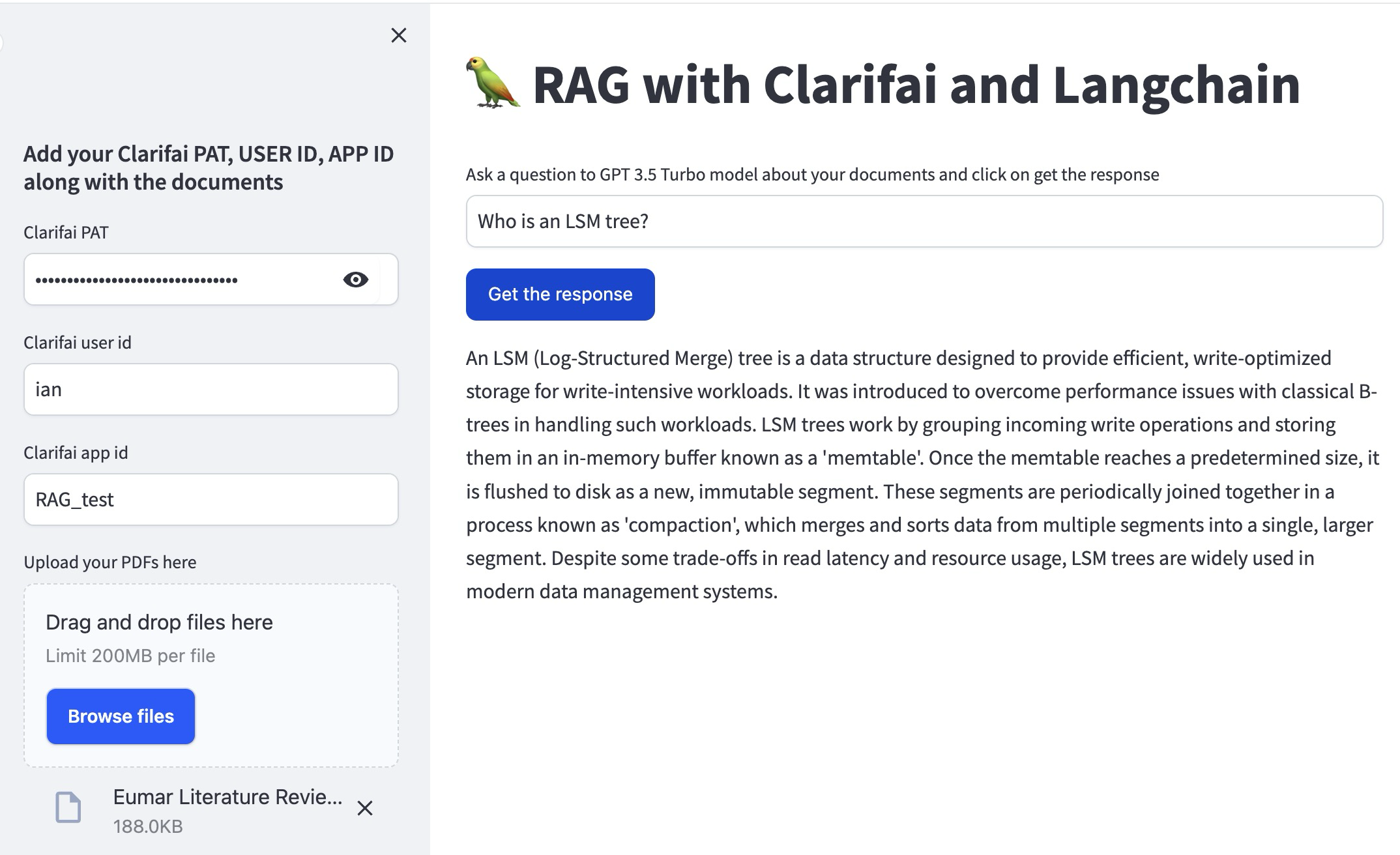

This short tutorial walks through an example RAG implementation built with Streamlit, LangChain, and Clarifai, showing how developers can build systems that leverage the strengths of LLMs while using RAG to mitigate their limitations.

Again, you can follow these directions to create your own module using the Clarifai template, or just use this module itself on Clarifai Portal to get going in less than 5 minutes!

Let's take a look at the steps involved and how they're accomplished.

Before you can use RAG, you need to organize your data into manageable pieces that the system can refer to later. The first step breaks PDF documents down into smaller text chunks, which the embedding model then converts into vector representations.

Code Explanation:

The load_chunk_pdf function reads the uploaded PDF files into memory. It then uses LangChain's CharacterTextSplitter to split the text of these documents into chunks of 1000 characters with no overlap between them.
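
As a rough illustration of what "chunks of 1000 characters without any overlap" means, the sketch below slices already-extracted text into fixed-size windows. It is a simplification: LangChain's CharacterTextSplitter first splits on a separator (a blank line by default) and then merges the pieces up to the size limit, and PDF text extraction is left out, so each document is assumed to be a plain string already. The chunk_text and chunk_documents names are hypothetical.

```python
def chunk_text(text: str, chunk_size: int = 1000) -> list[str]:
    """Split text into consecutive, non-overlapping chunks of at most chunk_size characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [text[start:start + chunk_size] for start in range(0, len(text), chunk_size)]


def chunk_documents(texts: list[str], chunk_size: int = 1000) -> list[str]:
    """Chunk several documents (assumed: one extracted string per PDF) into one flat list."""
    chunks: list[str] = []
    for text in texts:
        # Chunk each document separately so that no chunk spans two files.
        chunks.extend(chunk_text(text, chunk_size))
    return chunks


docs = ["A" * 2500, "B" * 300]
print([len(c) for c in chunk_documents(docs)])  # [1000, 1000, 500, 300]
```

With no overlap, a sentence that straddles a chunk boundary is cut in two; splitters often add a small overlap to soften that, at the cost of storing some text twice.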

Once your documents are chunked, you need to convert the chunks into vectors, a numerical form that the system can store and search efficiently.

Code Explanation:

The vectorstore function creates a vector database using Clarifai. It takes the user's credentials and the chunked documents, then uses Clarifai's service to store the document vectors.
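
In the tutorial, this work is delegated to Clarifai's hosted service. To show what a vector store does conceptually, here is a self-contained, in-memory stand-in. The hashed bag-of-words embed function and the InMemoryVectorStore class are hypothetical toys: they capture word overlap rather than meaning, unlike the embedding models a real service uses, and they are not the Clarifai or LangChain API.

```python
import hashlib
import math
import re

DIM = 64  # toy size; real embedding models produce much longer vectors


def embed(text: str) -> list[float]:
    """Toy embedding: hash each lowercase word into one of DIM buckets, then L2-normalize."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # sha256 gives the same bucket on every run (Python's built-in hash() does not for str).
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


class InMemoryVectorStore:
    """Hypothetical stand-in for a hosted vector database."""

    def __init__(self) -> None:
        self._texts: list[str] = []
        self._vectors: list[list[float]] = []

    def add_texts(self, texts: list[str]) -> None:
        """Embed each chunk and keep the text alongside its vector."""
        for text in texts:
            self._texts.append(text)
            self._vectors.append(embed(text))

    def similarity_search(self, query: str, k: int = 4) -> list[str]:
        """Return the k stored chunks whose vectors are closest to the query's vector."""
        q = embed(query)
        # All vectors are unit length (or zero), so the dot product is the cosine similarity.
        scores = [sum(a * b for a, b in zip(q, v)) for v in self._vectors]
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        return [self._texts[i] for i in ranked[:k]]


store = InMemoryVectorStore()
store.add_texts(["Refunds are issued within 30 days.", "Our office opens at 9am."])
print(store.similarity_search("Refunds are issued within 30 days.", k=1))
```

Storing each text next to its vector is what lets a search return readable passages rather than raw numbers.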

With the data organized into vectors, the next step is to set up the Q&A model that uses RAG over the prepared document vectors.

Code Explanation:

The QandA function sets up a RetrievalQA object using LangChain and Clarifai. This is where the Clarifai-hosted LLM is instantiated and the RAG chain is initialized with the "stuff" chain type, which inserts ("stuffs") all of the retrieved chunks into a single prompt for the LLM.
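
To show what the "stuff" chain type does, here is a dependency-free sketch in which retrieval and the LLM are passed in as plain functions. The stuff_qa name, the prompt wording, and the max_context_chars cap are hypothetical; this is not how RetrievalQA is implemented, only the same idea in miniature.

```python
from typing import Callable


def stuff_qa(
    question: str,
    retrieve: Callable[[str], list[str]],
    llm: Callable[[str], str],
    max_context_chars: int = 8000,
) -> str:
    """Retrieve documents, 'stuff' them all into one prompt, and call the LLM once."""
    docs = retrieve(question)
    context = "\n\n".join(docs)
    # A single call means everything must fit in one prompt; this character cap is a
    # hypothetical stand-in for the model's real (token-based) context limit.
    if len(context) > max_context_chars:
        raise ValueError("retrieved context is too long to stuff into a single prompt")
    prompt = (
        "Use the following pieces of context to answer the question.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Helpful answer:"
    )
    return llm(prompt)


# Stub components so the sketch runs without credentials or network access.
def fake_retriever(question: str) -> list[str]:
    return ["Refunds are issued within 30 days.", "Returns require a receipt."]


def echo_llm(prompt: str) -> str:
    return prompt  # a real LLM would generate an answer from this prompt


print(stuff_qa("How long do refunds take?", fake_retriever, echo_llm))
```

Because everything goes into one call, stuffing is simple and lets the model see all the context at once, but it only works while the retrieved chunks fit in the model's context window. LangChain's other chain types, such as map_reduce and refine, spread the documents across several LLM calls instead.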

Here, we create a user interface where users can enter their questions. The app gathers the question and the user's credentials, and generates a response when the user requests it.

Code Explanation:

This is the main function that uses Streamlit to create a user interface. Users can input their Clarifai credentials, upload documents, and ask questions. The function handles reading in the documents, creating the vector store, and then running the Q&A model to generate answers to the user's questions.

The last part of the script is the entry point, which launches the Streamlit user interface (the main function) when the script is run directly. From there, main orchestrates the entire RAG process, from user input to displaying the generated answer.
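
The control flow of main can be sketched without Streamlit. In the version below, the Credentials fields (user ID, app ID, personal access token), the run_app function, and the stub components are all assumptions for illustration; in the real module, Streamlit widgets collect the inputs and the tutorial's own functions do the actual work.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Credentials:
    """Hypothetical container for the Clarifai values the app asks the user for."""
    user_id: str
    app_id: str
    pat: str  # personal access token

    def missing_fields(self) -> list[str]:
        return [name for name, value in vars(self).items() if not value.strip()]


def run_app(
    creds: Credentials,
    documents: list[str],
    question: str,
    chunk: Callable[[list[str]], list[str]],
    build_store: Callable[[Credentials, list[str]], Any],
    answer: Callable[[Any, str], str],
) -> str:
    """Run the RAG steps in order once the user has supplied everything."""
    missing = creds.missing_fields()
    if missing:
        raise ValueError("missing credentials: " + ", ".join(missing))
    if not documents or not question.strip():
        raise ValueError("upload at least one document and enter a question")
    chunks = chunk(documents)           # 1. split the documents into chunks
    store = build_store(creds, chunks)  # 2. index the chunks in a vector store
    return answer(store, question)      # 3. retrieve context and generate the answer


def main() -> None:
    # Stub components; in the tutorial these roles roughly correspond to
    # load_chunk_pdf, vectorstore and QandA, with Streamlit supplying the inputs.
    creds = Credentials(user_id="me", app_id="rag-demo", pat="not-a-real-token")
    result = run_app(
        creds,
        documents=["Refunds are issued within 30 days."],
        question="How long do refunds take?",
        chunk=lambda docs: docs,
        build_store=lambda c, chunks: chunks,
        answer=lambda store, q: f"{len(store)} chunk(s) searched for: {q}",
    )
    print(result)


if __name__ == "__main__":
    main()
```

Checking the inputs before calling any service keeps the app from making requests it cannot complete, and the guard at the bottom plays the same role as the entry point described above.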

The full code for the module is available in its GitHub repo here, and you can also use it yourself as a module on the Clarifai platform.

© 2023 Clarifai, Inc. Terms of Service Content TakedownPrivacy Policy
