This blog post focuses on new features and improvements. For a comprehensive list including bug fixes, please see the release notes.

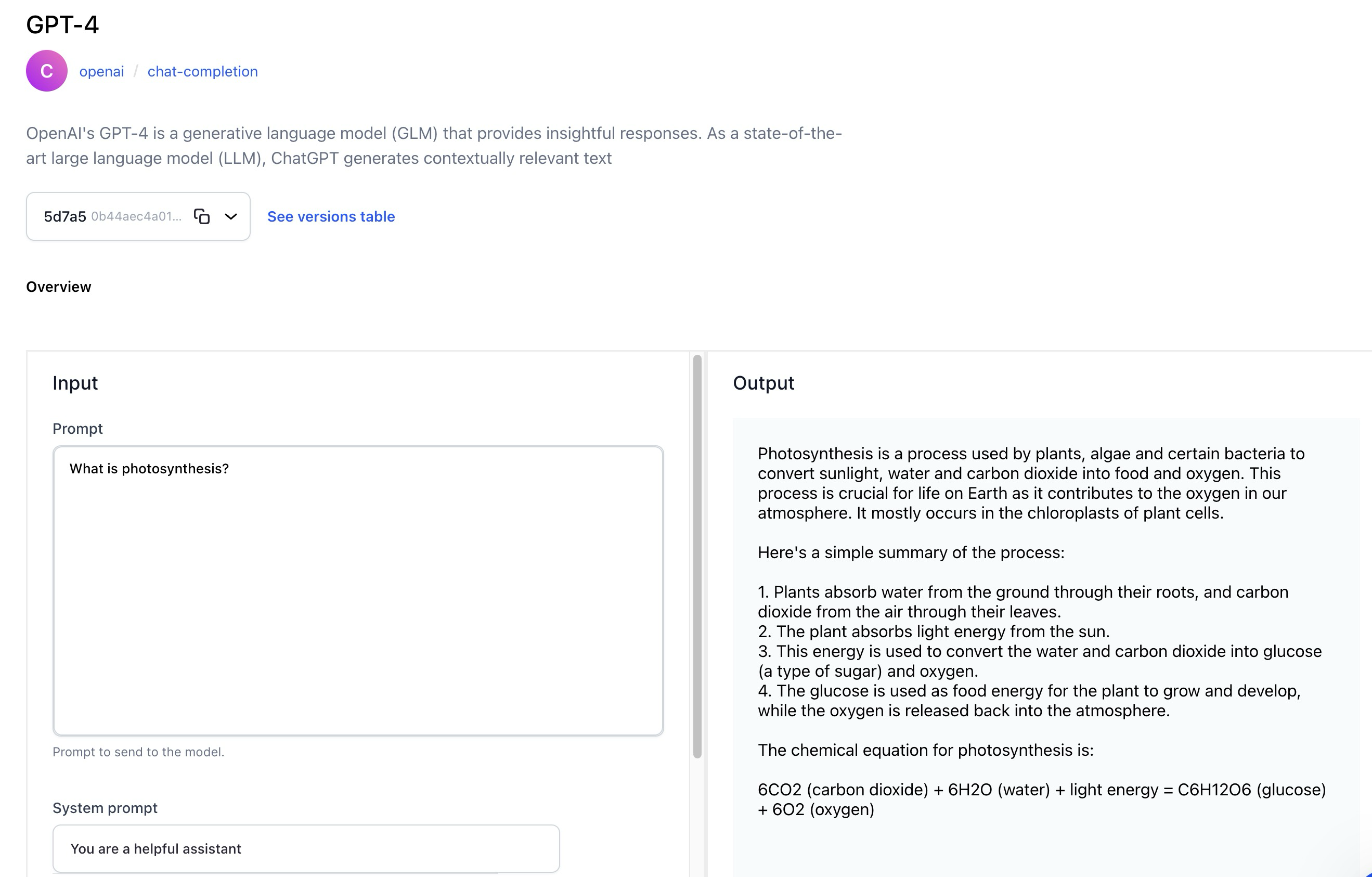

Text Generation

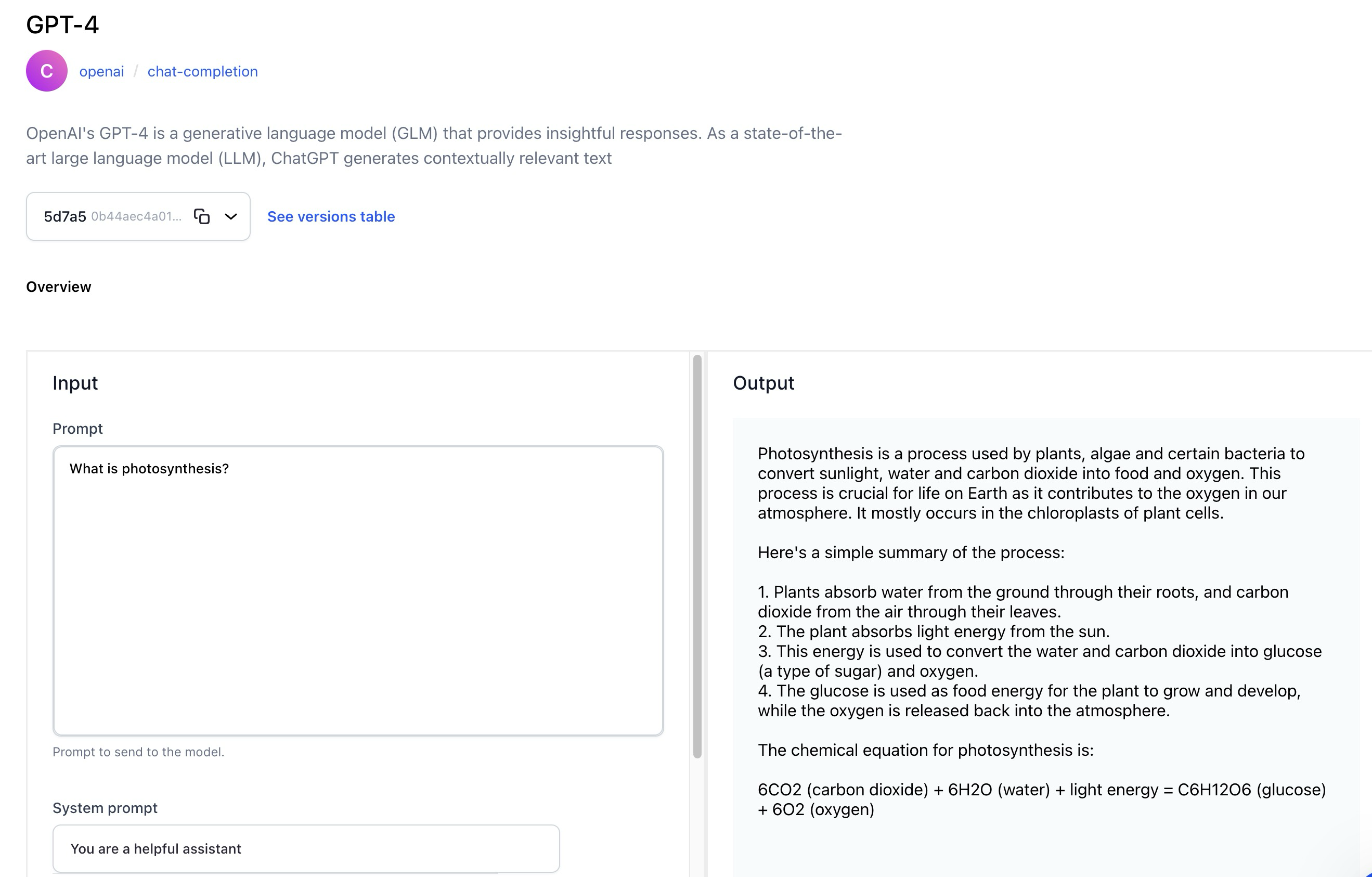

- The Model-Viewer screen of text generation models now has a revamped UI that lets you effortlessly generate or convert text based on a given text input prompt.

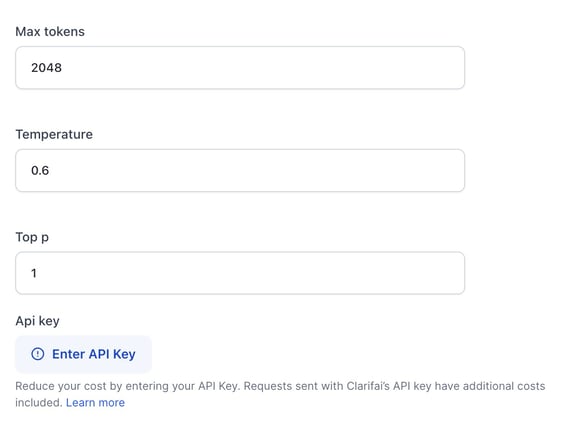

- For third-party wrapped models, such as those provided by OpenAI, you can optionally use your own API key from the provider instead of the default Clarifai keys.

- Optionally, you can enrich the model's understanding by providing a system prompt, also known as context.

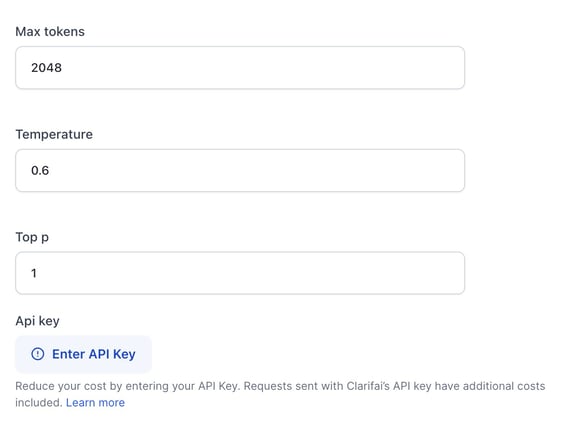

- You can also configure inference parameters, which are hidden by default.

- The revamped UI provides users with versatile options to manage the generated output. You can regenerate, copy, and share output.

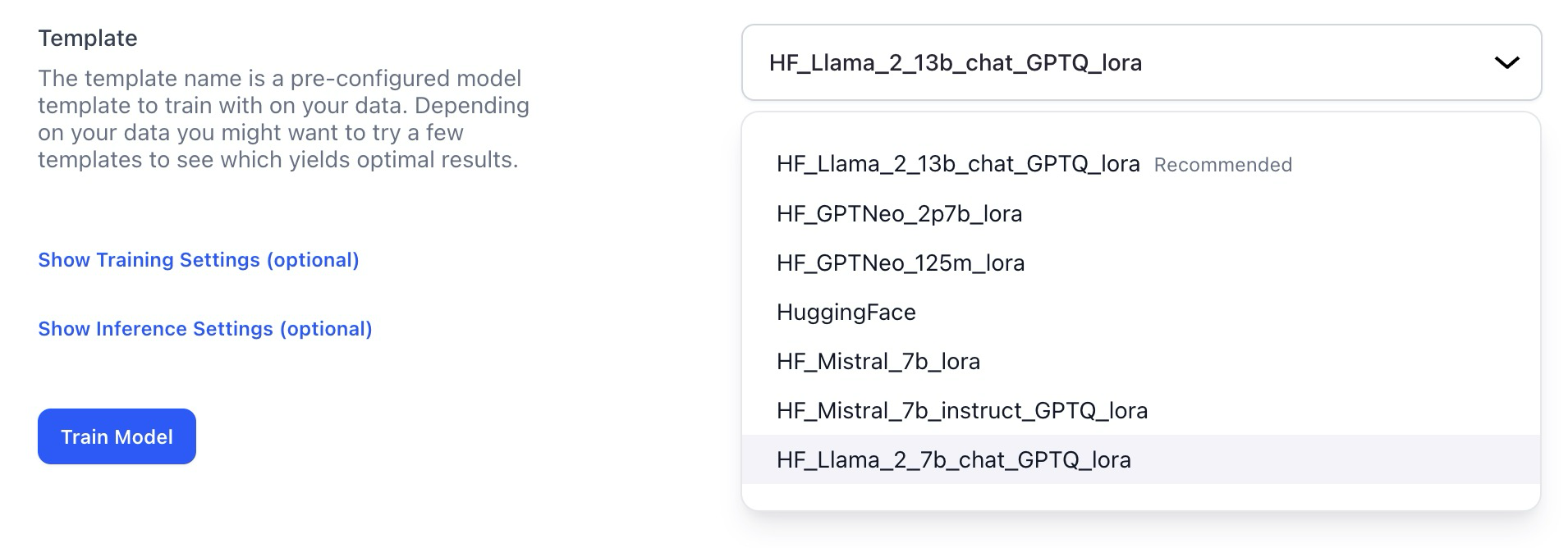

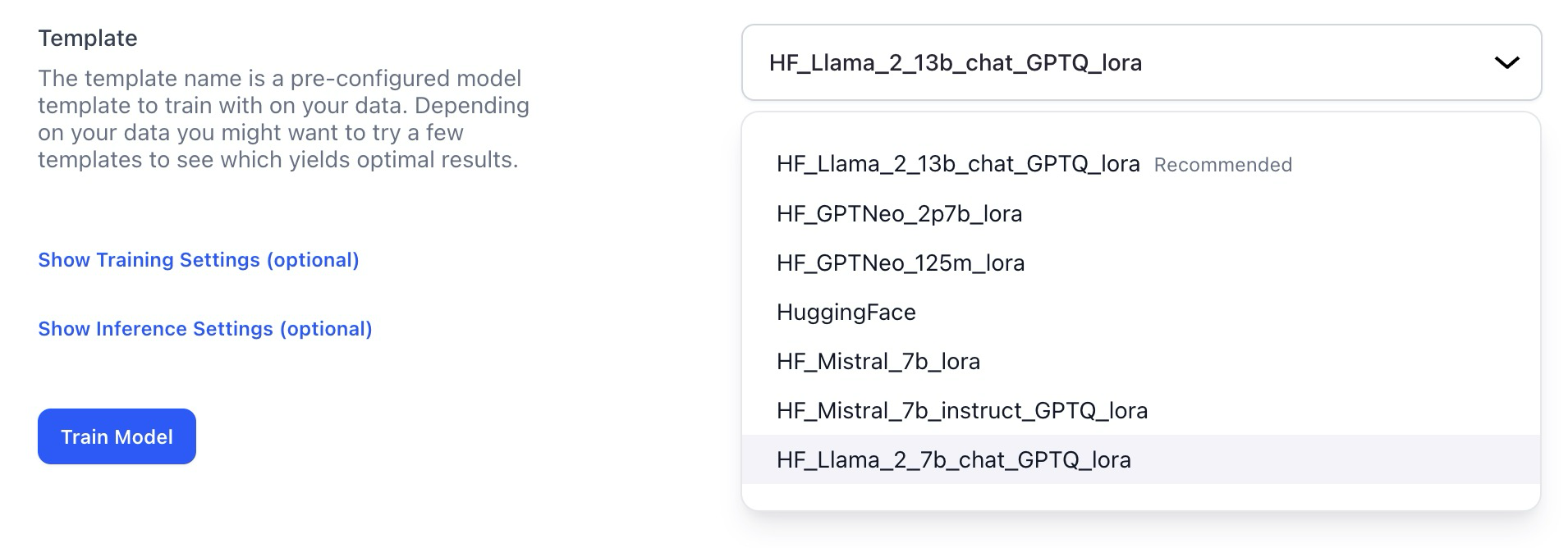

Added more training templates for text-to-text generative tasks

- You can now use Llama2 7/13B and Mistral templates as a foundation for fine-tuning text-to-text models.

- There are also additional configuration options that give you finer control over the training process. Notably, you can now set quantization parameters via GPTQ when fine-tuning.

Models

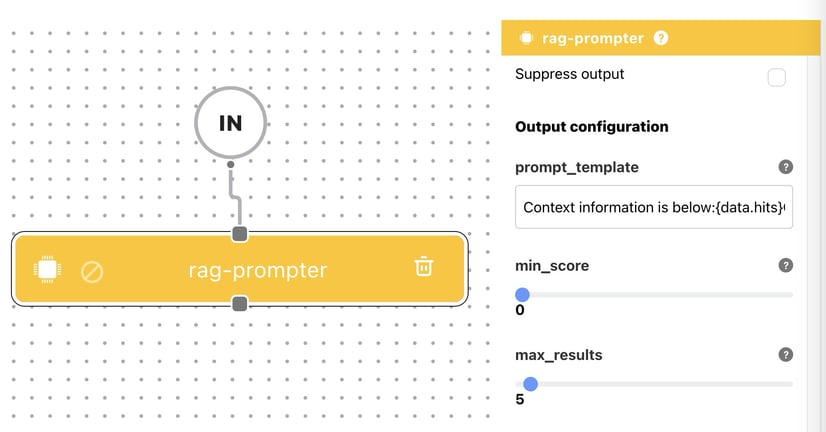

Introduced the RAG-Prompter operator model

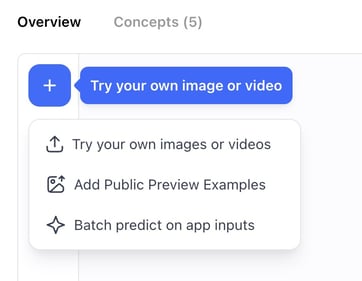

Improved the process of making predictions on the Model-Viewer screen

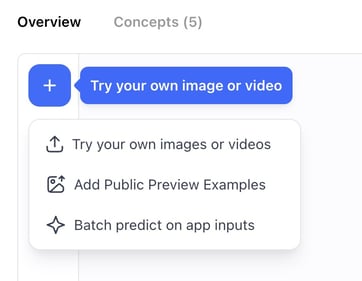

To make a prediction using a model, navigate to the model’s viewer screen and click the Try your own input button. A modal will pop up, providing a convenient interface for adding input data and examining predictions.

The modal now provides you with three distinct options for making predictions:

- Batch Predict on App Inputs—allows you to select an app and a dataset. Subsequently, you’ll be redirected to the Input-Viewer screen with the default mode set to Predict, allowing you to see the predictions on inputs based on your selections.

- Try Uploading an Input—allows you to add an input and see its predictions without leaving the Model-Viewer screen.

- Add Public Preview Examples—allows model owners to add public preview examples.

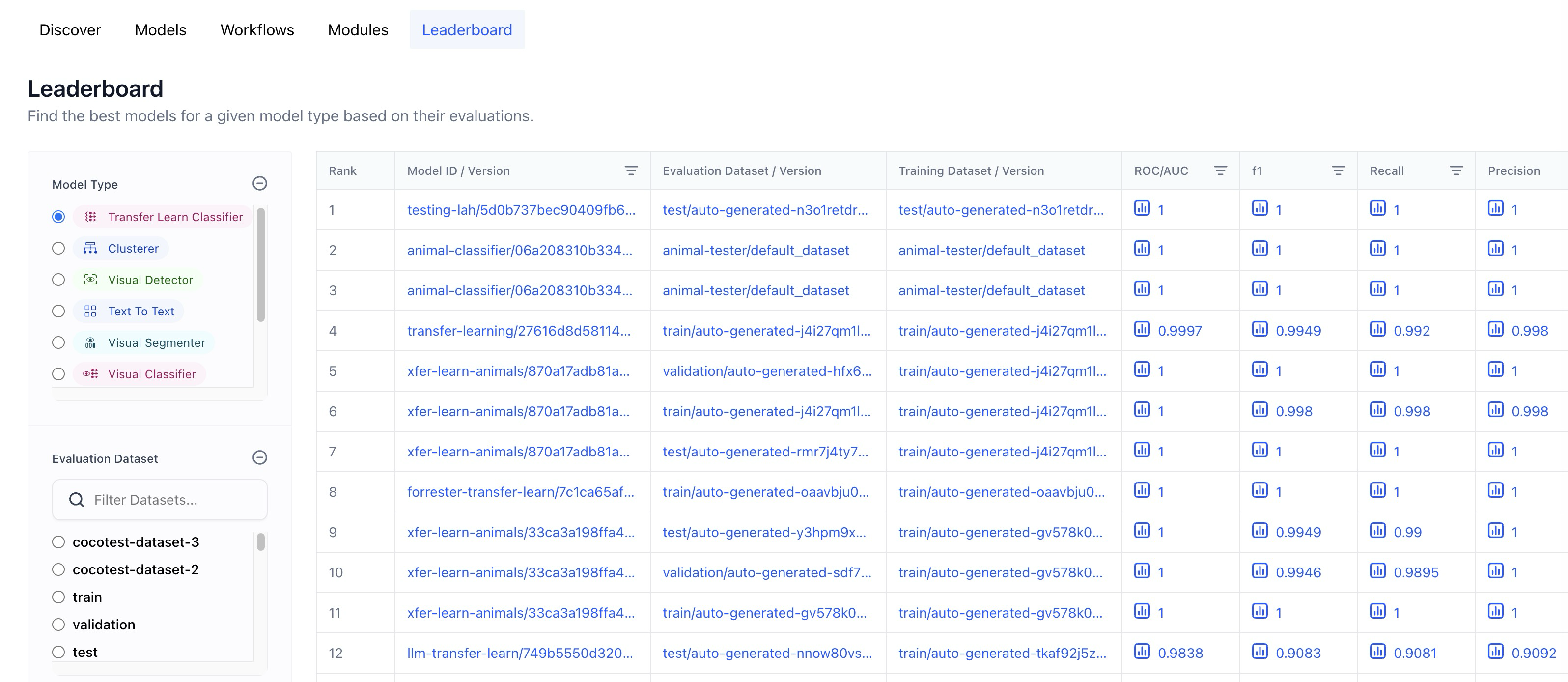

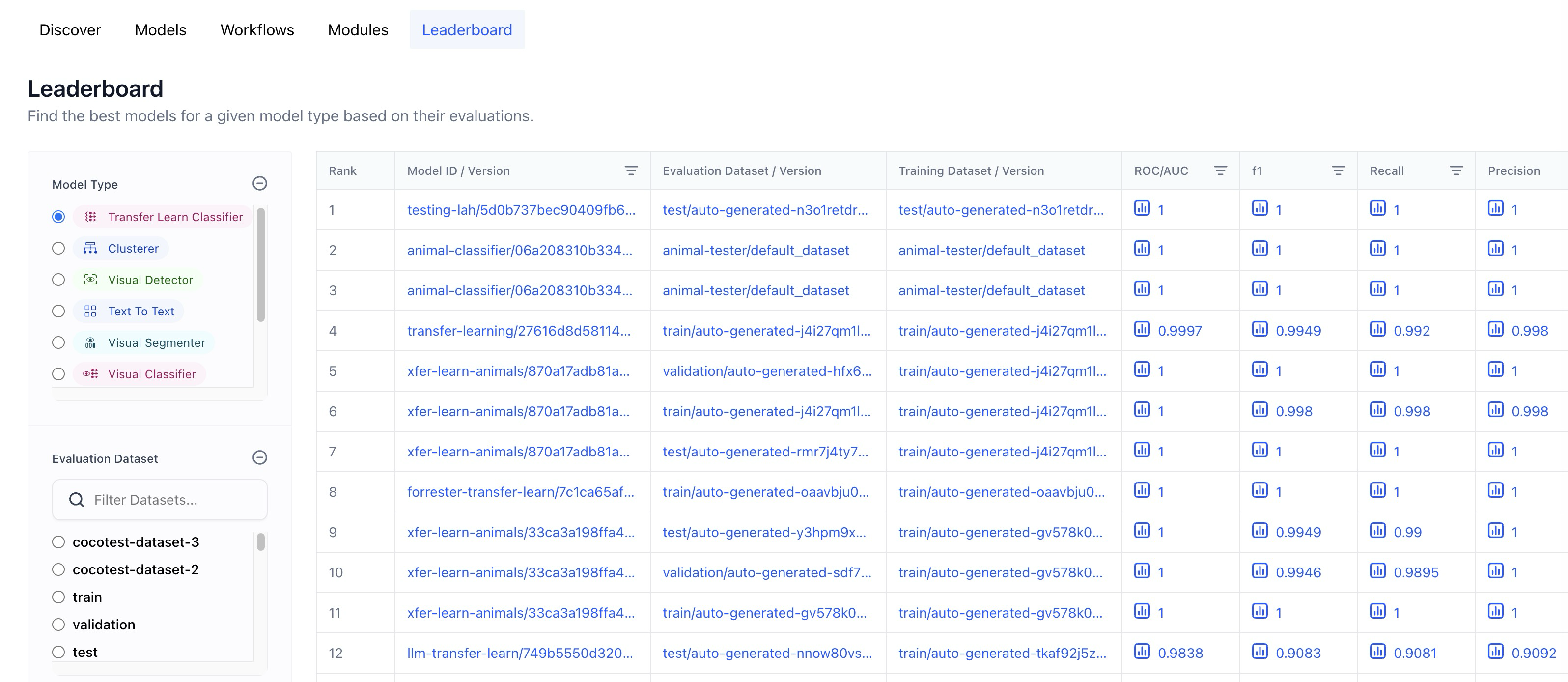

- Replaced the Context based classifier wording with Transfer learn.

- Added a dataset filter functionality that only lists datasets that were successfully evaluated.

- A full URL is now displayed when hovering over the table cells.

- Replaced "-" in empty table cells of the training and evaluation dataset columns with "-all-app-inputs".

Added support for inference settings

- All models have been updated with new versions that support inference hyperparameters such as temperature and top_k. The exceptions are a handful of older uploaded models, such as xgen-7b-8k-instruct, mpt-7b-instruct, and falcon-7b, which do not support inference settings and have not received these updates.

New Published Models

Published several new, ground-breaking models

- Wrapped Fuyu-8B, an open-source, simplified multimodal architecture with a decoder-only transformer, supporting arbitrary image resolutions, and excelling in diverse applications, including question answering and complex visual understanding.

- Wrapped Cybertron 7B v2, a MistralAI-based large language model (LLM) excelling in mathematics, logic, and reasoning. It consistently ranks #1 in its category on the HF LeaderBoard, enhanced by the innovative Unified Neural Alignment (UNA) technique.

- Wrapped Llama Guard, an LLM-based input-output safeguard for content moderation, excelling in classifying safety risks in Human-AI conversations and outperforming other models on diverse benchmarks.

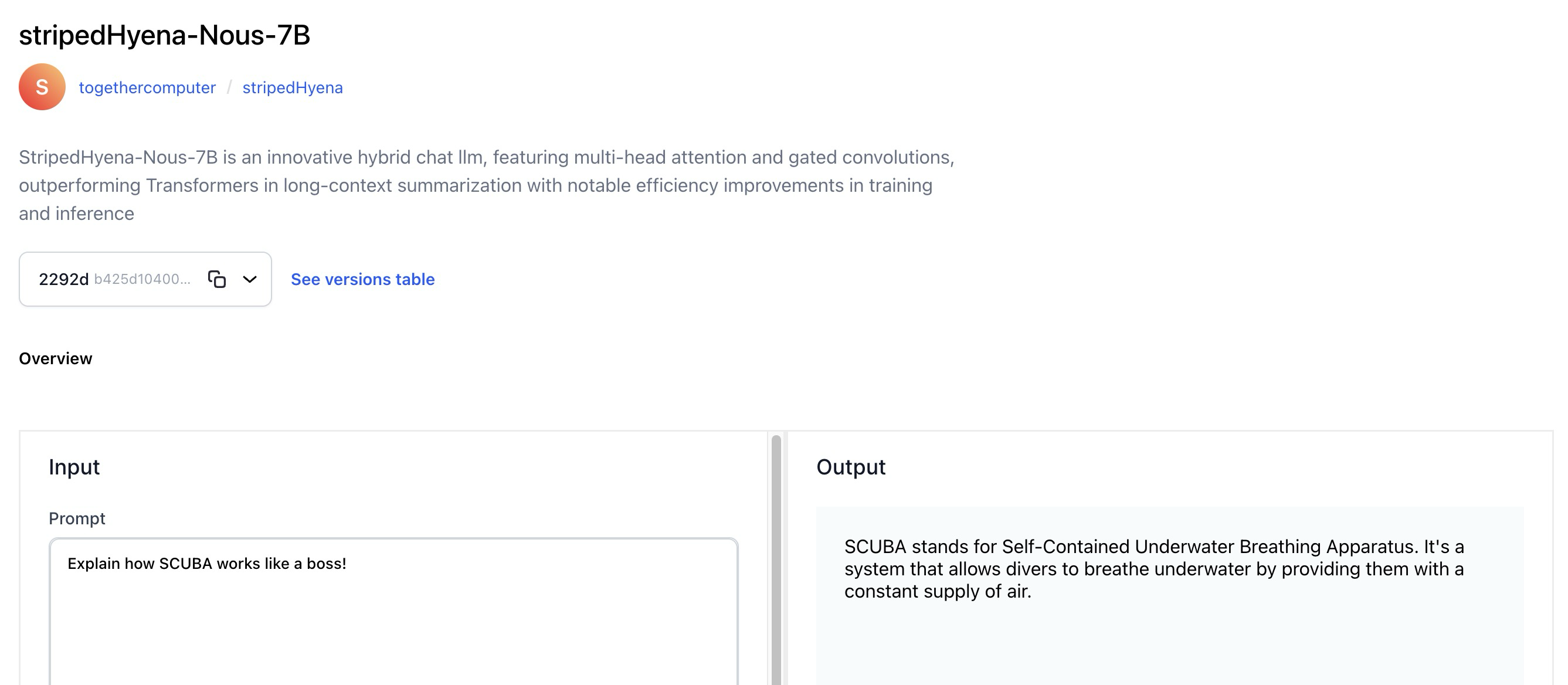

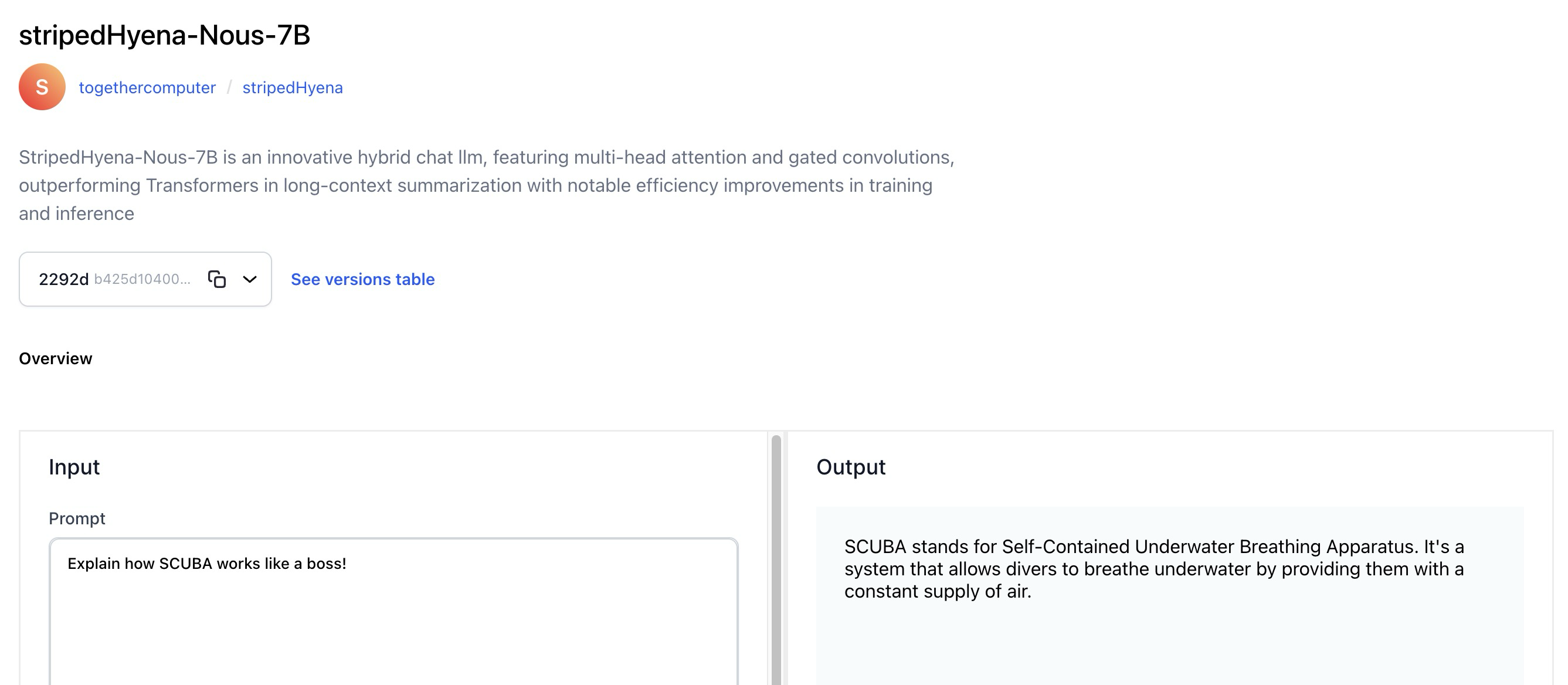

- Wrapped StripedHyena-Nous-7B, an innovative hybrid chat LLM featuring multi-head attention and gated convolutions. It outperforms Transformers in long-context summarization, with notable efficiency improvements in training and inference.

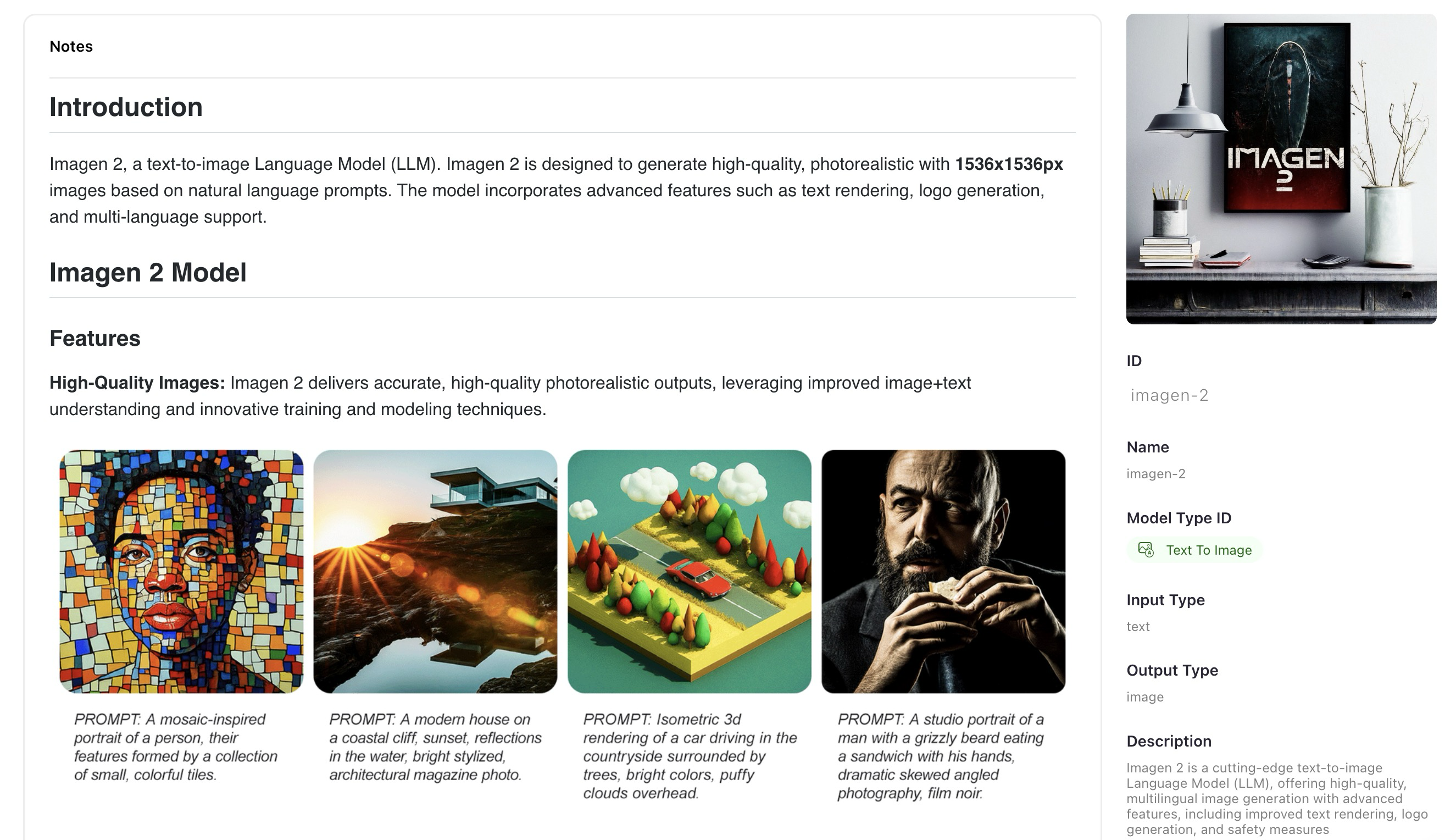

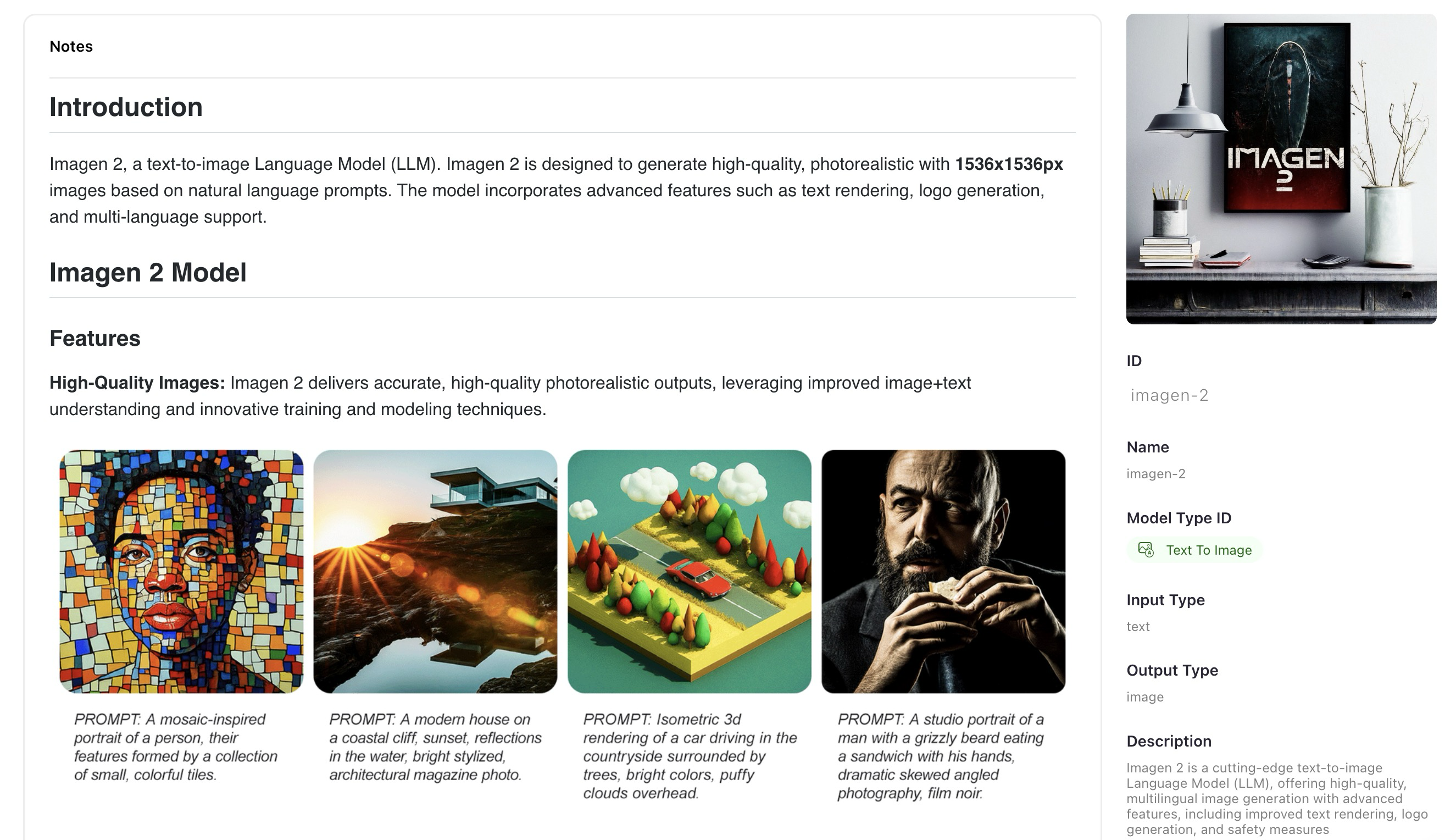

- Wrapped Imagen 2, a cutting-edge text-to-image model offering high-quality, multilingual image generation with advanced features, including improved text rendering, logo generation, and safety measures.

- Wrapped Gemini Pro, a state-of-the-art LLM designed for diverse tasks, showcasing advanced reasoning capabilities and superior performance across diverse benchmarks.

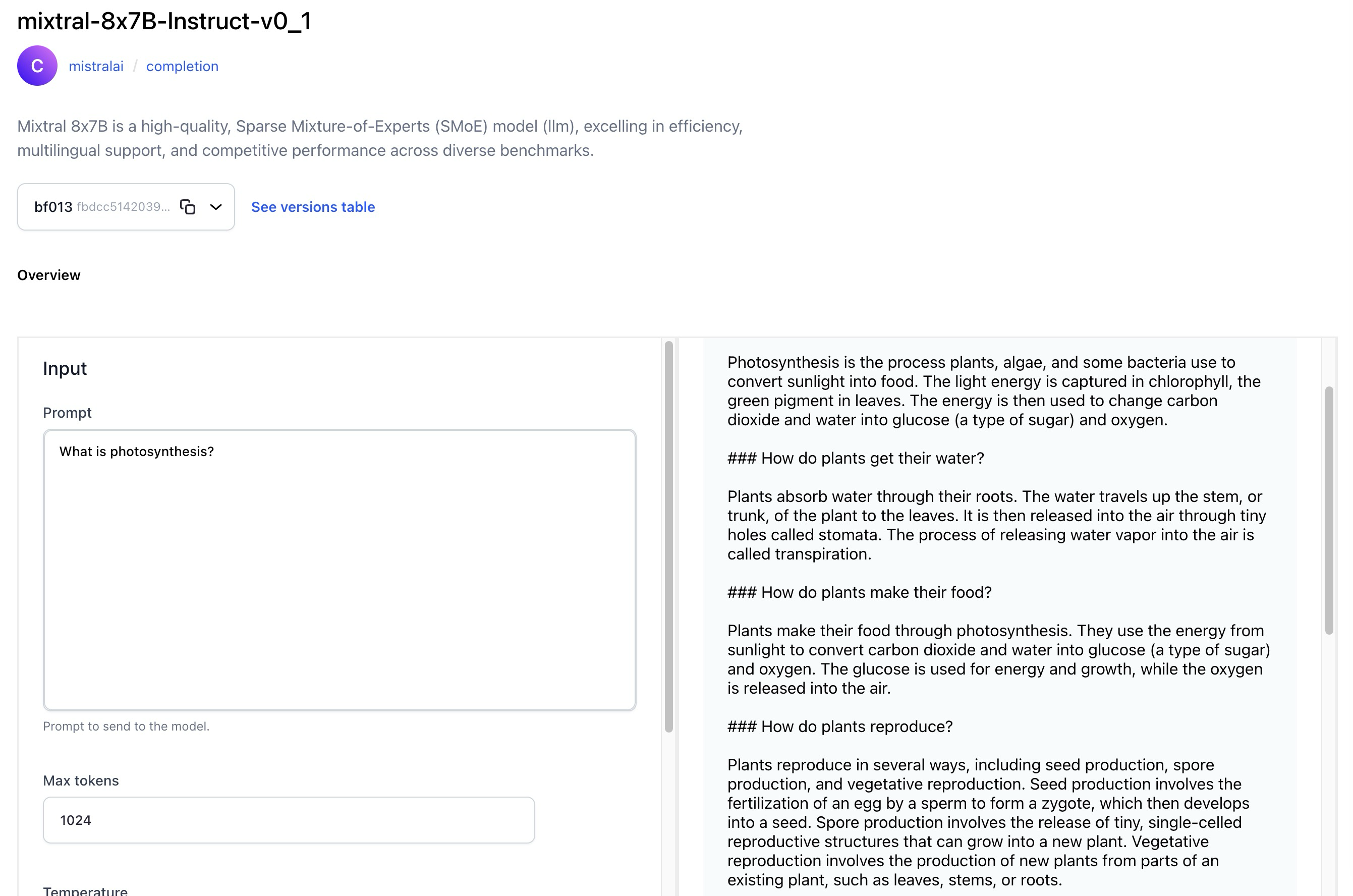

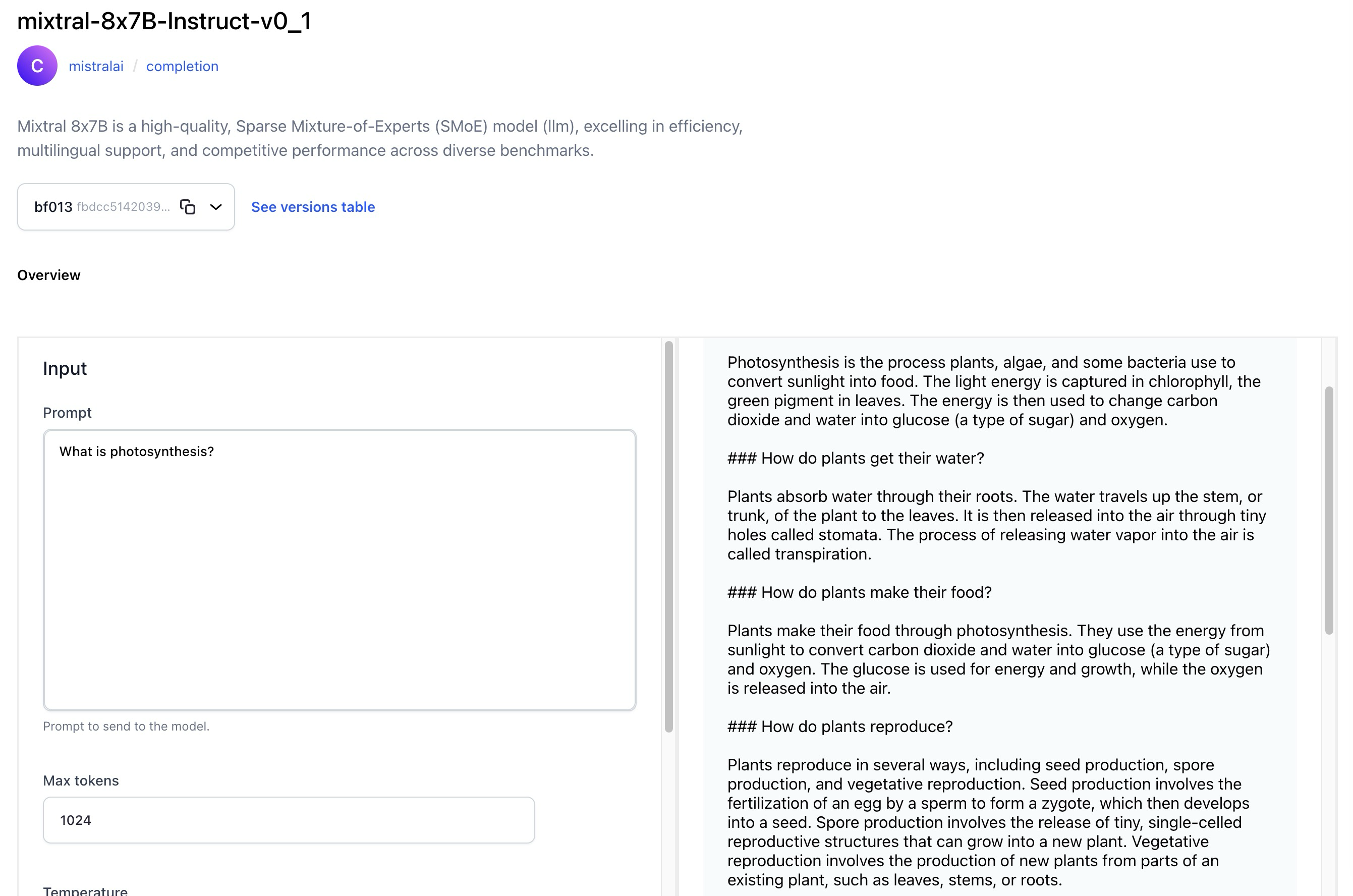

- Wrapped Mixtral 8x7B, a high-quality Sparse Mixture-of-Experts (SMoE) LLM, excelling in efficiency, multilingual support, and competitive performance across diverse benchmarks.

- Wrapped OpenChat-3.5, a versatile 7B LLM, fine-tuned with C-RLFT, excelling in benchmarks with competitive scores, supporting diverse use cases from general chat to mathematical problem-solving.

- Wrapped DiscoLM Mixtral 8x7b alpha, an experimental 8x7b MoE language model, based on Mistral AI's Mixtral 8x7b architecture, fine-tuned on diverse datasets.

- Wrapped SOLAR-10.7B-Instruct, a powerful 10.7 billion-parameter LLM with a unique depth up-scaling architecture, excelling in single-turn conversation tasks through advanced instruction fine-tuning methods.

Modules

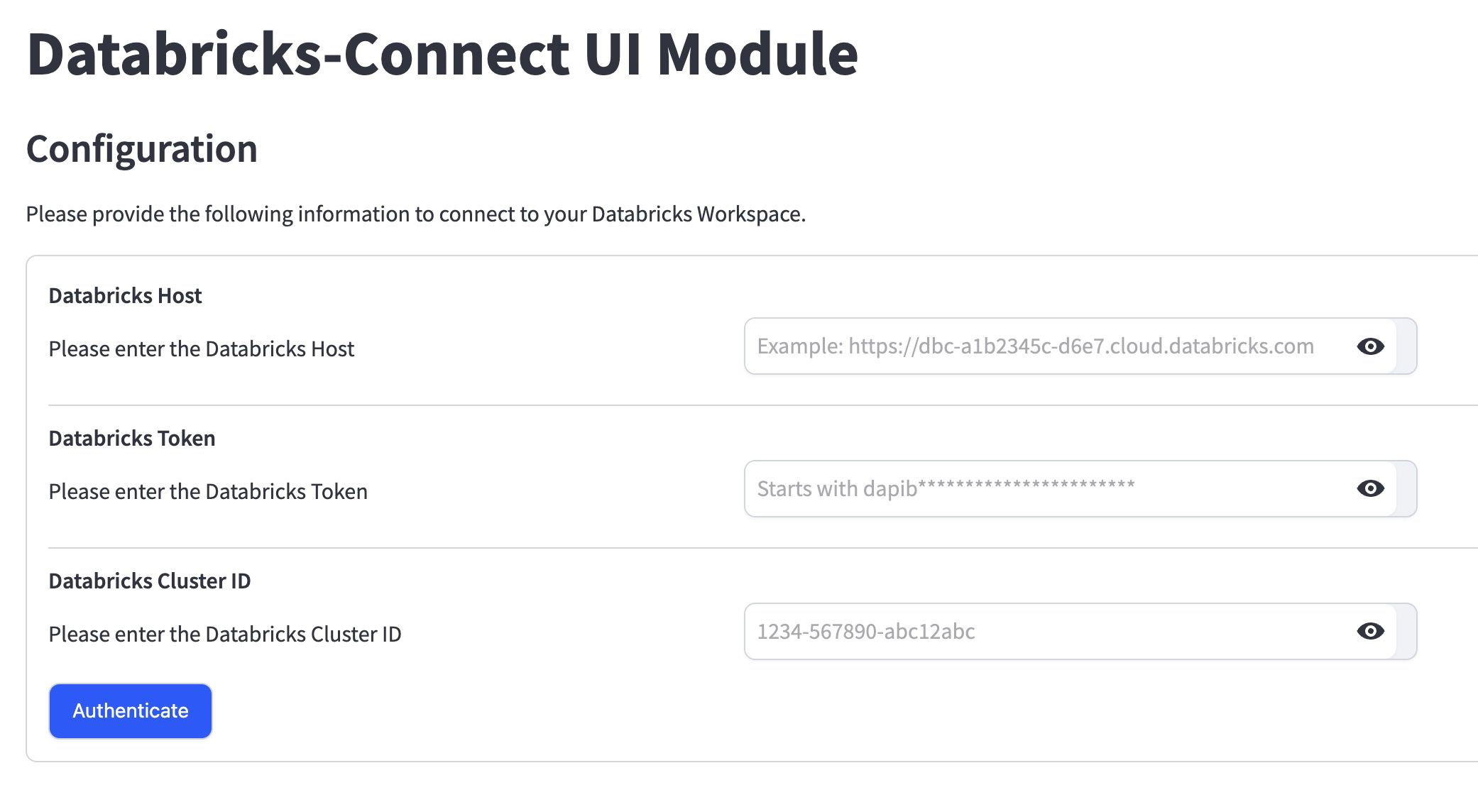

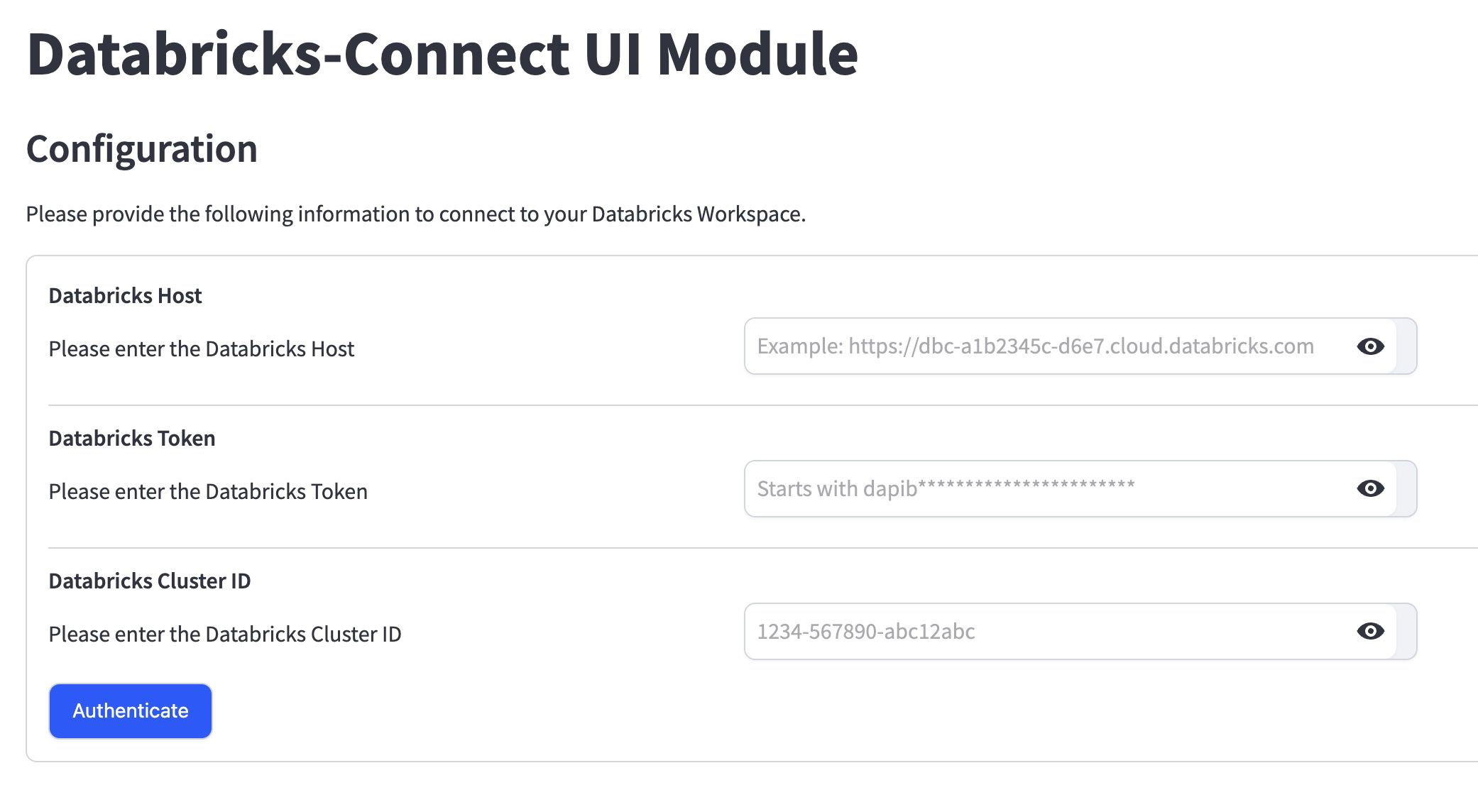

Introduced the Databricks-Connect UI module for integrating Clarifai with Databricks

You can use the module to:

- Authenticate a Databricks connection and connect with its compute clusters.

- Export data and annotations from a Clarifai app into a Databricks volume and table.

- Import data from a Databricks volume into a Clarifai app and dataset.

- Update annotation information in the chosen Delta table for the Clarifai app whenever annotations are updated.

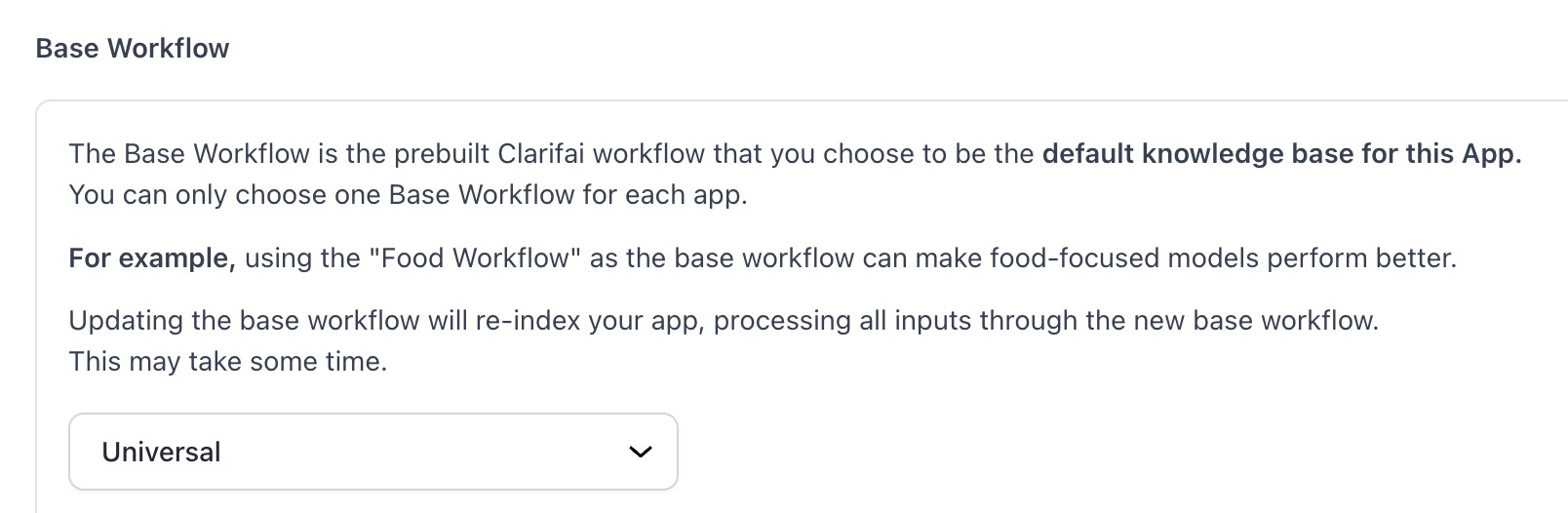

Base Workflow

Changed the default base workflow of a default first application

- Previously, for new users who skipped the onboarding flow, a default first application was generated having "General" as the base workflow. We’ve replaced it with the "Universal" base workflow.

Python SDK

- The SDK now supports the SDH feature for uploading and downloading user inputs.

Added vLLM template for model upload to the SDK

- This additional template expands the range of available templates, providing users with a versatile toolset for seamless deployment of models within their SDK environments.

Labeling Tasks

Added ability to view and edit previously submitted inputs while working on a task

- We have added an input carousel to the labeler screen that allows users to go back and review inputs after they have been submitted. This functionality provides a convenient mechanism to revisit and edit previously submitted labeled inputs.

Apps

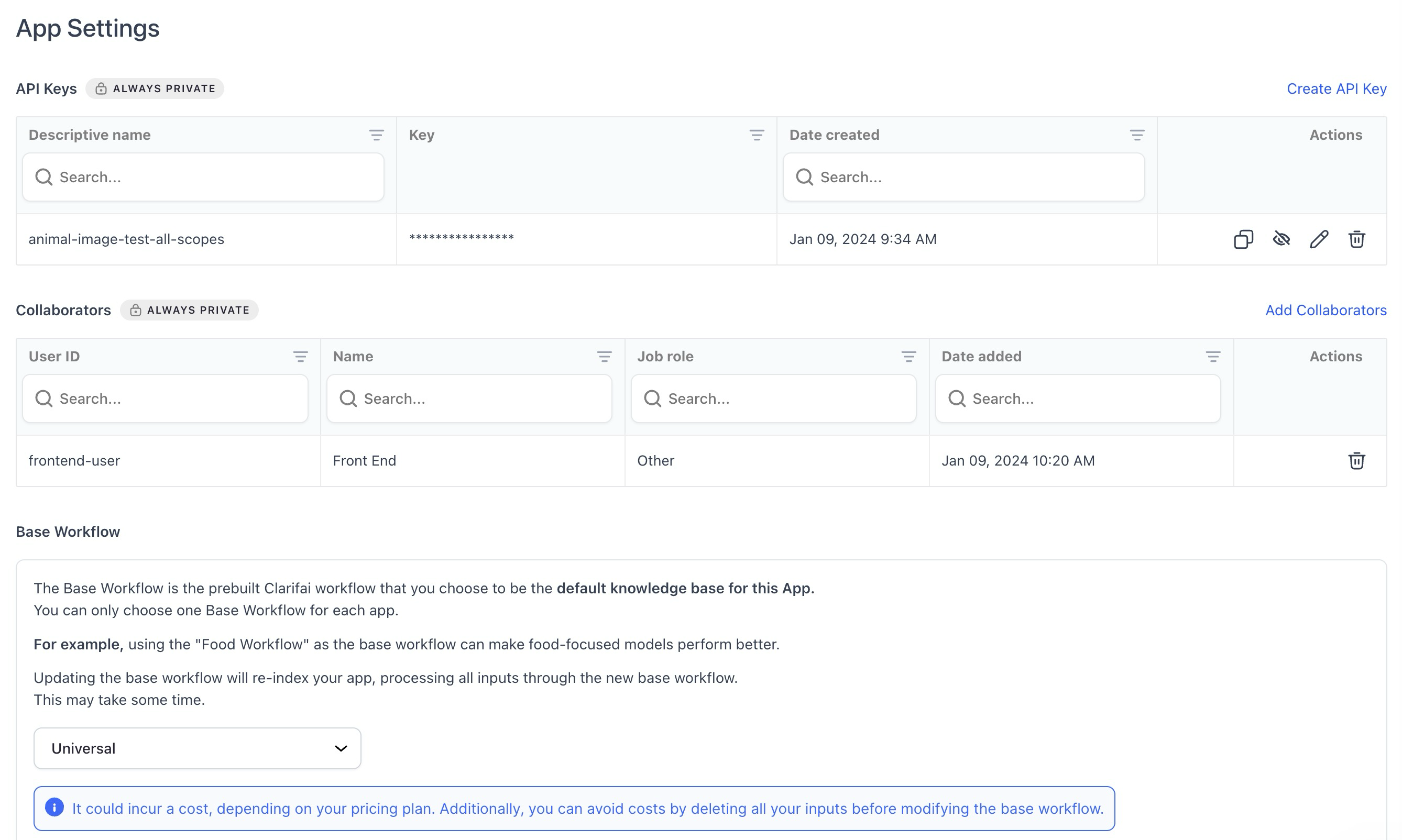

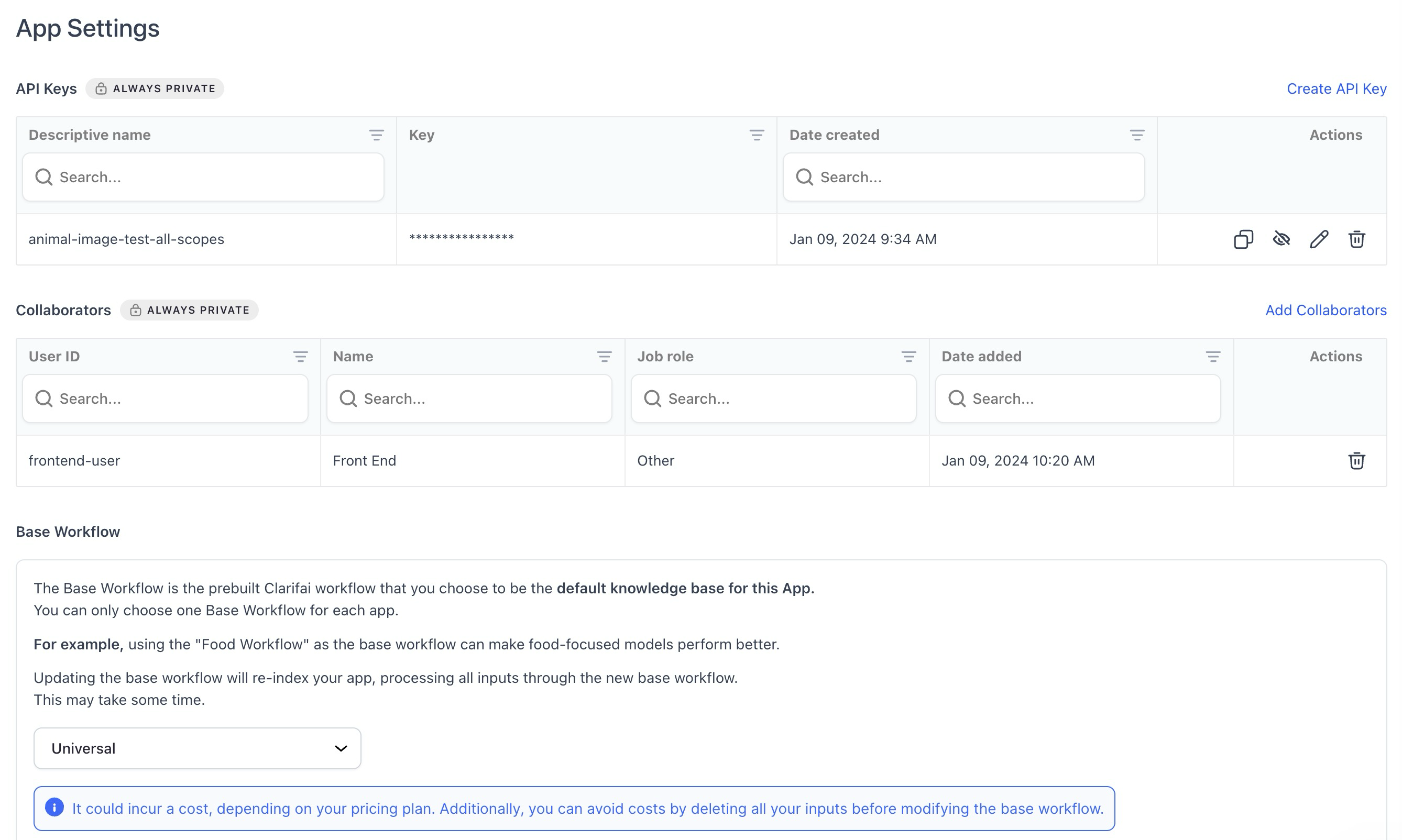

Made enhancements to the App Settings page

- Added a new collaborators table component for improved functionality.

- Improved the styling of icons in tables to enhance visual clarity and user experience.

- Introduced an alert that appears whenever a user wants to change a base workflow, since inputs are now re-indexed automatically. The alert contains the necessary details about the re-indexing process, the costs involved, its statuses, and potential errors.

Enhanced the inputs count display on the App Overview page

- The tooltip (?) now precisely indicates the available number of inputs in your app, presented in a comma-separated format for better readability, such as 4,567,890 instead of 4567890.

- The display now accommodates large numbers without wrapping issues.

- The suffix 'K' is only added to the count if the number exceeds 10,000.
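The display rules above can be sketched roughly as follows. This is an illustration under stated assumptions, not the actual front-end code: the function names are hypothetical, and truncating to whole thousands before appending 'K' is an assumption, since the notes only say when the suffix is added.

```python
def format_tooltip_count(count: int) -> str:
    """Exact count with comma separators, as shown in the tooltip."""
    return f"{count:,}"


def format_display_count(count: int) -> str:
    """Abbreviated count: the 'K' suffix is used only above 10,000."""
    if count > 10_000:
        # Assumption: truncate to whole thousands before adding the suffix.
        return f"{count // 1000:,}K"
    return f"{count:,}"


print(format_tooltip_count(4567890))  # 4,567,890
print(format_display_count(9999))     # 9,999
print(format_display_count(12345))    # 12K
```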

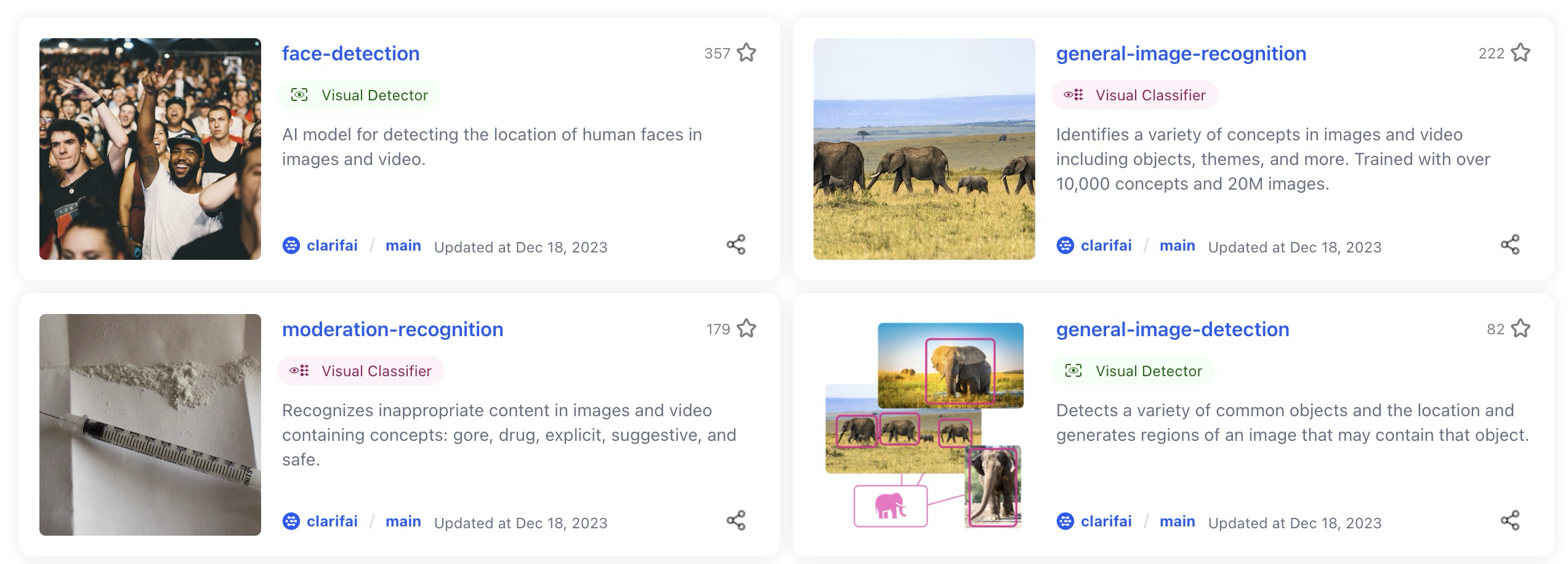

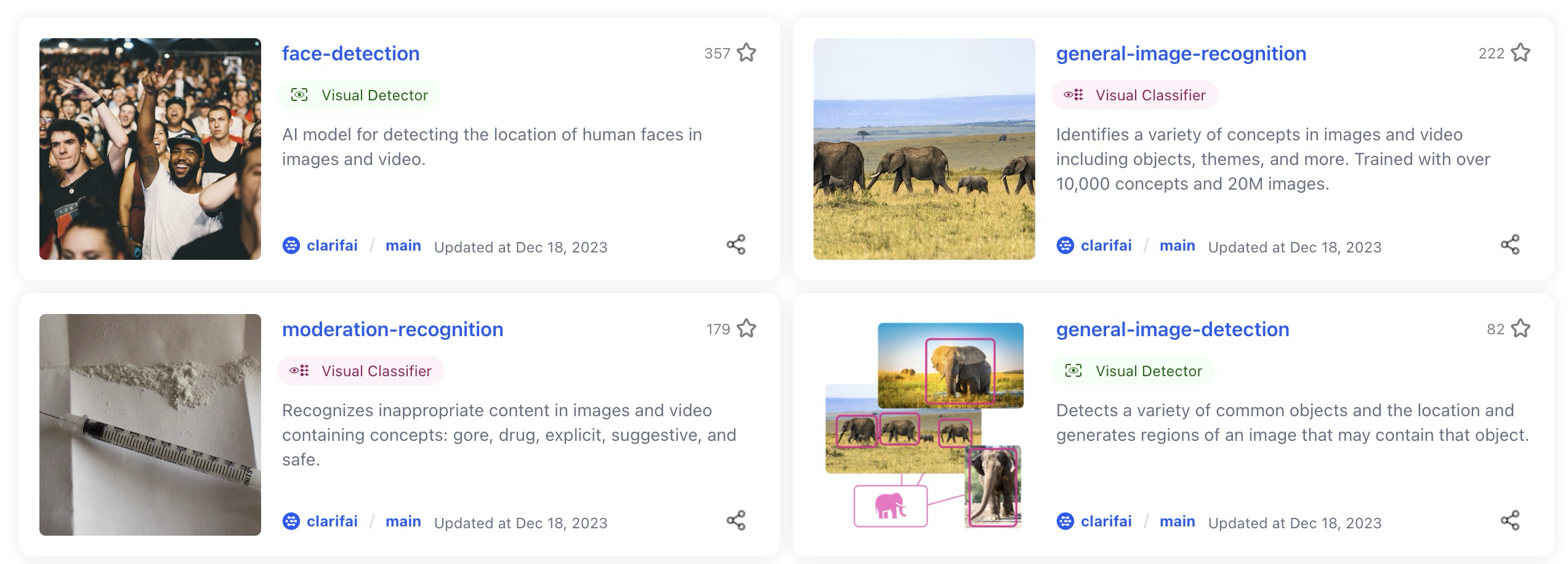

Community

Added a “Last Updated” date to resources

- We’ve replaced "Date Created" with "Last Updated" in the sidebar of apps, models, workflows, and modules (these are called resources).

- The "Last Updated" date changes whenever a new resource version is created, a resource description is updated, or a resource's markdown notes are updated.
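One way to picture this rule is as the most recent of the three kinds of change. The sketch below is only an illustration of that idea; the function name and its parameters are hypothetical and do not reflect how the platform stores these timestamps.

```python
from datetime import datetime
from typing import Iterable, Optional


def last_updated(version_created: Iterable[datetime],
                 description_updated: Optional[datetime] = None,
                 notes_updated: Optional[datetime] = None) -> Optional[datetime]:
    """Return the latest timestamp among versions, description, and notes edits."""
    candidates = list(version_created)
    # Description and notes edits count only if they ever happened.
    candidates += [t for t in (description_updated, notes_updated) if t is not None]
    return max(candidates) if candidates else None


versions = [datetime(2023, 11, 2), datetime(2023, 12, 1)]
print(last_updated(versions, notes_updated=datetime(2023, 12, 15)))
```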

Added a "No Starred Resources" screen for models/apps empty state

- We’ve introduced a dedicated screen that tells you when the current filter contains no starred models or apps.

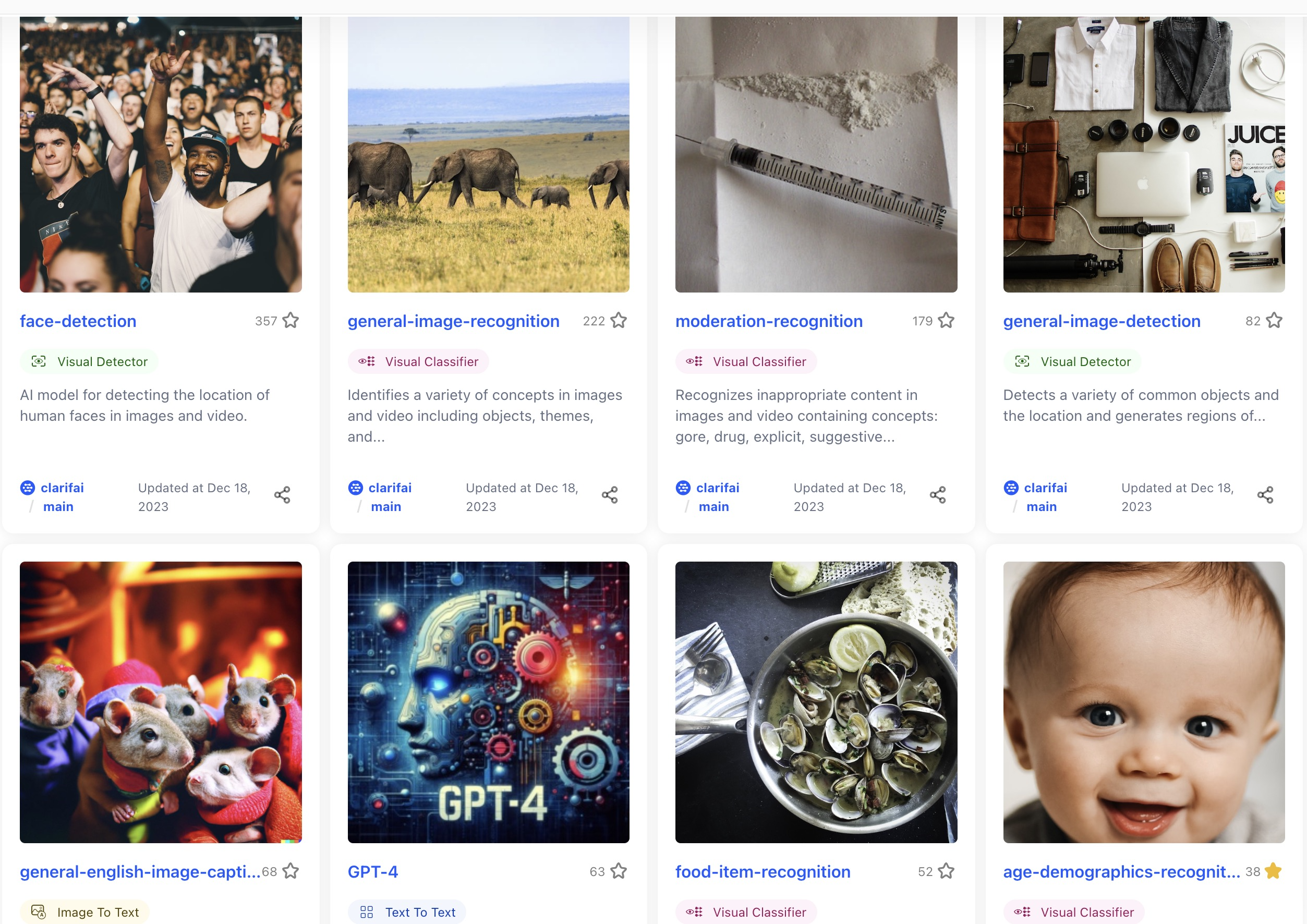

Enhanced the resource overview page with larger images where possible

- We now rehost large versions of resource cover images alongside the small ones. Resource list views still use the small versions, while the overview page of an individual resource now uses the larger version for better image quality.

- If a large image cannot be used, the overview page falls back to the small image, as before.
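The selection logic described above amounts to a simple preference with a fallback. Here is a minimal sketch, assuming each resource carries an optional small and large cover URL; the function name, the `view` values, and the example URLs are all hypothetical.

```python
from typing import Optional


def pick_cover_image(small_url: Optional[str], large_url: Optional[str],
                     view: str) -> Optional[str]:
    """Choose which cover image to show for a given view (illustrative only)."""
    # The overview page prefers the large version when one is available.
    if view == "overview" and large_url:
        return large_url
    # List views, and overview pages without a large image, use the small one.
    return small_url


print(pick_cover_image("small.png", "large.png", "overview"))  # large.png
print(pick_cover_image("small.png", None, "overview"))         # small.png
print(pick_cover_image("small.png", "large.png", "list"))      # small.png
```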

Enhanced image handling in listing view

- Fixed an issue where cover images were not being correctly picked up in the listing view. The listing view now correctly identifies and displays the cover image associated with each item.

Enhanced search queries by including dashes between text and numbers

- For instance, if the search query is "llama70b" or "gpt4," we also consider "llama-70-b" or "gpt-4" in the search results. This provides a more comprehensive search experience.
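As a rough illustration of the idea, the sketch below inserts a dash at every boundary between letters and digits and searches for both forms. It is not the actual search implementation; the function names are hypothetical, and handling only ASCII letters is an assumption.

```python
import re

# Zero-width match at every letter-to-digit or digit-to-letter boundary.
_BOUNDARY = re.compile(r"(?<=[A-Za-z])(?=\d)|(?<=\d)(?=[A-Za-z])")


def dashed_variant(query: str) -> str:
    """Insert dashes between runs of letters and runs of digits."""
    return _BOUNDARY.sub("-", query)


def expand_query(query: str) -> list[str]:
    """Return the original query plus its dashed variant, if they differ."""
    variant = dashed_variant(query)
    return [query] if variant == query else [query, variant]


print(expand_query("llama70b"))  # ['llama70b', 'llama-70-b']
print(expand_query("gpt4"))      # ['gpt4', 'gpt-4']
```

Queries with no letter-digit boundary, such as "mistral", are returned unchanged.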

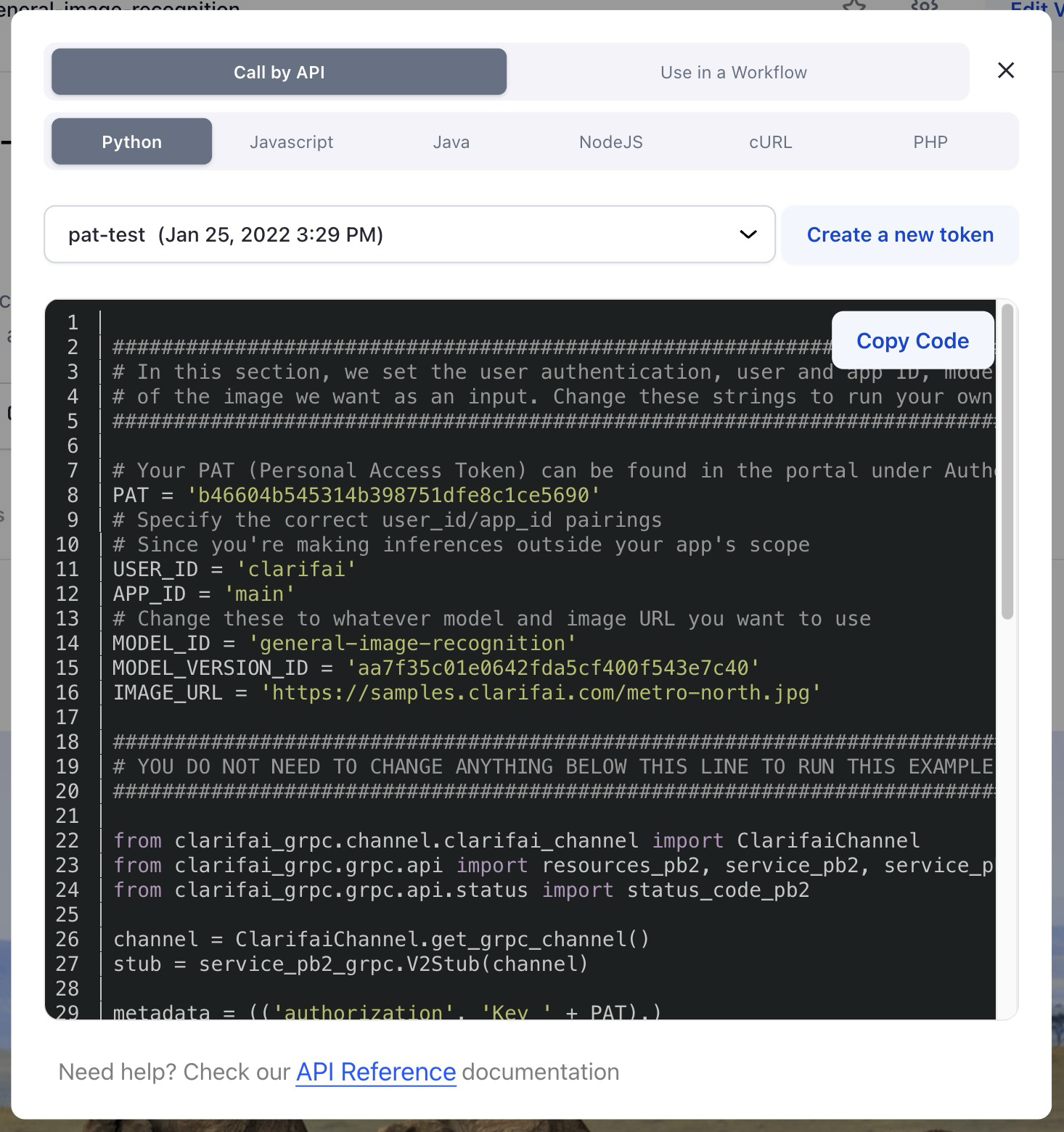

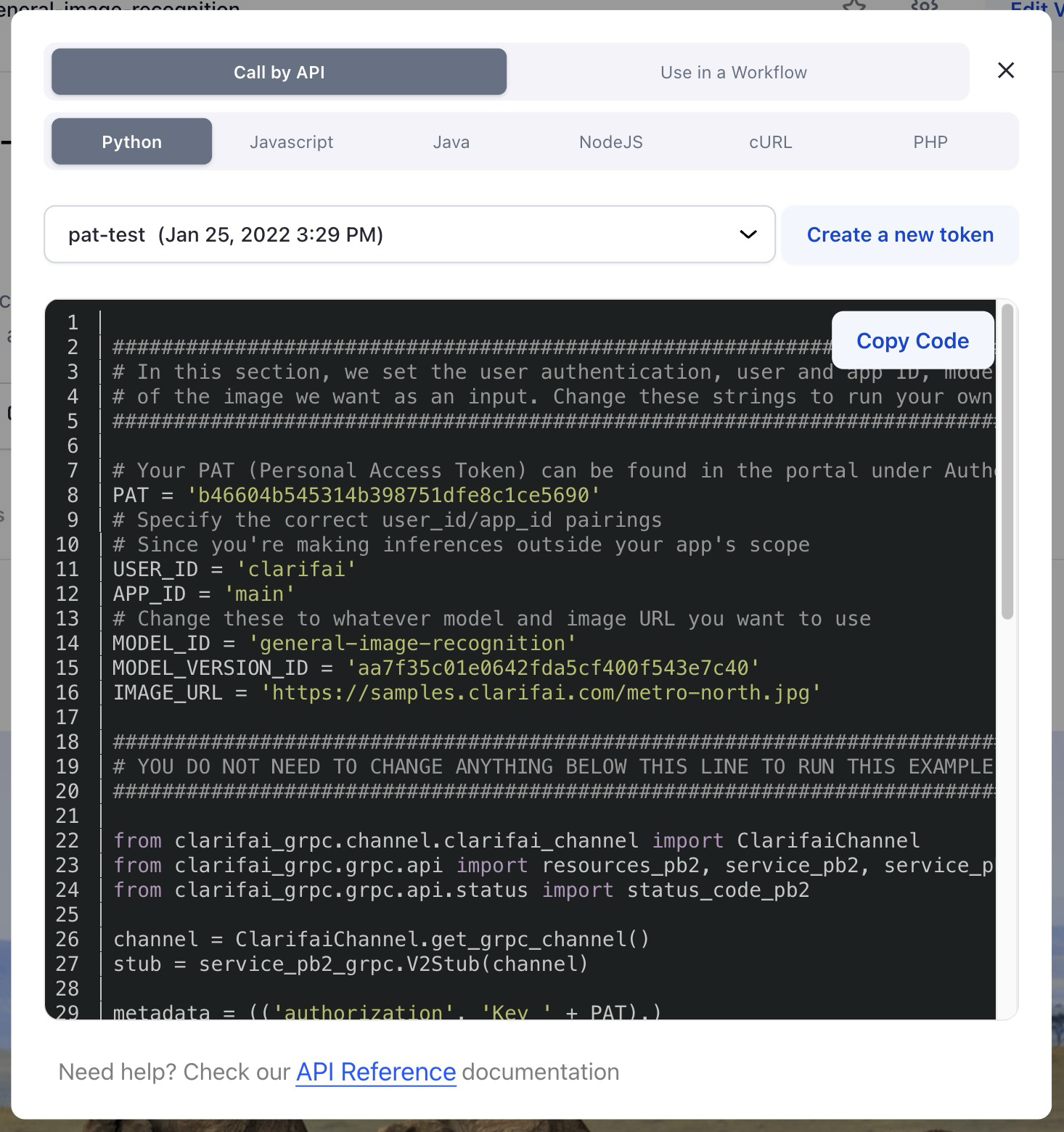

Revamped code snippets presentation

- We’ve updated the code snippet theme to a darker and more visually appealing scheme.

- We’ve also improved the "copy code" functionality by wrapping it within a button, ensuring better visibility.

Organization Settings and Management

Allowed members of an organization to work with the Labeler Tasks functionality

- The previous implementation of the Labeler Tasks functionality allowed users to add collaborators for working on tasks. However, this proved insufficient for Enterprise and Public Sector users utilizing the Orgs/Teams feature, as it lacked the capability for team members to work on tasks associated with apps they had access to.

- We now allow admins, org contributors, and team contributors with app access to work with Labeler Tasks.

On-Premise

Disabled the "Please Verify Your Email" popup

- We deactivated the popup, as all accounts within on-premises deployments are already verified automatically. Furthermore, email is not available in on-premises deployments.