Named Entity Recognition (NER) is a task in natural language processing (NLP) that involves identifying named entities in text and classifying them into predefined categories such as people, organizations, locations, and dates. While Large Language Models (LLMs) have gained significant attention and achieved impressive results in various NLP tasks, including few-shot NER, it's important to acknowledge that simpler solutions have been available for some time. These solutions usually require less compute to deploy and run, and thus lower the cost of adoption (read: cheaper! Also see this MLOps Community Talk by Meryem Arik). In this blog post, we will explore the problem of few-shot NER and the current state-of-the-art (SOTA) methods for solving it.

A named entity is anything that can be referred to with a proper name, such as a person (Steven Spielberg) or a city (Toronto). A textbook definition of NER is the task of locating and classifying named entities within larger spans of text. The most common types are:

Image above taken from What is named entity recognition (NER) and how can I use it? by Christopher Marshall

NER is widely adopted in industry applications and is often used as a first step in a lot of NLP tasks. For example:

There are a few existing approaches to NER.

While there is a plethora of pre-trained NER models that are readily-available in the open source community, these models only cover the basic entity types, which often do not cover all possible use cases. In these scenarios, the list of entity types needs to be changed or extended to include use-case-specific entity types like commercial product names or chemical compounds. Typically there will only be a few examples of what the desired output should look like, and not an abundance of data to train models on. Few-Shot NER approaches aim to solve this challenge.

Training examples are needed for the system to learn new entity types. But typical deep learning methods require a lot of training examples to do their task well. Training a deep neural network with only a few examples would lead to overfitting: the model would simply memorize the few examples it has seen and always give the correct answers on those, but would not achieve the end goal of generalizing well to examples it has not encountered before.

To overcome this challenge one can use a pre-trained model that has been trained to do NER on a lot of general-domain data labeled with generic entity types, and use the few available domain-specific examples to adapt the base model to the text domain and entity types of interest. Below we describe a few methods which have been used to introduce the new knowledge into the model while avoiding excessive overfitting.

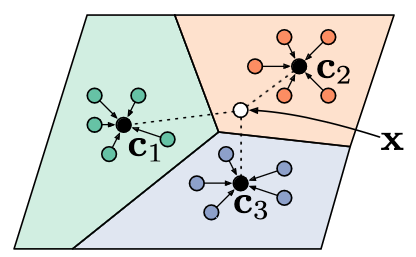

Each data point (in our case a token) is internally represented within a deep learning model as a vector. Prototype-based methods were first introduced in a paper by Jake Snell et al. and investigated for few-shot NER by Jiaxin Huang et al. When a support set (the few available in-domain examples that can be used to adapt the model) is obtained, the pre-existing generic model is first used to calculate a representation vector for each token. It is expected that the more similar the meaning of a pair of words, the smaller the distance between their representations will be.

After obtaining the vectors, they are grouped by entity type (e.g. all Person tokens), and the average of all representations within a group is calculated. The resulting vector is called a prototype, representing the entity type as a whole.

Prototypical networks in the few-shot scenario. Prototypes are computed as the mean of support example vectors from each class (Snell et al., 2017)

When new data comes in that the system needs to predict on, it can calculate token representations for the new sentence in the same way. In the simplest case, for each of the tokens it predicts the class of the prototype which is the closest to the vector of the token in question. When there are only a few data points for training, the simplicity of this method helps to avoid overfitting to them.
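To make the two steps concrete, here is a minimal sketch of prototype construction and nearest-prototype prediction. It assumes the token vectors have already been produced by some pre-trained encoder; the 2-D vectors, tag names, and function names below are toy values made up for illustration, not taken from the papers.

```python
from collections import defaultdict
import math

def build_prototypes(support_vecs, support_tags):
    """Average the support token vectors of each tag into one prototype."""
    grouped = defaultdict(list)
    for vec, tag in zip(support_vecs, support_tags):
        grouped[tag].append(vec)
    return {
        tag: [sum(dim) / len(vecs) for dim in zip(*vecs)]
        for tag, vecs in grouped.items()
    }

def predict_tags(query_vecs, prototypes):
    """Give each query token the tag of its nearest prototype (Euclidean distance)."""
    return [
        min(prototypes, key=lambda tag: math.dist(vec, prototypes[tag]))
        for vec in query_vecs
    ]

# Toy 2-D vectors standing in for encoder output (assumption for illustration).
support_vecs = [[0.9, 0.1], [1.1, -0.1], [0.0, 1.0], [0.2, 0.8], [-1.0, -1.0]]
support_tags = ["PER", "PER", "LOC", "LOC", "O"]
prototypes = build_prototypes(support_vecs, support_tags)

query_vecs = [[1.0, 0.0], [0.1, 0.9], [-0.8, -1.2]]
predictions = predict_tags(query_vecs, prototypes)
print(predictions)  # ['PER', 'LOC', 'O']
```

Nothing is trained here: the only "parameters" are the class means, which is why the method is hard to overfit with a handful of examples.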

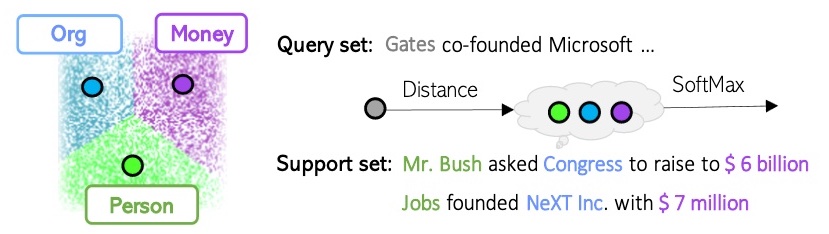

An illustration of a prototype-based method (Huang et al., 2021)

Variations of the prototype-based approach include the work of Yi Yang and Arzoo Katiyar, which uses an instance-based instead of a class-based metric. Instead of explicitly constructing an average prototype representation for each class, this algorithm will simply calculate the distance from the token in question to each of the individual tokens in the support set, and predict the tag of the most similar support token. This leads to better predictions of the O-class (the tokens which are not named entities).
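The instance-based idea can be sketched as a plain nearest-neighbour lookup over the support tokens. This is a simplified illustration under our own assumptions (toy 2-D vectors, Euclidean distance, no structured decoding over the tag sequence), not a reproduction of the published system.

```python
import math

def nearest_neighbor_tags(query_vecs, support_vecs, support_tags):
    """Give each query token the tag of the single closest support token."""
    predictions = []
    for query in query_vecs:
        nearest = min(
            range(len(support_vecs)),
            key=lambda i: math.dist(query, support_vecs[i]),
        )
        predictions.append(support_tags[nearest])
    return predictions

# Toy vectors (assumption): the two O tokens lie far apart from each other.
support_vecs = [[1.0, 1.0], [-1.0, -1.0], [2.0, 2.0]]
support_tags = ["PER", "O", "O"]

query_vecs = [[1.9, 2.1], [0.9, 1.1]]
predictions = nearest_neighbor_tags(query_vecs, support_vecs, support_tags)
print(predictions)  # ['O', 'PER']
```

In this toy data a single averaged O prototype would sit at (0.5, 0.5), which is farther from the first query token than the PER vector is, so a class-mean approach would mislabel it as PER. Comparing against individual O tokens avoids that.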

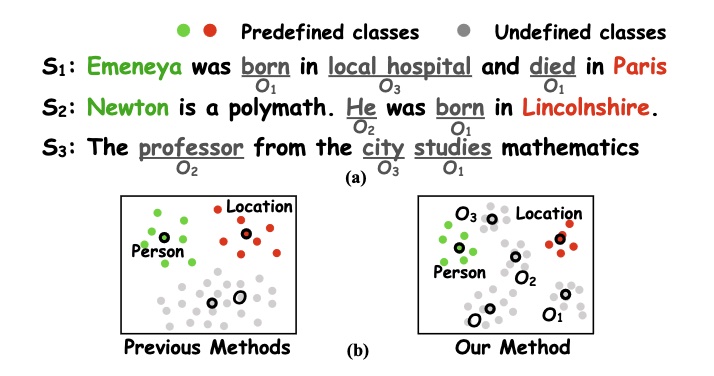

This issue is also dealt with in the paper by Meihan Tong et al., who propose to learn multiple prototypes for the different O-class words instead of a single one to better represent rich semantics.

(a): Examples for undefined classes. (b): Different ways to handle O class (single prototype vs. multiple prototypes) (Tong et al., 2021)

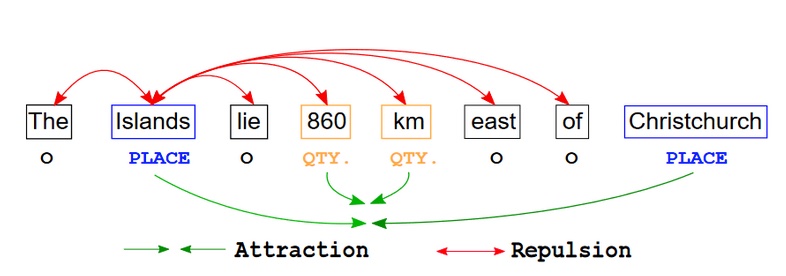

Another method often used for few-shot NER is contrastive learning. The aim of contrastive learning is to represent data within a model in such a way that similar data points have vectors that are close to each other, while dissimilar data points are further apart. For NER, this means that during the process of adapting a model to the new data, tokens of the same type are pulled together, while tokens of different types are pushed apart. The contrastive learning approach is used in the article by Sarkar Snigdha Sarathi Das et al., among several others.

Contrastive learning dynamics of a token (Islands) in an example sentence. Distance between tokens of the same category is decreased (attraction), while distance between different categories is increased (repulsion) (Das et al., 2022)
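As a rough illustration of attraction and repulsion, the sketch below computes a generic margin-based pairwise contrastive loss over token vectors. It is a simplified stand-in chosen for readability, not the specific objective used by Das et al.; the `margin` value and the toy vectors are assumptions.

```python
import math
from itertools import combinations

def contrastive_loss(vecs, tags, margin=1.0):
    """Mean pairwise loss: same-tag pairs attract, different-tag pairs repel."""
    total, n_pairs = 0.0, 0
    for i, j in combinations(range(len(vecs)), 2):
        dist = math.dist(vecs[i], vecs[j])
        if tags[i] == tags[j]:
            total += dist ** 2                      # attraction: penalize distance
        else:
            total += max(0.0, margin - dist) ** 2   # repulsion: penalize closeness
        n_pairs += 1
    return total / n_pairs

tags = ["LOC", "LOC", "PER", "PER"]
# Before adaptation: tokens of different types sit next to each other.
mixed = [[0.0, 0.0], [1.0, 1.0], [0.1, 0.0], [1.0, 0.9]]
# After adaptation: each type forms its own tight cluster.
clustered = [[0.0, 0.0], [0.1, 0.0], [2.0, 2.0], [2.0, 2.1]]

loss_mixed = contrastive_loss(mixed, tags)
loss_clustered = contrastive_loss(clustered, tags)
print(loss_clustered < loss_mixed)  # True
```

Minimizing such a loss with respect to the encoder parameters is what moves the representation from the first arrangement towards the second.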

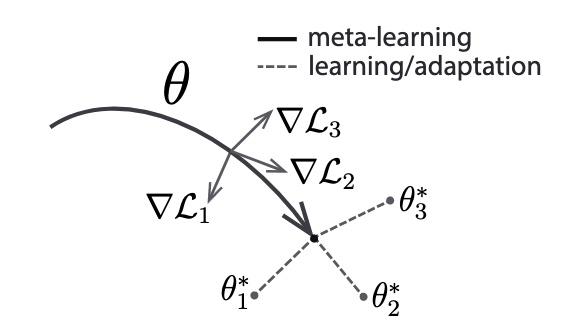

The aim of meta-learning is to learn how to learn: a model is trained on different tasks such that it would adapt quickly to a new task and a small number of new training samples would suffice. Model-agnostic meta-learning (MAML), introduced by Finn et al., also trains the models to be easily and quickly fine-tuned. It does so by aiming to find task-sensitive model parameters: a small change to the parameters can significantly improve performance on a new task.

Diagram of the model-agnostic meta-learning algorithm (MAML), which optimizes for a representation θ that can quickly adapt to new tasks (Finn et al., 2017)
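The inner and outer loops of MAML are easier to follow on a toy problem. The sketch below uses a one-parameter regression model `y = w * x` instead of an NER model, so that the gradient through the adaptation step can be written by hand. The tasks, learning rates, and function names are all assumptions made for illustration.

```python
def mean_sq(xs):
    """Mean of x squared over a list of inputs."""
    return sum(x * x for x in xs) / len(xs)

def task_grad(w, xs, slope):
    """Gradient of the mean squared error of y = w * x against y = slope * x."""
    return 2.0 * mean_sq(xs) * (w - slope)

def maml_meta_grad(w, tasks, inner_lr):
    """Average gradient of the post-adaptation query loss w.r.t. the initial w."""
    total = 0.0
    for slope, support_xs, query_xs in tasks:
        # Inner loop: one gradient step on the task's support examples.
        adapted = w - inner_lr * task_grad(w, support_xs, slope)
        # Chain rule through the inner step: d(adapted)/dw.
        d_adapted_dw = 1.0 - 2.0 * inner_lr * mean_sq(support_xs)
        # Outer gradient: query loss evaluated at the adapted parameter.
        total += task_grad(adapted, query_xs, slope) * d_adapted_dw
    return total / len(tasks)

# Two toy tasks: (true slope, support inputs, query inputs).
tasks = [(1.0, [1.0, 2.0], [1.5]), (3.0, [1.0, 2.0], [0.5, 1.0])]

w = 0.0
for _ in range(500):
    w -= 0.05 * maml_meta_grad(w, tasks, inner_lr=0.1)  # outer loop update
```

The learned `w` ends up between the two task slopes: it is not optimal for either task, but a single inner step moves it a long way towards whichever task it is adapted to. This is the sense in which MAML looks for a good starting point rather than a good final model.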

An approach by Ma et al. applies MAML to few-shot NER and uses it to find initial model parameters that can easily adapt to new named entity classes. It combines MAML with prototypical networks to achieve SOTA results on the Few-NERD dataset.

Recently, increasing focus has been put on LLMs. They are used to solve more and more tasks, and NER is not an exception. Modern LLMs are very good at learning from only a few examples, which is certainly beneficial in a few-shot setting.

However, NER is a token-level labeling task (each individual token in a sentence needs to be assigned a class), while LLMs are generative (they produce new text based on a prompt). This has led researchers to investigate how to best use the abilities of LLMs in this scenario, where explicitly querying the model with templates many times per sentence is impractical. For example, the work of Ruotian Ma et al. tries to solve this by prompting NER without templates, fine-tuning a model to predict label words representative of a class at the positions where named entities appear in the text.

Recently, GPT-NER by Shuhe Wang et al. proposed to transform the NER sequence labeling task (assigning classes to tokens) into a generation task (producing text), which should make it easier to deal with for LLMs, and, in particular, GPT models. The article suggests that in the few-shot scenario, when only a few training examples are available, GPT-NER is better than models trained using supervised learning. However, the supervised baseline does not use any techniques specific to few-shot NER such as ones mentioned above, and it is to be expected that a general-purpose model trained on only a handful of examples performs poorly. This leaves open questions for this particular comparison.
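To illustrate what turning labeling into generation can look like, suppose the LLM is asked to repeat the input sentence and wrap every entity of one type in special markers. The `@@` and `##` markers and the helper below are assumptions for this sketch; the exact prompt and output format should be taken from the GPT-NER paper itself. Token-level tags can then be recovered from the generated text:

```python
import re

# Assumed output convention: entities are wrapped as @@entity text##.
MARKED_SPAN = re.compile(r"@@(.+?)##")

def tags_from_generation(generated, entity_type):
    """Turn a marked-up generated sentence back into per-token tags."""
    tokens, tags = [], []
    pos = 0
    for match in MARKED_SPAN.finditer(generated):
        # Text before the marked span is outside any entity.
        for tok in generated[pos:match.start()].split():
            tokens.append(tok)
            tags.append("O")
        # Text inside the markers belongs to the requested entity type.
        for tok in match.group(1).split():
            tokens.append(tok)
            tags.append(entity_type)
        pos = match.end()
    # Remaining text after the last marked span.
    for tok in generated[pos:].split():
        tokens.append(tok)
        tags.append("O")
    return tokens, tags

generated = "@@Steven Spielberg## visited Toronto ."
tokens, tags = tags_from_generation(generated, "PER")
print(list(zip(tokens, tags)))
```

Because each generation covers a single entity type in this sketch, a sentence would be processed once per type and the resulting tag sequences merged afterwards.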

As in all artificial intelligence tasks, data availability is a very important consideration. High-quality training data is needed to create the models, but no less crucially, publicly available high-quality test data is required to compare the quality of different models and to evaluate the progress in a controllable, reproducible way.

Performance on few-shot NER used to be evaluated on subsets of popular NER datasets, e.g. by sampling 5 sentences from each entity type in CoNLL 2003 as training examples. A comprehensive evaluation can be found in a study by Huang et al.
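A sampling procedure of this kind can be sketched in a few lines. The data format (a list of `(tokens, tags)` pairs with plain type names as tags) and the function name are assumptions for illustration; published evaluations differ in details such as how sentences with several entity types are counted.

```python
import random

def sample_support_set(sentences, k, seed=0):
    """Pick up to k sentences for every entity type that occurs in the data."""
    rng = random.Random(seed)
    by_type = {}
    for idx, (_, tags) in enumerate(sentences):
        for entity_type in set(tags) - {"O"}:
            by_type.setdefault(entity_type, []).append(idx)
    chosen = set()
    for entity_type in sorted(by_type):
        candidates = by_type[entity_type]
        chosen.update(rng.sample(candidates, min(k, len(candidates))))
    return [sentences[i] for i in sorted(chosen)]

sentences = [
    (["Steven", "Spielberg", "arrived"], ["PER", "PER", "O"]),
    (["Toronto", "is", "cold"], ["LOC", "O", "O"]),
    (["Spielberg", "left", "Toronto"], ["PER", "O", "LOC"]),
    (["It", "rained"], ["O", "O"]),
    (["Toronto", "hosted", "it"], ["LOC", "O", "O"]),
]
support = sample_support_set(sentences, k=2)
```

Since one sentence can mention several entity types, the support set may contain more than `k` examples of some types; the sketch only guarantees at least `min(k, available)` sentences per type.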

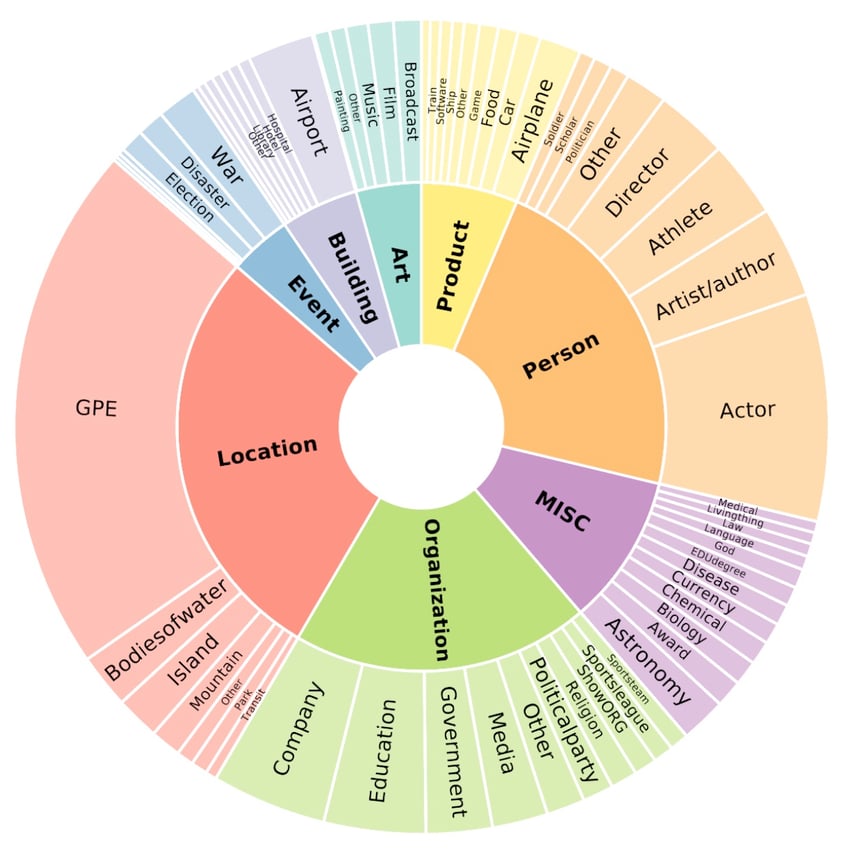

In 2021, Ding et al. introduced Few-NERD (Few-shot Named Entity Recognition Dataset), a dataset specifically designed for the task of few-shot NER. Named entities in this dataset are labeled with 8 coarse-grained entity types, which are further divided into 66 fine-grained types. Each entity type (either coarse- or fine-grained) only appears in one of the subsets: train (used for training the model), validation (used for assessing training progress), or test (treated as unseen data on which results are reported). Because models are evaluated on types they have never been trained on, Few-NERD is becoming a popular choice for assessing the generalization and knowledge transfer abilities of few-shot NER systems.

Coarse- and fine-grained entity types in the Few-NERD dataset (Ding et al., 2021)

The main challenge of few-shot NER is learning from a small amount of available in-domain data while retaining the ability to generalize to new data. Several types of approaches, which we describe in this blog post, are used in research to solve this problem. With the recent focus on LLMs, which are exceptionally strong few-shot learners, it will be exciting to see future progress on few-shot NER benchmarks.

Try out one of the NER models on the Clarifai platform today! Can’t find what you need? Consult our Docs page or send us a message in our Community Slack Channel.

© 2023 Clarifai, Inc. Terms of Service Content TakedownPrivacy Policy