This tutorial is based on this video and walks step by step through fine-tuning a text-to-text generative model for a classification task. The model we'll work with is GPT-Neo from EleutherAI.

To walk through the process, we'll use the student questions dataset, which contains approximately 120,000 test questions. To keep the tutorial manageable, we'll narrow it down to about 5,000 questions.

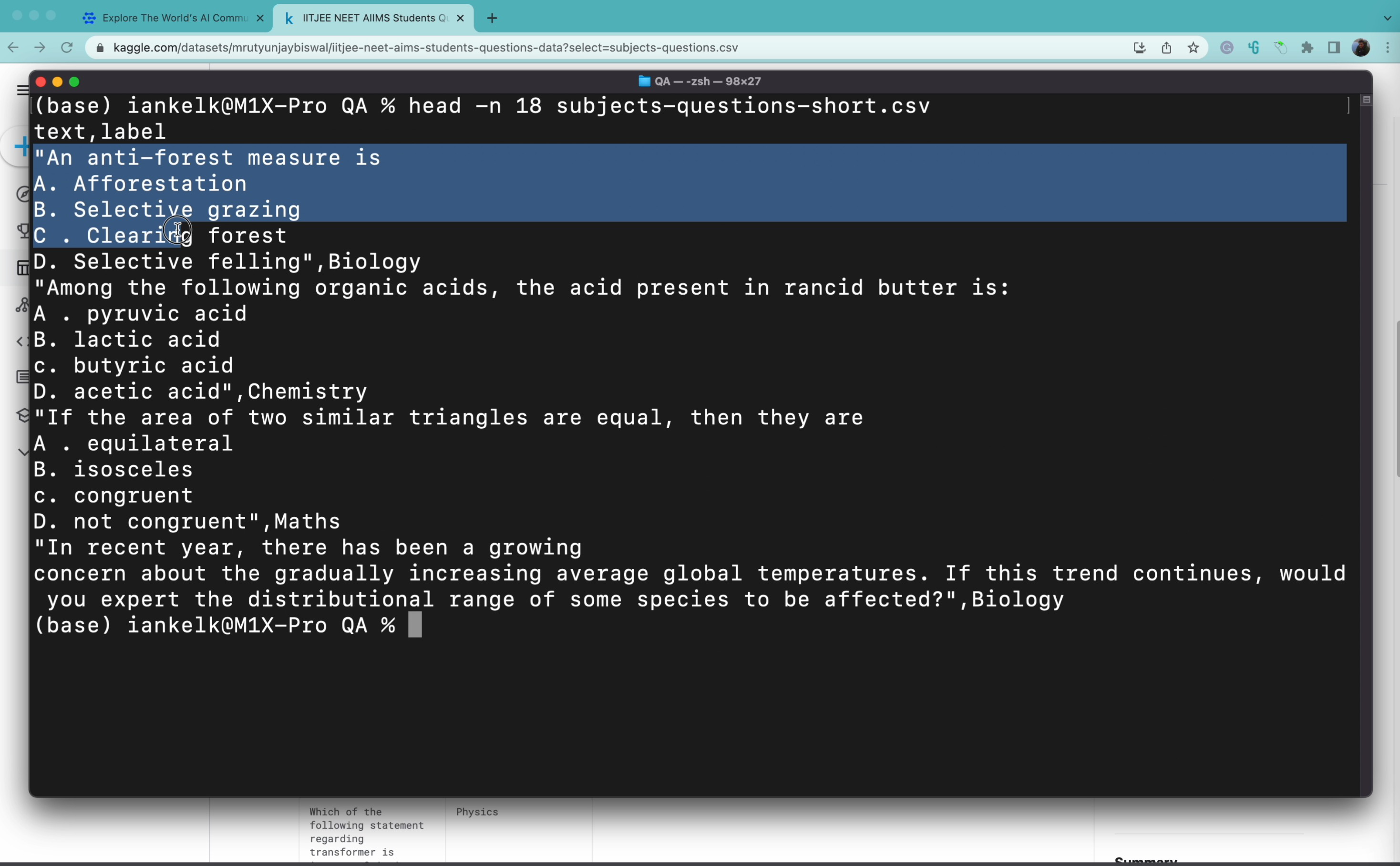

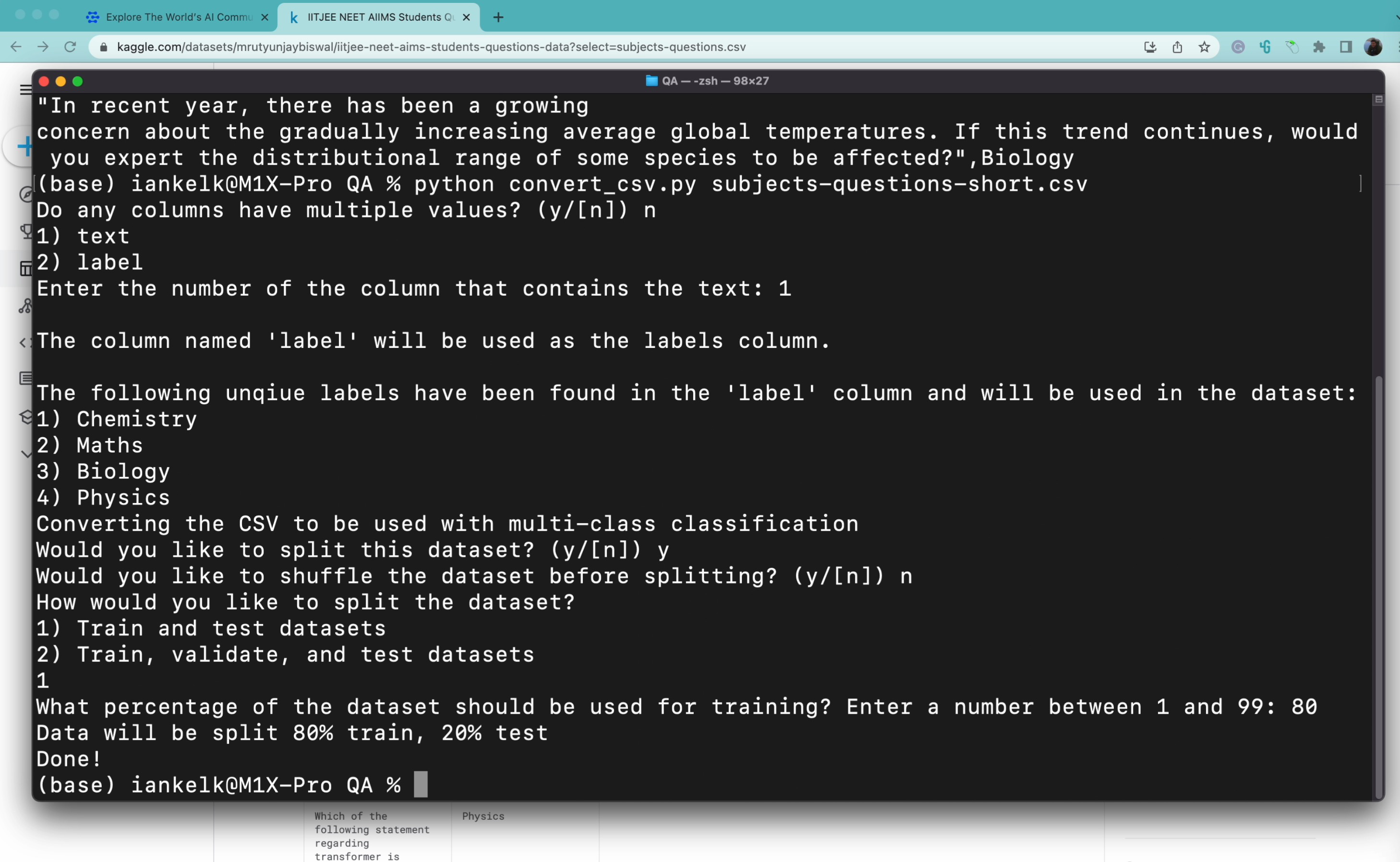

Our dataset is a standard CSV file with a 'text' column and a 'label' column. The 'text' column holds the question, which can come from subjects such as biology, chemistry, math, or physics. The 'label' column gives the subject that the question belongs to.
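Before converting anything, it can help to check how many questions each subject has. The following is a minimal sketch using only the standard library. The sample rows are made up for illustration and stand in for the real file; only the 'text' and 'label' column names come from the dataset described above.

```python
import csv
import io
from collections import Counter

# Hypothetical sample rows standing in for the real CSV file.
SAMPLE_CSV = """text,label
What is the powerhouse of the cell?,biology
How many moles are in 18 g of water?,chemistry
What is the derivative of x^2?,math
What force keeps planets in orbit?,physics
What is the integral of 2x?,math
"""


def count_labels(csv_file):
    """Count how often each value appears in the 'label' column."""
    reader = csv.DictReader(csv_file)
    return Counter(row["label"] for row in reader)


label_counts = count_labels(io.StringIO(SAMPLE_CSV))
for label, count in sorted(label_counts.items()):
    print(f"{label}: {count}")
```

To run this against a real file, pass an open file handle (for example `open(path, newline="")`) instead of the `io.StringIO` wrapper.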

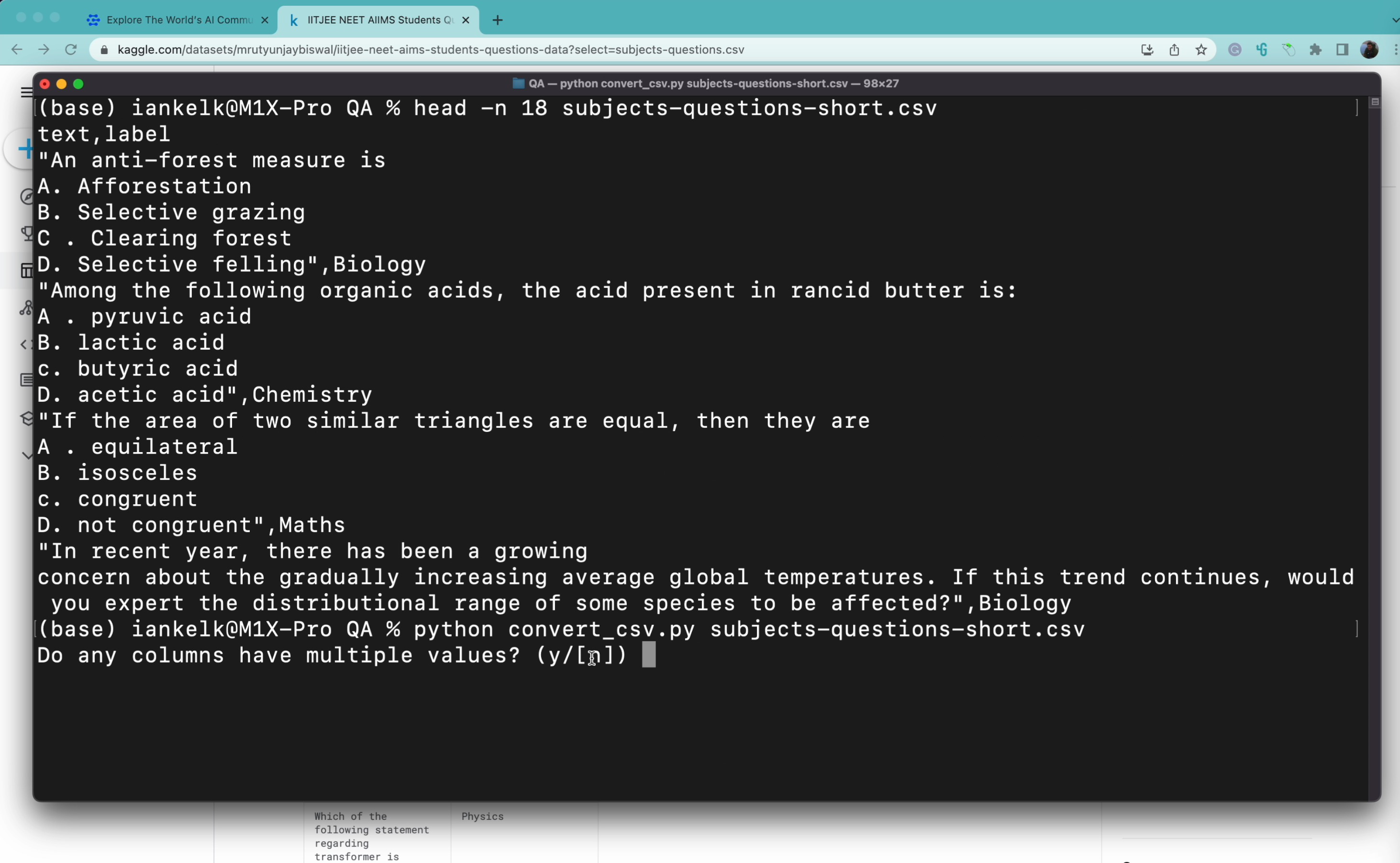

The process starts with data conversion, which we'll do using a Python script. When run, the script first asks whether any columns contain multiple values. In our case they don't, so we answer 'no'.

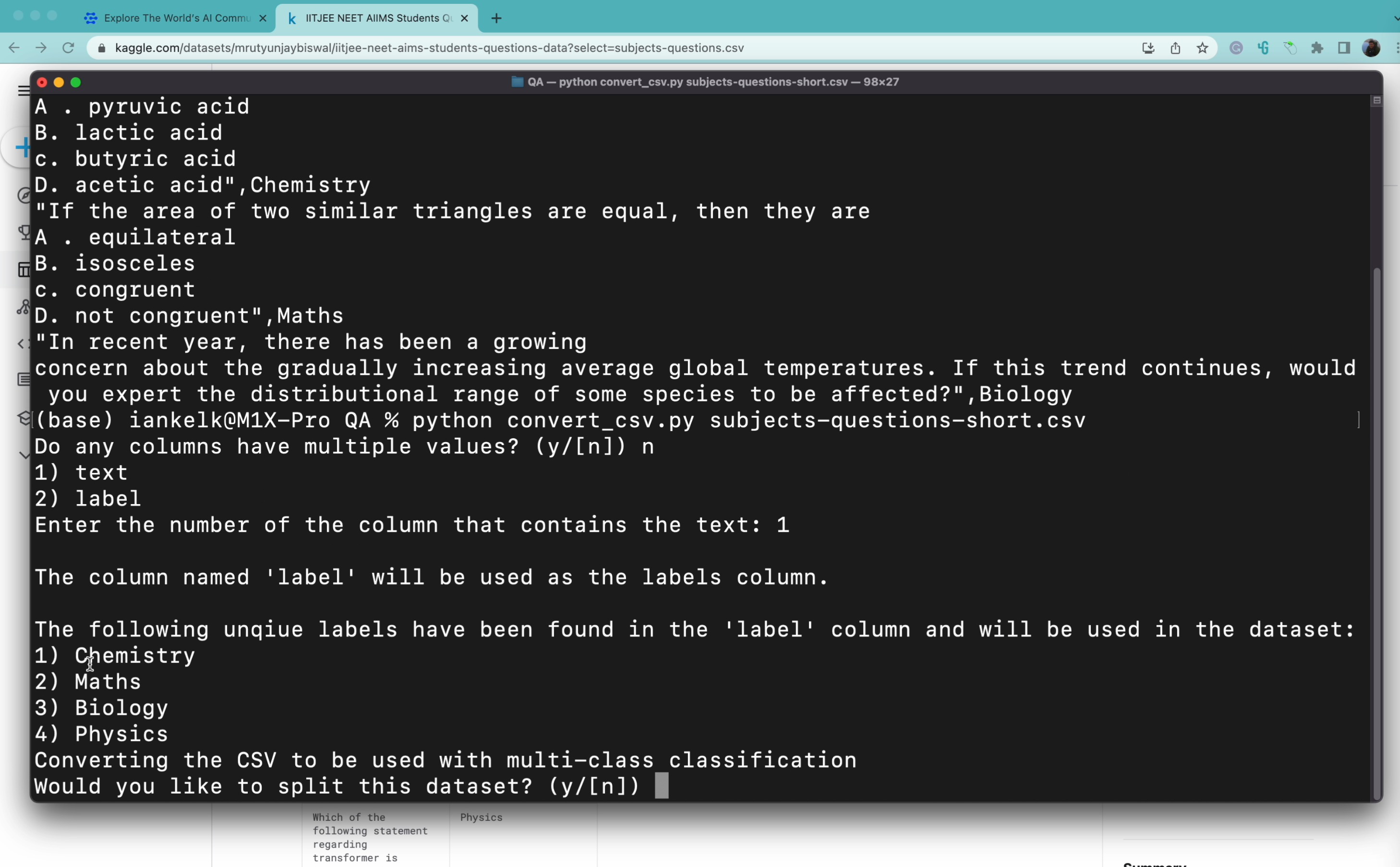

Next, it asks which column contains the text, which in this instance is the first column. The tool then detects the categories in the second column: chemistry, math, biology, and physics. Since only one column remains besides the 'text' column, it automatically concludes that this column should be used for the labels.
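The tool's own source isn't shown here, so the following is only a sketch of the kind of inference described: once the text column is known, a single remaining column is taken as the label column and its distinct values become the categories. The function name `infer_label_column` and the sample rows are hypothetical.

```python
def infer_label_column(rows, text_column):
    """Return the single non-text column and its sorted distinct values.

    `rows` is a list of dicts, one per CSV row. This mirrors the behaviour
    described in the tutorial, not the actual implementation of the tool.
    """
    other_columns = [name for name in rows[0] if name != text_column]
    if len(other_columns) != 1:
        raise ValueError(
            f"expected exactly one non-text column, found {other_columns}"
        )
    label_column = other_columns[0]
    categories = sorted({row[label_column] for row in rows})
    return label_column, categories


# Hypothetical rows for illustration.
rows = [
    {"text": "What is the powerhouse of the cell?", "label": "biology"},
    {"text": "How many moles are in 18 g of water?", "label": "chemistry"},
    {"text": "What is the derivative of x^2?", "label": "math"},
    {"text": "What force keeps planets in orbit?", "label": "physics"},
]

label_column, categories = infer_label_column(rows, text_column="text")
print(label_column, categories)
```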

The tool then asks whether we'd like to split our dataset into training and testing sets. We answer 'yes', because we want to measure how well our model handles data it has never seen before. There's no need to shuffle the data for this project, so we answer 'no' when asked. We split the dataset into training and testing sets only, with no validation set.

We'll dedicate 80% of our dataset to training and reserve the remaining 20% for testing. Our data is now arranged into two files: a training set and a testing set.
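An unshuffled 80/20 split amounts to cutting the list of rows at the 80% mark. Here is a minimal sketch under that assumption. The function name `split_train_test` and the placeholder rows are hypothetical, and the actual tool may differ in how it handles rounding or writes the output files.

```python
def split_train_test(rows, train_fraction=0.8):
    """Split rows in their original order: first part train, rest test."""
    if not 0 < train_fraction < 1:
        raise ValueError("train_fraction must be between 0 and 1")
    cut = int(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


# Hypothetical placeholder rows, sized like the ~5,000-question subset.
rows = [{"text": f"question {i}", "label": "math"} for i in range(5000)]

train_rows, test_rows = split_train_test(rows)
print(len(train_rows), len(test_rows))  # 4000 1000
```

Because nothing is shuffled, the test set is simply the last 20% of the file. If the file happened to be sorted by label, it would be worth checking that every subject still appears in both parts.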

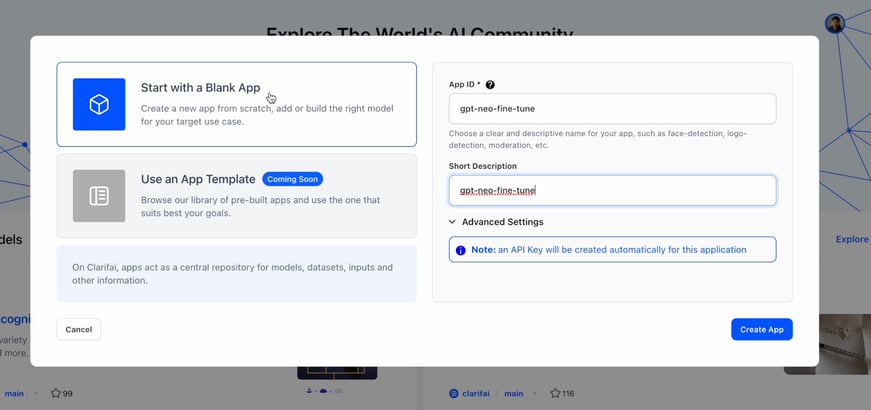

With our dataset prepared, we can move on to the fine-tuning process. We do this by navigating to the 'models' tab of Clarifai Community and adding a new application.

We can cancel out of the model creation screen, since we haven't yet added our training data.

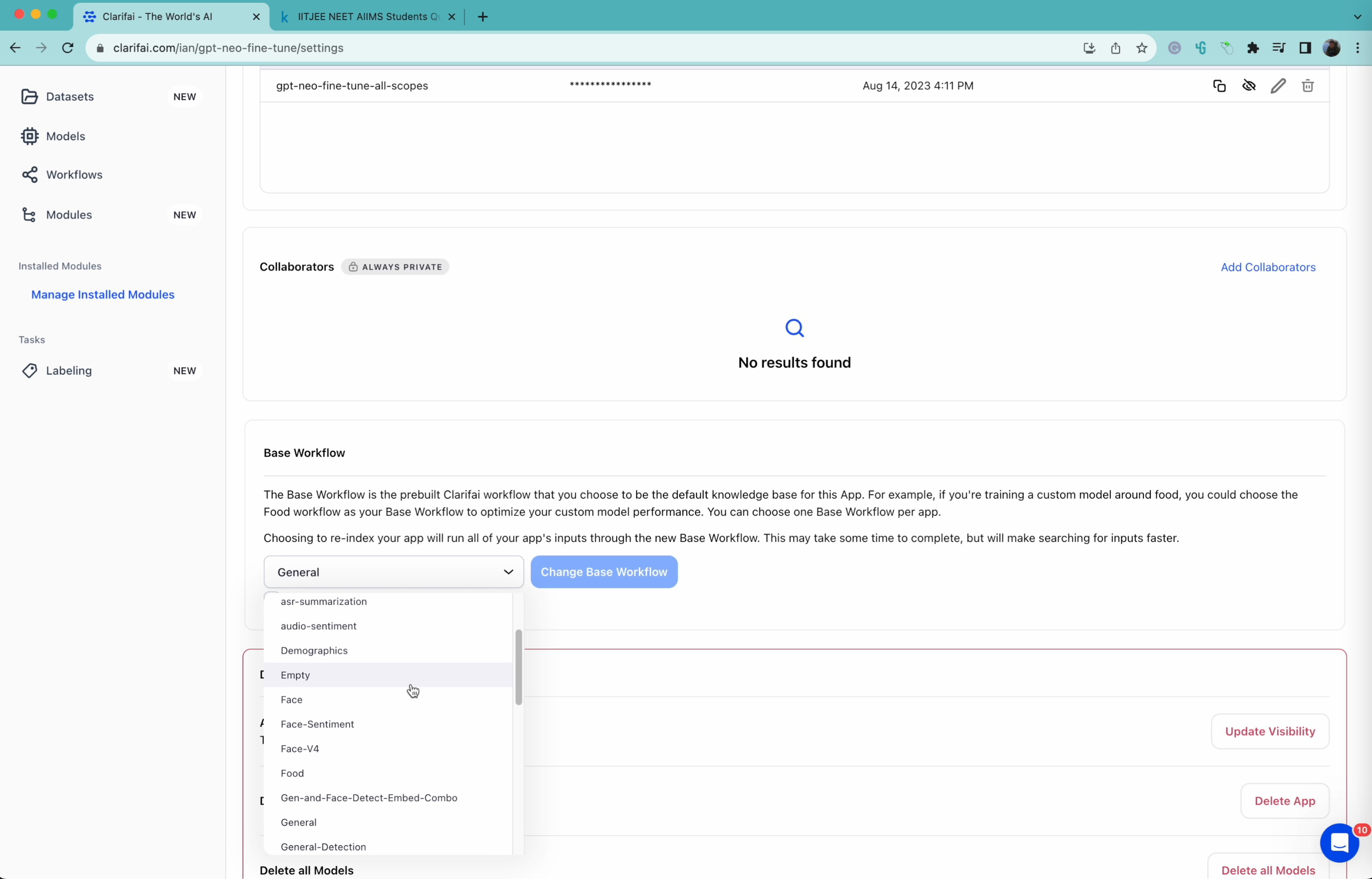

Navigating to the "Settings" of the app, we change the "Base Workflow" of the app to "Empty". This is because the default "General" workflow is for images, and won't accept text uploads.

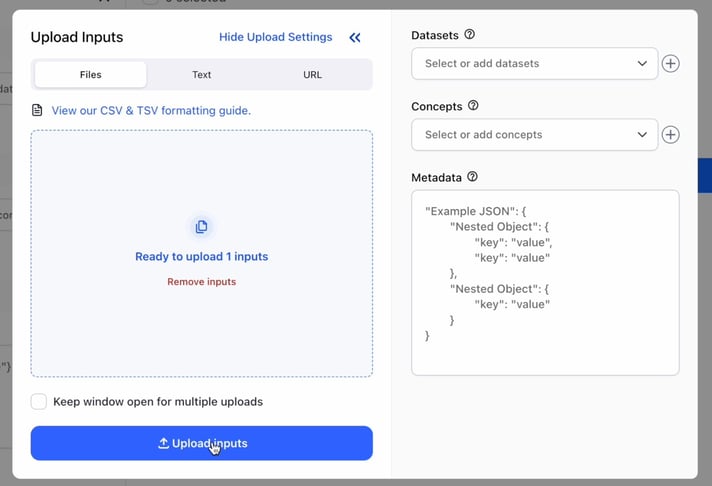

We then choose "Inputs" from the left sidebar, and upload our training CSV that we created in the preprocessing step.

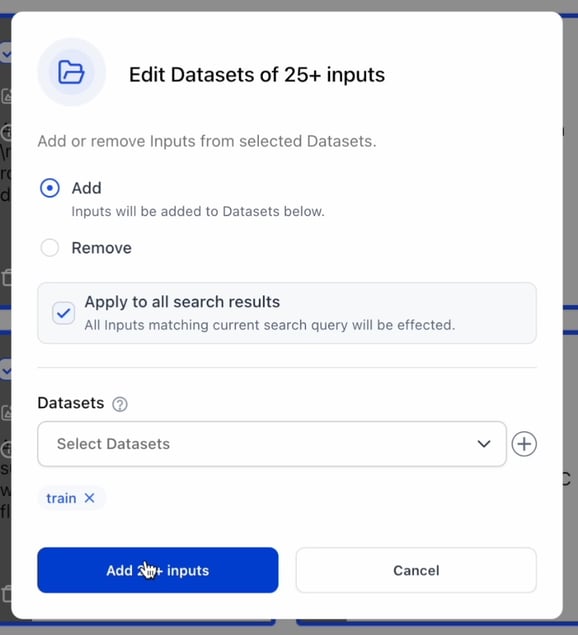

Once the inputs have finished uploading, you can "Select All" as well as "Apply to all Search Results" and add all the inputs to the "train" dataset.

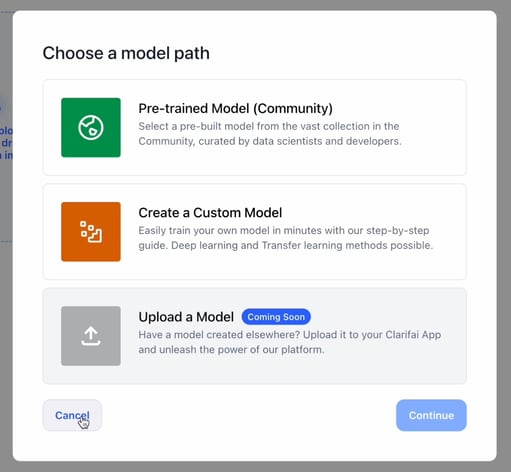

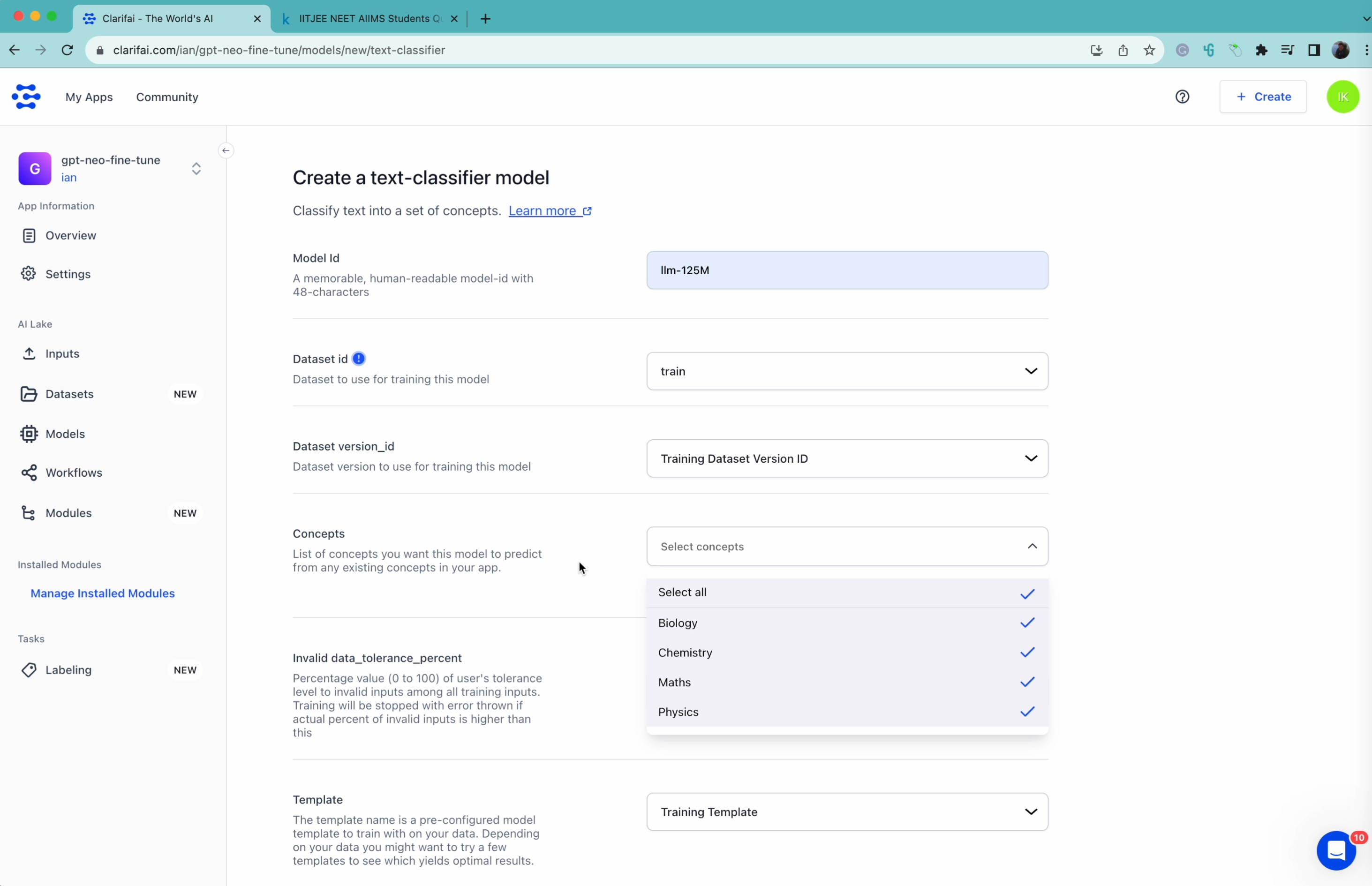

We then choose the models to fine-tune by navigating to the 'Models' tab of our app and adding a new model. We choose to build a custom model, opting for the text classifier. We then pick a name, choose the 'train' dataset, and choose ALL the concepts to label.

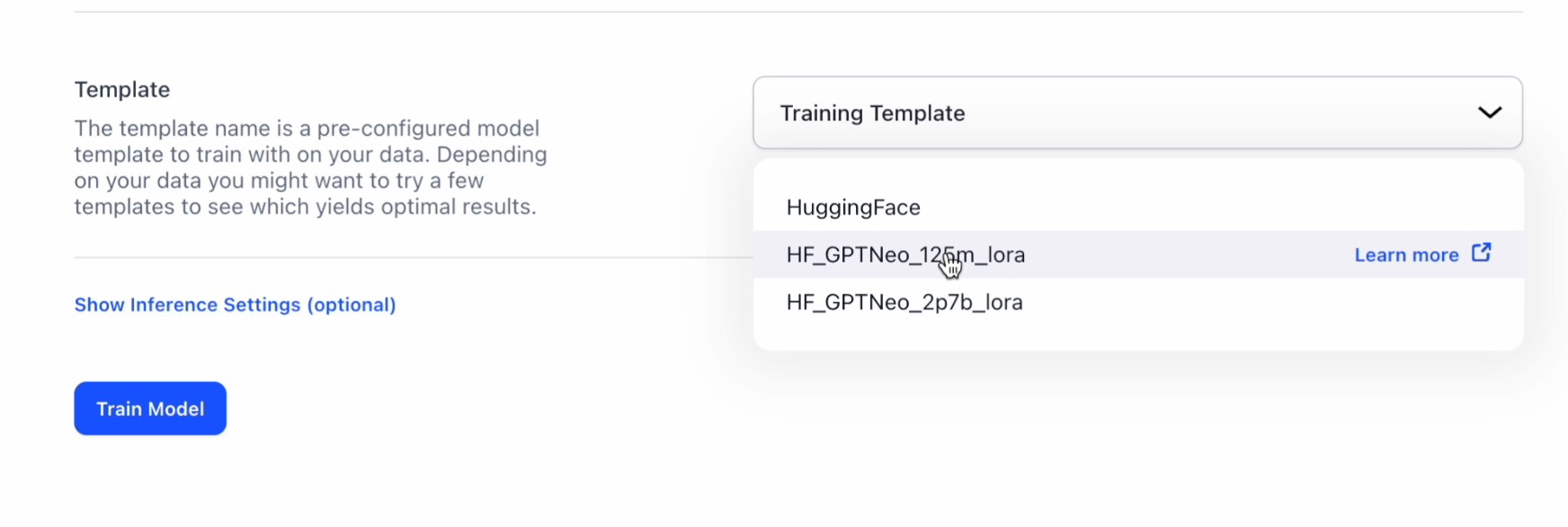

We then choose the template for the 125 million parameter version of GPT-Neo, and hit train.

We'll also perform the same process with the 2.7 billion parameter version of the model. Both models finish training after around 30 minutes, so we can then evaluate the quality of the fine-tuning.

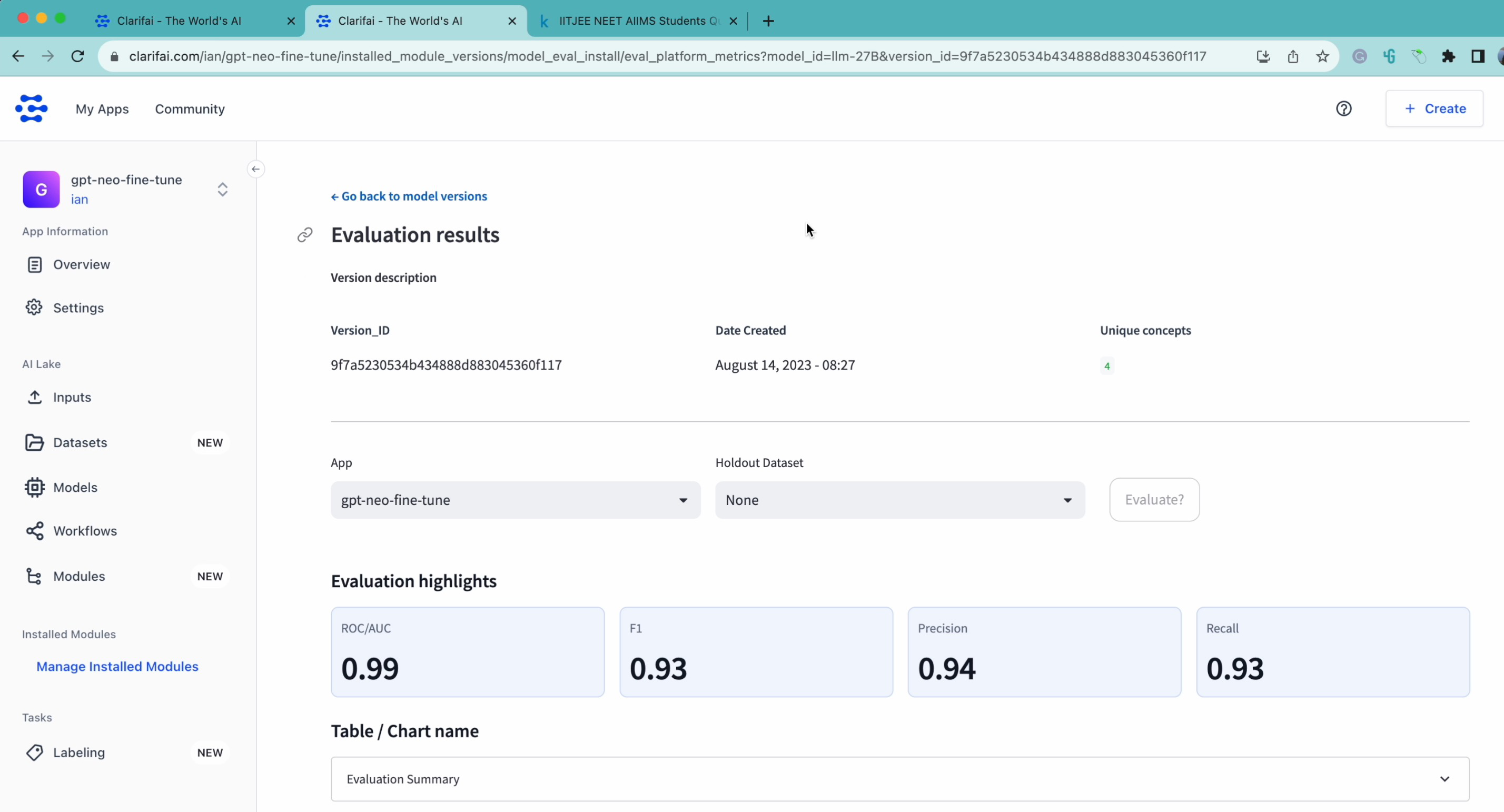

The evaluation results for the 125 million parameter model show an Area Under the Curve (AUC) of 92.86, while the 2.7 billion parameter model reaches an AUC of 99.07. These metrics, however, were computed on the training set. To verify that our models haven't overfit to the training data, which would hurt their ability to generalize, it's essential to evaluate them on the test data as well.
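For intuition about what the AUC measures, here is a minimal sketch of the ROC AUC for a single class: the fraction of (positive, negative) pairs in which the positive example gets the higher score, with ties counted as half. This is a generic definition, not Clarifai's implementation. The labels and scores are made up, and how the platform aggregates across the four subjects is not covered here. The result is on a 0 to 1 scale, whereas the figures quoted above appear to be on a 0 to 100 scale.

```python
def roc_auc(labels, scores):
    """Binary ROC AUC via pairwise comparison of positive and negative scores."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Hypothetical example: 1 = "question is about math", 0 = any other subject.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2]

auc = roc_auc(labels, scores)
print(f"{auc:.4f}")  # 0.8889
```

The pairwise loop is quadratic in the number of examples, which is fine for a small illustration. For larger evaluation sets a rank-based implementation would be the usual choice.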

Training data results for the 2.7 billion parameter version

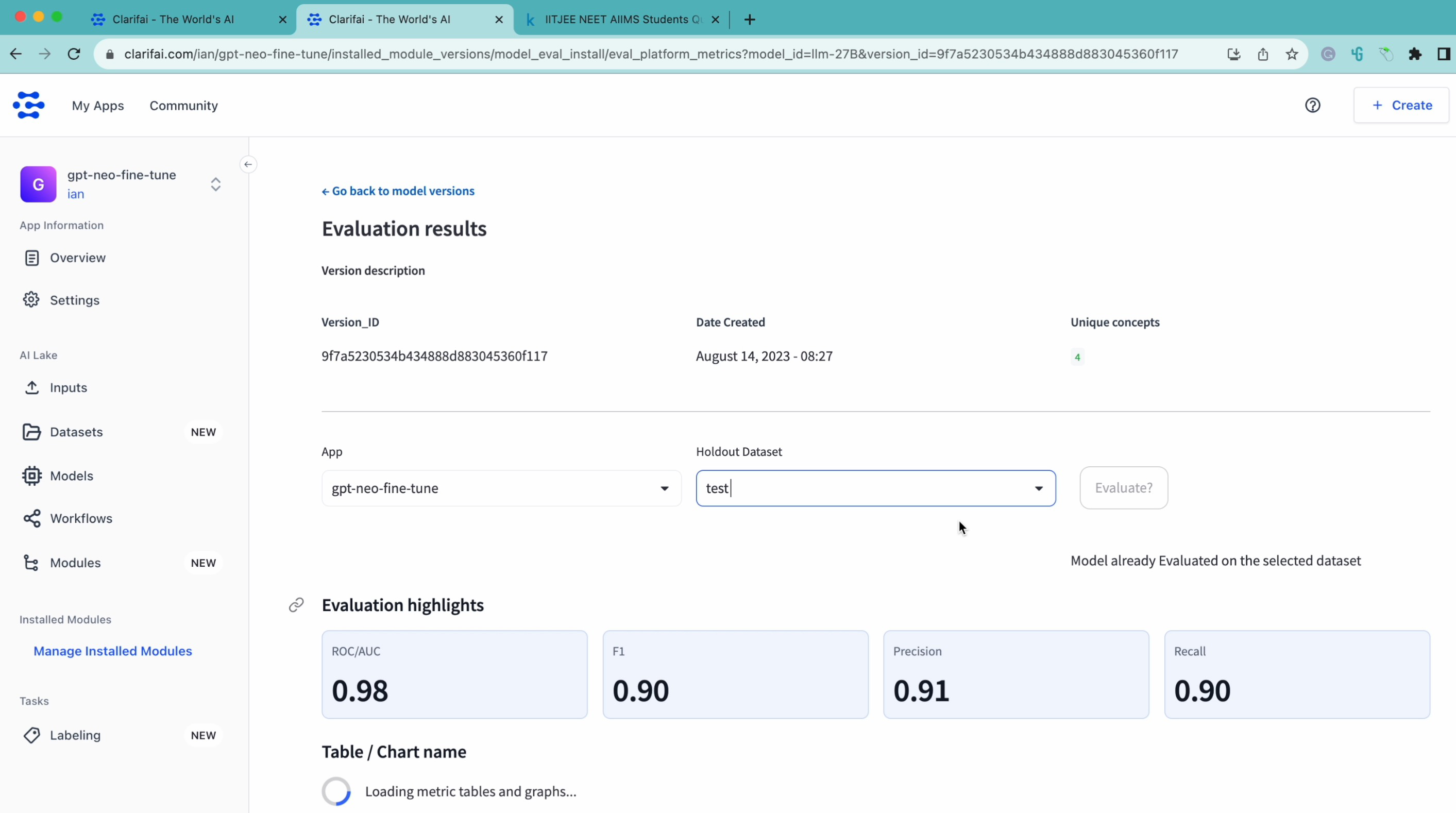

Test data results for the 2.7 billion parameter version

As we anticipated, the performance metrics drop slightly when evaluated on the test dataset. Even with this marginal decrease, the 2.7 billion parameter GPT-Neo model still performs strongly. This exercise shows how you can fine-tune the model for text classification with relative ease.

© 2023 Clarifai, Inc. Terms of Service Content TakedownPrivacy Policy
