The Rise of AI-Assisted Labeling

Data labeling is an indispensable element in the machine-learning ecosystem. For models to be trained effectively, they require clean, labeled data. Over the years, data labeling has undergone a significant transformation, mirroring the rapid advancements in technology and our understanding of machine learning methodologies.

In the early days of machine learning, data labeling was predominantly manual. Researchers and developers would sit for hours, meticulously assigning labels to individual data points. For instance, in image recognition tasks, images would be manually tagged with their respective categories, such as "cat," "dog," or "car." This process was time-consuming and prone to human error and inconsistency.

As datasets grew larger, manual labeling became even more challenging. This led to crowd-sourced platforms, like Amazon Mechanical Turk, where many users could contribute to labeling tasks. While this decentralized approach addressed the volume challenge somewhat, it introduced another layer of complexity in maintaining consistency and quality.

The idea of AI-assisted labeling began to take root in the late 2010s as machine-learning models became more sophisticated. Researchers realized that instead of starting from scratch with every new batch of data, previously trained models could be leveraged to make preliminary predictions or "suggestions" for labels. This didn't eliminate the need for human intervention but significantly reduced the manual labor involved. The human labelers could then validate and correct these suggestions, ensuring accuracy. This hybrid approach was both efficient and effective.

The inception of AI-assisted labeling was a game-changer, especially for large-scale projects. By combining the computational prowess of algorithms with the discerning judgment of human experts, it became possible to label vast datasets more accurately and in a fraction of the time previously required.

Clarifai has embraced and enhanced this technique, integrating AI-assisted labeling into our toolsets, making the labeling process even more streamlined and intuitive for developers and AI enthusiasts. Given the link between data quality and the performance of ML models, it’s imperative for AI developers to prioritize top-notch data annotation solutions right from the start of their projects.

At Clarifai, we’re constantly releasing new features that help AI developers optimize their training data production throughput, efficiency, and quality. Let’s take a look at how our newly released AI-Assist features can help you spend less time labeling and more time training.

What is Model-Assisted Labeling?

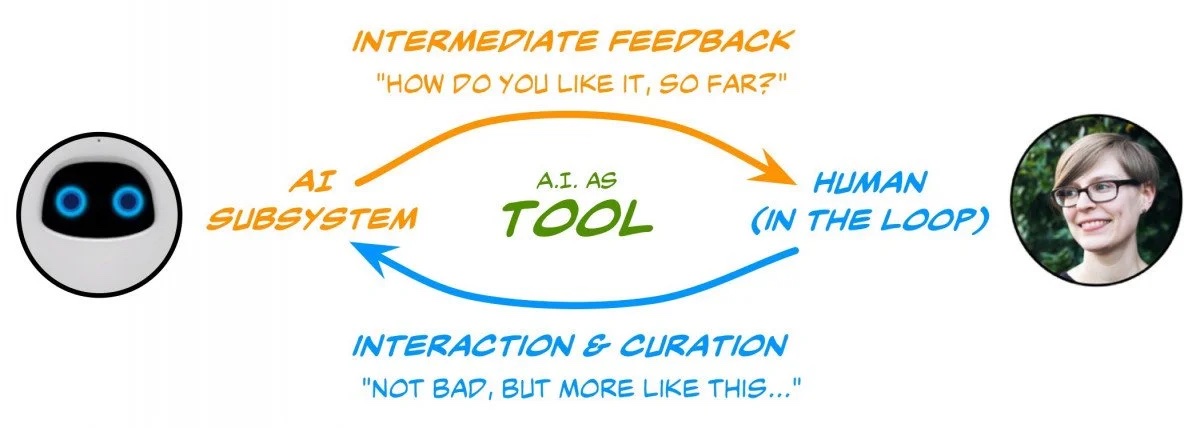

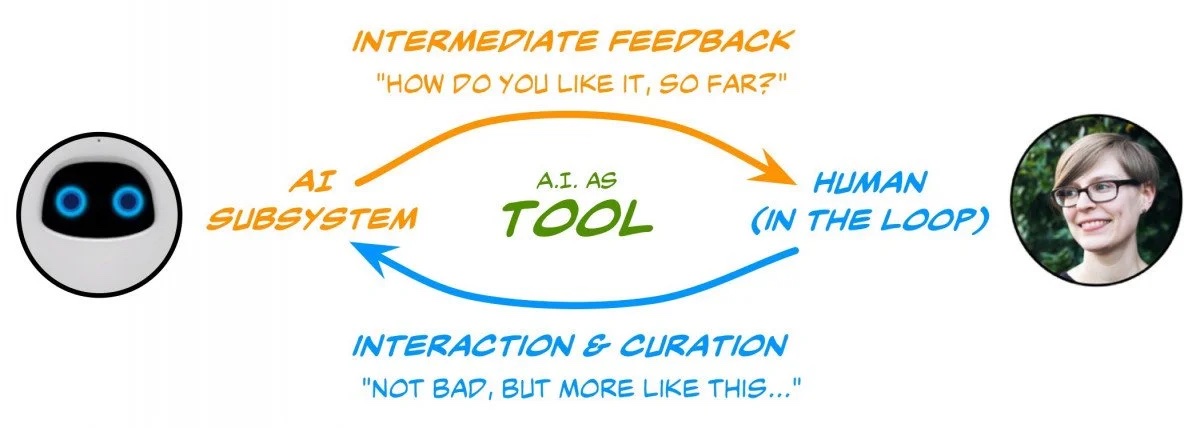

Model-assisted labeling (MAL) is a technique in which predictions from machine learning models are used to assist or augment the human labeling process. It is often employed within the context of broader human-in-the-loop (HITL) model development workflows, which can be understood as an iterative, human-machine process typically involving the following steps:

- Humans add ground-truth annotations to unlabeled data

- ML models are trained in a supervised fashion on labeled data

- Predictions from trained models are used to annotate new unlabeled data, subject to human verification

The HITL process operates as a continuous feedback loop in which training, tuning, and testing each inform the next round. Over successive iterations, the model's suggestions become more accurate and the pool of verified training data for a given use case grows. Human involvement is instrumental: reviewers correct the model's mistakes before those labels are used for training. By fine-tuning and testing their models iteratively in this way, organizations can make their models' output more accurate and actionable.
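
To make the loop concrete, here is a minimal sketch of the three steps listed above. It is illustrative only and does not use Clarifai's API: the toy nearest-centroid "model", the `review` callback that stands in for a human labeler, and every function name are assumptions made for this example.

```python
from collections import defaultdict


def train(labeled):
    """Fit a toy nearest-centroid model: one mean value per label."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for value, label in labeled:
        sums[label] += value
        counts[label] += 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(model, value):
    """Suggest the label whose centroid is closest to the value."""
    return min(model, key=lambda label: abs(model[label] - value))


def hitl_loop(seed_labeled, unlabeled_batches, review):
    """Run the human-in-the-loop cycle over successive batches.

    `review(value, suggested)` is a hypothetical stand-in for a human
    who either confirms the suggestion or returns a corrected label.
    """
    labeled = list(seed_labeled)        # step 1: human ground truth
    corrections = 0
    for batch in unlabeled_batches:
        model = train(labeled)          # step 2: supervised training
        for value in batch:             # step 3: suggest, then verify
            suggested = predict(model, value)
            final = review(value, suggested)
            if final != suggested:
                corrections += 1
            labeled.append((value, final))
    return labeled, corrections


if __name__ == "__main__":
    # Simulated reviewer: values below 5 are "small", the rest "large".
    reviewer = lambda value, suggested: "small" if value < 5 else "large"
    seed = [(1.0, "small"), (9.0, "large")]
    data, fixed = hitl_loop(seed, [[2.0, 8.0], [4.0, 6.0]], reviewer)
    print(len(data), "labeled examples,", fixed, "corrections")
```

Retraining once per batch rather than once per example is a design choice of this sketch. The number of corrections per batch is a rough signal of how much the model's suggestions can be trusted.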

Advantages of HITL

Beyond the advantages of precision and accuracy, the HITL methodology also introduces significant economic efficiencies into the machine learning lifecycle. Integrating humans and AI in an iterative cycle offers a blend of human expertise and computational efficiency, which has far-reaching cost implications.

Firstly, there's the matter of manpower costs. Traditional data labeling and model refining processes without AI assistance often require extensive human resources. With the HITL approach, AI assists in the initial labeling stages, reducing the reliance on large annotating teams. Consequently, projects can achieve substantial savings, especially when dealing with vast datasets that would otherwise demand significant man-hours.

Time-related savings are another dimension worth highlighting. In traditional setups, the extended durations of labeling and refining can push back model deployment, causing a ripple effect of delayed ROI realization. However, the HITL process, with AI's acceleration, ensures quicker label validations and model refinements. As a result, projects not only maintain their quality standards but also adhere to tighter timelines, enabling businesses to deploy their AI solutions more promptly. This accelerated deployment is pivotal for organizations in competitive markets, where the first-mover advantage can dictate market share and profitability.

Infrastructural overhead traditionally associated with large-scale data processing projects is also significantly reduced. With the HITL model's streamlined approach, there’s less of a need for expansive infrastructure. Clarifai’s platform offers the added advantage of scalability without the accompanying capital expenditure, reducing the direct costs associated with infrastructure and cutting down on overheads like maintenance, administration, and utilities.

Putting AI-Assist to work

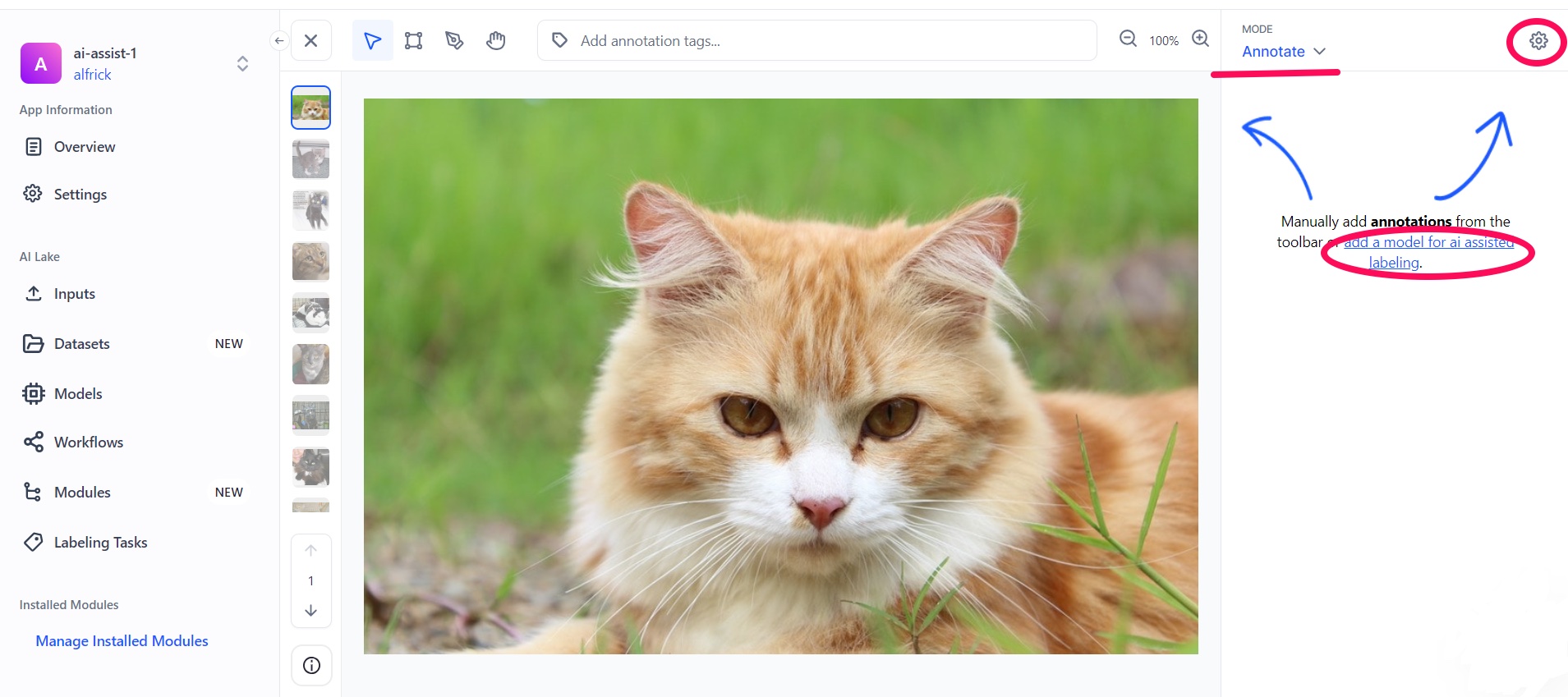

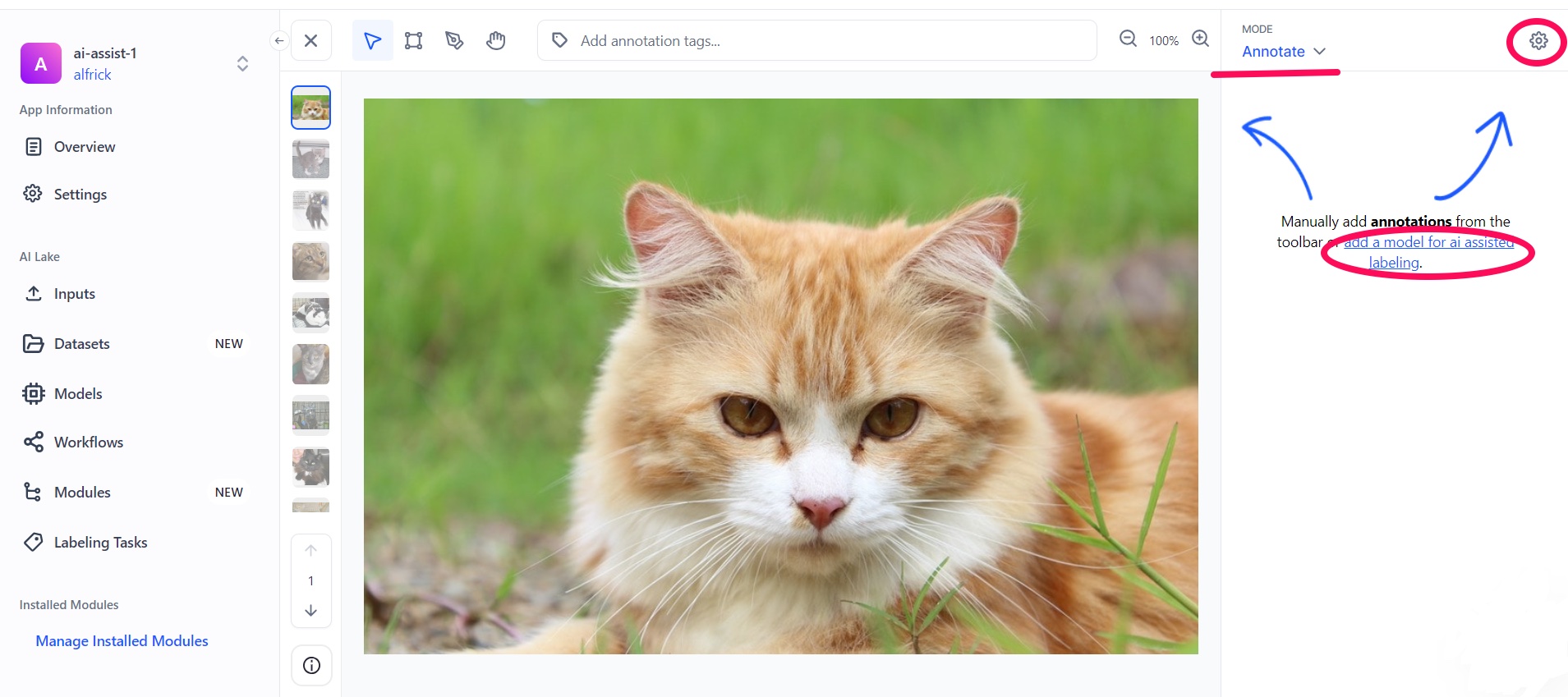

Unlike the existing Smart Search and Bulk Labeling features, which accelerate labeling from the Input-Manager screen, our newest AI-Assist features are used while working with individual Inputs in the Input-Viewer screen.

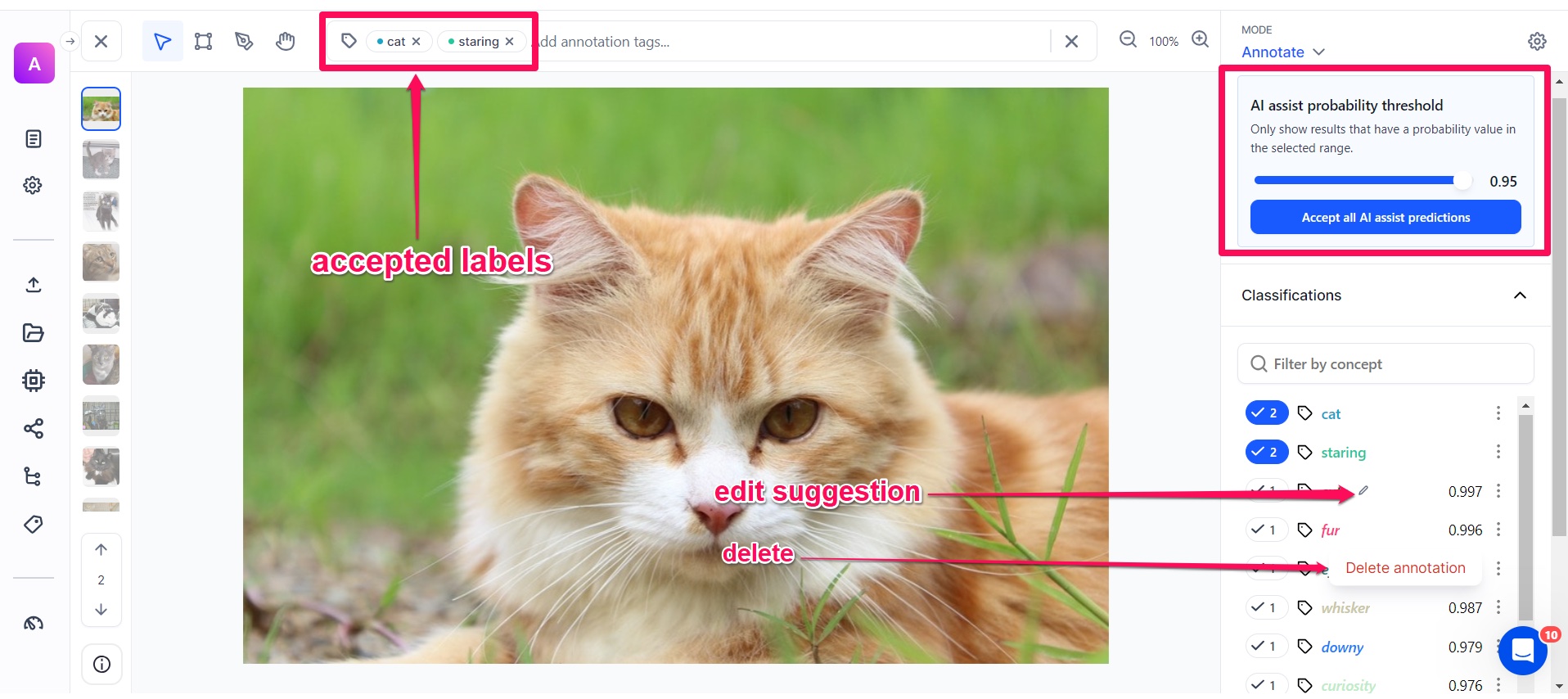

To start, simply select an existing Model or Workflow (either owned by you or from Clarifai’s Community) within the Annotate Mode settings in the right-hand sidebar.

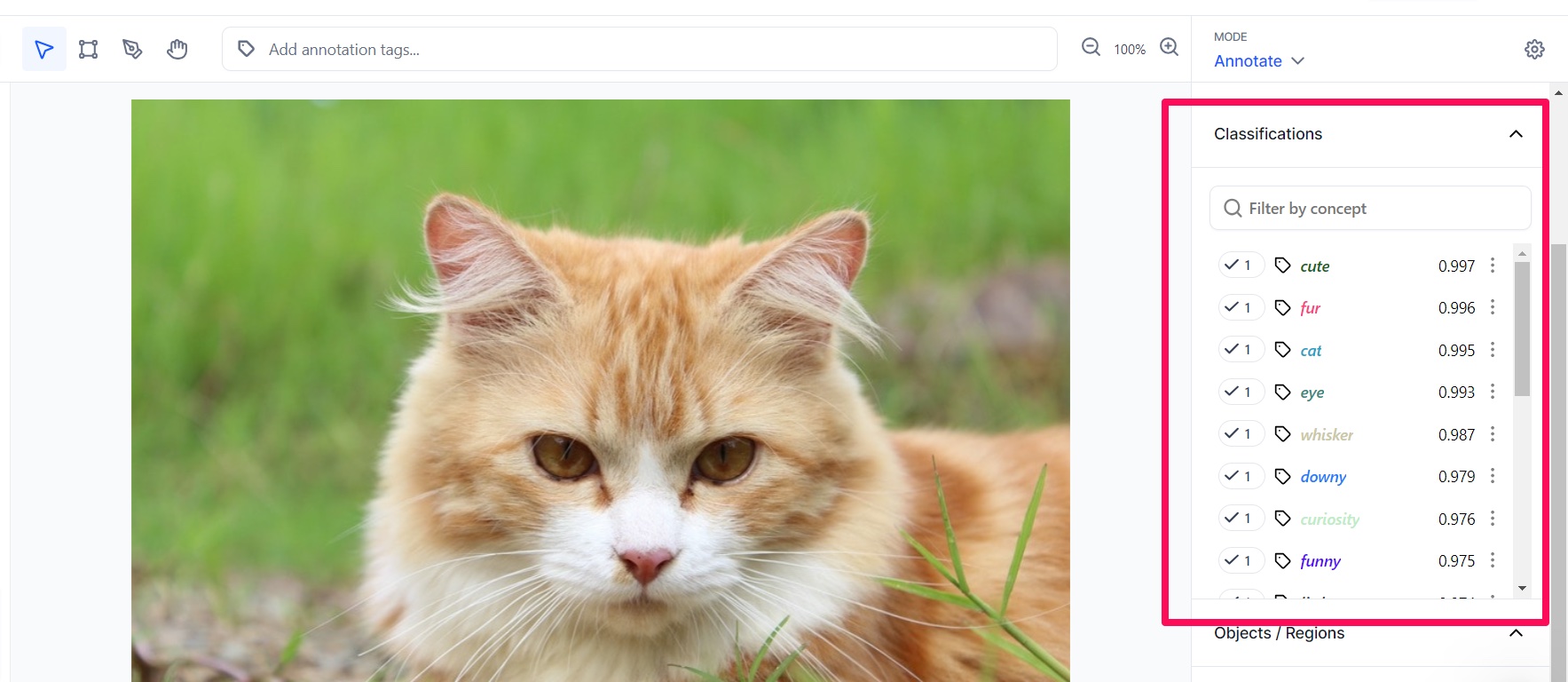

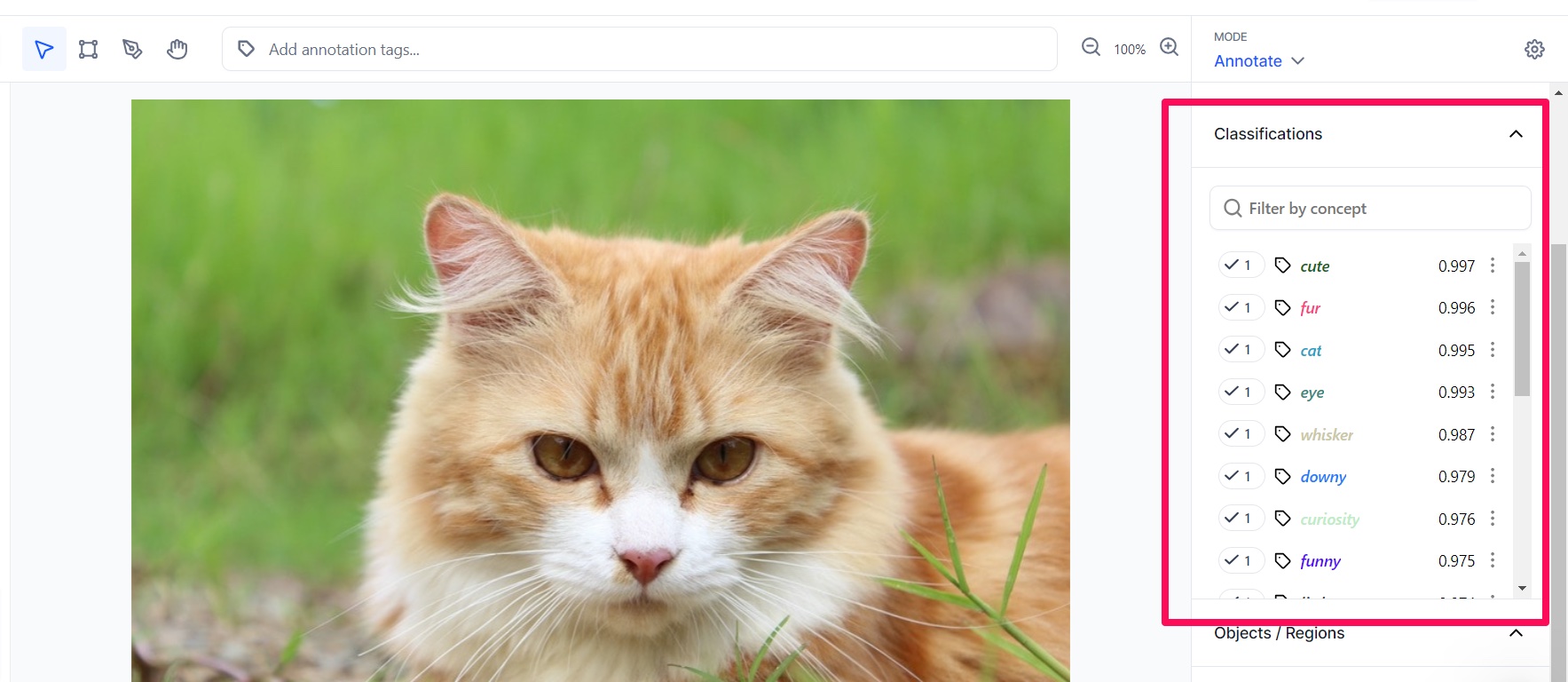

After you choose a model or workflow, suggested Annotations appear sorted in descending order of confidence score.

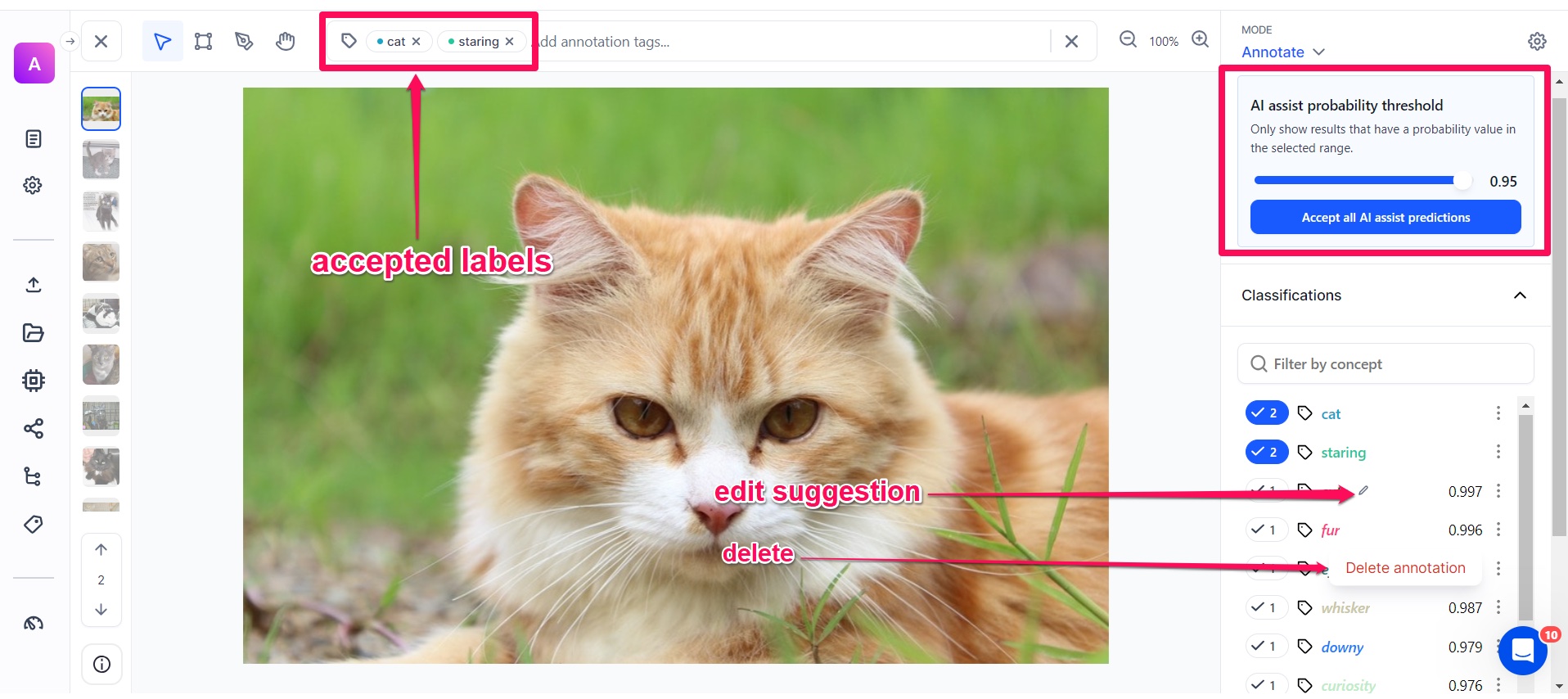

From here, suggestions can be accepted individually or in bulk using the minimum confidence threshold slider.
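
The sorting and threshold behavior described above can be sketched in a few lines. This is not Clarifai's implementation or API: the `Suggestion` type, its field names, and the inclusive `>=` comparison at the threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    concept: str       # hypothetical name for the suggested label
    confidence: float  # model confidence score in [0, 1]


def sort_suggestions(suggestions):
    """Order suggestions from most to least confident."""
    return sorted(suggestions, key=lambda s: s.confidence, reverse=True)


def bulk_accept(suggestions, min_confidence):
    """Split suggestions at a minimum confidence threshold.

    Returns (accepted, pending). Treating the threshold as inclusive
    is an assumption of this sketch.
    """
    ranked = sort_suggestions(suggestions)
    accepted = [s for s in ranked if s.confidence >= min_confidence]
    pending = [s for s in ranked if s.confidence < min_confidence]
    return accepted, pending


if __name__ == "__main__":
    suggestions = [
        Suggestion("dog", 0.62),
        Suggestion("cat", 0.97),
        Suggestion("car", 0.15),
    ]
    accepted, pending = bulk_accept(suggestions, min_confidence=0.6)
    print("accepted:", [s.concept for s in accepted])
    print("left for manual review:", [s.concept for s in pending])
```

Raising the threshold shifts more suggestions into manual review, trading labeling speed for a lower chance of accepting a wrong label.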

Selecting the Best Annotation Tool For Your Task

Choosing the right labeling tools for your AI development team is a critical decision that can significantly impact your project's success. You can either develop your own custom toolset or leverage existing commercial tools. However, building in-house tools can be complex and time-consuming, especially if you hope to use them in human-in-the-loop model development workflows.

Compared to in-house tooling, Clarifai’s labeling tools make it easier to jump into labeling your data at a fraction of the upfront cost. Our labeling tools are continuously evolving, with new features like AI-Assist designed to enhance label quality while minimizing the effort required from your team. They are one part of the end-to-end AI development platform we are building to help you get the best results possible from your ML models. If you’re looking to train and deploy high-quality ML models in the most efficient way possible, sign up for a free Clarifai Community account today!