Often, when we discuss moderation, we talk only about blocking NSFW (not safe for work) content. However, for many companies, moderation is a tool that takes on many forms depending on each company's unique needs. In this post, I'll walk you through a few examples of moderation in action. Want an even deeper dive on each use case and moderation in general? Download our guide here!

Keeping Users On-Topic:

Even the most expansive of online marketplaces have limits on what they allow users to sell on their platforms. Most prohibit the sale of animals, body parts and offensive material. If they have a specific item focus, like food, they may also prohibit the sale of certain products, like makeup. Still, that doesn’t stop users from creating such listings, which crowd out listings for permitted items.

The goal of moderation here is to filter out or remove these posts. Some platforms do this with self-selection and rules-based moderation: when a user uploads an item and selects attributes such as the item type or the year it was made, predefined rules automatically reject the listing if those selections fall into a prohibited category. However, when a user is deliberately deceptive, for example by choosing a permitted category for a prohibited item, this type of moderation is easy to circumvent.
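To make that weakness concrete, here is a minimal sketch of a rules-based check. The category names, the `Listing` type, and `passes_rules` are hypothetical illustrations, not any platform's actual rules; the point is that the rule only sees what the seller selected, never the item itself.

```python
from dataclasses import dataclass

# Hypothetical prohibited categories for a general marketplace.
PROHIBITED_CATEGORIES = {"animals", "body parts", "offensive material"}


@dataclass
class Listing:
    title: str
    category: str  # chosen by the seller at upload time (self-selection)


def passes_rules(listing: Listing) -> bool:
    """Reject the listing if the seller's self-selected category is prohibited."""
    return listing.category.strip().lower() not in PROHIBITED_CATEGORIES


honest = Listing(title="Pet parrot", category="Animals")
deceptive = Listing(title="Pet parrot", category="Home decor")

print(passes_rules(honest))     # False: the category rule rejects it
print(passes_rules(deceptive))  # True: the same item slips through
```

Because the rule trusts the seller's chosen category, the second listing passes even though it describes the same item.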

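With computer vision moderation, these posts can be filtered out before they go live, even when thousands of listings are uploaded to the platform daily. Because the model analyzes the listing's images rather than the attributes the seller selects, mislabeling an item is a much less reliable way to get it past the filter. By eliminating these irrelevant listings, companies can keep their marketplaces clutter-free, so customers see only posts from valid vendors.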

Protecting the Brand Image:

Sometimes moderation is more about ensuring the content meets the standards the brand expects. This might mean blocking pixelated or low-quality images, finding and removing watermarks, enforcing consistent backgrounds (such as plain white), or identifying misused symbols.
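A production system would more likely rely on a trained computer vision model for checks like these. Purely to illustrate one of them, the sketch below swaps in a much simpler pixel heuristic for a white-background rule: it treats an image as a grid of RGB tuples and passes it if nearly all of its border pixels are close to white. The function names and both thresholds are hypothetical.

```python
Pixel = tuple[int, int, int]  # (R, G, B), each channel 0-255
Image = list[list[Pixel]]     # rows of pixels


def border_pixels(image: Image) -> list[Pixel]:
    """Collect the pixels on the outer edge of the image, each exactly once."""
    height, width = len(image), len(image[0])
    top = list(image[0])
    bottom = list(image[-1]) if height > 1 else []
    # Left/right columns, excluding the corners already counted in top/bottom.
    left = [image[r][0] for r in range(1, height - 1)]
    right = [image[r][width - 1] for r in range(1, height - 1)] if width > 1 else []
    return top + bottom + left + right


def has_white_background(image: Image, min_channel: int = 240, min_fraction: float = 0.95) -> bool:
    """Hypothetical rule: pass if at least `min_fraction` of border pixels are near-white."""
    border = border_pixels(image)
    near_white = sum(1 for r, g, b in border if min(r, g, b) >= min_channel)
    return near_white / len(border) >= min_fraction


WHITE, GRAY, RED = (255, 255, 255), (128, 128, 128), (200, 30, 30)

# 4x4 "product shots": a red item in the middle of a white or gray background.
on_white = [[WHITE] * 4, [WHITE, RED, RED, WHITE], [WHITE, RED, RED, WHITE], [WHITE] * 4]
on_gray = [[GRAY] * 4, [GRAY, RED, RED, GRAY], [GRAY, RED, RED, GRAY], [GRAY] * 4]

print(has_white_background(on_white))  # True
print(has_white_background(on_gray))   # False
```

Checking only the border is a deliberate simplification: it assumes the product sits inside the frame, so an item touching the edge could fail the check even on a white backdrop.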

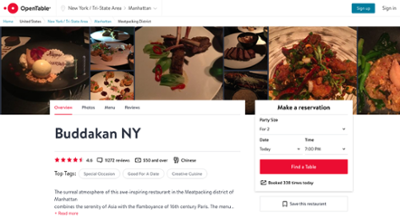

For example, OpenTable features real photos that diners share on social media on its restaurant pages, offering a look at what the dining experience is like. This kind of authentic content has become increasingly common, and expected, over the past couple of years: according to Adweek, 93% of consumers find user-generated content (UGC) helpful when making a purchasing decision.

However, OpenTable wants to ensure that every photo is not only relevant but also visually appealing, and that it does a good job of enticing future diners to book a reservation. To scale this process, OpenTable uses Clarifai's computer vision technology to moderate and curate the photos.

And, of course, NSFW moderation:

Many companies still need to moderate NSFW content. Photobucket, for example, is one of the world’s most popular online image and video hosting communities. The platform hosts over 15 billion images, with two million more uploaded every day. While user-generated content (UGC) is Photobucket’s bread and butter, it also poses a Trust and Safety risk, because some users upload illegal or unwanted content. With a firehose of content continually flowing in, it’s impossible for a team of human moderators to catch every image that violates Photobucket’s terms of service.

Before turning to Clarifai for computer vision-powered moderation, Photobucket used a team of five human moderators to screen user-generated content for illegal and offensive material. These moderators manually reviewed a queue of images randomly sampled from just 1% of the two million daily uploads. Not only was Photobucket potentially missing 99% of the unwanted content uploaded to its site, but its human moderators were also stuck in an unrewarding workflow that led to low productivity.
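The arithmetic behind that gap is easy to check. The sketch below draws a uniform random 1% sample from two million upload IDs, using the figures above; splitting the sample evenly across the five moderators is an assumption for illustration, not something stated about Photobucket's actual workflow.

```python
import random

DAILY_UPLOADS = 2_000_000  # daily uploads, per the figures above
SAMPLE_RATE = 0.01         # 1% of uploads reviewed
MODERATORS = 5             # size of the original human team


def daily_review_sample(upload_ids, rate, rng):
    """Draw a uniform random sample (without replacement) at the given rate."""
    k = round(len(upload_ids) * rate)
    return rng.sample(upload_ids, k)


rng = random.Random(42)  # seeded so the sample is reproducible
sample = daily_review_sample(range(DAILY_UPLOADS), SAMPLE_RATE, rng)

reviewed = len(sample)
per_moderator = reviewed // MODERATORS  # assumes an even split
unreviewed_share = 1 - reviewed / DAILY_UPLOADS

print(reviewed)                   # 20000
print(per_moderator)              # 4000
print(f"{unreviewed_share:.0%}")  # 99%
```

Because the sample is uniform, each unwanted image has roughly a 1% chance of landing in the review queue, which is where the 99% figure comes from.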

To catch more unwanted UGC, Photobucket chose Clarifai’s Not Safe for Work (NSFW) nudity recognition model to automatically moderate offensive content as it is uploaded to the site. Now, 100% of the images uploaded to Photobucket every day pass through Clarifai’s NSFW filter in real time. Images flagged as NSFW are then routed to a moderation queue, where only one human moderator is needed to review them. Where’s the rest of the human moderation team? They’re now doing customer support and making the Photobucket user experience even better.
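The routing described above, where every upload is scored and only flagged images reach a human, can be sketched as a confidence threshold. This is not Clarifai's API: `score_nsfw` is a hypothetical stand-in for any model that returns an NSFW probability between 0 and 1, and both the 0.85 threshold and the choice to publish unflagged images immediately are assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModerationPipeline:
    # Hypothetical stand-in for an NSFW model: returns P(NSFW) in [0, 1].
    score_nsfw: Callable[[bytes], float]
    threshold: float = 0.85  # hypothetical cut-off
    review_queue: list[str] = field(default_factory=list)
    published: list[str] = field(default_factory=list)

    def on_upload(self, image_id: str, image_bytes: bytes) -> str:
        """Score every upload; hold flagged images for human review."""
        score = self.score_nsfw(image_bytes)
        if score >= self.threshold:
            self.review_queue.append(image_id)  # a human moderator decides
            return "queued_for_review"
        self.published.append(image_id)  # assumption: unflagged images go live
        return "published"


# Fake scores stand in for real model output in this demo.
fake_scores = {b"beach-photo": 0.02, b"explicit-photo": 0.97}
pipeline = ModerationPipeline(score_nsfw=lambda data: fake_scores[data])

print(pipeline.on_upload("img-1", b"beach-photo"))     # published
print(pipeline.on_upload("img-2", b"explicit-photo"))  # queued_for_review
print(pipeline.review_queue)                           # ['img-2']
```

Raising the threshold sends fewer images to the human queue but lets more borderline content through; lowering it does the opposite, so the cut-off trades reviewer workload against missed content.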