Introduction

Choosing the right GPU is a critical decision when running machine learning and LLM workloads. You need enough compute to run your models efficiently without paying for capacity you don’t use. In this post, we compare two solid options: NVIDIA’s A10 and the newer L40S. We’ll break down their specs, LLM inference benchmarks, and pricing to help you choose based on your workload.

The industry also faces a growing challenge: nearly 40% of companies struggle to run AI projects because of limited access to GPUs. Demand is outpacing supply, making it harder to scale reliably, and relying on a single cloud or hardware provider can slow your projects down. This is where flexibility matters. We’ll explore how Clarifai’s Compute Orchestration gives you access to both A10 and L40S GPUs, so you can switch based on availability and workload needs while avoiding vendor lock-in.

Let’s dive in and take a look at these two different GPU architectures.

Ampere GPUs (NVIDIA A10)

NVIDIA’s Ampere architecture, launched in 2020, introduced third-generation Tensor Cores optimized for mixed-precision compute (FP16, TF32, INT8) and brought Multi-Instance GPU (MIG) partitioning to data-center GPUs such as the A100 (the A10 itself does not support MIG). The A10 is designed for cost-effective AI inference, computer vision, and graphics-heavy workloads, and it handles mid-sized LLMs, vision models, and video tasks efficiently. With second-generation RT Cores and support for NVIDIA RTX Virtual Workstation (vWS), it is also a solid choice for running graphics and AI workloads on virtualized infrastructure.

Ada Lovelace GPUs (NVIDIA L40S)

The Ada Lovelace architecture pushes performance and efficiency further for modern AI and graphics workloads. The L40S features fourth-generation Tensor Cores with FP8 precision support, which significantly accelerates large LLMs, generative AI, and fine-tuning. It also adds third-generation RT Cores and AV1 hardware encoding, making it a strong fit for complex 3D graphics, rendering, and media pipelines. Together, these features let the L40S handle multi-workload environments where AI compute and high-end graphics run side by side.

A10 vs. L40S: Specifications Comparison

Core Count and Clock Speeds

The L40S has 18,176 CUDA cores, nearly twice the A10’s 9,216, giving it far more parallel processing capacity for AI and ML workloads. CUDA cores are the GPU’s general-purpose parallel processors; running thousands of operations at once is what makes GPUs effective at accelerating AI tasks.

The L40S also runs at a higher boost clock of 2520 MHz, a 49% increase over the A10’s 1695 MHz, resulting in faster compute performance.

VRAM Capacity and Memory Bandwidth

The L40S offers 48 GB of VRAM, double the A10’s 24 GB, so it can hold larger models, longer contexts, and bigger batches in GPU memory. Its memory bandwidth is also about 44% higher, at 864.0 GB/s versus the A10’s 600.2 GB/s, which speeds up memory-intensive tasks such as token generation during LLM inference.
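The ratios quoted in this section can be reproduced with a few lines of arithmetic. This is a minimal sketch: the clock, VRAM, and bandwidth values are the ones given above, while the CUDA core counts (9,216 and 18,176) are NVIDIA’s published datasheet figures rather than anything measured for this post.

```python
# Spec figures per GPU as (A10, L40S). Clock, VRAM, and bandwidth are the values
# quoted in this comparison; CUDA core counts are NVIDIA's published datasheet figures.
SPECS = {
    "cuda_cores": (9_216, 18_176),
    "boost_clock_mhz": (1_695, 2_520),
    "vram_gb": (24, 48),
    "mem_bandwidth_gb_s": (600.2, 864.0),
}


def spec_ratios(specs):
    """Return the L40S / A10 ratio for each spec, rounded to two decimals."""
    return {name: round(l40s / a10, 2) for name, (a10, l40s) in specs.items()}


if __name__ == "__main__":
    for name, ratio in spec_ratios(SPECS).items():
        # A ratio of 1.49 means the L40S value is 49% higher than the A10 value.
        print(f"{name:>20}: {ratio:.2f}x ({(ratio - 1) * 100:+.0f}%)")
```

Raw spec ratios are only an upper-level guide; the benchmarks below show how much of that headroom real LLM workloads actually use.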

A10 vs L40S: Performance

How do the A10 and L40S compare in real-world LLM inference? Our research team benchmarked the MiniCPM-4B, Phi4-mini-instruct, and Llama-3.2-3b-instruct models running in FP16 (half precision) on both GPUs. FP16 halves memory use compared with FP32 and runs on the GPUs’ Tensor Cores, which makes it a common choice for production inference.
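Because FP16 stores each weight in 2 bytes, a quick estimate shows how much VRAM the benchmarked models need just for their weights. The parameter counts below are approximations and assumptions (check each model card for exact values), and the estimate ignores the KV cache, activations, and framework overhead, all of which also consume GPU memory.

```python
# FP16 stores each weight in 2 bytes (FP32 uses 4).
BYTES_PER_PARAM_FP16 = 2

# Approximate parameter counts (assumption: check each model card for exact values).
MODELS = {
    "MiniCPM-4B": 4.0e9,
    "Phi4-mini-instruct": 3.8e9,
    "Llama-3.2-3b-instruct": 3.2e9,
}

VRAM_GB = {"A10": 24, "L40S": 48}


def weights_gb(num_params, bytes_per_param=BYTES_PER_PARAM_FP16):
    """Memory for the model weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def max_params_fp16(vram_gb):
    """Largest parameter count whose FP16 weights alone would fill vram_gb."""
    return vram_gb * 1e9 / BYTES_PER_PARAM_FP16


if __name__ == "__main__":
    for name, params in MODELS.items():
        print(f"{name}: ~{weights_gb(params):.1f} GB of FP16 weights")
    for gpu, vram in VRAM_GB.items():
        print(f"{gpu} ({vram} GB): weights-only ceiling ~{max_params_fp16(vram) / 1e9:.0f}B params")
```

All three models fit comfortably on either GPU, so the performance differences that follow come mainly from compute and memory bandwidth rather than capacity. The weights-only ceilings (about 12B parameters on the A10 and 24B on the L40S) are upper bounds; the KV cache grows with context length and concurrency, so practical limits are lower.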

We tested latency (the time taken to generate each token and complete a full request, measured in seconds) and throughput (the number of tokens processed per second) across various scenarios. Both metrics are crucial for evaluating LLM performance in production.
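The post doesn’t state exactly how per-token latency was computed. A common approach, sketched here as an assumption, is to record when each output token arrives and derive the metrics from those timestamps. `RequestTiming` and `request_metrics` are hypothetical names, and because tools define "latency per token" in different ways, the sketch reports both end-to-end latency divided by token count and the mean gap between consecutive tokens.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RequestTiming:
    start: float                                            # time the request was sent, in seconds
    token_times: List[float] = field(default_factory=list)  # arrival time of each output token


def request_metrics(t: RequestTiming) -> dict:
    """Latency and throughput for one request, computed from token timestamps."""
    n = len(t.token_times)
    if n == 0:
        raise ValueError("request produced no tokens")
    end_to_end = t.token_times[-1] - t.start
    # Mean gap between consecutive tokens, excluding the wait for the first token.
    inter_token = (t.token_times[-1] - t.token_times[0]) / (n - 1) if n > 1 else 0.0
    return {
        "time_to_first_token_s": t.token_times[0] - t.start,
        "end_to_end_latency_s": end_to_end,
        "latency_per_token_s": end_to_end / n,
        "mean_inter_token_latency_s": inter_token,
        "tokens_per_s": n / end_to_end,
    }


if __name__ == "__main__":
    timing = RequestTiming(start=0.0, token_times=[0.2, 0.3, 0.4, 0.5])
    print(request_metrics(timing))
```

For the four-token example, the first token arrives after 0.2 s, the request completes at 0.5 s, and the sketch reports 0.125 s per token end to end, a 0.1 s inter-token gap, and 8 tokens/sec.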

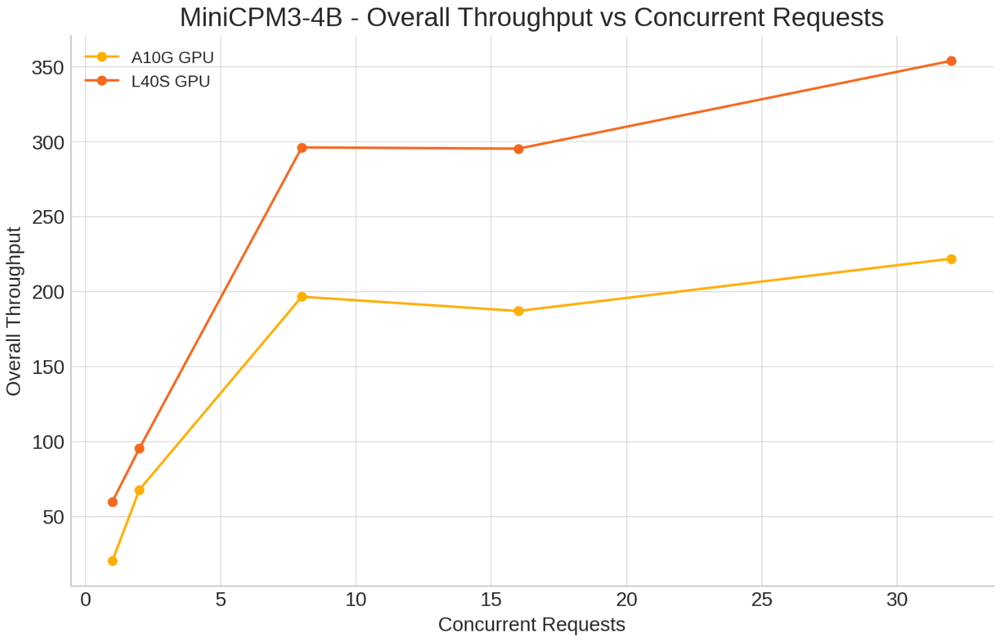

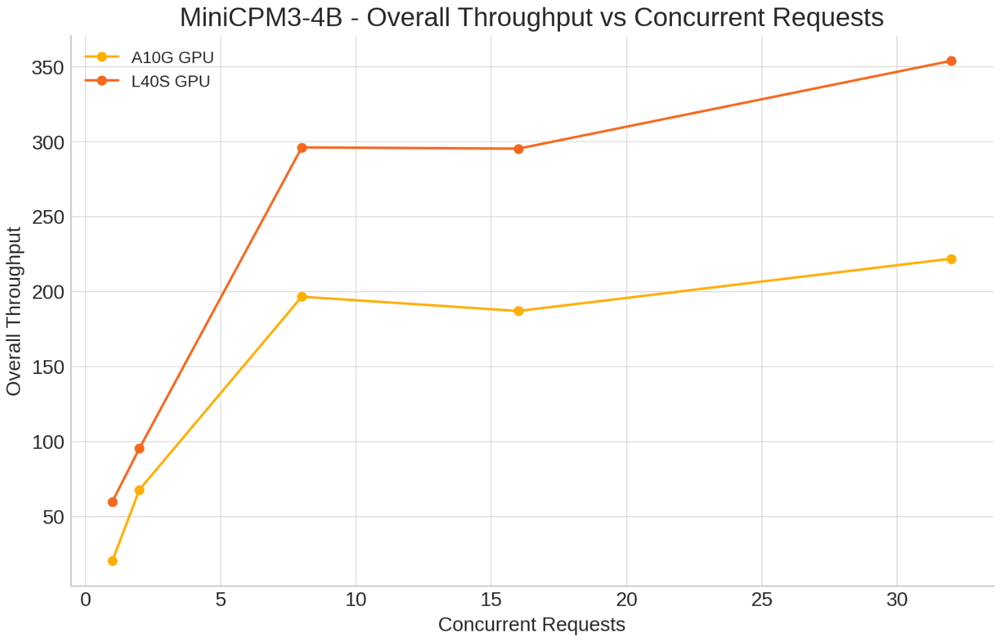

MiniCPM-4B

Scenarios tested:

- Concurrent Requests: 1, 2, 8, 16, 32

- Input Tokens: 500

- Output Tokens: 150
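The post doesn’t describe its load-generation harness. A minimal sketch of how a concurrency sweep like this is commonly run with an async client is shown below. `fake_generate` is a hypothetical stand-in that just sleeps per output token, so the numbers it prints are meaningless; to benchmark a real deployment, replace it with a call to your inference server.

```python
import asyncio
import time

INPUT_TOKENS = 500
OUTPUT_TOKENS = 150
CONCURRENCY_LEVELS = [1, 2, 8, 16, 32]


async def fake_generate(prompt_tokens: int, max_new_tokens: int, per_token_s: float) -> int:
    """Hypothetical stand-in for an inference server call.

    It ignores the prompt and sleeps per_token_s for every output token; it does
    NOT model GPU contention, so throughput scales linearly with concurrency.
    """
    for _ in range(max_new_tokens):
        await asyncio.sleep(per_token_s)
    return max_new_tokens


async def run_level(concurrency: int, per_token_s: float) -> float:
    """Send `concurrency` requests at once and return aggregate output tokens/sec."""
    start = time.perf_counter()
    generated = await asyncio.gather(
        *(fake_generate(INPUT_TOKENS, OUTPUT_TOKENS, per_token_s) for _ in range(concurrency))
    )
    elapsed = time.perf_counter() - start
    return sum(generated) / elapsed


async def sweep(per_token_s: float = 0.001) -> dict:
    """Run each concurrency level in turn, matching the scenarios listed above."""
    results = {}
    for level in CONCURRENCY_LEVELS:
        results[level] = await run_level(level, per_token_s)
    return results


if __name__ == "__main__":
    for level, tps in asyncio.run(sweep()).items():
        print(f"{level:>2} concurrent requests: {tps:,.0f} output tokens/sec")
```

On a real GPU, concurrent requests compete for compute and memory bandwidth, so aggregate throughput rises more slowly than the concurrency level while per-token latency grows, which is the pattern the results below show.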

Key Insights:

- Single Concurrent Request: L40S significantly improved latency per token (0.016s vs. 0.047s on A10G) and increased end-to-end throughput from 97.21 to 296.46 tokens/sec.

- Higher Concurrency (32 concurrent requests): L40S maintained lower latency per token (0.067s vs. 0.088s) and reached 331.96 tokens/sec throughput, while A10G reached 258.22 tokens/sec.

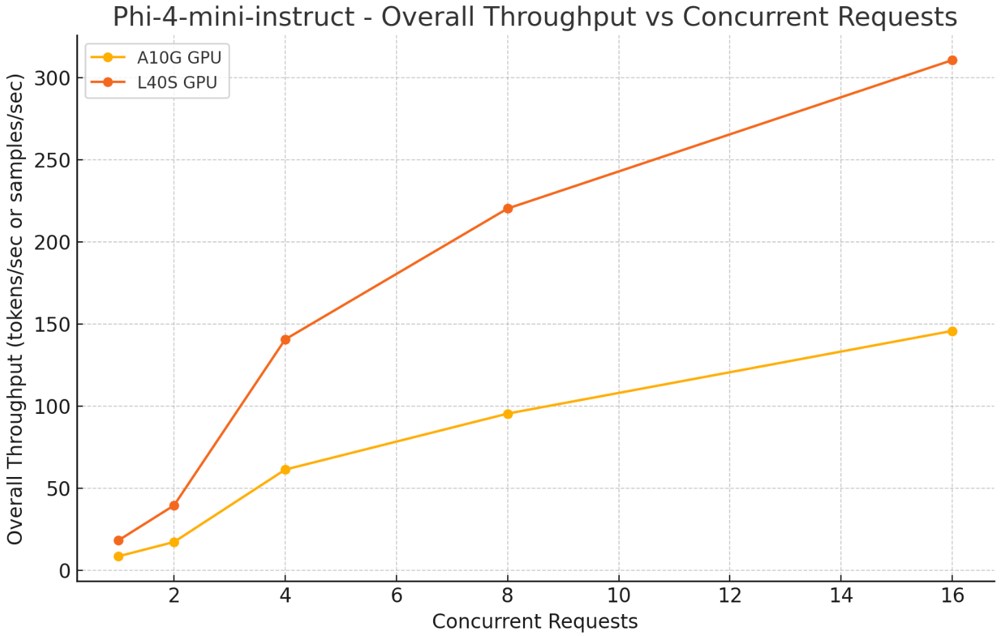

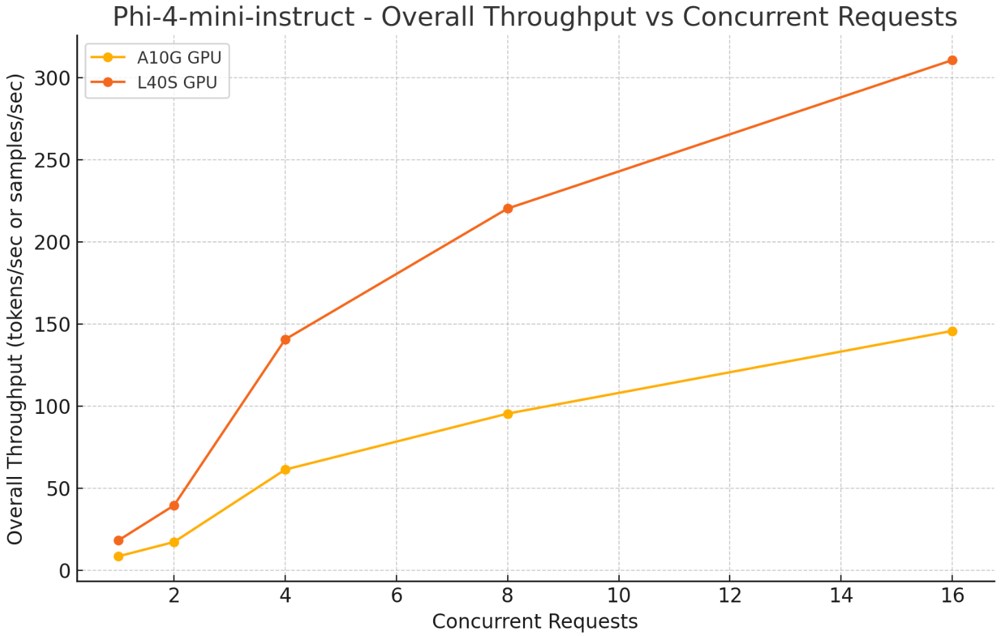

Phi4-mini-instruct

Scenarios tested:

- Concurrent Requests: 1, 2, 8, 16, 32

- Input Tokens: 500

- Output Tokens: 150

Key Insights:

- Single Concurrent Request: L40S cut latency per token from 0.02s (A10) to 0.013s and improved overall throughput from 56.16 to 85.18 tokens/sec.

- Higher Concurrency (32 concurrent requests): L40S achieved 590.83 tokens/sec throughput with 0.03s latency per token, surpassing A10’s 353.69 tokens/sec.

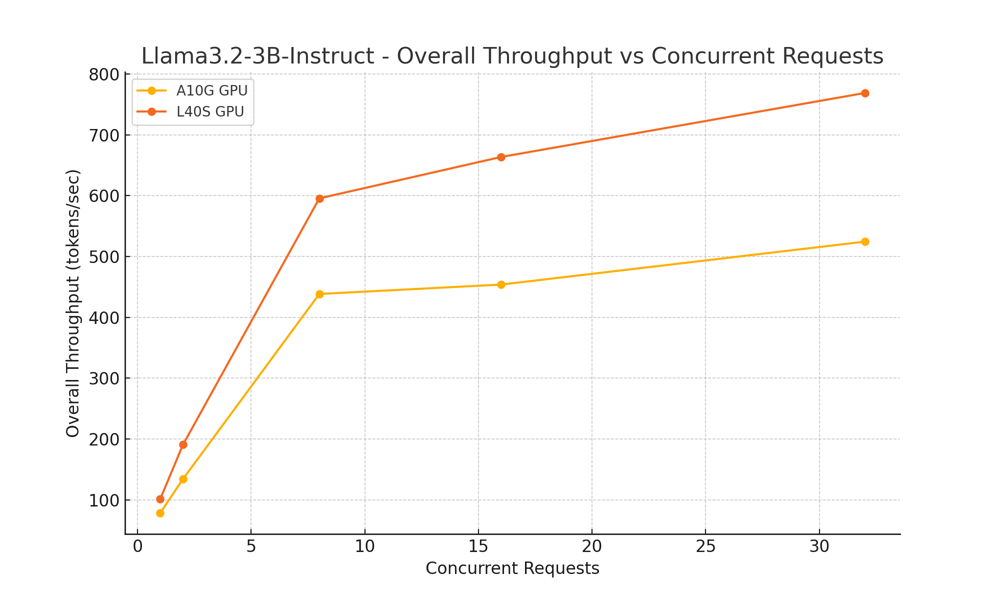

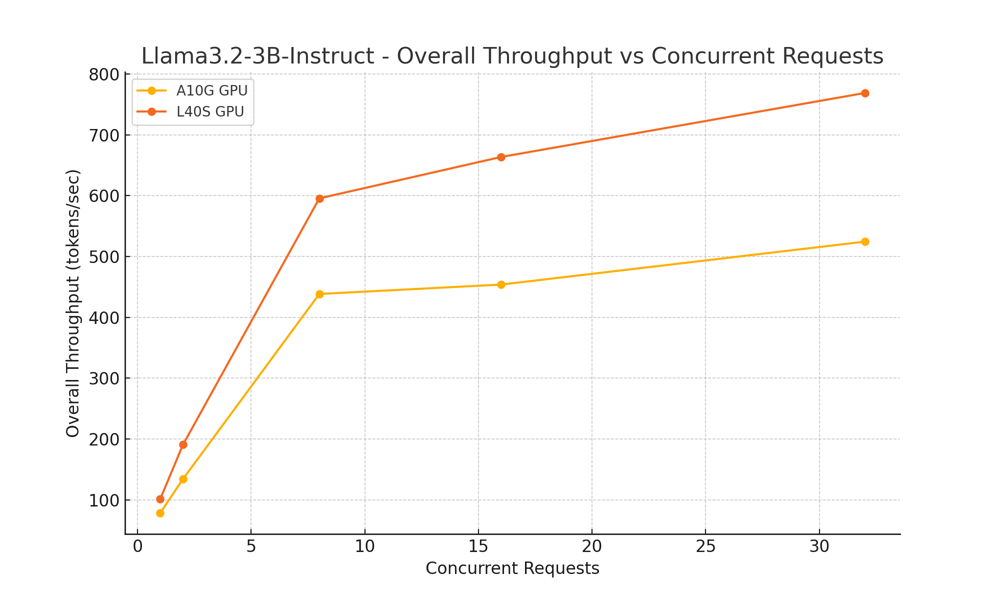

Llama-3.2-3b-instruct

Scenarios tested:

- Concurrent Requests: 1, 2, 8, 16, 32

- Input Tokens: 500

- Output Tokens: 150

Key Insights:

- Single Concurrent Request: L40S improved latency per token from 0.015s (A10) to 0.012s, with throughput increasing from 76.92 to 95.34 tokens/sec.

- Higher Concurrency (32 concurrent requests): L40S delivered 609.58 tokens/sec throughput, outperforming A10’s 476.63 tokens/sec, and reduced latency per token from 0.039s (A10) to 0.027s.

Across all three models and the scenarios highlighted above, the L40S delivered lower latency and higher throughput than the A10.
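The size of the L40S advantage varies considerably by model and load. As a sketch, dividing the reported throughput figures shows gains ranging from roughly 1.2x to about 3x. The numbers are copied from the results above (the MiniCPM-4B figures were reported against the A10G); the dictionary layout is only for illustration.

```python
# Reported throughput in tokens/sec as (A10, L40S), keyed by number of concurrent
# requests. The MiniCPM-4B baseline was reported on the A10G.
THROUGHPUT = {
    "MiniCPM-4B": {1: (97.21, 296.46), 32: (258.22, 331.96)},
    "Phi4-mini-instruct": {1: (56.16, 85.18), 32: (353.69, 590.83)},
    "Llama-3.2-3b-instruct": {1: (76.92, 95.34), 32: (476.63, 609.58)},
}


def throughput_speedups(data):
    """L40S / A10 throughput ratio per model and concurrency, rounded to 2 decimals."""
    return {
        model: {level: round(l40s / a10, 2) for level, (a10, l40s) in levels.items()}
        for model, levels in data.items()
    }


if __name__ == "__main__":
    for model, levels in throughput_speedups(THROUGHPUT).items():
        for level, ratio in levels.items():
            print(f"{model:<22} {level:>2} req: {ratio:.2f}x")
```

The largest gain is MiniCPM-4B at a single request (about 3.05x), and the smallest is Llama-3.2-3b-instruct at a single request (about 1.24x), so the benefit of upgrading depends heavily on which models you serve.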

While the L40S delivers clear performance gains, cost and resource requirements matter just as much. The L40S costs more per hour to rent (and more up front if you buy the hardware), so teams should weigh the trade-offs against their specific use cases, scale, and budget.

Now, let’s take a closer look at how the A10 and L40S compare in terms of pricing.

A10 vs L40S: Pricing

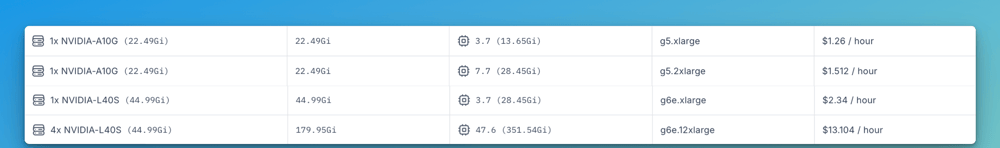

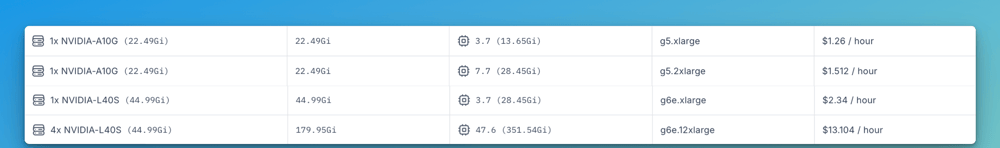

While the L40S is more powerful than the A10, it’s also significantly more expensive to run. Based on Clarifai’s Compute Orchestration pricing, the L40S instance (g6e.xlarge) costs $2.34 per hour, nearly double the $1.26 per hour of the A10-equipped instance (g5.xlarge, whose GPU is the A10G, AWS’s variant of the A10).

Each GPU is available in two instance configurations:

- A10: g5.xlarge ($1.26/hour) and g5.2xlarge ($1.512/hour). Both are single-GPU instances; the 2xlarge adds more vCPUs and host memory.

- L40S: g6e.xlarge ($2.34/hour) and g6e.12xlarge ($13.104/hour), a four-GPU instance for larger workloads.
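Hourly price alone doesn’t capture value, since the L40S also processes more tokens per hour. One rough measure is cost per million tokens: hourly price divided by tokens processed per hour. This sketch assumes that the benchmarked GPUs correspond to the single-GPU g5.xlarge and g6e.xlarge instances, that throughput holds steady at the 32-concurrent-request rate for the whole hour, and that the reported throughput counts the same tokens on both GPUs. None of this is stated in the benchmarks, so treat the output as a relative comparison only.

```python
# On-demand prices quoted above (USD per hour).
PRICE_PER_HOUR = {"A10 (g5.xlarge)": 1.26, "L40S (g6e.xlarge)": 2.34}

# Throughput at 32 concurrent requests from the benchmarks (tokens/sec).
THROUGHPUT_32 = {
    "MiniCPM-4B": {"A10 (g5.xlarge)": 258.22, "L40S (g6e.xlarge)": 331.96},
    "Phi4-mini-instruct": {"A10 (g5.xlarge)": 353.69, "L40S (g6e.xlarge)": 590.83},
    "Llama-3.2-3b-instruct": {"A10 (g5.xlarge)": 476.63, "L40S (g6e.xlarge)": 609.58},
}

SECONDS_PER_HOUR = 3600


def cost_per_million_tokens(price_per_hour, tokens_per_sec):
    """USD per 1M tokens, assuming the instance sustains tokens_per_sec all hour."""
    tokens_per_hour = tokens_per_sec * SECONDS_PER_HOUR
    return price_per_hour / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    for model, by_gpu in THROUGHPUT_32.items():
        for gpu, tps in by_gpu.items():
            cost = cost_per_million_tokens(PRICE_PER_HOUR[gpu], tps)
            print(f"{model:<22} {gpu:<18} ${cost:.2f} per 1M tokens")
```

Under these assumptions, the A10 comes out cheaper per token for all three models at 32 concurrent requests, while the L40S delivers lower latency per token. That is the core trade-off between the two GPUs.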

Choosing the Right GPU

Selecting between the NVIDIA A10 and L40S depends on your workload demands and budget considerations:

- NVIDIA A10 is well suited for enterprises looking for a cost-effective GPU that can handle mixed workloads, including AI inference, machine learning, and professional visualization. Its lower power draw (150 W versus 350 W for the L40S) and solid performance make it a practical choice for mainstream applications that don’t need extreme compute.

- NVIDIA L40S is designed for organizations running compute-intensive workloads such as generative AI and LLM inference. With 48 GB of VRAM and substantially higher compute and memory bandwidth, it provides the headroom that demanding AI and graphics tasks need, making it a strong investment for production environments that require more GPU power than the A10 offers.

Scaling AI Workloads with Flexibility and Reliability

We have seen how the A10 and L40S differ and how the right choice depends on your use case and performance needs. The next question is how to access these GPUs for your AI workloads.

One of the growing challenges in AI and machine learning development is navigating the global GPU shortage while avoiding dependence on a single cloud provider. High-demand GPUs like the L40S, with its superior performance, are not always readily available when you need them. On the other hand, while the A10 is more accessible and cost-effective, availability can still fluctuate depending on the cloud region or provider.

This is where Clarifai’s Compute Orchestration comes in. It gives you flexible, on-demand access to both A10 and L40S GPUs across multiple cloud providers and private infrastructure without locking you into a single vendor. You can choose the cloud provider and region where you want to deploy, such as AWS, GCP, Azure, Vultr, or Oracle, and run your AI workloads on dedicated GPU clusters within those environments.

Whether your workload needs the efficiency of the A10 or the power of the L40S, Clarifai routes your jobs to the resources you select while optimizing for availability, performance, and cost. This approach helps you avoid delays caused by GPU shortages or pricing spikes and gives you the flexibility to scale your AI projects with confidence without being tied to one provider.
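This post doesn’t describe Clarifai’s actual routing logic or API, and the sketch below does not use either. It is a hypothetical illustration of the availability-versus-cost trade-off: pick the cheapest instance that currently has capacity and enough VRAM, and fall back to a more expensive GPU when a cheaper one is unavailable. `GpuOption`, `pick_instance`, and the `available` flag are made-up names, and the prices are the ones quoted in the pricing section.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class GpuOption:
    instance: str          # instance type, e.g. "g5.xlarge"
    gpu: str               # GPU model on that instance
    vram_gb: int           # memory per GPU
    price_per_hour: float  # USD per hour
    available: bool        # hypothetical capacity signal (assumption)


def pick_instance(options: List[GpuOption], min_vram_gb: int) -> Optional[GpuOption]:
    """Return the cheapest available option with enough VRAM, or None if none fits."""
    candidates = [o for o in options if o.available and o.vram_gb >= min_vram_gb]
    return min(candidates, key=lambda o: o.price_per_hour, default=None)


if __name__ == "__main__":
    options = [
        GpuOption("g5.xlarge", "A10", 24, 1.26, available=False),
        GpuOption("g5.2xlarge", "A10", 24, 1.512, available=False),
        GpuOption("g6e.xlarge", "L40S", 48, 2.34, available=True),
    ]
    # Both A10 instances are out of capacity, so the job falls back to the L40S.
    print(pick_instance(options, min_vram_gb=16))
```

A production scheduler would also weigh region, latency targets, and quotas, but the core idea is the same: keep several acceptable GPU types in play so a shortage of one doesn’t block the workload.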

Conclusion

Choosing the right GPU comes down to understanding your workload requirements and performance goals. The NVIDIA A10 offers a cost-effective option for mixed AI and graphics workloads, while the L40S delivers the power and scalability needed for demanding tasks like generative AI and large language models. By matching your GPU choice to your specific use case, you can achieve the right balance of performance, efficiency, and cost.

Clarifai’s Compute Orchestration makes it easy to access both A10 and L40S GPUs across multiple cloud providers, giving you the flexibility to scale without being limited by availability or vendor lock-in.

For a breakdown of GPU costs and to compare pricing across different deployment options, visit the Clarifai Pricing page. You can also join our Discord channel anytime to connect with experts, get your questions answered about choosing the right GPU for your workloads, or get help optimizing your AI infrastructure.