Advances in machine learning have made it easy to identify objects in images, and we've made video recognition just as simple. Here's how to identify objects in a video with relatively little effort using Clarifai.

In this tutorial, we'll go over how to use Clarifai’s APIs to automatically analyze a short video clip and give us predictions.

I'm going to use PyCharm and the latest version of Python. Our goal is to pull two things out of Clarifai's response: the timestamp of each frame in the video we submit, and the tags Clarifai assigns to that frame.

Getting Started by Setting Up Your Environment

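First, install the Clarifai Python client by running the following pip command in your terminal:

```
$ pip install clarifai
```

Note that the code in this post uses the ClarifaiApp interface (clarifai.rest) from the 2.x releases of the client. Newer releases expose a different API, so if "from clarifai.rest import ClarifaiApp" fails, install a 2.x release of the client.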

We're going to be working with JSON responses, so it helps to have a JSON editor or a JSON parsing module. Personally, I'm used to working with this JSON editor to better visualize my data. If you'd prefer to stay in the terminal, use Python's built-in json module. It's part of the standard library, so there's nothing to install with pip.

If you're unfamiliar with the json module, the Python docs give you a good rundown.
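As a quick illustration of the json module, here's a minimal sketch that pretty-prints a small, hand-written dictionary shaped like the start of a Clarifai response. The values are illustrative, not real output; json.dumps with indent and sort_keys does the formatting.

```python
import json

# A hand-written stand-in for the start of a Clarifai response (illustrative values only)
sample_response = {
    "status": {"code": 10000, "description": "Ok"},
    "outputs": [{"data": {"frames": []}}],
}


def pretty(data):
    """Return an indented, key-sorted JSON string for easier reading in the terminal."""
    return json.dumps(data, indent=2, sort_keys=True)


print(pretty(sample_response))
```

Sorting the keys keeps the layout stable between runs, which makes it easier to compare two responses side by side.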

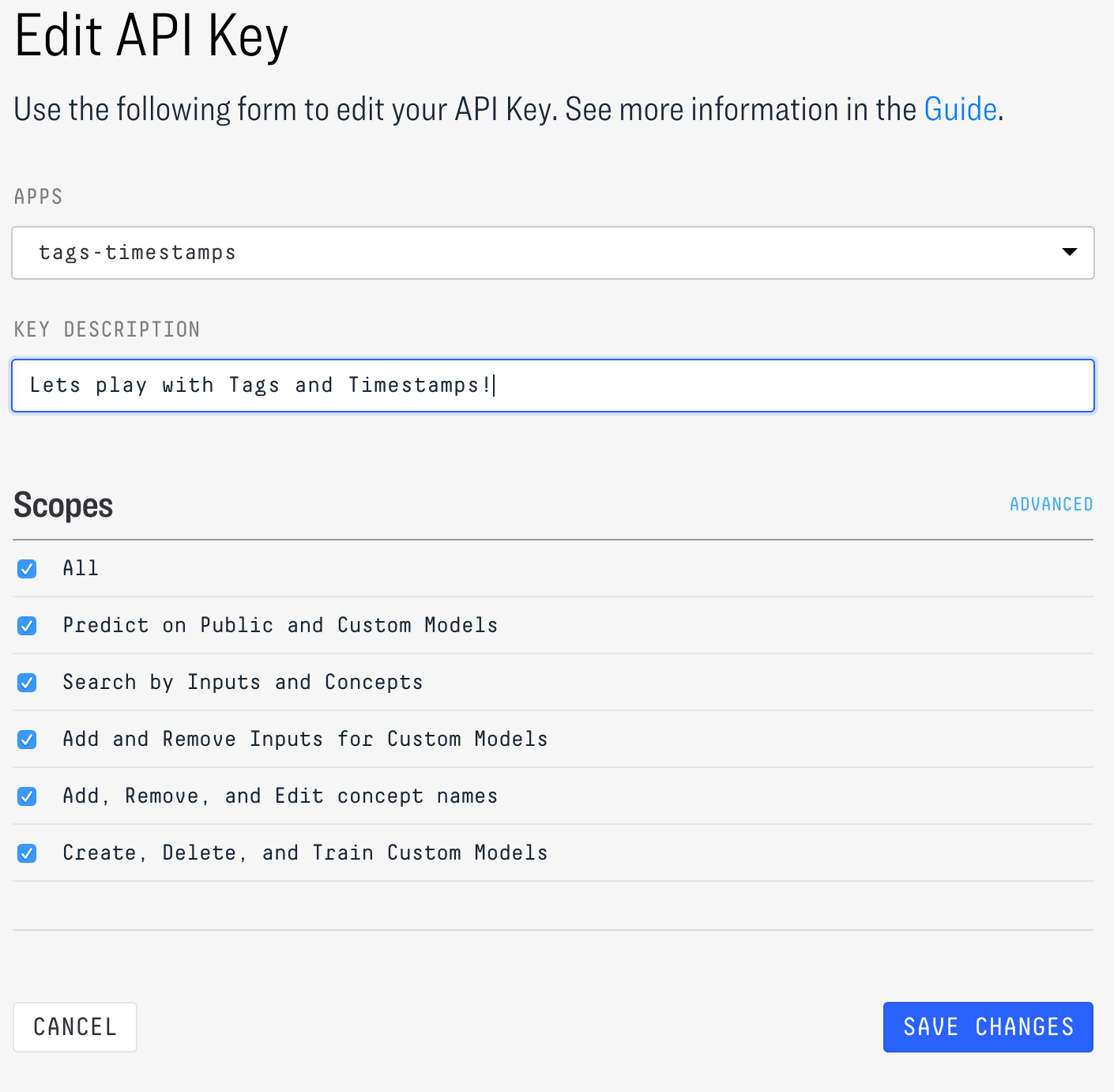

With these details out of the way, sign up for Clarifai and get your free API Key.

When you create an API Key, you'll be prompted to choose which scopes the key can access. For this demo, we'll leave All checked. Since we're only looking at the prediction outputs of a public model, you could instead select just "Predict on Public and Custom Models." Scopes exist because, in a production environment, best practice is to give each API Key only the minimum scopes it needs, which limits the damage if a key leaks. For more detail, you can refer to this document on scopes.

Get Your Video Recognition Code On

Crack your knuckles: it's time to get into the guts of the code. Clarifai makes interfacing with its tools easy, so we won't have much coding to do.

Right now all you need is a video; I'm going to use this angry sloth GIF. To get the most out of the video API, follow these guidelines:

- Video should be in MP4, MKV, AVI, MOV, OGG, or GIF file formats

- Your video file should be under 80 MB and under 10 minutes long if you submit it by URL. If you send the raw bytes instead, each API call must be under 10 MB.
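To catch problems before making an API call, you could check a file against these guidelines locally. The following is a minimal sketch: check_video is a hypothetical helper, not part of the Clarifai client, and treating 1 MB as 1024 × 1024 bytes is an assumption. It checks only format and size; the 10-minute limit would require reading the video's metadata, which this sketch skips.

```python
import os

# Limits from the guidelines above; 1 MB = 1024 * 1024 bytes is an assumption
MB = 1024 * 1024
ALLOWED_EXTENSIONS = {".mp4", ".mkv", ".avi", ".mov", ".ogg", ".gif"}
MAX_URL_BYTES = 80 * MB  # limit when submitting by URL
MAX_RAW_BYTES = 10 * MB  # per-call limit when sending bytes


def check_video(filename, size_bytes, via_url=True):
    """Hypothetical pre-flight check: return a list of problems (empty if none).

    Duration is not checked here, since that requires reading video metadata.
    """
    problems = []
    ext = os.path.splitext(filename)[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        problems.append("unsupported format: {}".format(ext or "(none)"))
    limit = MAX_URL_BYTES if via_url else MAX_RAW_BYTES
    if size_bytes >= limit:  # the file must be strictly under the limit
        problems.append("file is {} bytes; limit is under {} bytes".format(size_bytes, limit))
    return problems


print(check_video("angry_sloth.gif", 3 * MB))  # []
print(check_video("clip.webm", 12 * MB, via_url=False))
```

The file names here are illustrative; in practice you would pass os.path.getsize(path) as size_bytes.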

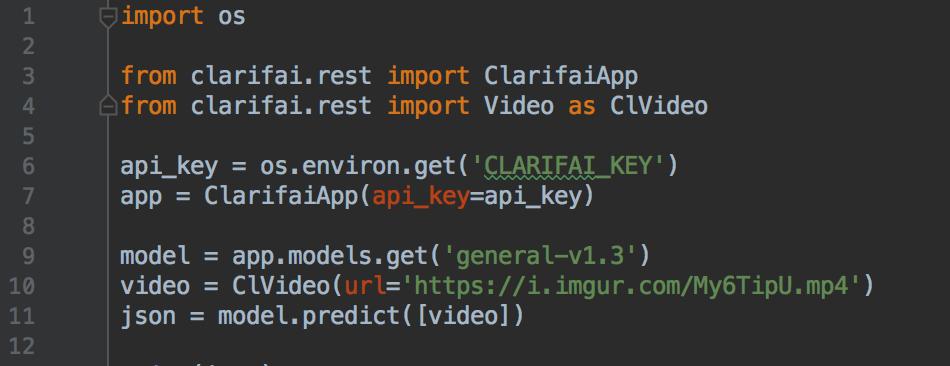

This screenshot shows all the boilerplate code you need to get video prediction responses from Clarifai:

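```python
import os

from clarifai.rest import ClarifaiApp
from clarifai.rest import Video as ClVideo

# Read the API key from the CLARIFAI_KEY environment variable
api_key = os.environ.get('CLARIFAI_KEY')
app = ClarifaiApp(api_key=api_key)

# Load Clarifai's pre-trained General model (v1.3)
model = app.models.get('general-v1.3')

# Point the client at a video hosted at a public URL
video = ClVideo(url='https://i.imgur.com/My6TipU.mp4')

# Run prediction; the client returns the parsed JSON response as a Python dict.
# Note: naming it "json" shadows the json module if you've imported it.
json = model.predict([video])
```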

What we are doing in the code above is:

- reading the API key from our environment variable, "CLARIFAI_KEY" (see how to set and get environment variables)

- using the pre-trained General v1.3 Clarifai model

- sending Clarifai a video to process (by URL) and storing the response in json
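If CLARIFAI_KEY isn't set, os.environ.get('CLARIFAI_KEY') quietly returns None, and the problem may only surface later when the client tries to authenticate. Here's a minimal sketch of failing fast instead; get_api_key is a hypothetical helper name, not part of the Clarifai client.

```python
import os


def get_api_key(var_name="CLARIFAI_KEY"):
    """Read the API key from the environment, failing fast with a clear message if it is missing."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(
            "Environment variable {} is not set; export it before running this script.".format(var_name)
        )
    return key
```

You would then write api_key = get_api_key() in place of the os.environ.get call.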

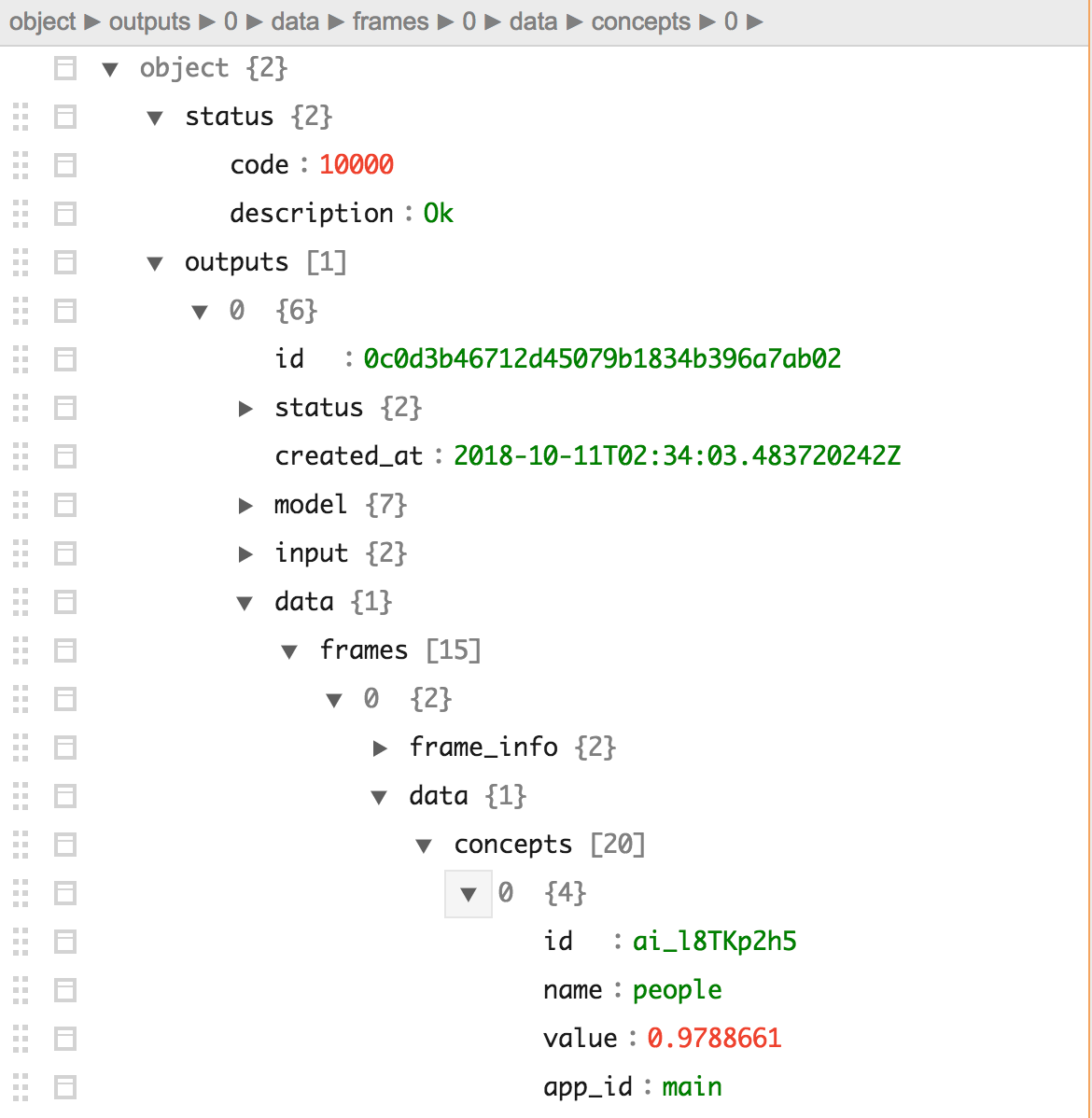

After processing the video, Clarifai responds with JSON, which the client hands back as a Python dictionary. The beginning of it looks like this:

```
{'status': {'code': 10000, 'description': 'Ok'},
 'outputs': [{'id': '0c0d3b46712d45079b1834b396a7ab02',
              'status': {'code': 10000, 'description': 'Ok'},
              'created_at': '2018-10-11T02:34:03.483720242Z',
              'model': {'id': 'aaa03c23b3724a16a56b629203edc62c',
                        'name': 'general-v1.3',
                        'created_at': '2016-03-09T17:11:39.608845Z',
                        'app_id': 'main',
                        'output_info': {'message': 'Show output_info with: GET /models/{model_id}/output_info',
                                        'type': 'concept',
                                        'type_ext': 'concept'},
                        'model_version': {'id': 'aa9ca48295b37401f8af92ad1af0d91d',
                                          'created_at': '2016-07-13T01:19:12.147644Z',
                                          'status': {'code': 21100,
                                                     'description': 'Model trained successfully'}},
                        'display_name': 'General'},
              'input': {'id': '0b8805331ce74281bf0aaac8d4a9f076',
                        'data': {'video': {'url': 'https://i.imgur.com/My6TipU.mp4'}}},
              'data': {'frames': [{'frame_info': {'index': 0, 'time': 0},
                                   ...
```
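Both the top-level response and each entry in outputs carry a status object; in the sample above, code 10000 accompanies the description "Ok". A minimal sketch that checks these before parsing further, assuming 10000 is the success code as the sample suggests. check_status is a hypothetical helper, and depending on the client version, some failures may raise an exception before you ever see the dictionary, so treat this as an extra safety check.

```python
SUCCESS_CODE = 10000  # assumed success code: it is paired with "Ok" in the sample response


def check_status(response):
    """Raise ValueError if the response, or any of its outputs, reports a non-success status."""
    status = response["status"]
    if status["code"] != SUCCESS_CODE:
        raise ValueError("request failed: {} {}".format(status["code"], status["description"]))
    for output in response.get("outputs", []):
        out_status = output["status"]
        if out_status["code"] != SUCCESS_CODE:
            raise ValueError("output {} failed: {}".format(output.get("id"), out_status["description"]))
    return response


# A hand-built dictionary mimicking a successful response (illustrative values)
ok = {"status": {"code": 10000, "description": "Ok"},
      "outputs": [{"id": "abc", "status": {"code": 10000, "description": "Ok"}}]}
check_status(ok)  # returns the same dictionary it was given
```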

With large responses, I like to sift through the data using a JSON editor, which displays it as a navigable tree.

We can parse the response to get what we need. For each frame, it includes the time in milliseconds and the associated tags. Let's start with the frames.

The tree path we’re interested in following is:

json > outputs > 0 > data > frames

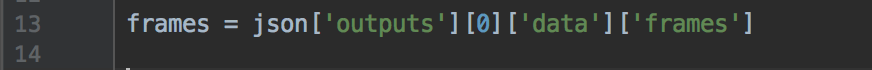

The code to retrieve these frame values in Python looks as follows:

```python
frames = json['outputs'][0]['data']['frames']
```
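Chained indexing like this raises KeyError or IndexError if any level is missing, for instance when a request fails and outputs is empty. Here's a minimal sketch of a helper that walks the same path and returns a default instead; get_path is hypothetical and not part of the Clarifai client, and the dictionary below is hand-built to mimic the response shape.

```python
def get_path(data, path, default=None):
    """Follow a sequence of dict keys / list indexes through nested data.

    Returns `default` as soon as a key or index is missing.
    """
    current = data
    for step in path:
        try:
            current = current[step]
        except (KeyError, IndexError, TypeError):
            return default
    return current


# Hand-built dictionary mimicking the response shape (illustrative values)
response = {"outputs": [{"data": {"frames": [{"frame_info": {"index": 0, "time": 0}}]}}]}

frames = get_path(response, ["outputs", 0, "data", "frames"], default=[])
print(len(frames))  # 1

missing = get_path({"outputs": []}, ["outputs", 0, "data", "frames"], default=[])
print(missing)  # []
```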

Next, we'll get all the tags predicted for the 15 frames Clarifai sampled from our clip (one per second of video). Clarifai gives each tag a confidence score, so you can add some logic to filter predictions by confidence. In our case, we want to see only tags with a score greater than 0.94.
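Pulled out into a function, the confidence filter might look like this minimal sketch. The 0.94 threshold comes from the text; the function name confident_tags and the sample concepts are illustrative. Each concept is assumed to be a dict with "name" and "value" keys, matching the fields used in the loop later in this post.

```python
def confident_tags(concepts, threshold=0.94):
    """Return the names of concepts whose confidence score is strictly above `threshold`."""
    return [c["name"] for c in concepts if c["value"] > threshold]


# Illustrative concepts for one frame (names and scores are made up)
sample = [
    {"name": "mammal", "value": 0.99},
    {"name": "wildlife", "value": 0.95},
    {"name": "tree", "value": 0.94},
    {"name": "outdoors", "value": 0.80},
]
print(confident_tags(sample))  # ['mammal', 'wildlife']
```

Because the comparison is strict, a tag scoring exactly 0.94 ("tree" here) is left out.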

Each frame's tags are nested under 'concepts' in our JSON results. For the first frame, the path is:

json > outputs > 0 > data > frames > 0 > data > concepts

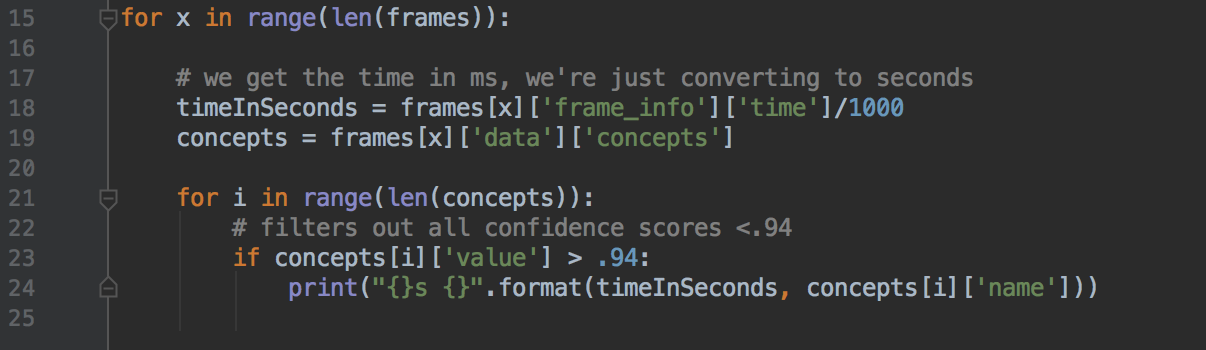

Each frame's concepts array holds 20 objects. With a nested for loop, we can go through every frame and print its tags.

```python
for frame in frames:
    # Clarifai gives each frame's time in milliseconds; convert it to seconds
    time_in_seconds = frame['frame_info']['time'] / 1000

    concepts = frame['data']['concepts']

    for concept in concepts:
        # Skip tags with a confidence score of 0.94 or lower
        if concept['value'] > 0.94:
            print("{}s {}".format(time_in_seconds, concept['name']))
```

For each frame object (one per second of video), we read the time in milliseconds, convert it to seconds, and grab the frame's concepts list, which holds our tags of interest. The "if" statement inside the inner "for" loop filters the output so we only print tags with high confidence scores.
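If you'd rather keep the results than print them, the same nested loop can collect them into a dictionary keyed by second. A minimal sketch, assuming frames shaped like the ones in the response above; the function name tags_by_second and the sample frames are illustrative.

```python
def tags_by_second(frames, threshold=0.94):
    """Map each frame's time in seconds to its tags scoring above `threshold`.

    Assumes each frame looks like:
    {"frame_info": {"time": <ms>}, "data": {"concepts": [{"name": ..., "value": ...}, ...]}}
    """
    result = {}
    for frame in frames:
        seconds = frame["frame_info"]["time"] / 1000  # milliseconds -> seconds
        concepts = frame["data"]["concepts"]
        result[seconds] = [c["name"] for c in concepts if c["value"] > threshold]
    return result


# Two illustrative frames (made-up tags and scores)
frames = [
    {"frame_info": {"index": 0, "time": 0},
     "data": {"concepts": [{"name": "animal", "value": 0.98}, {"name": "grass", "value": 0.60}]}},
    {"frame_info": {"index": 1, "time": 1000},
     "data": {"concepts": [{"name": "animal", "value": 0.97}, {"name": "tree", "value": 0.95}]}},
]
print(tags_by_second(frames))  # {0.0: ['animal'], 1.0: ['animal', 'tree']}
```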

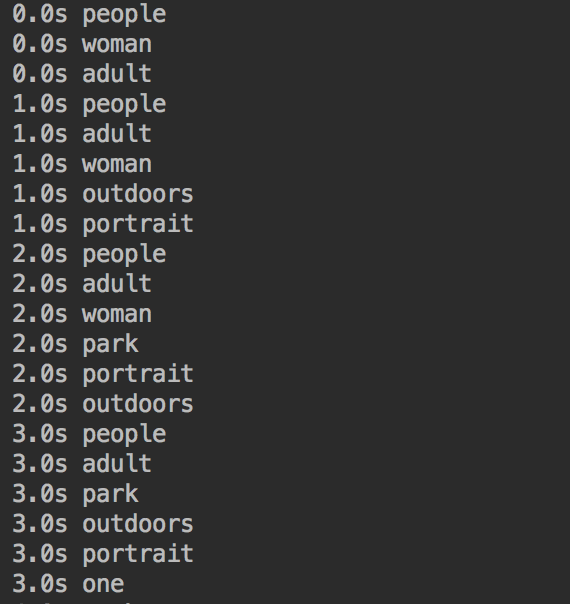

Voilà! We get the tags at each frame with a confidence score greater than 0.94.

These results are grouped by second of video and ranked by confidence score. Notice that there are multiple tags for each second: Clarifai can detect more than one concept in a single frame. This post is adapted from a tutorial originally published by Jeet Gajjar of Major League Hacking, and its inspiration, Framehunt, a project from HackGT, is worth checking out too.
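Because each second can carry several tags, it can be handy to invert the grouping and ask at which seconds a given tag appears. A minimal sketch, assuming you already have a dictionary mapping seconds to lists of tag names; the function name seconds_by_tag and the sample data are illustrative, not real Clarifai output.

```python
from collections import defaultdict


def seconds_by_tag(tags_per_second):
    """Invert {seconds: [tag, ...]} into {tag: [seconds, ...]}, with seconds in ascending order."""
    index = defaultdict(list)
    for seconds in sorted(tags_per_second):
        for tag in tags_per_second[seconds]:
            index[tag].append(seconds)
    return dict(index)


# Illustrative per-second tags (made up)
tags_per_second = {0.0: ["animal", "mammal"], 1.0: ["animal"], 2.0: ["tree"]}
print(seconds_by_tag(tags_per_second))
# {'animal': [0.0, 1.0], 'mammal': [0.0], 'tree': [2.0]}
```

With this index, finding every moment a tag appears becomes a dictionary lookup, for example seconds_by_tag(tags_per_second).get("tree", []).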

See what you can come up with using this method on your existing videos. Or build video object recognition into your mobile app with Clarifai SDKs and get concepts to your app in real time!