Armada Predict

High-performance, fully managed inference serving

Save up to 70% on inference costs with Clarifai

A fully managed model orchestration service assigns models to the most efficient compute nodes and scales up and down to make the most of your compute while meeting enterprise-grade production volume.

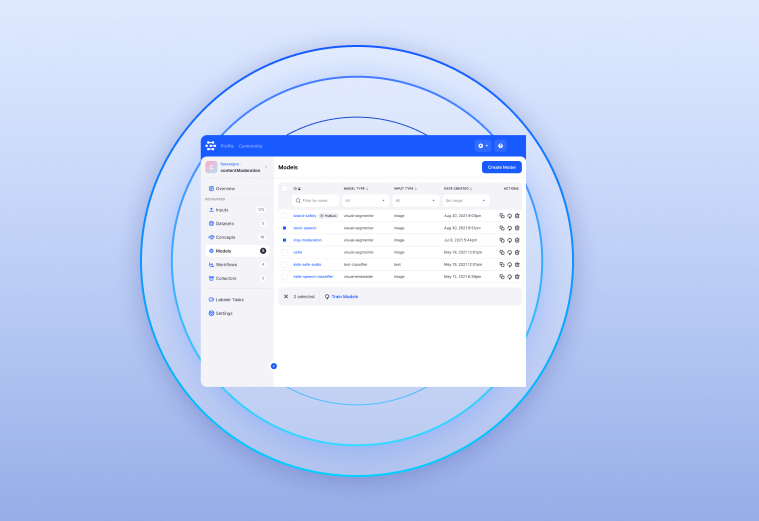

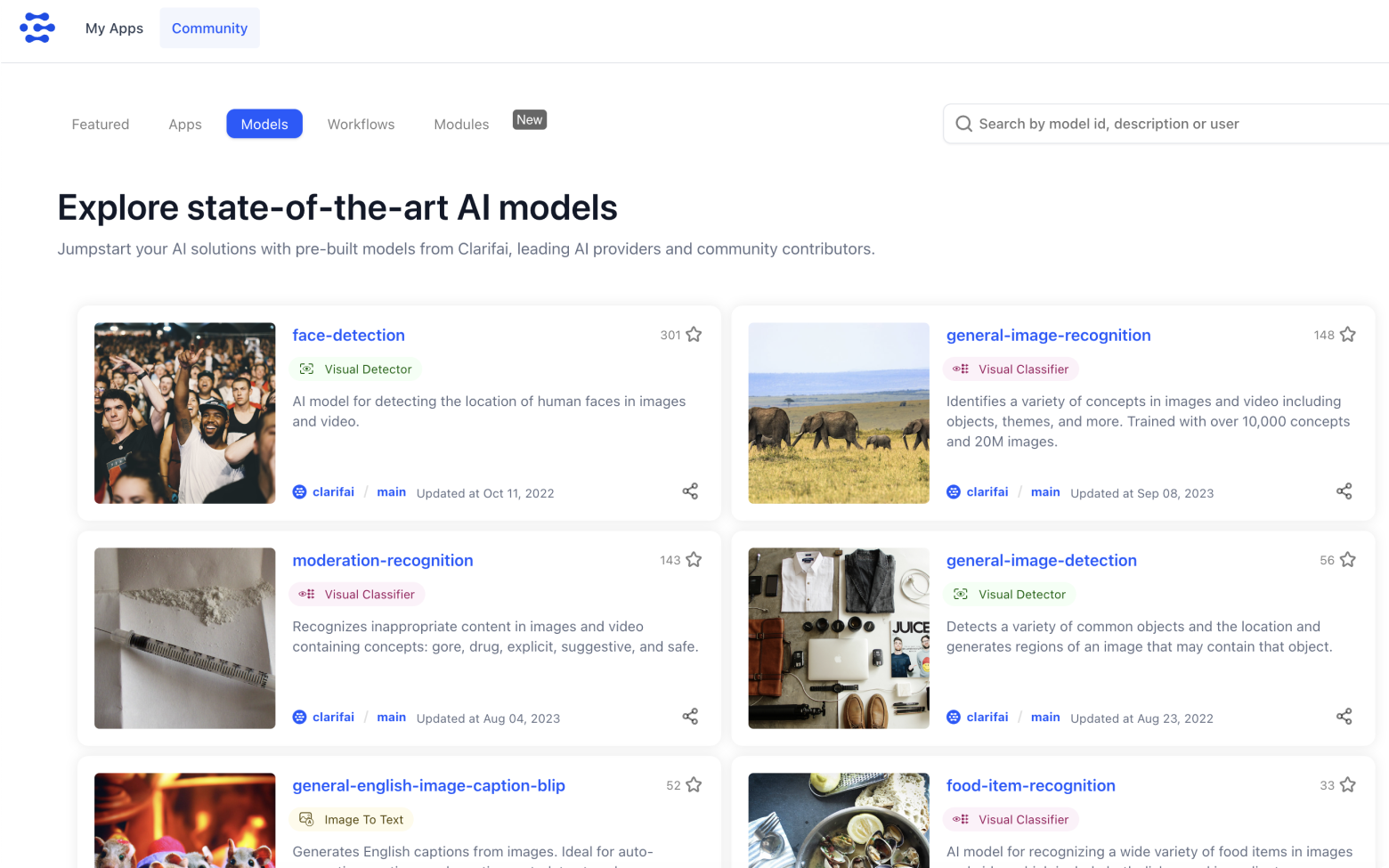

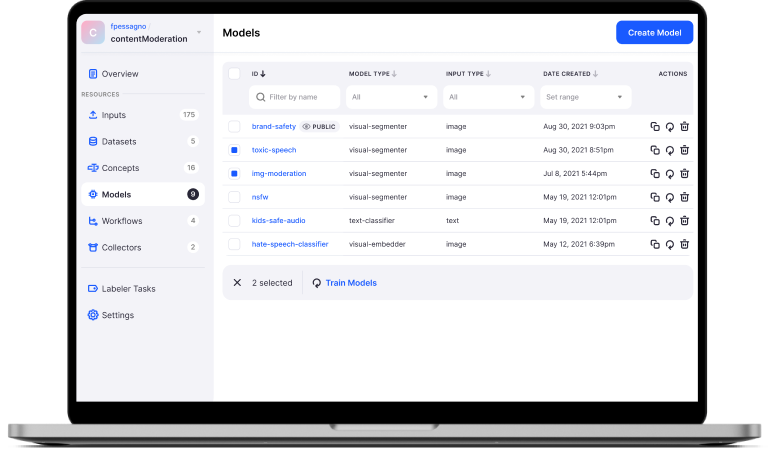

Rapidly deploy models yourself

Use our UI or our SDKs to upload your own model or choose from thousands of the world’s best models in our community. Once a model is uploaded, it’s automatically available for any amount of production traffic. Clarifai solves the ML Ops headaches for you so you can focus on building value.

Optimal GPU sharing and battle-tested autoscaling

Our inference orchestration maps models to the most efficient CPUs or GPUs. Our battle-tested endpoints handle massive autoscaling, with options for fully configurable scaling policies. This makes it easy to trade accuracy against performance for real-world applications.
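To make the idea of a configurable scaling policy concrete, here is a minimal sketch of a target-load rule. It is not Clarifai's implementation or configuration format: `ScalingPolicy`, its fields, and `desired_replicas` are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # Hypothetical fields for illustration; not Clarifai configuration keys.
    min_replicas: int = 1
    max_replicas: int = 10
    target_rps_per_replica: float = 50.0


def desired_replicas(policy: ScalingPolicy, observed_rps: float) -> int:
    """Return a replica count that keeps per-replica load at or under the target,
    clamped to the policy's minimum and maximum."""
    needed = math.ceil(observed_rps / policy.target_rps_per_replica)
    return max(policy.min_replicas, min(policy.max_replicas, needed))


policy = ScalingPolicy()
quiet = desired_replicas(policy, 0)        # falls back to min_replicas
busy = desired_replicas(policy, 120)       # scales up to cover the load
spike = desired_replicas(policy, 10_000)   # capped at max_replicas
```

Lowering `target_rps_per_replica` in a rule like this buys latency headroom at the cost of more compute, which is one way the cost and performance trade-off can surface in a policy.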

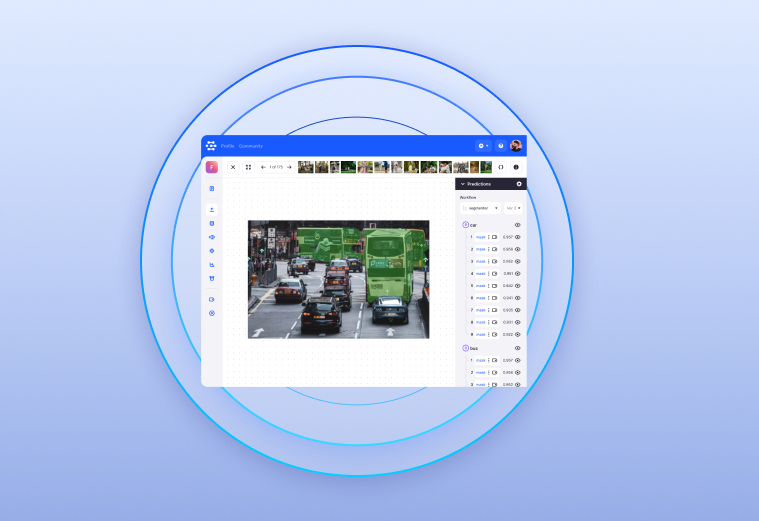

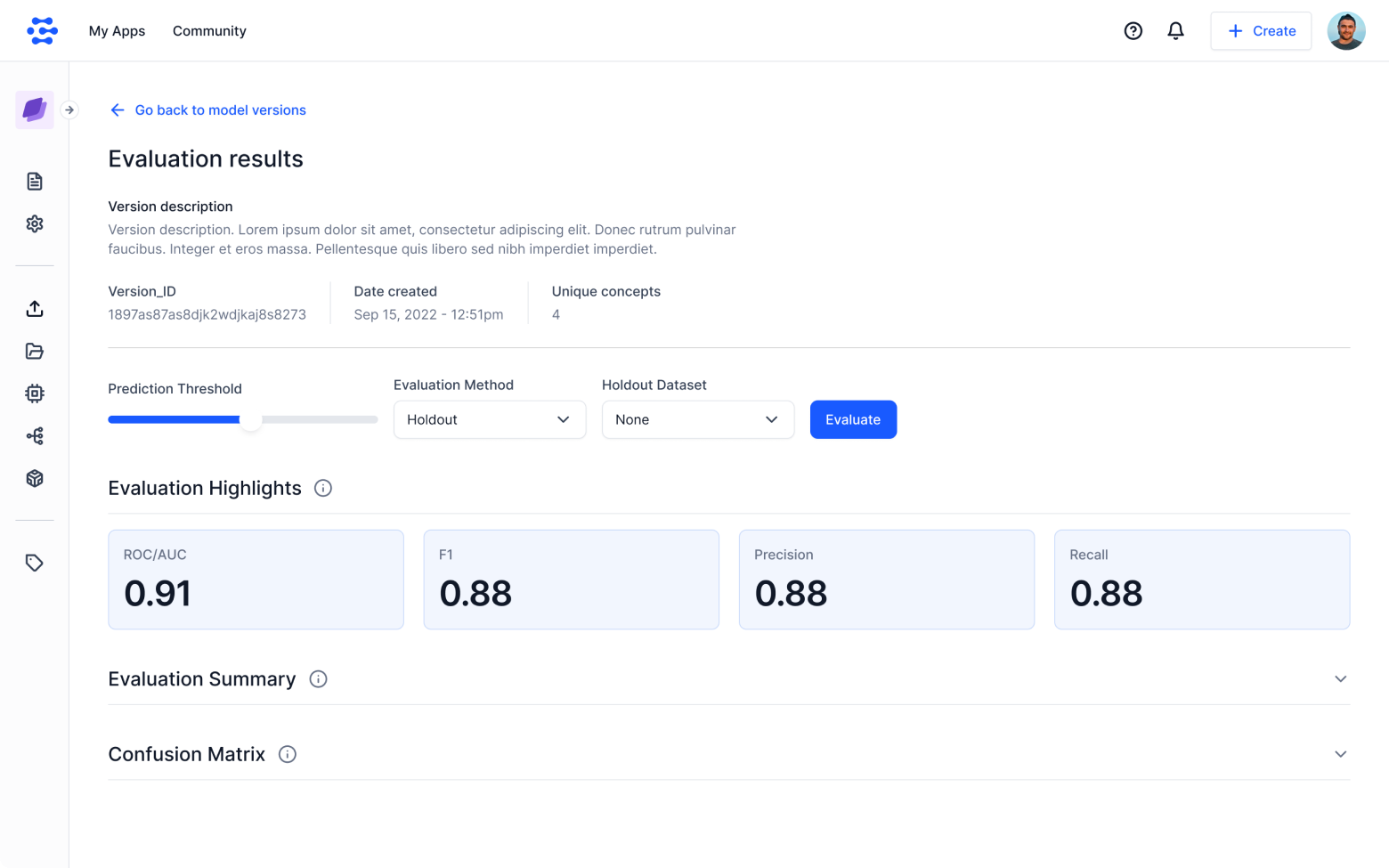

Best-in-class evaluation tools

Compare multiple models against each other or against datasets to gauge how your models will perform.

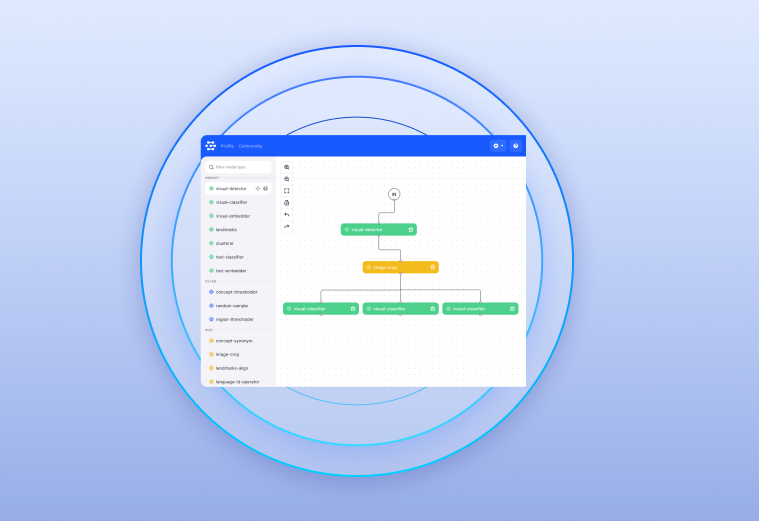

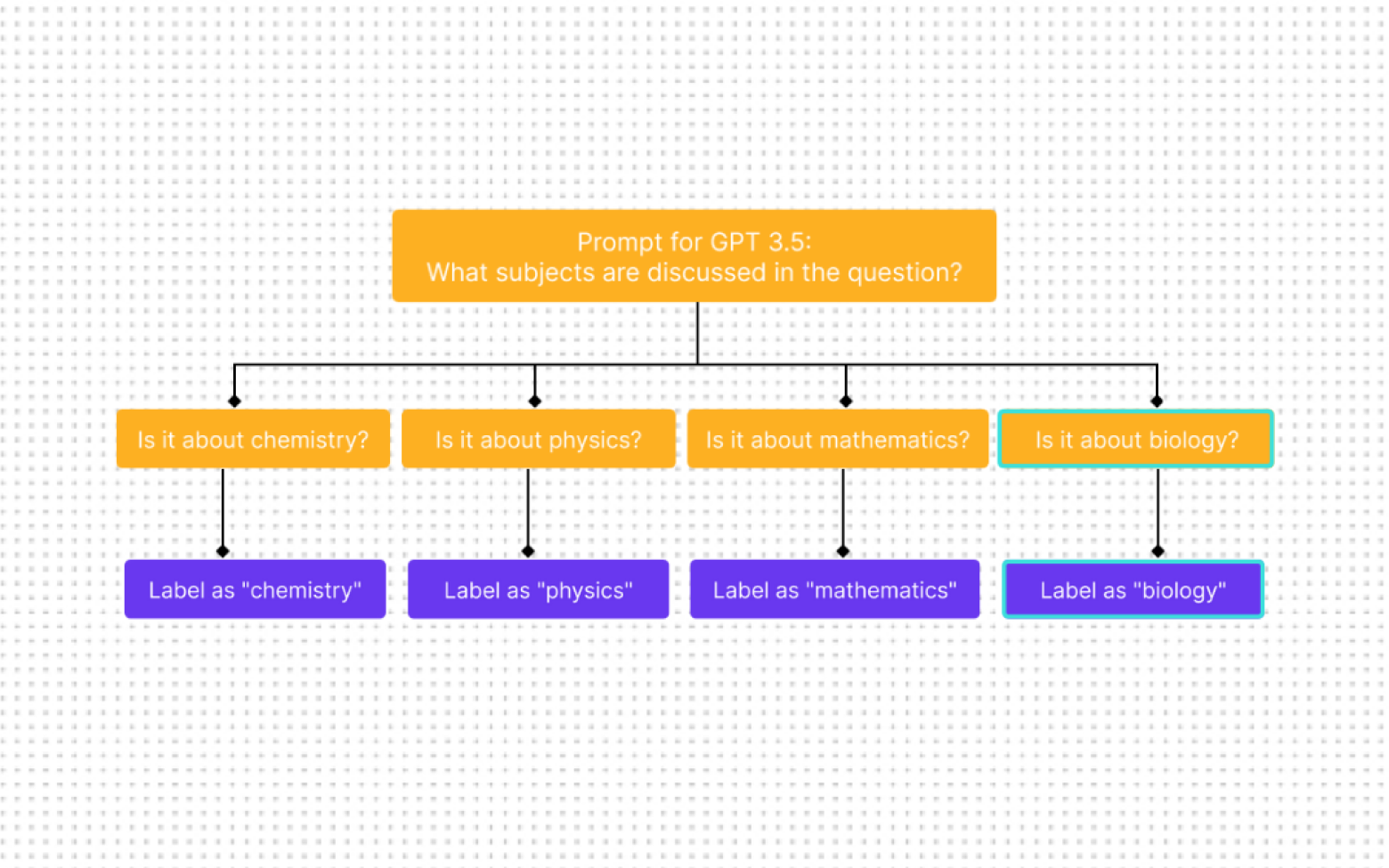

Combine your models into advanced workflows

Connect one or more AI models and other functional logic together to gain insights beyond what a single AI model could deliver alone. This workflow engine becomes the foundation for more advanced capabilities, including automated data labeling, search indexing, and real-time data analysis.
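As a rough illustration of chaining models with other logic, the sketch below runs a list of steps in order and passes each step's output to the next. It does not use the Clarifai SDK: `run_workflow`, `detect_language`, and `route_for_review` are hypothetical names, and `detect_language` is a trivial stand-in for a real model call.

```python
from typing import Any, Callable, Dict, List

# A step reads the accumulated data and returns new fields to merge in.
Step = Callable[[Dict[str, Any]], Dict[str, Any]]


def run_workflow(steps: List[Step], data: Dict[str, Any]) -> Dict[str, Any]:
    """Run steps in order, merging each step's output into the shared data."""
    result = dict(data)  # copy so the caller's input is not mutated
    for step in steps:
        result.update(step(result))
    return result


def detect_language(data: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for a model: treats ASCII-only text as English.
    return {"language": "en" if data["text"].isascii() else "unknown"}


def route_for_review(data: Dict[str, Any]) -> Dict[str, Any]:
    # Plain functional logic that consumes the previous step's output.
    return {"needs_review": data["language"] != "en"}


output = run_workflow([detect_language, route_for_review], {"text": "hello"})
```

Because every step shares one dictionary, a later step can combine the outputs of several earlier models, which is what lets a workflow answer questions no single model can.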

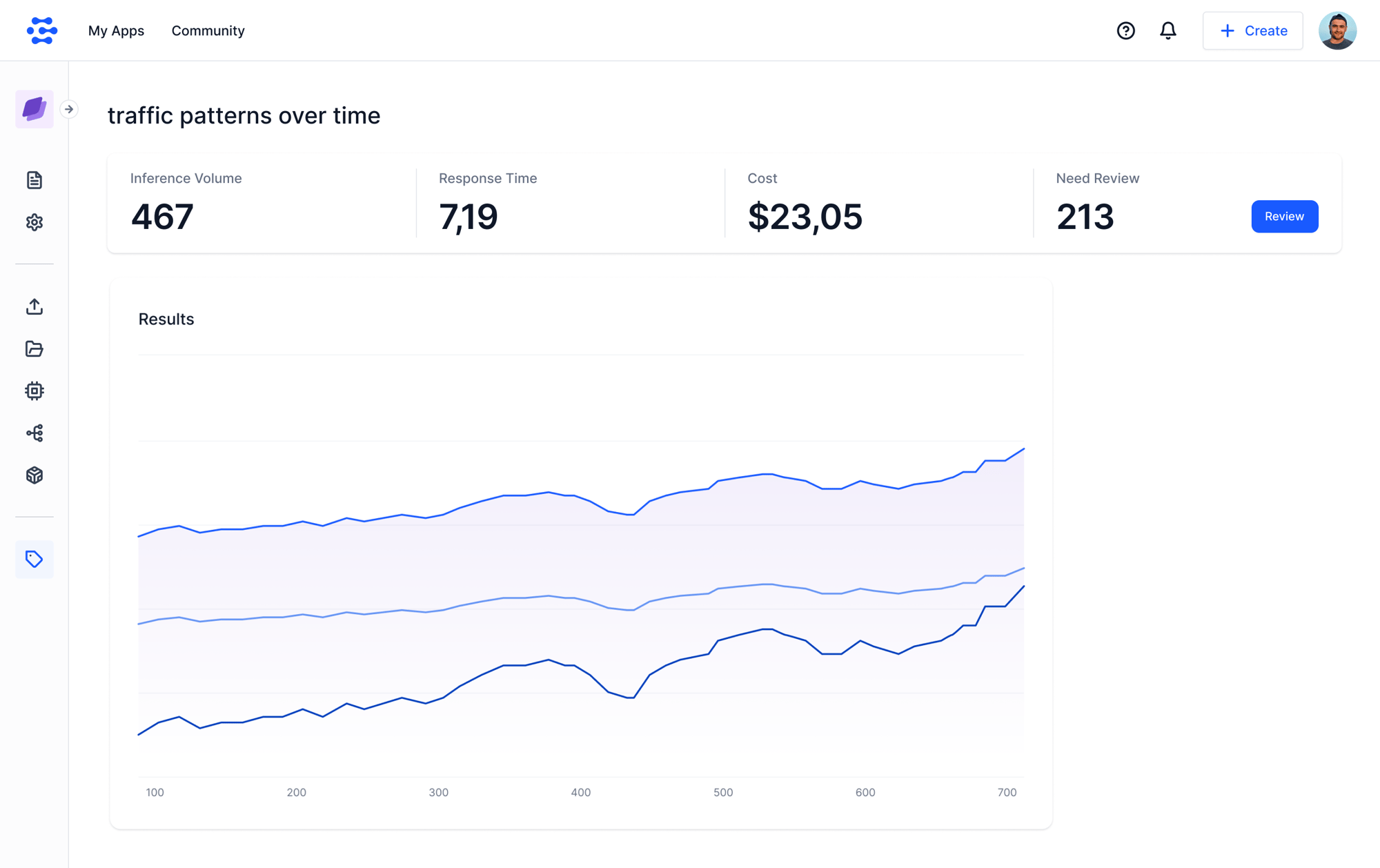

Collect production traffic for evaluation and fine-tuning

Clarifai collectors help you understand your traffic patterns over time. They let you monitor and collect user/production data and identify model performance gaps. And if you are building custom models, collectors are a critical building block towards active learning because they let you curate datasets to quickly fine-tune your models based on production data.
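One common way to curate production data for active learning is to keep the predictions the model was least sure about. The sketch below shows that selection rule under stated assumptions: `Prediction`, `collect_uncertain`, and the threshold values are hypothetical and are not the Clarifai collector API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Prediction:
    # Hypothetical record of one production inference.
    input_id: str
    label: str
    confidence: float


def collect_uncertain(
    predictions: List[Prediction], threshold: float = 0.6, limit: int = 100
) -> List[Prediction]:
    """Keep predictions below the confidence threshold, least confident first."""
    uncertain = [p for p in predictions if p.confidence < threshold]
    uncertain.sort(key=lambda p: p.confidence)
    return uncertain[:limit]


traffic = [
    Prediction("a", "cat", 0.95),
    Prediction("b", "dog", 0.41),
    Prediction("c", "cat", 0.55),
]
to_label = collect_uncertain(traffic)  # candidates for review and fine-tuning
```

The selected inputs can then be labeled and added to a dataset for the next fine-tuning round.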

Inference orchestration with built-in model optimization

Batch or real-time inference for trained models with simple, efficient one-click deployment. Deploy anywhere.

Technology Partners

Clarifai combines its capabilities with world-class technology partners to enable organizations to solve a broader set of digital transformation challenges.

Clarifai: Your End-to-End AI Solution

Together with our patented AI platform, Clarifai provides a solution spanning data preparation, model development, and operationalization so you can build and deploy models at scale.

Organize and collaborate

Model training & evaluation

AI workflows

Data management and vector search

Resources