The Full Stack AI, Generative AI, LLM, and Computer Vision Developer Platform

Build on the fastest, production-grade deep learning platform for developers and ML engineers.

Build AI Faster

Unlock Value Instantly

Simplify how developers and teams create, share, and run AI at scale

Some of the world’s best teams build with Clarifai

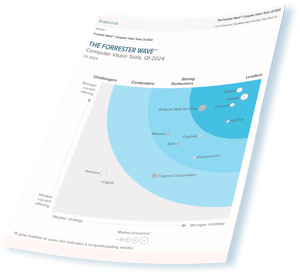

Report

The Forrester Wave™: Computer Vision Tools, Q1 2024: Download the report to learn why Clarifai is the Leader in Computer Vision.

Generative AI built for developers, by developers

Where developers build production computer vision & LLMs with a Full Stack AI platform.

Build your next generative innovation

Leverage cutting-edge Large Language Models (LLMs) to craft coherent and contextually rich content, connect image generation models to create detailed visuals, utilize image captioning models for nuanced, descriptive narratives, and employ speech generation to render lifelike voice outputs. Harness the automation of workflows to seamlessly interlink generative models, enabling effortless innovation and customization.

Build RAG in four lines of code

Set up a server-side Retrieval Augmented Generation (RAG) experience in four lines of code with our Python SDK. We make it easy to choose your LLM; create, index, and store embeddings; and build your chat interface, so you have a working RAG solution right away. Start with our defaults and customize as you gain experience.
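As a rough sketch of what those four lines can look like, the function below wraps the `RAG.setup`, `upload`, and `chat` calls as published in the Clarifai Python SDK README. The wrapper name `ask_my_docs`, its parameters, and the up-front `CLARIFAI_PAT` check are illustrative assumptions rather than part of the SDK, and the call signatures should be confirmed against the current SDK documentation before use.

```python
import os


def ask_my_docs(user_id: str, docs_folder: str, question: str):
    """Hypothetical helper: index a folder of documents and ask one question."""
    # Assumption: the SDK reads the personal access token from CLARIFAI_PAT.
    if not os.environ.get("CLARIFAI_PAT"):
        raise RuntimeError("Set CLARIFAI_PAT to your personal access token first.")

    # Imported lazily so this file still loads where the SDK is not installed.
    from clarifai.rag import RAG

    rag_agent = RAG.setup(user_id=user_id)      # set up the agent with defaults
    rag_agent.upload(folder_path=docs_folder)   # chunk, embed, and index the files
    return rag_agent.chat(messages=[{"role": "human", "content": question}])
```

Calling `ask_my_docs("YOUR_USER_ID", "~/docs", "What is Clarifai?")` would need a valid account and network access, so treat it as a starting point rather than a tested recipe.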

Inspect Data with Advanced Model Analysis

Use advanced classification models to categorize and analyze data for swift, accurate decision-making. Employ detection models to identify and locate objects, people, and more within images and videos. Harness segmentation models to delineate the different elements within an image for finer-grained analysis. If we don’t have a pre-trained model that suits your needs, you can easily train your own using the many architectures built into the platform.

Organize, share, reuse with AI Lake

Manage your AI applications with Clarifai's intuitive platform. Upload inputs, be it images, text, or videos, and harness them as the foundation to train sophisticated models. Structure your uploaded data as datasets, enabling precise subsets for model training and testing. Define concepts to categorize the classes within detection, classification, and segmentation models. Employ versioning to create and compare multiple iterations of your model, fine-tuning them with varied data to achieve high performance.

Don’t just take our word for it

What developers and clients say about us

“Clarifai provides an end-to-end platform with the easiest to use UI and API in the market. They’ve accelerated our AI development at scale allowing 1,000's of workers to label data and train 100,000's of AI models with significantly less development effort, and expedited go-to-market.”

“We evaluated the trillion dollar companies and a few niche retail players for our customer facing visual search use case. Clarifai was much easier to use than the trillion dollar companies, and their AI significantly outperformed both the niche players and the big guys in accuracy while having inference speeds 7x faster. The performance and the flexibility of the Clarifai platform has our executives exploring numerous other use cases to be powered by the Clarifai platform.”

“A pioneer in deep learning-based computer vision, Clarifai can tackle near-real-time visual search, facial recognition use cases, and deployment in the most secure, air-gapped environments that nearly all other vendors can’t match.”

"Clarifai is a true leader in AI applications for DAM and serves our users by improving the searchability and discoverability of their content. Today, Widen users gain the ability to search by image, which is a game-changer during time-sensitive projects. By saving countless hours for users, this partnership with Clarifai improves the Widen experience and delivers true ROI for our customers."

"My organization has worked with Clarifai for almost three years, close to a daily basis, and the engagements and collaboration are top notch. The Clarifai platform and capabilities can be adapted, trained, integrated and deployed to a variety of problem sets with stellar performance. We chose Clarifai over Microsoft, Google, AWS and IBM because of their production functionality and performance, product vision and their strong consulting partnership."

"The team was a pleasure to work with. We had a highly dynamic (some would say chaotic) deployment schedule, but the Clarifai team did their best to be malleable to our needs. They were professional, engaging and collaborative. Through constant interaction with us, they iterated on their solution and developed novel approaches to our problem."

“Clarifai's pay-per-usage is an incredible feature. I would be more than happy to use Clarifai endpoints over anything else. The fine-tuning functionality also makes our life easy.”

“There's so much there in Clarifai it's sometimes hard not to be overwhelmed.”

“Got to say I like the environment Clarifai offers to build and prototype on ideas faster, it was my first time building with Clarifai.”

Computer Vision and LLM AI Lifecycle Platform

The developer platform for any deep learning use case

- Chat with your data
- Facial Recognition
- Sentiment analysis
- Speech synthesis
- Summarization
- Text moderation
- Translation
- Visual moderation

Chat with your data

Retrieval Augmented Generation (RAG) enables users to interact conversationally with their own data, using NLP to pull relevant information from datasets. RAG is a two-step process: first, it retrieves documents that are likely to contain the answers, then it generates responses based on the retrieved documents. This creates a chatbot that delivers personalized responses grounded in your own data, which reduces the risk of hallucinations.
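The two-step retrieve-then-generate flow can be sketched in plain Python. This is a toy illustration, not Clarifai's implementation: it swaps learned embeddings for simple word-count vectors with cosine similarity, and the `generate` argument is a placeholder for whichever LLM call you use. All function names here are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Step 1: return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(question, context_docs):
    """Combine the retrieved documents and the question into one prompt."""
    context = "\n".join(f"- {d}" for d in context_docs)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {question}"


def answer(question, documents, generate, k=2):
    """Step 2: pass the grounded prompt to a text generator (any callable)."""
    return generate(build_prompt(question, retrieve(question, documents, k)))
```

In a real system, `retrieve` would query a vector index of embeddings and `generate` would call an LLM; keeping the two steps as separate functions makes it easy to swap either one out.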

Facial Recognition

Clarifai's Facial Recognition technology allows for the accurate identification and analysis of human faces. This technology is versatile, aiding in applications such as security, user authentication, and user experience enhancement by quickly and precisely interpreting facial features. Whether it's automating access control or personalizing user interactions, Clarifai provides the tools to integrate facial recognition seamlessly into your applications.

Sentiment analysis

Textual Sentiment Analysis technology interprets and evaluates the emotions conveyed within a body of text. This sophisticated tool is instrumental in understanding user sentiments, allowing for enhanced customer interactions and feedback analysis. By transforming raw text into insightful data, it aids in refining product strategies, improving customer relations, and optimizing overall user experience, helping businesses to respond more effectively to their audience’s needs and preferences.

Speech synthesis

Speech Synthesis transforms text into natural, lifelike speech, allowing developers to create applications that talk in a human-like voice. This advanced technology enhances user engagement by providing auditory interaction, making information more accessible and interaction more intuitive. Whether it’s for assistive technologies, entertainment, or customer service applications, Speech Synthesis brings versatility to voice-enabled experiences, enabling a more inclusive and interactive future.

Summarization

Summarization distills lengthy texts down to their essential points, providing clear, concise summaries. This advanced tool is invaluable for quickly understanding and conveying key information from extensive documents or content, aiding in efficient knowledge acquisition and decision-making. Whether used for academic research, content creation, or business intelligence, our summarization technology enables users to save time and focus on what truly matters.

Text moderation

Text Moderation identifies and filters inappropriate or harmful text content, ensuring online spaces maintain a positive and safe environment for users. This technology is crucial for businesses and developers aiming to uphold community guidelines and standards across platforms, from social media to forums. By automating content moderation, it allows for a proactive approach to manage and mitigate risks associated with user-generated content.

Translation

Translation technology enables the conversion of text from one language to another with high accuracy, facilitating communication across language barriers. This solution is essential for developers looking to make their content accessible to a global audience, enhancing user understanding and interaction. Whether it’s for customer support, content creation, or multilingual platforms, our translation tools bridge linguistic gaps, fostering inclusivity and connection.

Visual moderation

Visual moderation empowers platforms to detect and filter out inappropriate or harmful visual content, creating a safer online environment. This solution is key for businesses and developers aiming to maintain a positive user experience on their platforms, ranging from social media to community forums. By leveraging image analysis, it proactively moderates content, helping to uphold community standards and protect user well-being.

Trusted by enterprises. Powered by partners.

Advance AI adoption with Clarifai’s network of partners


Build your first Generative app in under five minutes with Clarifai.