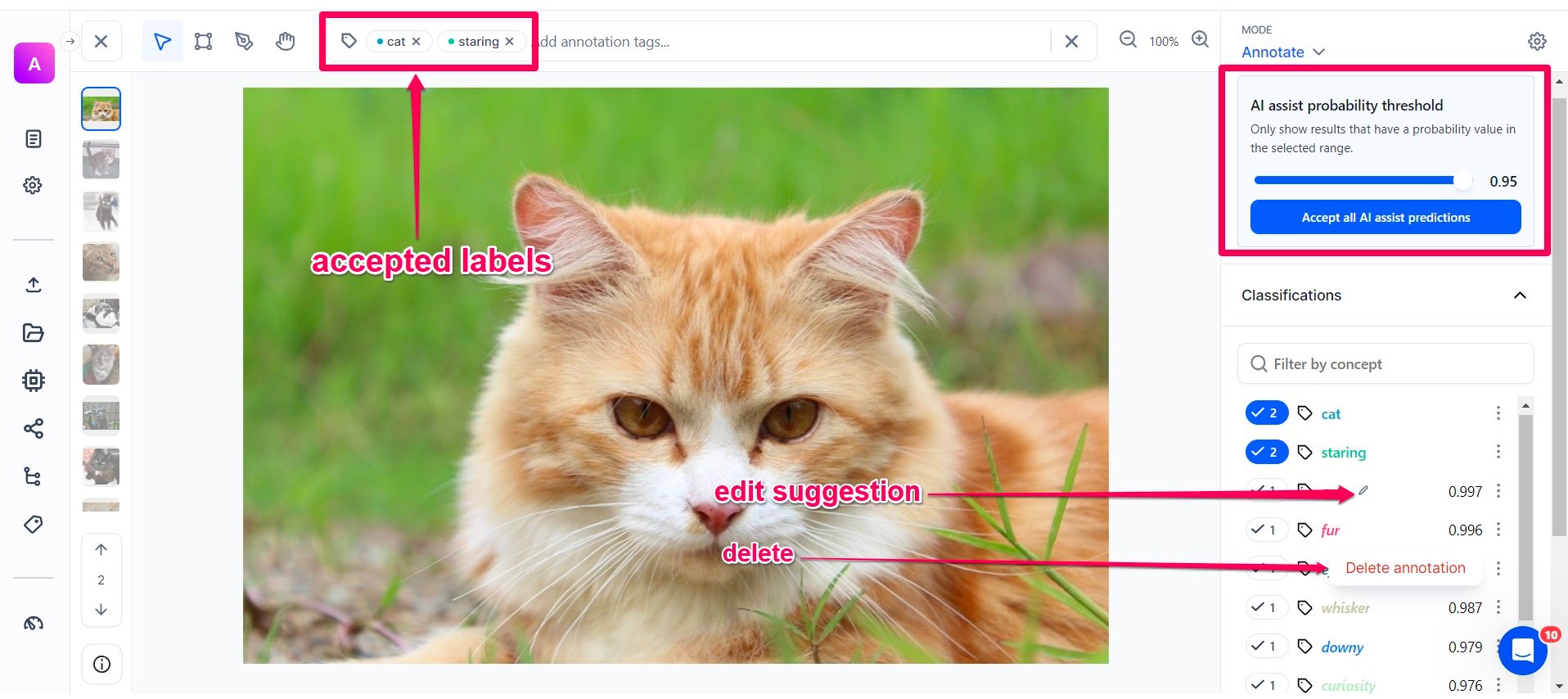

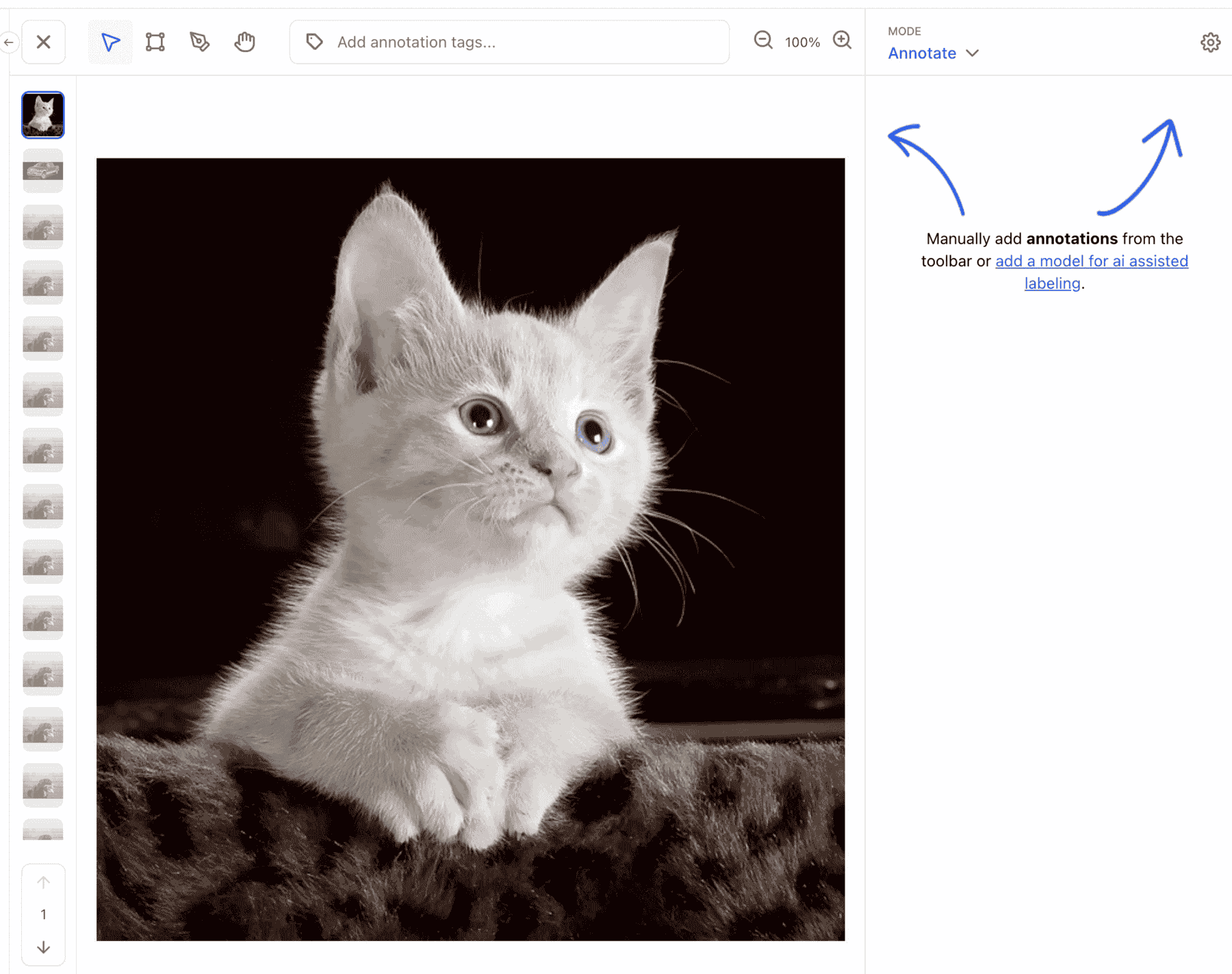

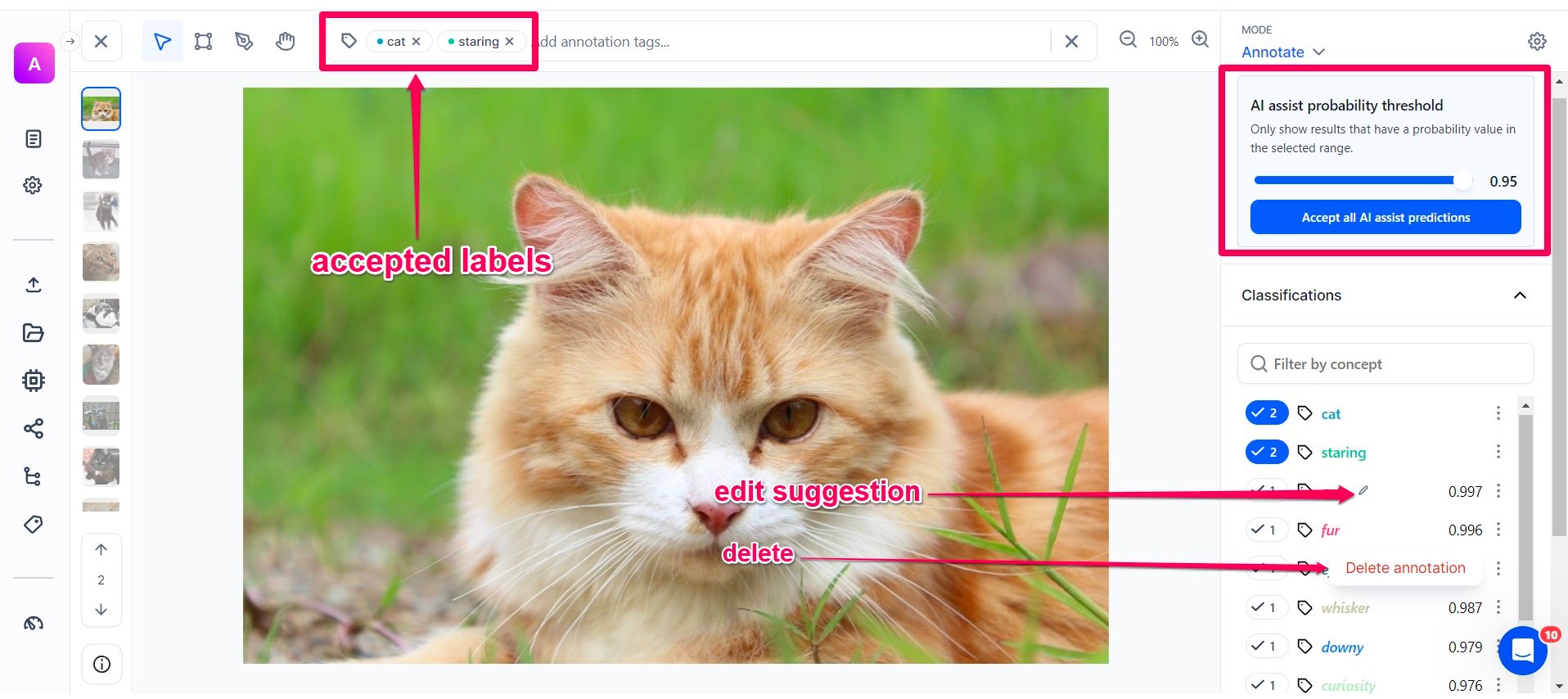

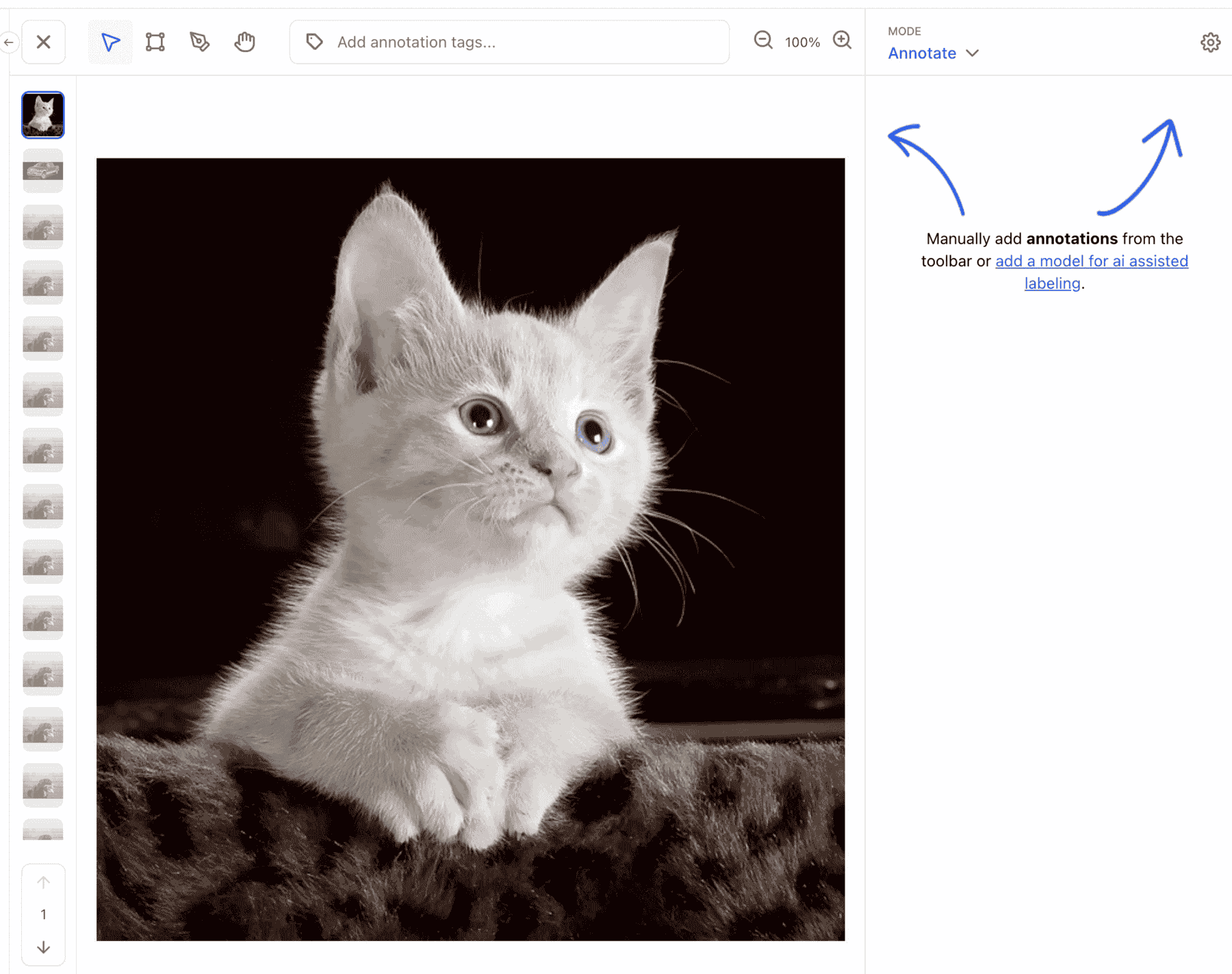

AI Assist

Added the innovative AI Assist feature on the Input-Viewer screen. You can now use it to generate annotations for your inputs automatically.

- Unlike the existing Smart Search and Bulk Labeling features, which accelerate labeling within the Input-Manager screen, the new AI Assist features can be used while working with individual inputs within the Input-Viewer screen.

- To start, simply select an existing Model or Workflow (either owned by you or from Clarifai’s Community) within the Annotate Mode settings in the right-hand sidebar.

- For the full story check out our blog!

API

Added inference parameters customization and flexible API key selection

- You can now configure inference parameters such as temperature, max tokens, and more, depending on the specific model you are using, for both text-to-text and text-to-image generative tasks. This empowers you to customize and fine-tune your model interactions to better suit your individual needs.
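As a rough illustration of how such parameters might travel with a prediction call, the sketch below assembles a JSON-style request body for a text generation request. The helper name `build_predict_request`, the nesting under `model.model_version.output_info.params`, and the parameter names and values are assumptions made for illustration; the parameters a given model accepts should be checked against its documentation.

```python
import json


def build_predict_request(prompt, **inference_params):
    """Assemble a request body carrying per-call inference parameters.

    The nesting used here is an assumed layout, for illustration only.
    """
    # Drop parameters left unset (None) so that the model's defaults apply.
    params = {k: v for k, v in inference_params.items() if v is not None}
    return {
        "inputs": [{"data": {"text": {"raw": prompt}}}],
        "model": {"model_version": {"output_info": {"params": params}}},
    }


# Hypothetical usage: temperature and max_tokens are set, top_k is left unset.
body = build_predict_request(
    "Write a haiku about autumn.", temperature=0.7, max_tokens=64, top_k=None
)
print(json.dumps(body, indent=2))
```

Keeping unset parameters out of the body means the request only overrides what you explicitly chose to change.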

Bug Fixes

- Fixed an issue where the ListWorkflows endpoint returned workflows from deleted apps. Workflows belonging to deleted applications are no longer included in the endpoint's response.

Python SDK

Added support for uploading text and image data

- The SDK supports the following text data formats: plain text, CSV, and TSV (in roadmap).

- It supports the following image data formats: JPEG, PNG, TIFF, BMP, WEBP, and GIF.

- You have the flexibility to upload data from either URLs or local storage, whether it's within a single folder or spread across multiple sub-folders.

- You can upload text data enriched with metadata and text classification annotations.

- You can also upload image data enriched with annotations, geoinformation, and metadata information.

- You can efficiently create apps designed for text mode and image mode use cases.

- The SDK ensures transparent progress tracking during each upload and promptly reports any encountered errors.
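The upload behavior described above (nested folders, supported image formats, progress tracking, and error reporting) can be sketched with the standard library alone. This is not the SDK's implementation: `collect_images`, `upload_all`, and the `upload_one` callback are hypothetical names, and the callback stands in for whatever upload call you actually use.

```python
from pathlib import Path

# File extensions corresponding to the image formats listed above.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".tif", ".tiff", ".bmp", ".webp", ".gif"}


def collect_images(root):
    """Return (supported, skipped) file paths found under root, recursively."""
    supported, skipped = [], []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file():
            continue
        if path.suffix.lower() in IMAGE_EXTENSIONS:
            supported.append(path)
        else:
            skipped.append(path)
    return supported, skipped


def upload_all(paths, upload_one):
    """Call upload_one on each path, printing progress and collecting errors."""
    errors = []
    for index, path in enumerate(paths, start=1):
        try:
            upload_one(path)
        except Exception as exc:  # record the failure and continue with the rest
            errors.append((path, str(exc)))
        print(f"[{index}/{len(paths)}] {path.name}")
    return errors
```

Collecting errors instead of stopping at the first failure lets one bad file be reported without aborting the rest of the batch.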

Integrated YAML workflow interfaces with proto endpoints

- In the SDK, the workflow interfaces are now represented in YAML format, but they are seamlessly integrated with proto endpoints for efficient communication and interaction.

Enhanced the SDK's foundational structure

- The SDK now supports authentication using only Personal Access Tokens (PATs) and user IDs.

- We plan to provide helper utilities for rich text formatting and printing to facilitate a user-friendly interaction.

- We are continually working on a robust error-handling system that covers various scenarios.

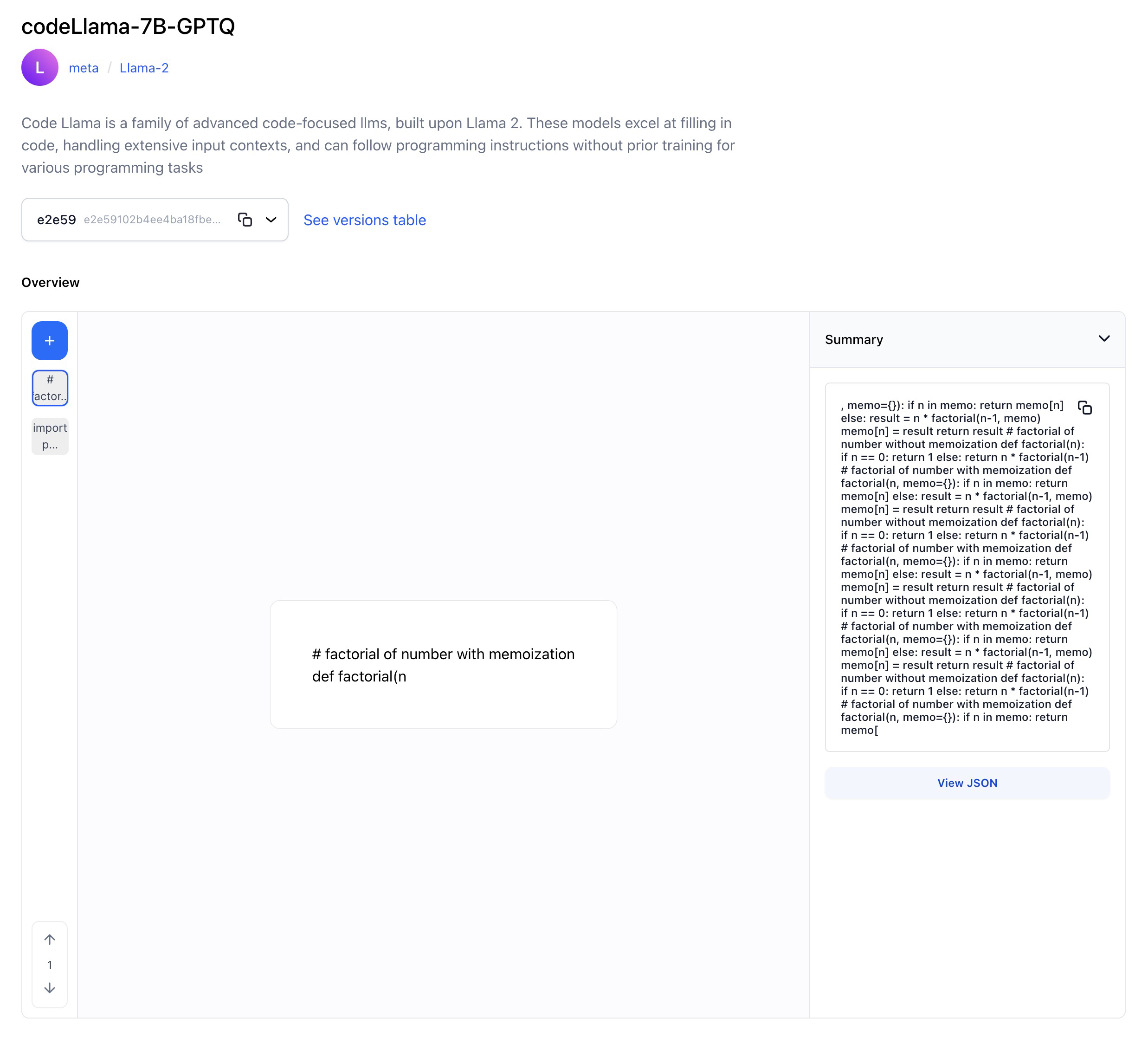

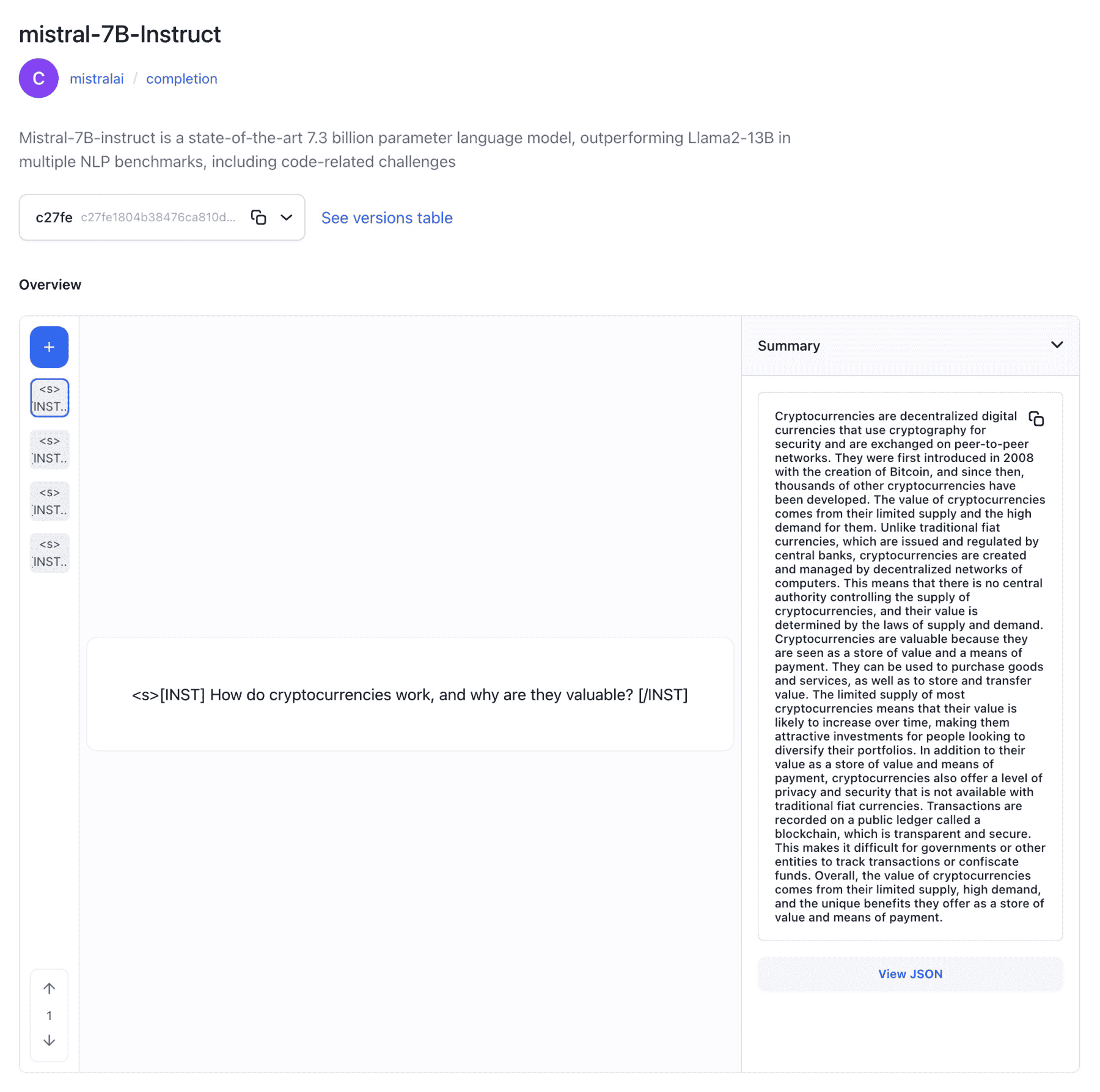

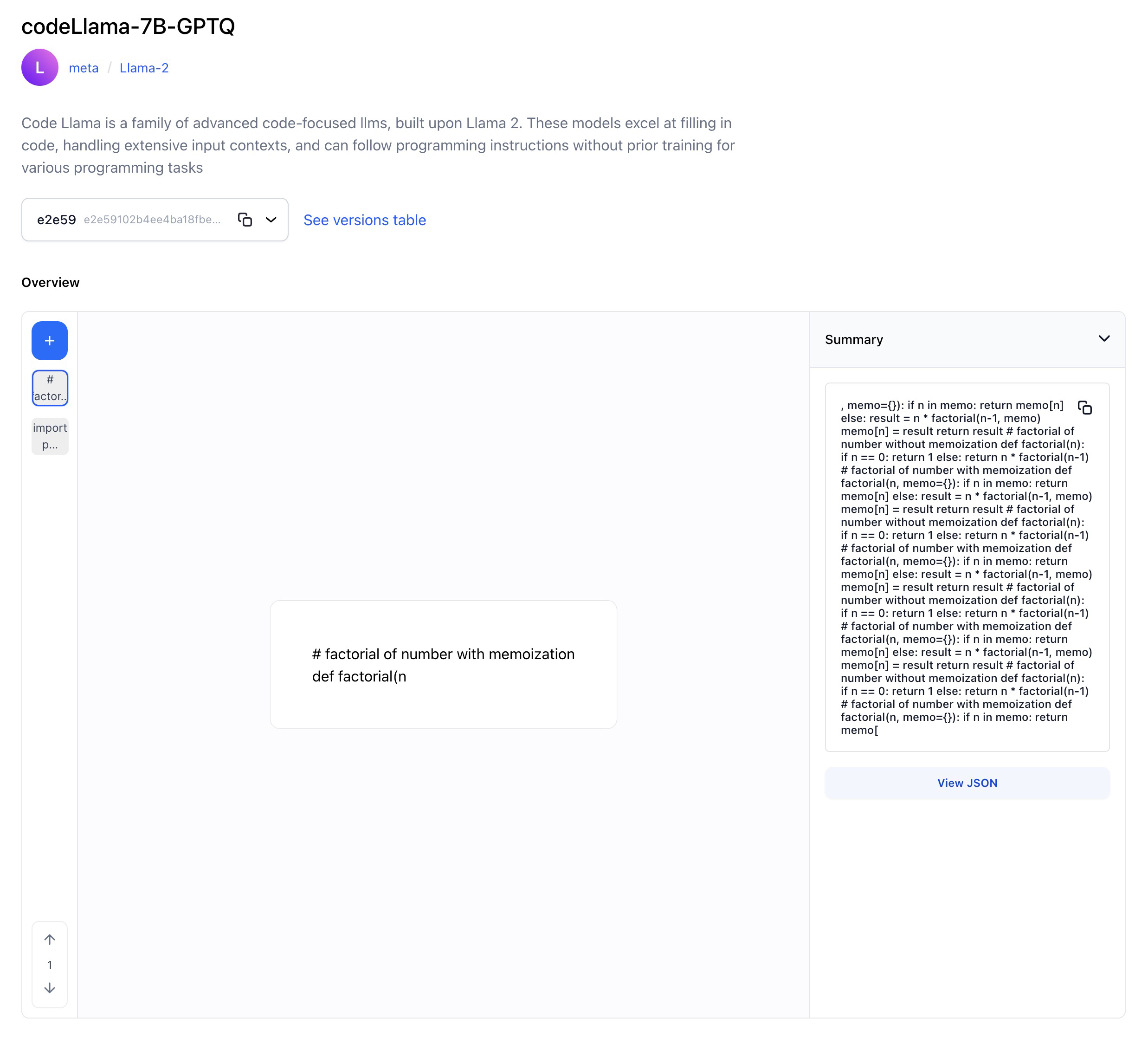

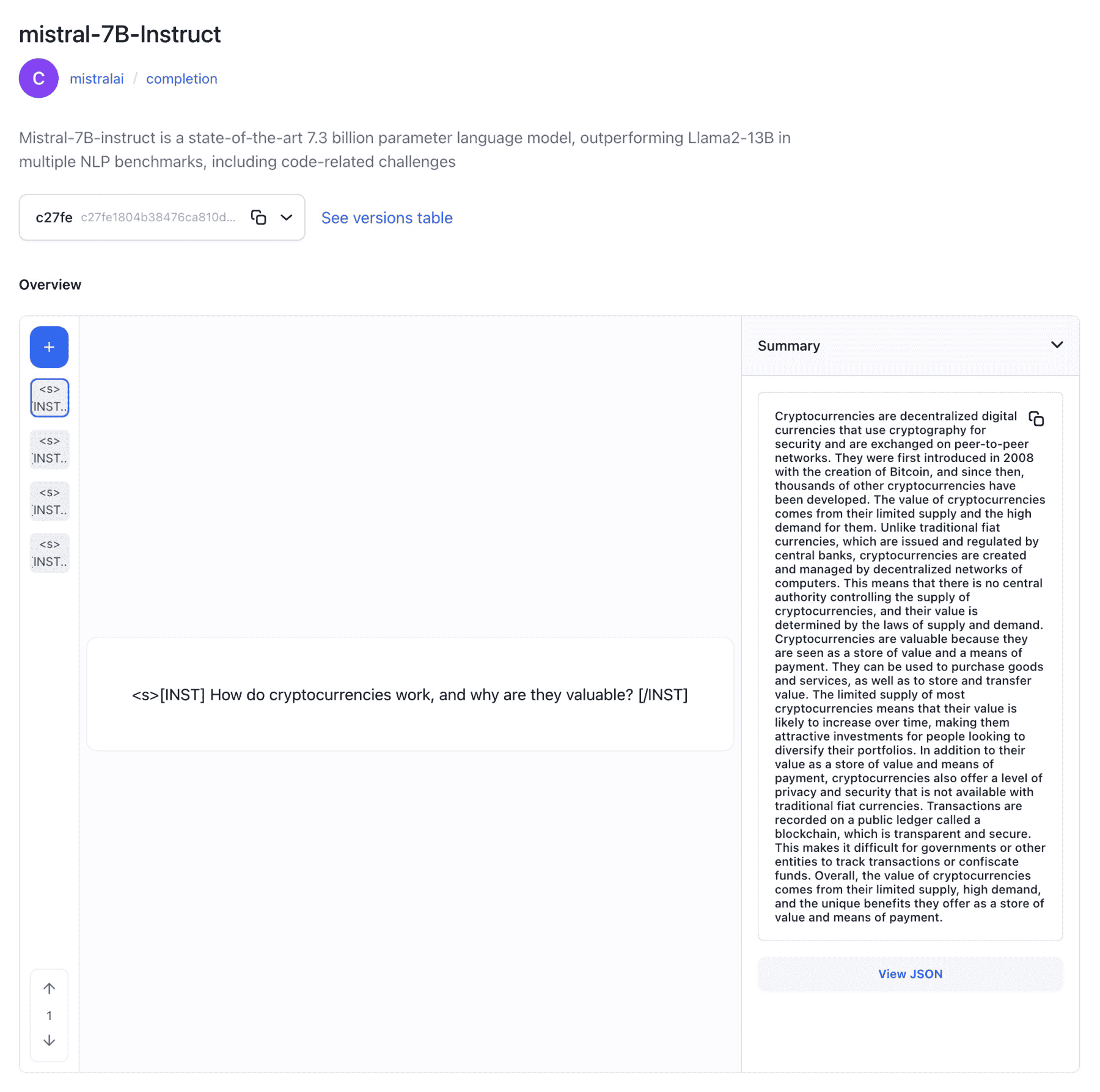

New Published Models

Published several new, ground-breaking models

- Wrapped Falcon-40B-Instruct, a causal decoder-only Large Language Model (LLM) built by TII based on Falcon-40B and fine-tuned on a mixture of Baize.

- Wrapped WizardLM-70B, a model fine-tuned on the Llama2-70B model using Evol+ methods; it delivers outstanding performance.

- Wrapped CodeLlama-13B-Instruct-GPTQ, CodeLlama-34B-Instruct-GPTQ, and CodeLlama-7B-GPTQ. Code Llama is a family of code-focused LLMs, built upon Llama 2. These models excel at filling in code, handling extensive input contexts, and following programming instructions without prior training for various programming tasks.

- Wrapped WizardLM-13B, an LLM fine-tuned on the Llama-2-13b model using the Evol+ approach; it delivers outstanding performance.

- Wrapped WizardCoder-15B, a code-based LLM that has been fine-tuned from StarCoder using the Evol-Instruct method and has demonstrated superior performance compared to other open-source and closed-source LLMs on prominent code generation benchmarks.

- Wrapped WizardCoder-Python-34B, a code-based LLM that has been fine-tuned on Llama 2. It excels in Python code generation tasks and has demonstrated superior performance compared to other open-source and closed-source LLMs on prominent code generation benchmarks.

- Wrapped Phi-1, a high-performing 1.3 billion-parameter text-to-code language model, excelling in Python code generation tasks while prioritizing high-quality training data.

- Wrapped Phi-1.5, a 1.3 billion parameter LLM that excels at complex reasoning tasks and was trained on a high-quality synthetic dataset.

- Wrapped OpenAI's GPT-3.5-Turbo-Instruct, an LLM designed to excel in understanding and executing specific instructions efficiently. It excels at completing tasks and providing direct answers to questions.

- Wrapped Mistral-7B-Instruct, a state-of-the-art 7.3 billion parameter language model, outperforming Llama2-13B in multiple NLP benchmarks, including code-related challenges.

LlamaIndex

We now support integrating with the LlamaIndex data framework for various use cases, including:

- Ingesting, structuring, and accessing external data for the LLMs you fine-tune on our platform. This allows you to boost the accuracy of your LLM applications with private or domain-specific data.

- Storing and indexing your data for various purposes, including integrating with our downstream vector search and database services.

- Building an embeddings query interface that accepts any input prompt and leverages your data to provide knowledge-augmented responses.
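To make the idea of a knowledge-augmented query interface concrete, here is a toy sketch in plain Python. It does not use LlamaIndex or any Clarifai service: the bag-of-words `embed` function is a stand-in for a real embedding model, and `TinyIndex` and `build_augmented_prompt` are hypothetical names used only for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words embedding; a real system would use a learned embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyIndex:
    """Stores documents with their embeddings and ranks them against a prompt."""

    def __init__(self, documents):
        self.entries = [(doc, embed(doc)) for doc in documents]

    def query(self, prompt, top_k=1):
        q = embed(prompt)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [doc for doc, _ in ranked[:top_k]]


def build_augmented_prompt(index, prompt):
    """Prepend the best-matching document to the prompt as context for an LLM."""
    context = "\n".join(index.query(prompt, top_k=1))
    return f"Context:\n{context}\n\nQuestion: {prompt}"
```

The retrieved context is what lets the model answer from your private or domain-specific data rather than from its training data alone.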

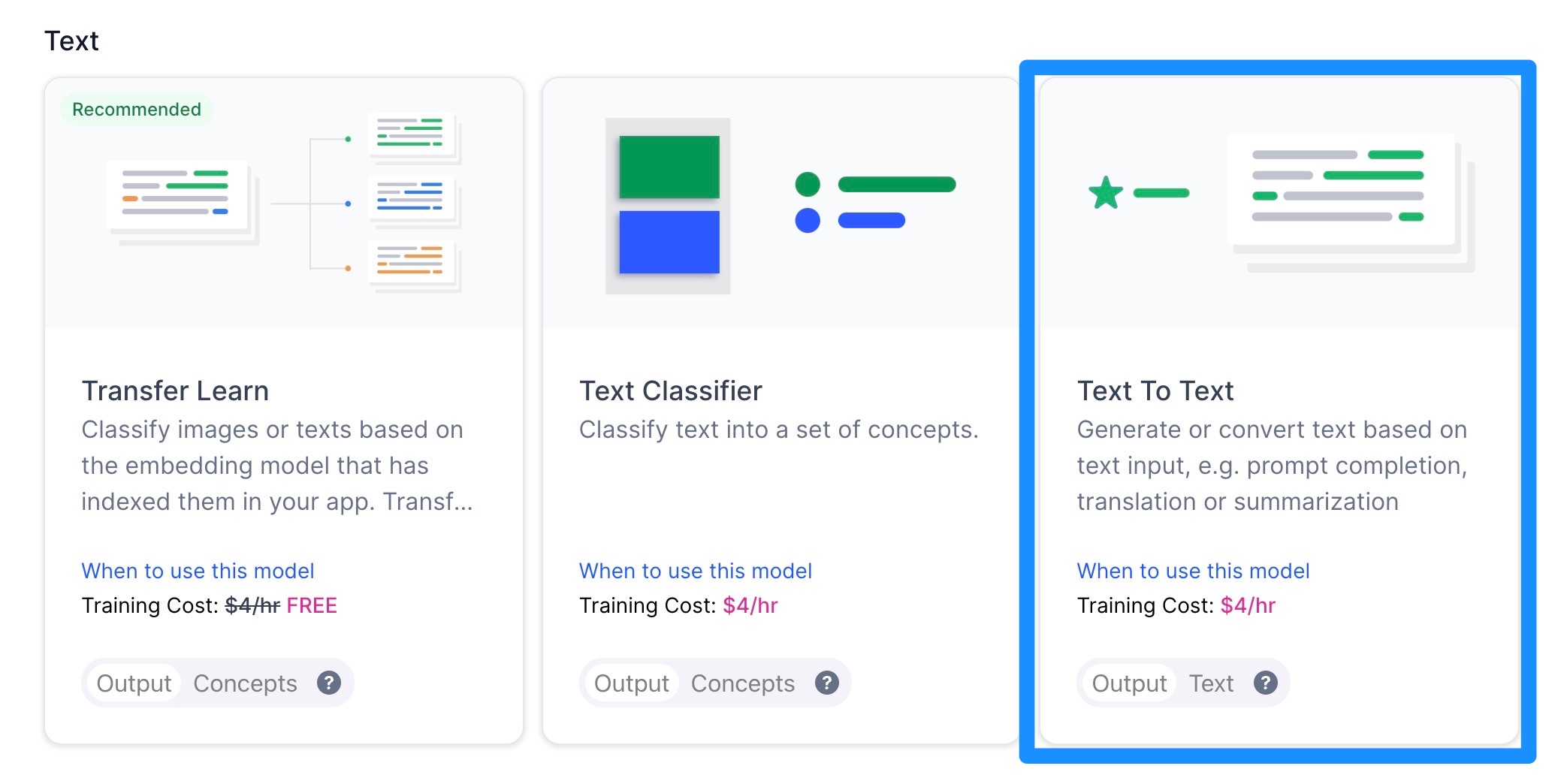

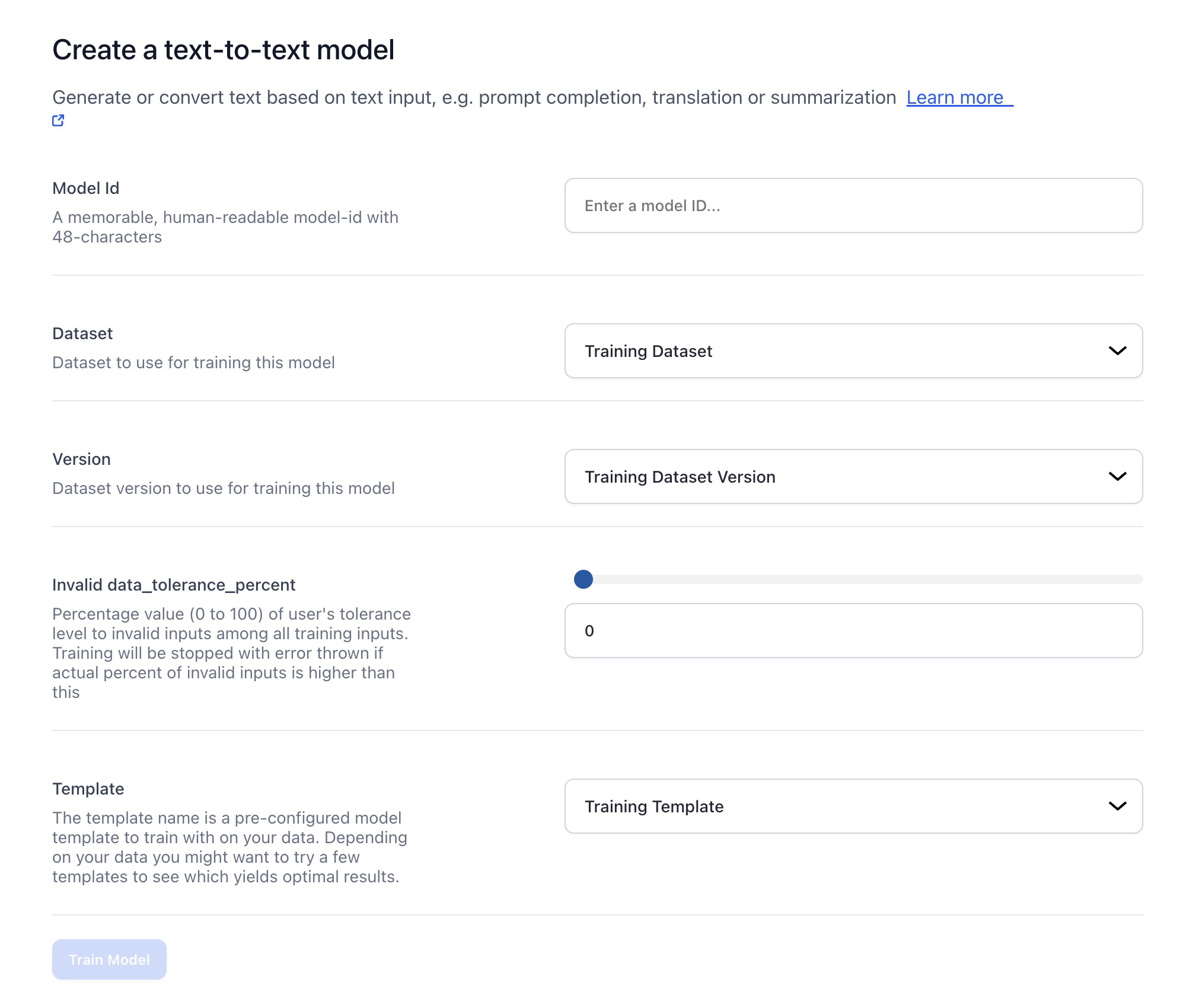

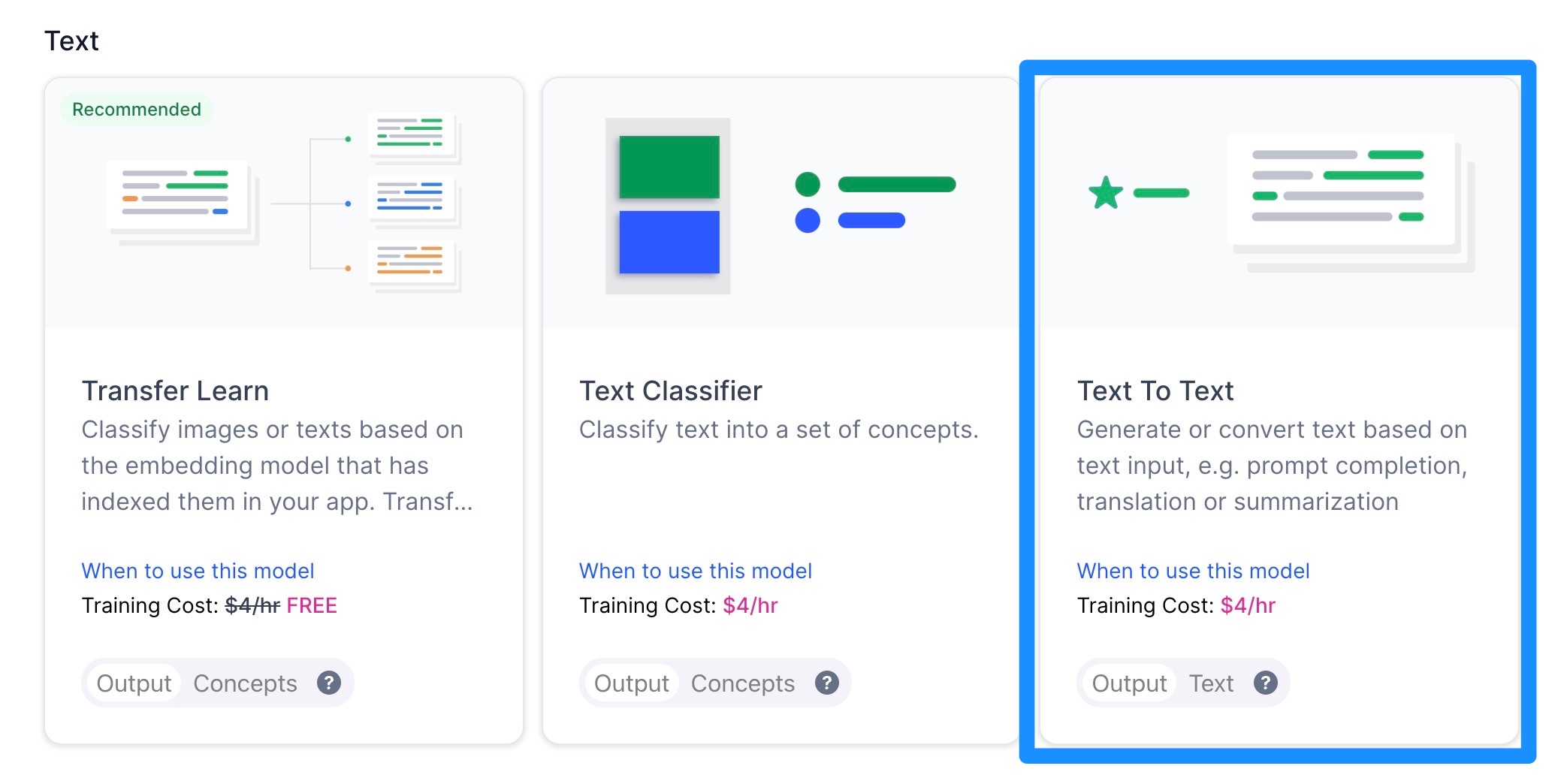

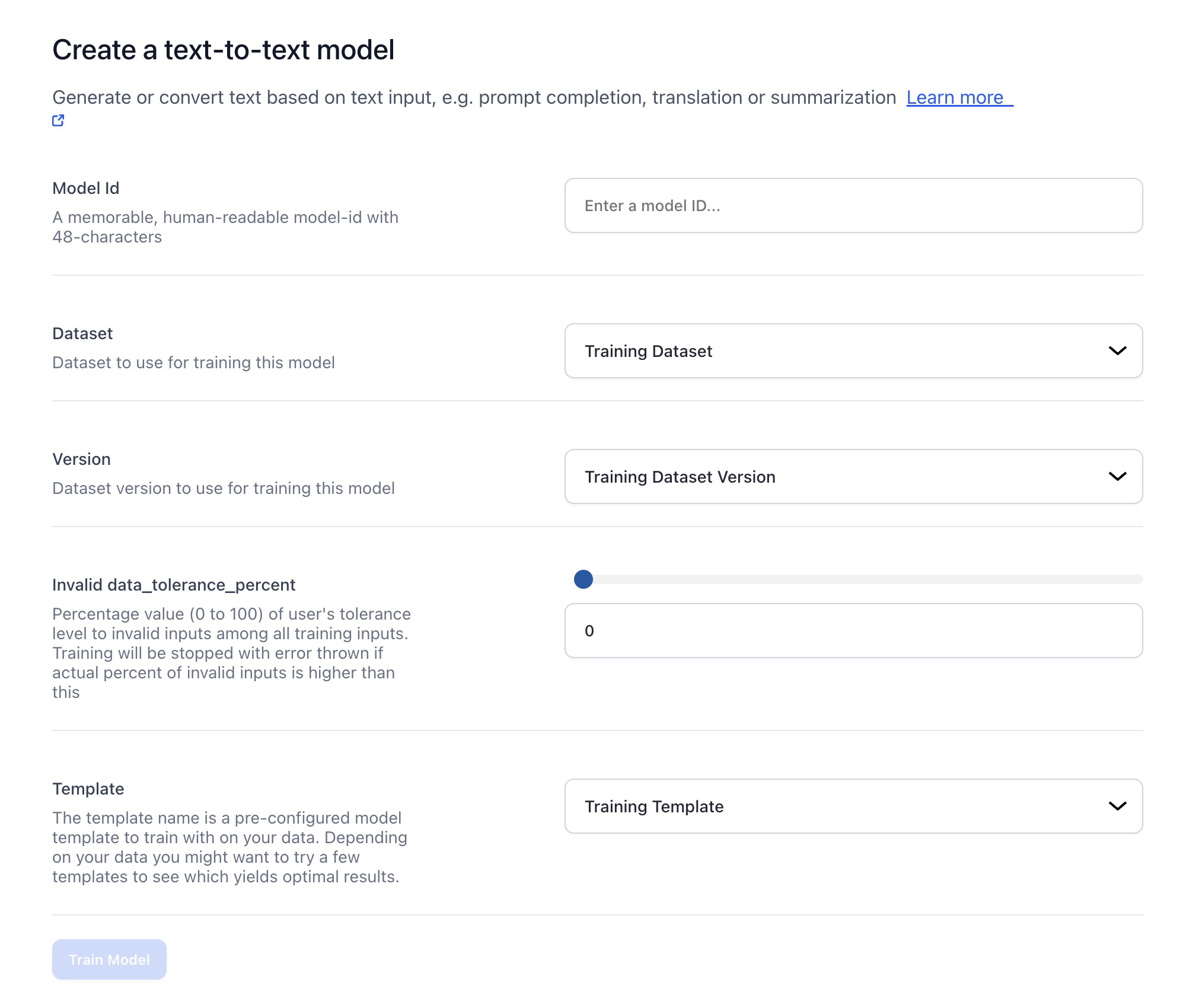

Models

Added ability to fine-tune text-to-text models

- Advanced model builders can now further customize the behavior and output of the text-to-text models for specific text generation tasks. They can train the models on specific datasets to adapt their behavior for particular tasks or domains.

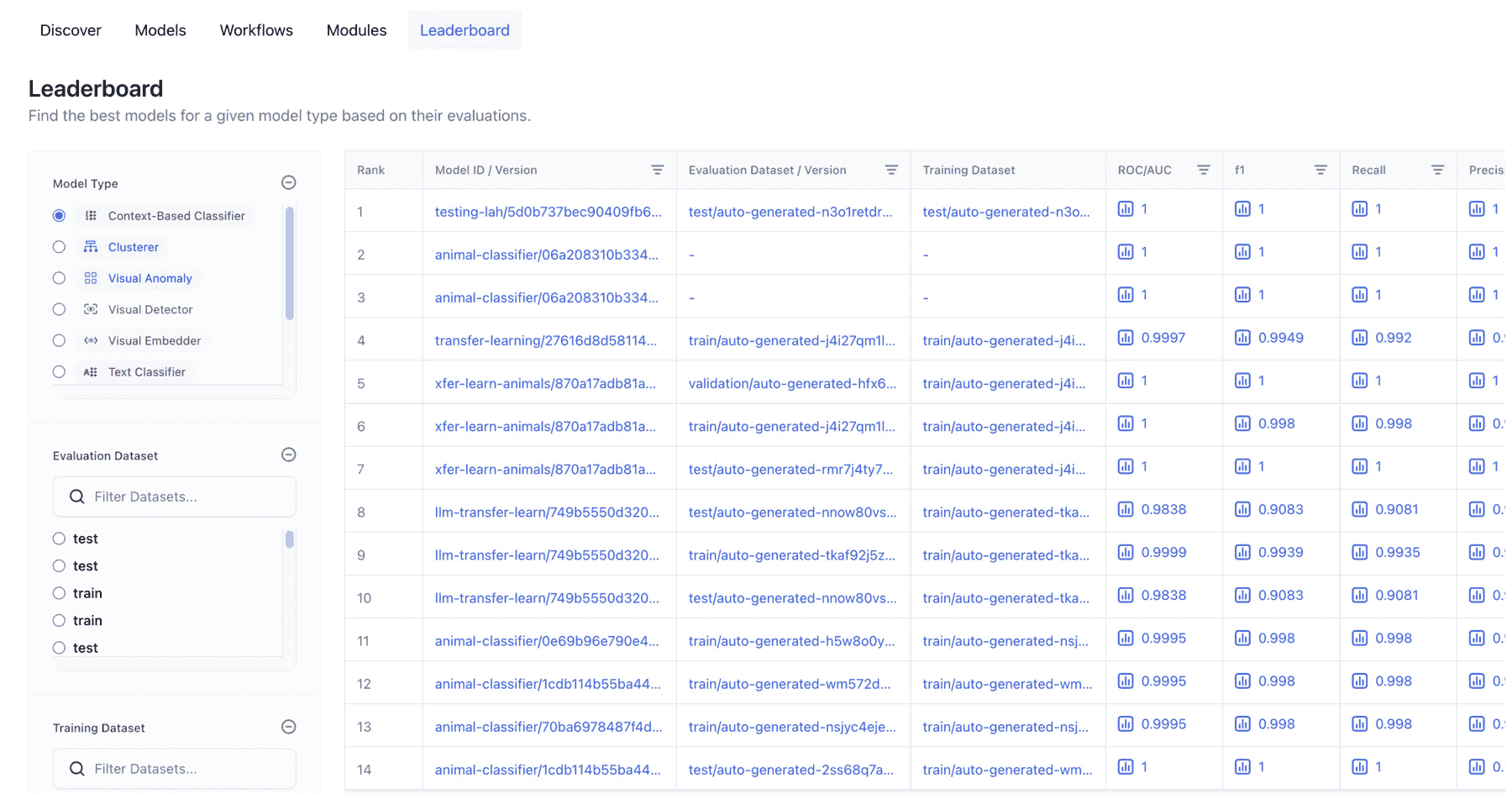

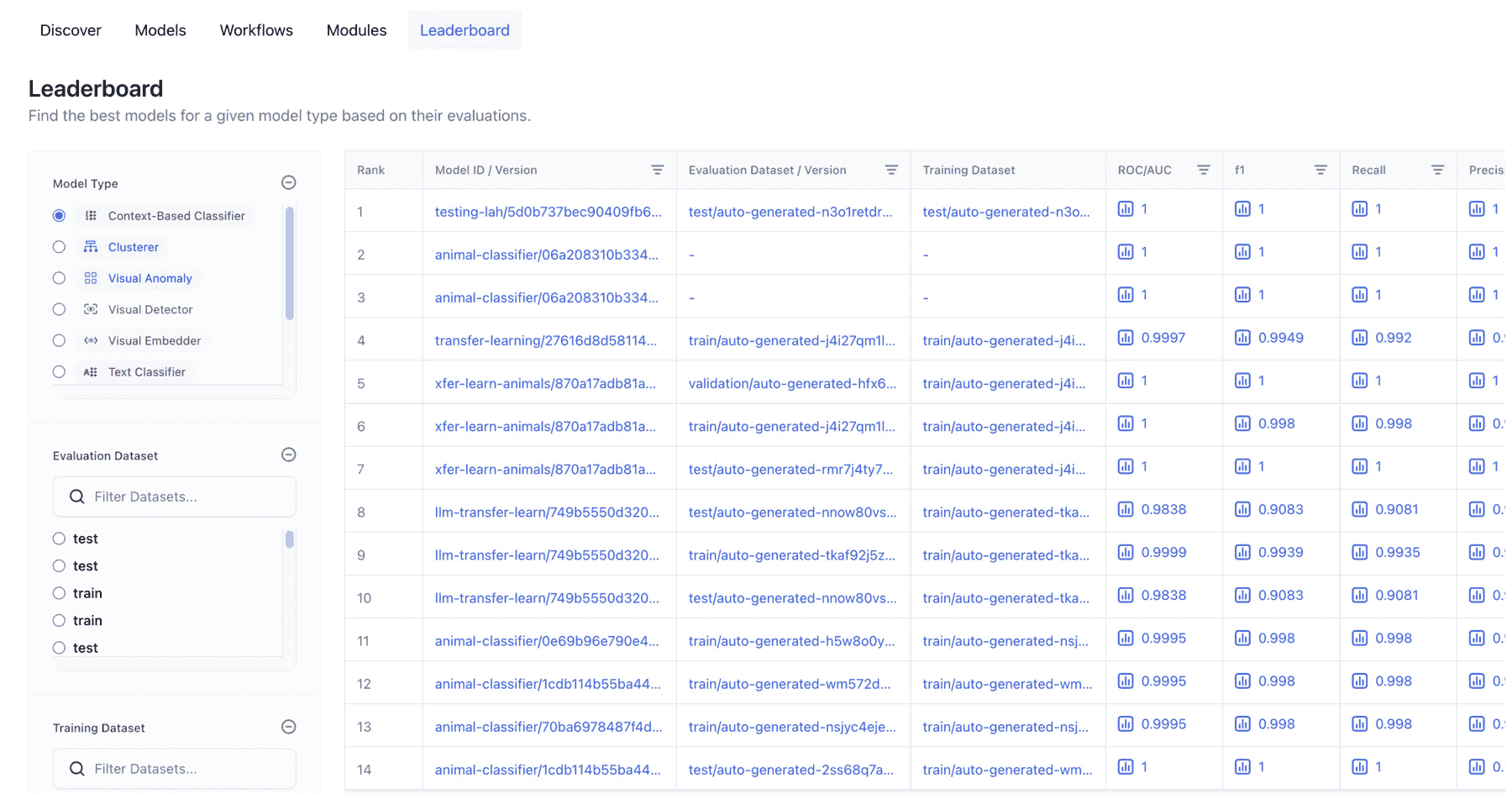

[Enterprise Preview] Fixed an issue with the Leaderboard, which organizes models based on their evaluation results

- Previously, for visual detection models, the displayed metrics included classification metrics such as ROC instead of the expected metric, mean Average Precision (mAP). The Leaderboard now correctly displays mAP, just like the model viewer's version table.

Bug Fixes

- Fixed multi-label text classification with the Hugging Face text classification pipeline. Previously, the pipeline worked well for multi-class classification but did not perform correctly for multi-label classification.

- Fixed issues with the model version details page:

  - Fixed an issue that prevented the page from displaying any output fields.

  - Fixed an issue that caused the model version details table page to crash unexpectedly.

  - Fixed an issue where the page displayed incorrect parameter values when switching between different model versions.

  - Fixed an issue where the page displayed fields irrelevant to the specific model type. Fields unrelated to the selected model type are now hidden.

- Fixed an issue where the Model Viewer page incorrectly rotated images in specific community apps. Previously, when images with a portrait orientation (height greater than width) were submitted to some apps, the predicted detection boxes appeared misaligned or incorrect. The Model Viewer page now correctly rotates images with portrait orientation, aligning detection boxes accurately for improved user experience and detection accuracy.

- Fixed an issue where the model ID disappeared after selecting the Base embed_model in the model creation process. Previously, a model ID you had already entered would be cleared when you selected a base embed model while creating a new model.
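For the multi-label text classification fix listed above, the general difference between the two settings can be sketched as follows. This illustrates the standard technique only and is not a description of how the fix was implemented; the labels, logits, and the 0.5 threshold are made-up values. Multi-class prediction picks the single most likely label via softmax, while multi-label prediction scores each label independently with a sigmoid and keeps every label above a threshold.

```python
import math


def softmax(logits):
    """Normalize logits into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Map a single logit to an independent probability."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_multi_class(logits, labels):
    """Exactly one label: the one with the highest softmax probability."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return [labels[best]]


def predict_multi_label(logits, labels, threshold=0.5):
    """Zero or more labels: each label whose sigmoid score meets the threshold."""
    return [label for logit, label in zip(logits, labels) if sigmoid(logit) >= threshold]


labels = ["sports", "politics", "finance"]
logits = [2.0, 1.5, -3.0]
print(predict_multi_class(logits, labels))
print(predict_multi_label(logits, labels))
```

Applying softmax to a multi-label problem forces the labels to compete, which is one common reason a pipeline that handles multi-class data well can misbehave on multi-label data.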

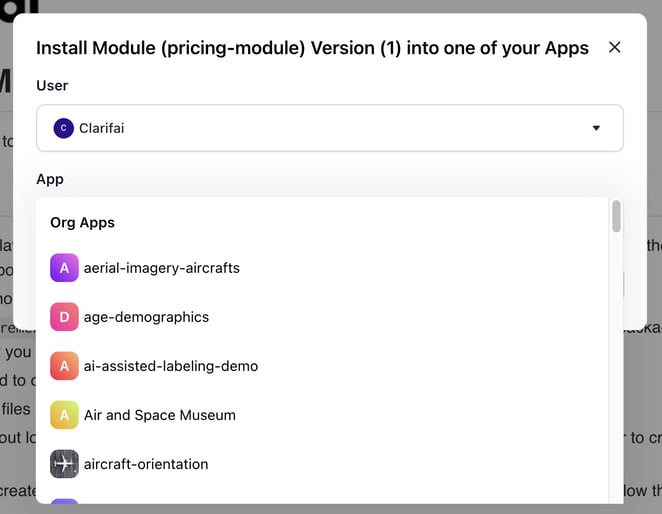

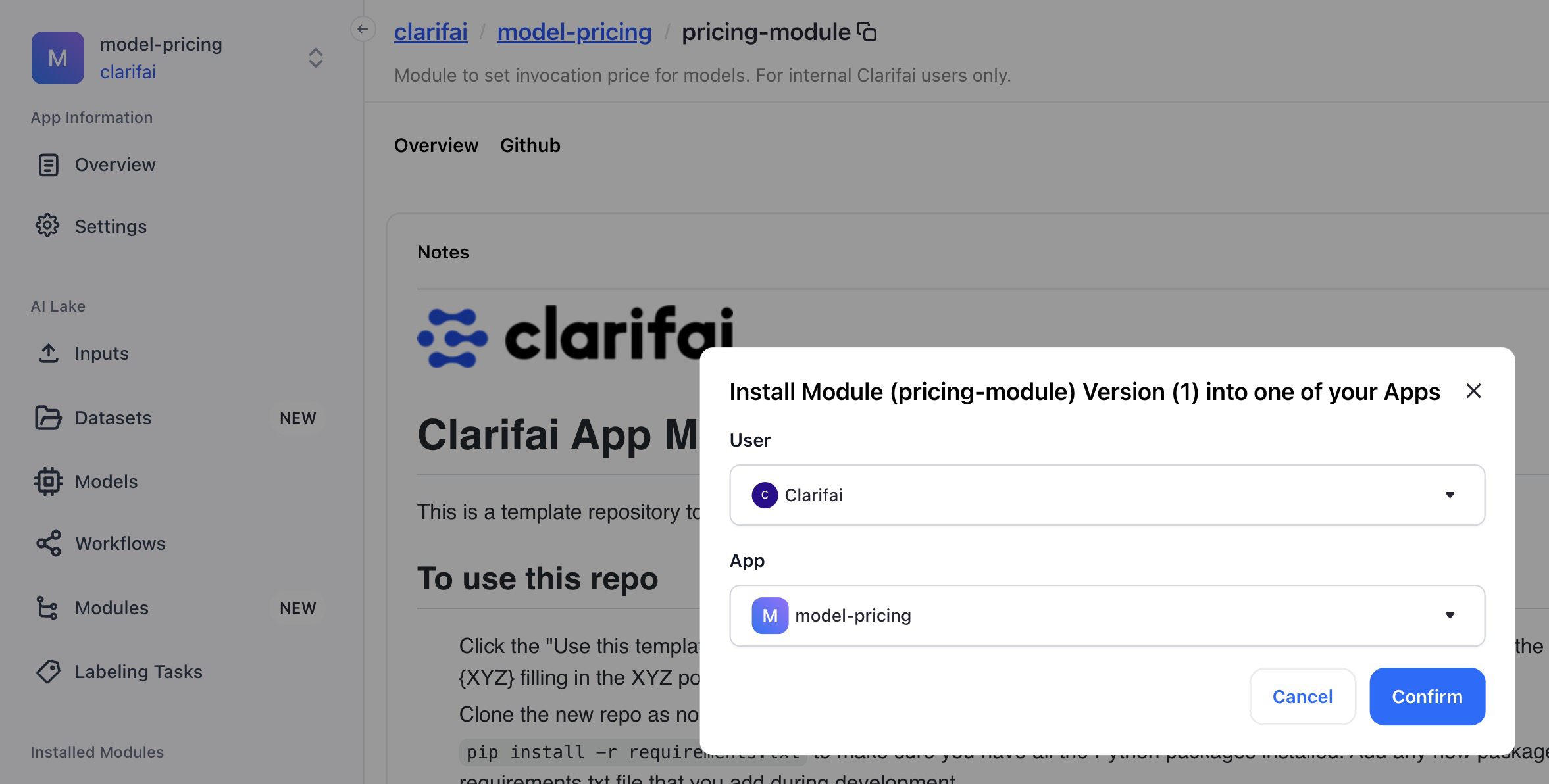

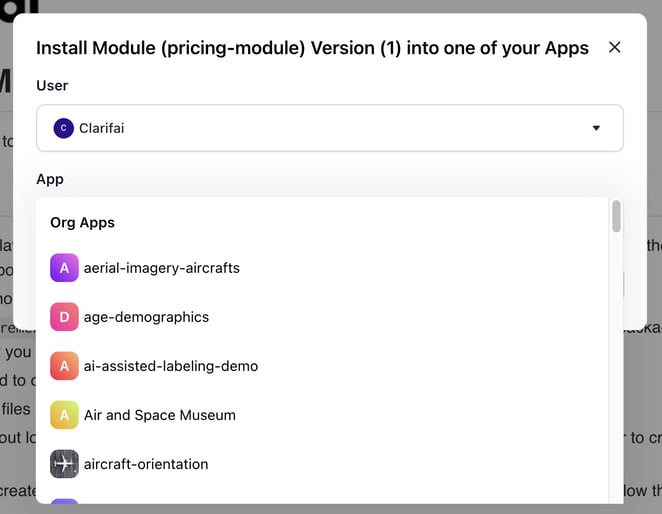

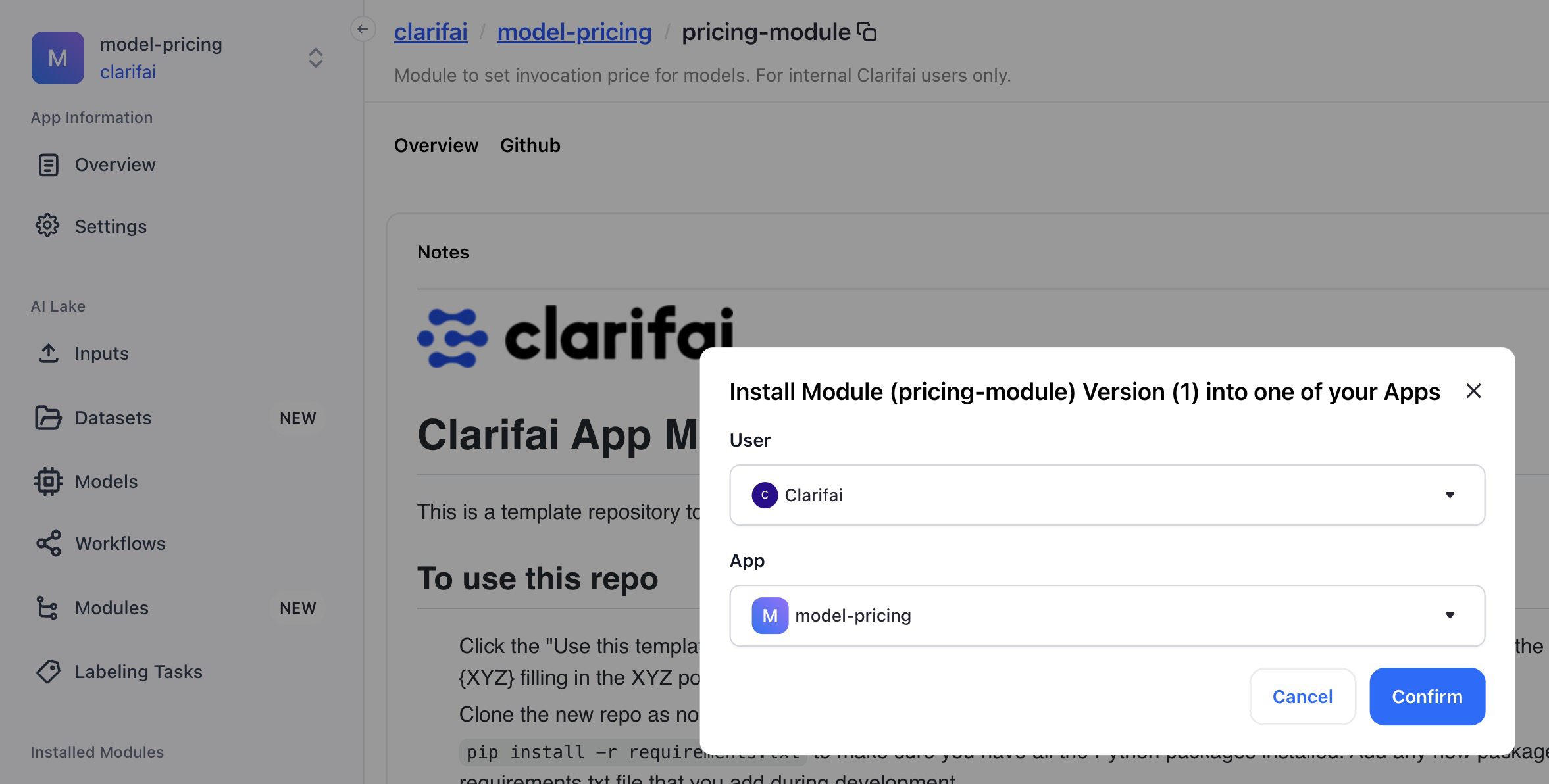

Modules

Removed collaborative applications from the apps drop-down list within the Install Module modal when an org user is selected

- Since organization users do not have collaborative apps or engage in collaborations, we have optimized the interface by removing collaborative apps from the Install Module modal specifically for this user group. This also fixed the app failure bug.

Enhanced the default app selection in the Install Module modal

- The Install Module modal now automatically selects your current app as the default destination to install your chosen module.

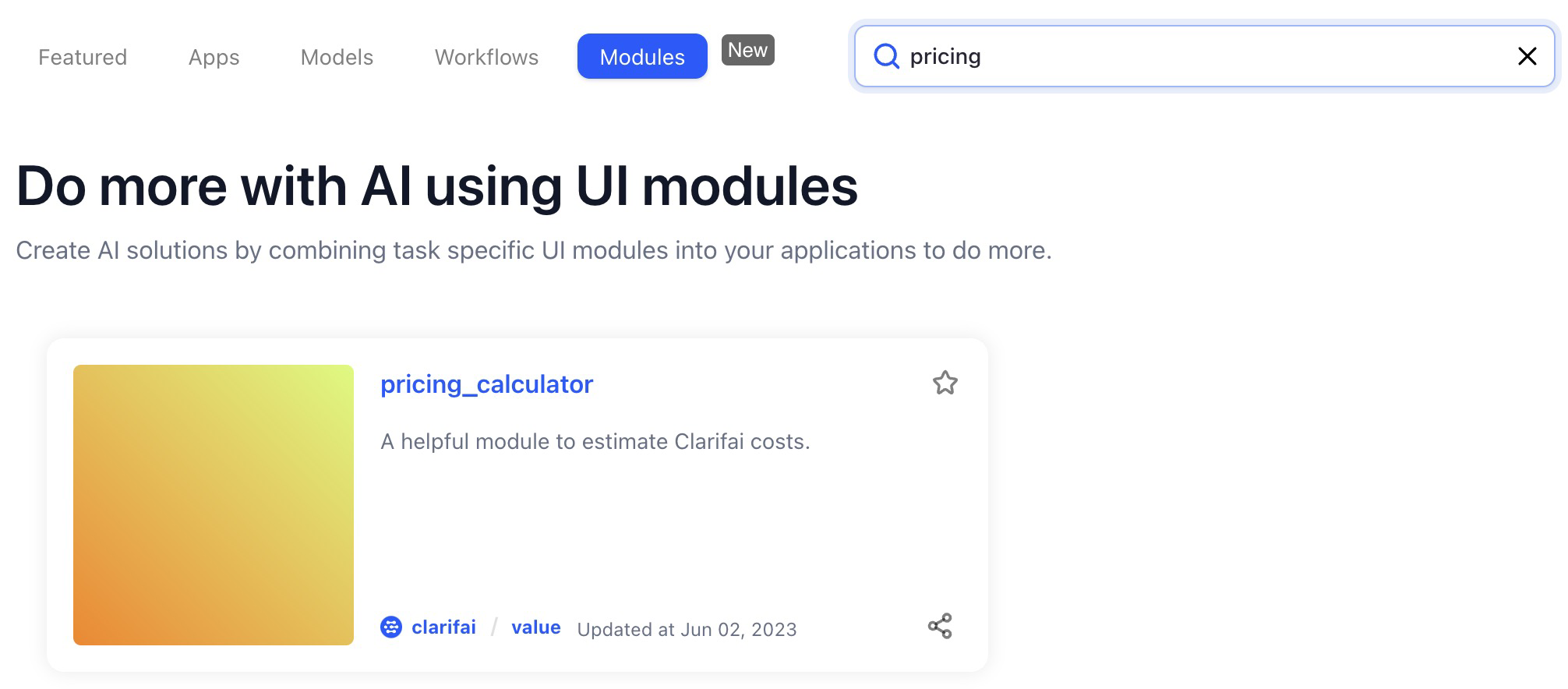

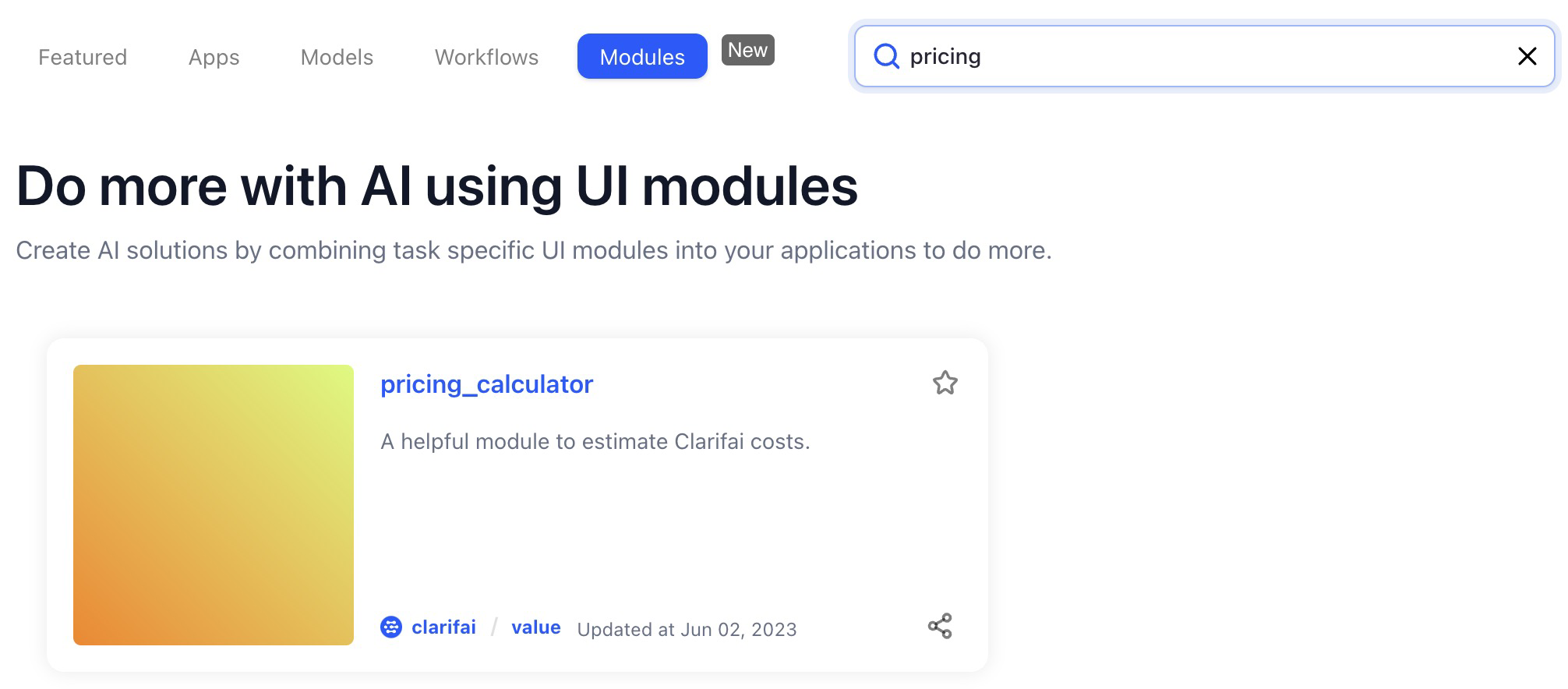

Improved the search functionality for modules

- This refinement in the search feature offers a more streamlined and productive experience when looking for specific modules or related content.

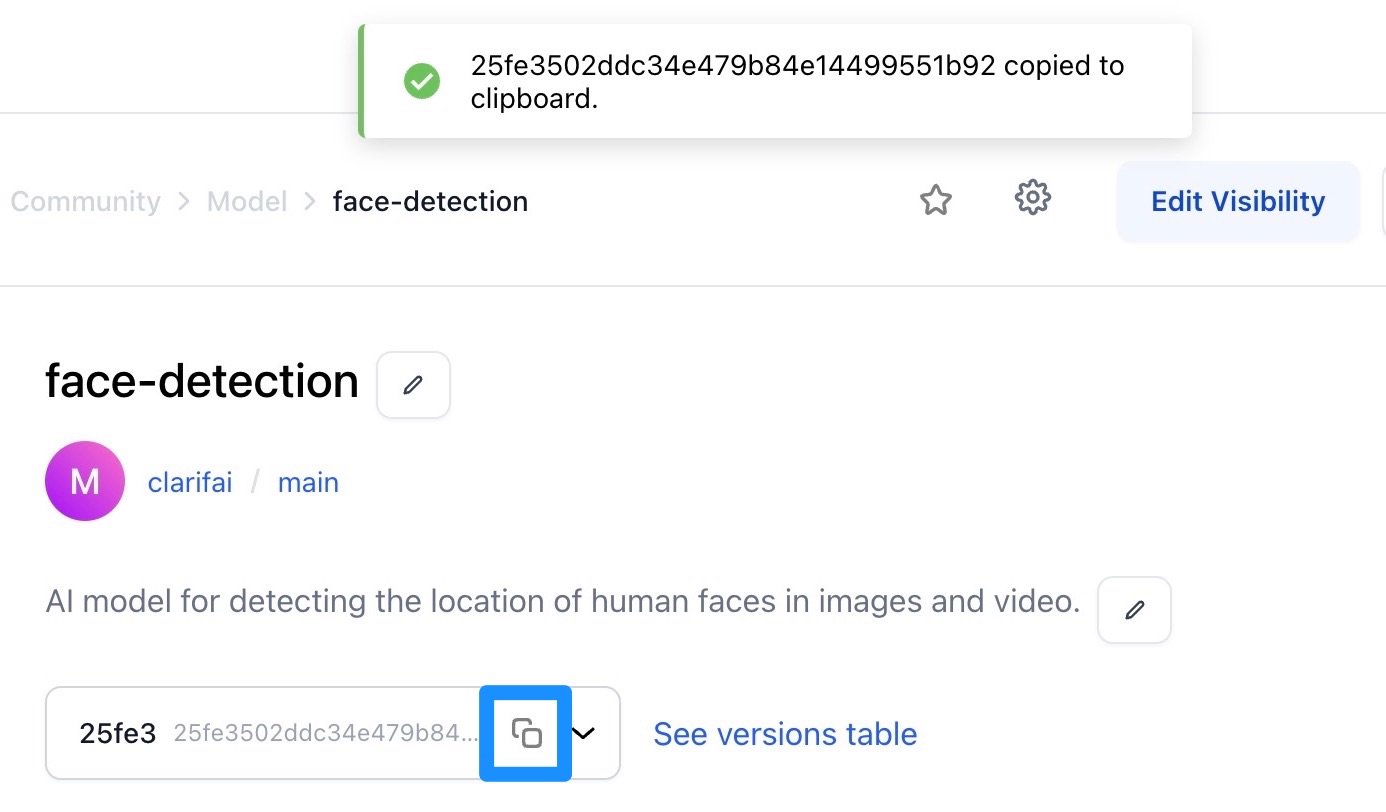

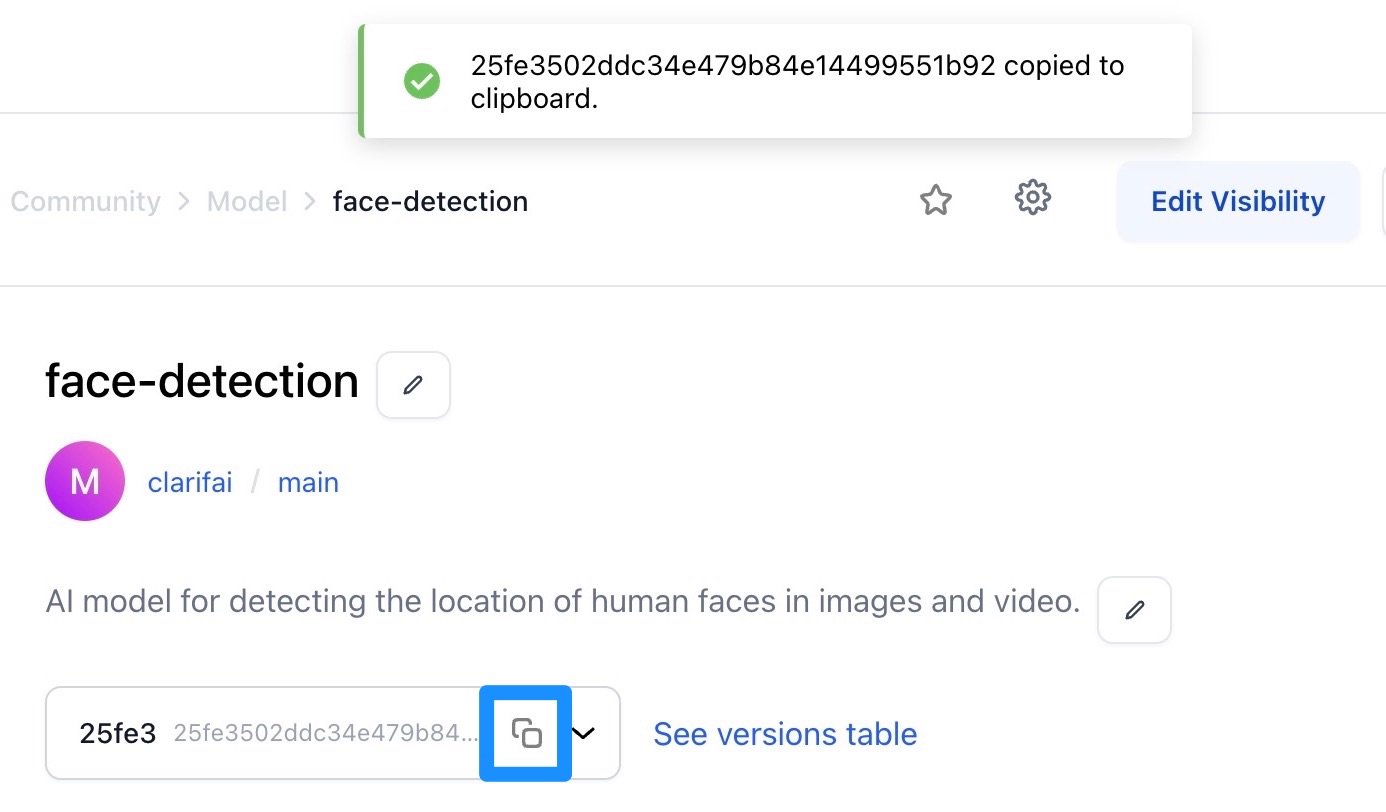

Added an easy-to-use copy icon to the module's viewer page

- You can now click the copy icon located in the Latest Version ID section to swiftly obtain the complete latest version URL for a module. This URL allows you to expedite the module installation process for your application.

Input-Manager

Added ability to view and tag text inputs within the Input-Viewer

- We have designed a comprehensive UI that allows you to interactively create and manage annotations for text inputs.

Bug Fixes

- Improved keyboard shortcut functionality in Input-Viewer. In the Input-Viewer, you can use keyboard shortcuts (hotkeys), such as H, V, P, and B, to switch between annotation tools. Previously, when the model selector or any other element with a popover was open in the Input-Viewer, typing any of the hotkeys would unintentionally change tools in the background. The hotkeys no longer trigger events that affect tools in the background.

- Fixed an error that occurred immediately after switching to annotation mode in the Input-Viewer.

- Fixed issue preventing collaborators from creating annotations in Input-Viewer. Collaborators can now actively participate in the annotation process, contributing to a more collaborative and efficient workflow.

- Improved the Input-Viewer's URL handling to ensure a more seamless navigation experience. Previously, there was an issue where the input ID part of the route parameters was not utilized effectively for rendering, leading to undesired redirects. We fixed the issue.

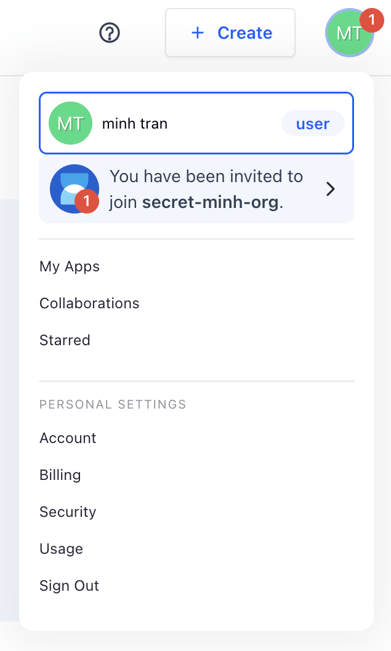

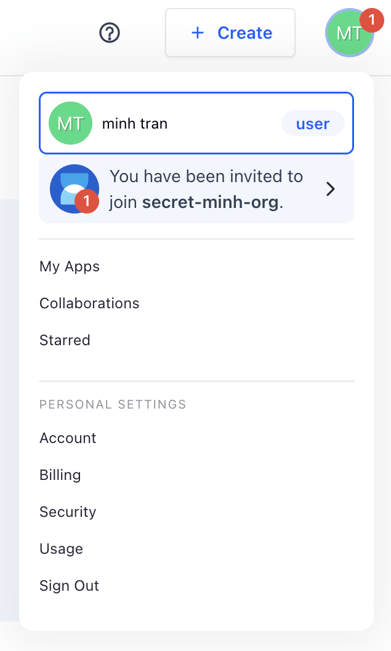

Invitations

- You can now seamlessly review and accept pending Organization invitations directly within the Portal, eliminating the need to rely solely on email for this purpose. This is valuable for both our SaaS offering and our on-premise deployments, as it caters to scenarios where email support may be limited or unavailable.

Organization Settings and Management

Bug Fixes

- Fixed an issue with displaying incorrect menu items in the mobile view. Previously, when you switched from your personal user account to an organization account by clicking your profile icon at the top-right corner of the navigation menu bar, the drop-down list displayed your personal details instead of the organization’s information. We fixed the issue.

- Fixed an issue where an organization contributor could not access public workflows. Organization contributors (organization members) can now fetch a complete list of all public workflows without any hindrances.

- Enabled organization contributors to view available destination apps in the Install Module modal. Previously, organization contributors could not see the list of available destination apps when attempting to install a module.

- Fixed an issue with searching for organization members. Previously, when you used multiple parameters, such as both first name and last name together, to search for members whose first name or last name matched the query text, it could result in an error within the response. We fixed the issue.

- Fixed an issue where collaborators with the organization contributor role failed to list model evaluations. Previously, collaborators trying to list model evaluations encountered an error message stating "Insufficient scopes", despite the expectation that the role would grant them access to view evaluations. We fixed the issue.

Apps

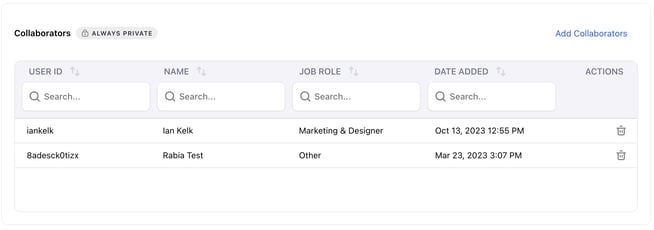

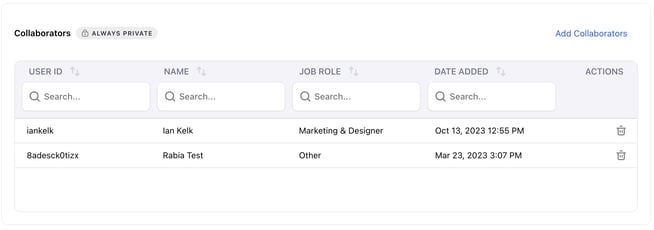

Enhanced App Settings page to display details of collaborators

- Now, you can easily access and view the details of your collaborators, including their ID, first and last name, email, job role, and the date they were added to the app.

Bug Fixes

- Fixed an issue where the app thumbnails were not cached properly. Previously, uploaded app thumbnails on the App Overview page and in the drop-down sidebar list, which provides quick access to your available apps, occasionally appeared blank or missing.

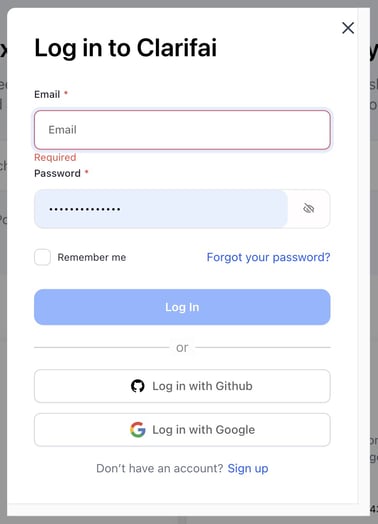

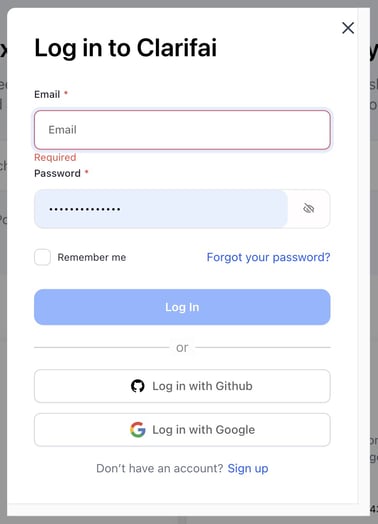

Login/Logout and Signup Flow

Introduced modal pop-ups for login and signup, and for various scenarios where redirects were previously employed

- We have retained dedicated login and signup pages while introducing modal windows for these actions. You can now access login and signup functions directly from your active page, providing quick and easy access to these essential features.

- For operations like "Use Model," "Use Workflow," "Install Modules," and more, we have replaced the redirection process with modal pop-ups. This eliminates the extra step and ensures a smoother user journey.

Bug Fixes

- Fixed an issue with the reCAPTCHA system. We improved the reCAPTCHA system to offer users a notably smoother and more user-friendly experience.

Labeling Tasks

Bug Fixes

- Fixed an issue where it was not possible to submit labeled inputs for some labeling tasks. Previously, if you loaded an input, selected the relevant concepts, and attempted to submit your labeled input, you could encounter an error.

Workflows

Bug Fixes

- Fixed an issue with processing videos using the Universal workflow. You can now confidently process videos with the Universal workflow without encountering any hindrances or issues.

- Fixed an issue with editing a workflow. Previously, while editing any workflow, the model version displayed "No results found," which was inconsistent with the initial workflow creation experience. The model version behavior now matches what is displayed when initially creating a workflow.

- Fixed an issue where it was not possible to copy a workflow without first changing its ID. Previously, you could successfully copy a workflow only after changing the copied workflow ID. You can now copy an existing workflow, even if you keep the same workflow name during the copying process, such as from "(workflow name)-copy" to "(workflow name)-copy."

- Removed the default/base workflow from the "Use Model" modal. To use a model in a workflow, go to the model’s viewer page, click the “Use Model” button at the upper-right corner of the page, select the “Use in a Workflow” tab, and select a destination app and its base workflow. You’ll be redirected to the workflow editor page. Previously, if you tried to update the workflow in the editor page, you could encounter an error. The error arose because the app's default/base workflow cannot be edited, but this limitation was communicated only after you had made changes to the workflow. We fixed the issue by graying out or excluding the base workflow option when you attempt to use a model in an existing base workflow.

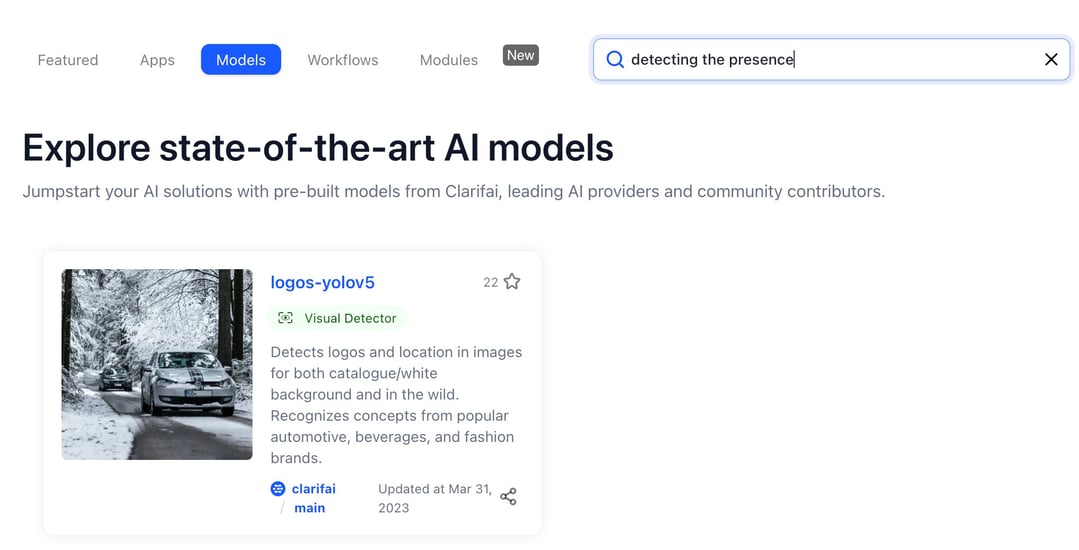

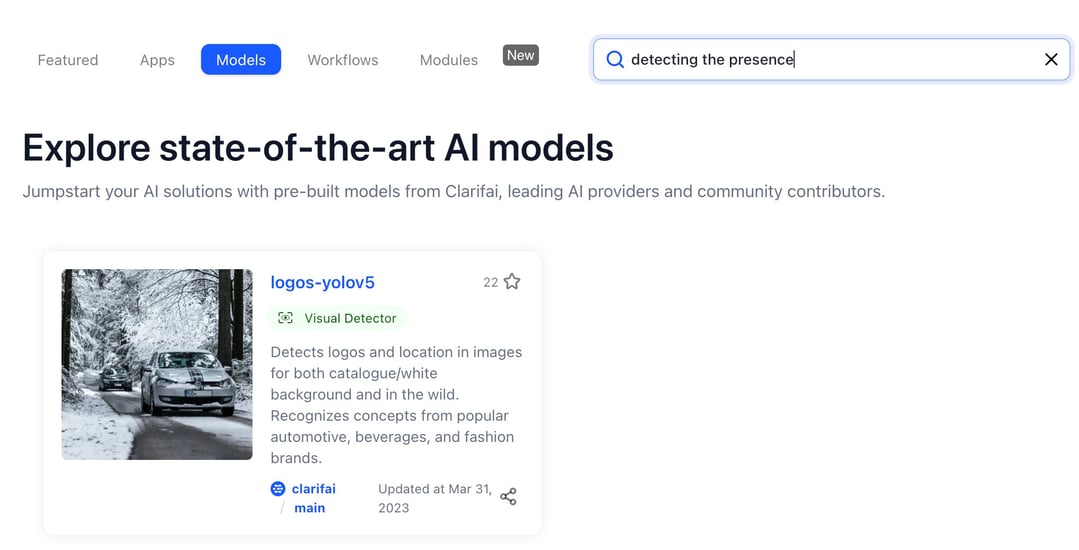

Markdown Search

Added ability to perform text-based searches within the markdown notes of apps, models, workflows, modules, and datasets

- For instance, when you visit https://clarifai.com/explore/models and input "detecting the presence" in the search field, the results will include any relevant resource where the phrase "detecting the presence" is found within the markdown notes. This feature enhances search functionality and allows for more precise and context-aware discovery of resources based on the content within their markdown notes.
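The matching behavior described above can be approximated with a simple phrase search. The sketch below is illustrative only and says nothing about how the platform's search is actually built: `search_notes` and the `name`/`notes` fields are hypothetical, and matching is a plain case-insensitive substring check with whitespace normalized.

```python
def search_notes(resources, phrase):
    """Return names of resources whose markdown notes contain the phrase."""
    # Lowercase and collapse whitespace so line breaks in notes do not block a match.
    needle = " ".join(phrase.lower().split())
    matches = []
    for resource in resources:
        notes = " ".join(resource.get("notes", "").lower().split())
        if needle and needle in notes:
            matches.append(resource["name"])
    return matches


# Hypothetical resources with markdown notes.
resources = [
    {"name": "face-detector", "notes": "A model for **detecting the\npresence** of faces."},
    {"name": "ocr-workflow", "notes": "Extracts text from scanned documents."},
]
print(search_notes(resources, "detecting the presence"))
```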

Cover Image

Added cover image support for various types of resources, including apps, datasets, models, workflows, and modules

- This feature adds a visually engaging element to your resources, making them more appealing and informative. You can also delete the image if you want to.