Here at Clarifai, we have a lot of basketball fans, so if you’re like us, springtime can only mean one thing: NCAA March Madness.

Every year, 68 college basketball teams from Division I of the National Collegiate Athletic Association (NCAA) compete for the coveted national championship, a field size the tournament has used since 2011. Of course, going hand in hand with March Madness games are March Madness pools, where participants compete to see who can most accurately predict the tournament’s bracket.

As an artificial intelligence (AI) company, we might be expected to use AI to win our own March Madness pool (after all, AI’s value for data analysis is indisputable). Instead, Sam Dodge, one of Clarifai’s Senior Research Scientists, explains below how “dumb luck” (and a little math) won him the whole thing, and why computer vision's accuracy comes from much more than chance.

Tell us about how you decided on your March Madness strategy.

Our office pool was free to enter and awarded cash prizes, so it was a no-brainer to enter a bracket. Unfortunately, I have not watched any college basketball this year, so I had no reason to favor one team over another. So, as with most difficult life decisions, I left the choice of my bracket to a random number generator :)

How did the “algorithm” decide who would make the final?

It was basically the computer randomly guessing who wins each game, with the guess weighted by the seeds.

So let's say we have a 1 seed play a 16 seed. I defined the probability of the 1 seed winning as 1 - (1 / (16 + 1)) = 0.94. The probability of the 16 seed winning is 1 - (16 / (16 + 1)) = 0.06. Then, for each matchup, I generated a random number from 0.0 to 1.0. If this number is less than 0.94, the 1 seed wins; if not, the 16 seed wins. I could have made this better by computing the probabilities from historical data.
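Here is a rough sketch of that coin flip in Python. It is not Sam's actual code. The function names `win_probability` and `pick_winner` are made up for illustration, and extending the 1-vs-16 formula to any pair of seeds as 1 - a / (a + b) is an assumption that simply matches the two numbers above.

```python
import random


def win_probability(seed_a, seed_b):
    """Chance that seed_a beats seed_b (assumed general form of the 1-vs-16 example)."""
    return 1 - seed_a / (seed_a + seed_b)


def pick_winner(seed_a, seed_b, rng=random):
    """Draw a number in [0.0, 1.0) and return the seed picked to win."""
    if rng.random() < win_probability(seed_a, seed_b):
        return seed_a
    return seed_b


# Fixed RNG seed only so the sketch is repeatable.
rng = random.Random(2019)

# Simulate the same 1-vs-16 matchup many times to see how often the upset is picked.
picks = [pick_winner(1, 16, rng) for _ in range(10_000)]
upset_rate = picks.count(16) / len(picks)

print(round(win_probability(1, 16), 2))  # 0.94
print(upset_rate)
```

Over many simulated matchups the 16 seed is picked roughly 6% of the time, which is the "little randomness" that lets lower seeds through.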

One reason why this worked well is that the seeds were pretty good. Then you add a little randomness to allow the possibility for lower seeds to win. But in the end, the most important element here is pure dumb luck. As I said, I did not watch any college basketball this season, so I had no knowledge of which teams are good.

So, how is this different from a computer vision model?

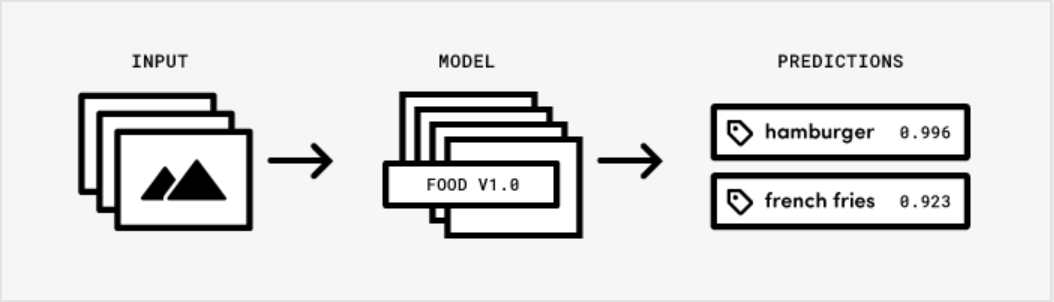

I would say this is not similar to a computer vision model at all. Computer vision models aren't just making random picks. We want our models to give the same predictions every time for the same image.

Some computer vision algorithms use randomness. For instance, when we train custom models, we may start with a model that has completely random weights (also called parameters) and then optimize those weights using the data.

It is actually important to start with random weights in the model. If we set the starting parameters to all the same values, the network would get “stuck” because all of the weights would update in the same way. So we usually randomly initialize models to provide an unbiased starting point that doesn’t have a symmetry problem.
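To make the “stuck” problem concrete, here is a toy sketch in plain Python. It assumes a tiny network with two hidden units and a squared-error loss, and the helper name `hidden_gradients` is hypothetical. It is not how production models are built; it only shows that identical starting weights produce identical updates.

```python
import math
import random


def hidden_gradients(w_hidden, w_out, x, target):
    """Gradient of 0.5 * (y - target)^2 with respect to each hidden unit's weights,
    for the toy net y = sum_j w_out[j] * tanh(w_hidden[j] . x)."""
    acts = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    y = sum(wo * a for wo, a in zip(w_out, acts))
    err = y - target
    grads = []
    for wo, a in zip(w_out, acts):
        # Chain rule: err * output weight * tanh derivative * input value.
        grads.append([err * wo * (1 - a * a) * xi for xi in x])
    return grads


x, target = [1.0, -2.0], 1.0

# Every weight starts at the same value: both hidden units get the same gradient.
same = hidden_gradients([[0.5, 0.5], [0.5, 0.5]], [0.5, 0.5], x, target)

# Random starting weights: the hidden units get different gradients.
rng = random.Random(0)
rand_hidden = [[rng.uniform(-1, 1) for _ in x] for _ in range(2)]
rand_out = [rng.uniform(-1, 1) for _ in range(2)]
different = hidden_gradients(rand_hidden, rand_out, x, target)

print(same[0] == same[1])            # identical updates, units stay copies of each other
print(different[0] == different[1])  # updates differ, units can specialize
```

With identical starting values the two hidden units receive exactly the same update at every step, so they never learn different things. Random initialization breaks that tie.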

It’s possible to not start with random weights and start with the weights from another model instead, which we do often. Starting from another model helps because that other model may have learned things that are useful for the new task.

How do you optimize these parameters?

A model is made of weights, or parameters, which determine its output. We “train” these weights using labeled example images. For example, if we show a randomly initialized model an image of a person, but the model’s output is “dog” (i.e., it predicts the person is a dog), we want to change the model weights to give an output closer to “person.” We do this by making small changes to the weights over and over again in the appropriate direction (i.e., we try to decrease the error until it’s 0%). This is what is happening when we train a model. The only tricky part is that the output is a complex non-linear function of the weights, so it might be difficult to get the error to 0% for all of the person images, which is why models occasionally make mistakes.
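The "small changes over and over" loop can be sketched with a deliberately tiny stand-in for a vision model. Everything here is an assumption for illustration: the three-number "images" are made up, the model is a simple logistic classifier rather than a deep network, and the names `predict` and `mean_loss` are hypothetical.

```python
import math
import random


def predict(weights, features):
    """Probability that the image shows a person (logistic model)."""
    z = sum(w * f for w, f in zip(weights, features))
    return 1 / (1 + math.exp(-z))


# Made-up three-number "images": (features, label), with 1 = person and 0 = dog.
examples = [([1.0, 0.9, 0.1], 1), ([1.0, 0.8, 0.3], 1),
            ([1.0, 0.2, 0.9], 0), ([1.0, 0.1, 0.7], 0)]


def mean_loss(weights):
    """Average cross-entropy error over the labeled examples."""
    total = 0.0
    for features, label in examples:
        p = predict(weights, features)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(examples)


rng = random.Random(0)
weights = [rng.uniform(-0.5, 0.5) for _ in range(3)]  # random starting point
loss_before = mean_loss(weights)

learning_rate = 0.5
for _ in range(200):
    for features, label in examples:
        # How far the prediction is from the label; also the loss gradient w.r.t. z.
        error = predict(weights, features) - label
        # Nudge each weight a small step in the direction that shrinks the error.
        weights = [w - learning_rate * error * f for w, f in zip(weights, features)]

loss_after = mean_loss(weights)
print(loss_before, loss_after)
```

In this toy case the error keeps shrinking but never reaches exactly zero. Real images are much messier, which is part of why a trained model can still get some of them wrong.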

With computer vision, the goal is to emulate human vision so computers can see as well as we can. Having that ability lets computers take over many rote image classification tasks, giving us more time to focus on jobs that require human ingenuity (like trying our luck).