######################################################################################################
# In this section, we set the user authentication, user and app ID, model details, and the text
# we want to use as input. Change these strings to run your own example.
######################################################################################################

# Your PAT (Personal Access Token) can be found in the portal under Authentication
PAT = ''
# Specify the correct user_id/app_id pairings
# Since you're making inferences outside your app's scope
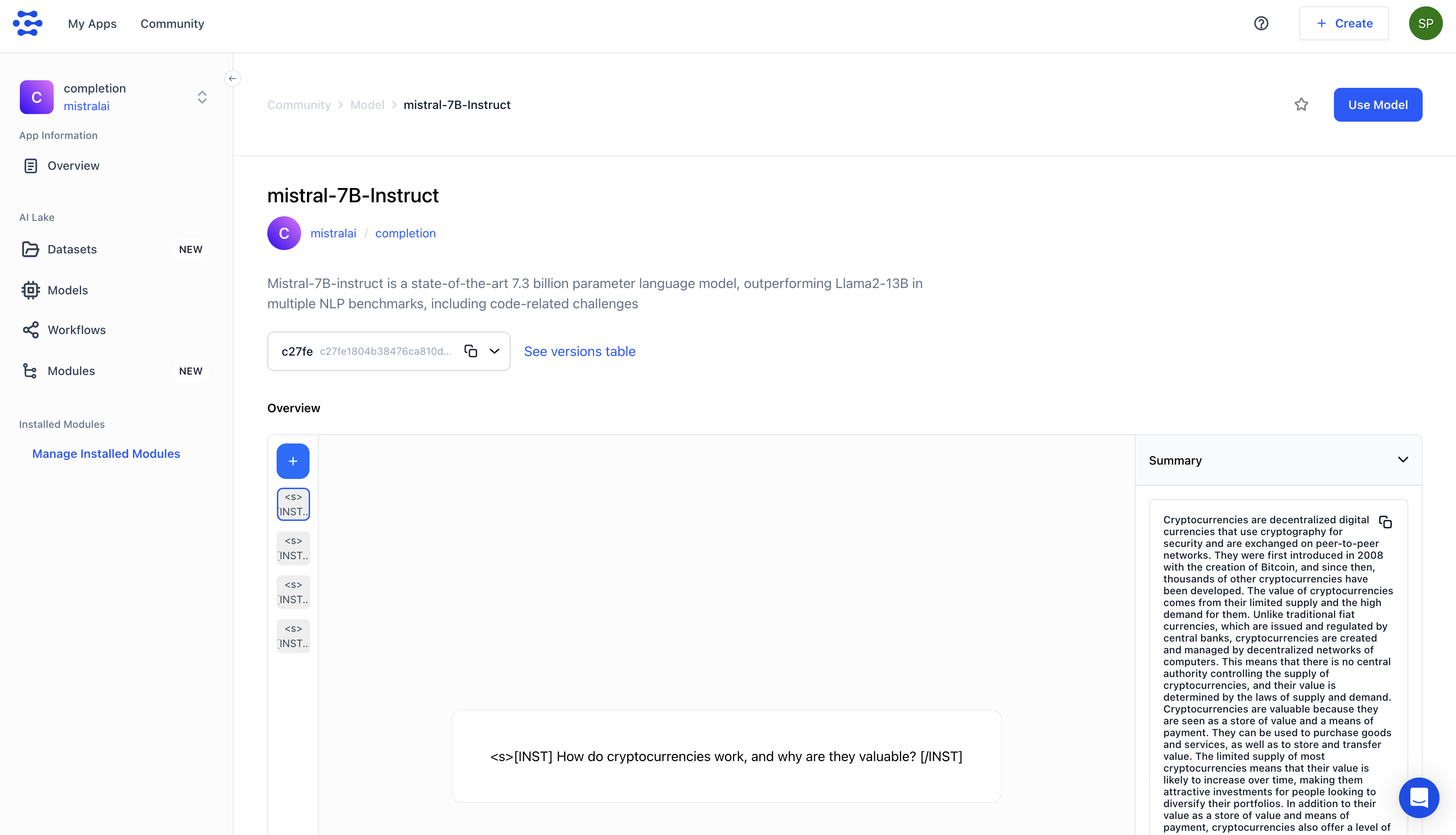

USER_ID = 'mistralai'
APP_ID = 'completion'
# Change these to whatever model and input text you want to use
MODEL_ID = 'mistral-7B-Instruct'
MODEL_VERSION_ID = 'c27fe1804b38476ca810dd85bd997a3d'
RAW_TEXT = 'Write a tweet on future of AI'
# To use a hosted text file, assign the URL variable
# TEXT_FILE_URL = 'https://samples.clarifai.com/negative_sentence_12.txt'
# Or, to use a local text file, assign the location variable
# TEXT_FILE_LOCATION = 'YOUR_TEXT_FILE_LOCATION_HERE'

############################################################################
# YOU DO NOT NEED TO CHANGE ANYTHING BELOW THIS LINE TO RUN THIS EXAMPLE
############################################################################

from clarifai_grpc.channel.clarifai_channel import ClarifaiChannel
from clarifai_grpc.grpc.api import resources_pb2, service_pb2, service_pb2_grpc
from clarifai_grpc.grpc.api.status import status_code_pb2

channel = ClarifaiChannel.get_grpc_channel()
stub = service_pb2_grpc.V2Stub(channel)

metadata = (('authorization', 'Key ' + PAT),)

userDataObject = resources_pb2.UserAppIDSet(user_id=USER_ID, app_id=APP_ID)

# To use a local text file, uncomment the following lines
# with open(TEXT_FILE_LOCATION, "rb") as f:
#     file_bytes = f.read()

post_model_outputs_response = stub.PostModelOutputs(
    service_pb2.PostModelOutputsRequest(
        user_app_id=userDataObject,  # The userDataObject is created in the overview and is required when using a PAT
        model_id=MODEL_ID,
        version_id=MODEL_VERSION_ID,  # This is optional. Defaults to the latest model version
        inputs=[
            resources_pb2.Input(
                data=resources_pb2.Data(
                    text=resources_pb2.Text(
                        raw=RAW_TEXT
                        # url=TEXT_FILE_URL
                        # raw=file_bytes
                    )
                )
            )
        ]
    ),
    metadata=metadata
)

if post_model_outputs_response.status.code != status_code_pb2.SUCCESS:
    print(post_model_outputs_response.status)
    raise Exception(f"Post model outputs failed, status: {post_model_outputs_response.status.description}")

# Since we have one input, one output will exist here
output = post_model_outputs_response.outputs[0]

print("Completion:\n")
print(output.data.text.raw)
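
The example assigns the PAT as a string literal (`PAT = ''`), which is easy to commit to version control by accident. As a minimal sketch, the token could instead be read from an environment variable and turned into the same `('authorization', 'Key ' + PAT)` metadata tuple used in the example. The helper names `load_pat` and `build_auth_metadata` are hypothetical, and the variable name `CLARIFAI_PAT` is an assumption chosen for illustration; neither comes from the original example.

```python
import os


def load_pat(env_var: str = "CLARIFAI_PAT") -> str:
    """Read the PAT from an environment variable.

    The default variable name is an assumption for this sketch.
    """
    pat = os.environ.get(env_var, "").strip()
    if not pat:
        raise ValueError(f"Set the {env_var} environment variable to your PAT")
    return pat


def build_auth_metadata(pat: str) -> tuple:
    """Build the gRPC metadata tuple in the same shape the example uses."""
    if not pat:
        raise ValueError("PAT must not be empty")
    return (("authorization", "Key " + pat),)
```

With these in place, `PAT = load_pat()` and `metadata = build_auth_metadata(PAT)` would stand in for the two corresponding assignments, and an empty token fails early with a clear message instead of surfacing later as a failed request.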