MPT-7B-Instruct is a model for short-form instruction following from MosaicML. It is built by fine-tuning the base MPT-7B model.

You can now access the MPT-7B-Instruct model with the Clarifai API.

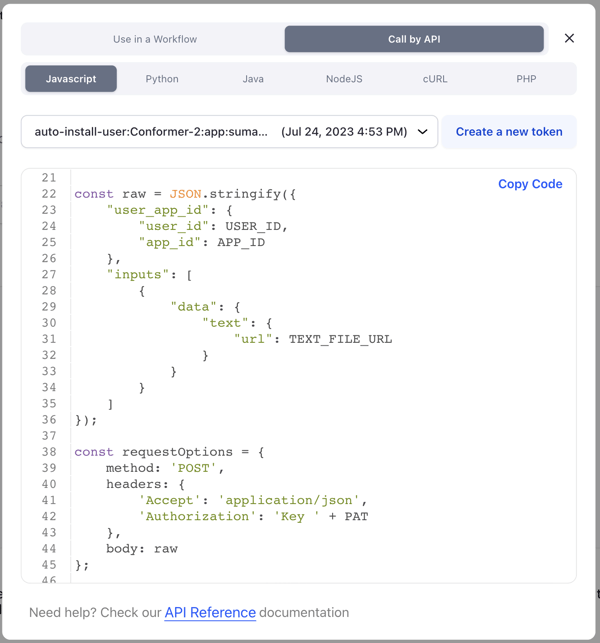

Running the MPT-7B-Instruct model with JavaScript

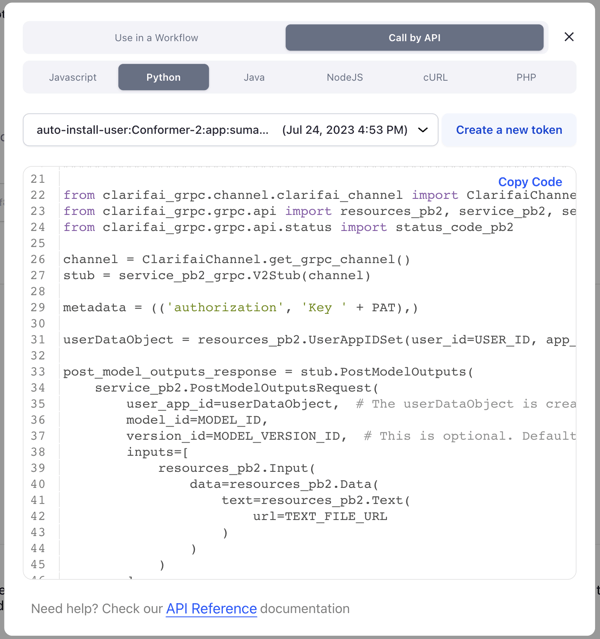

Running the MPT-7B-Instruct model with Python

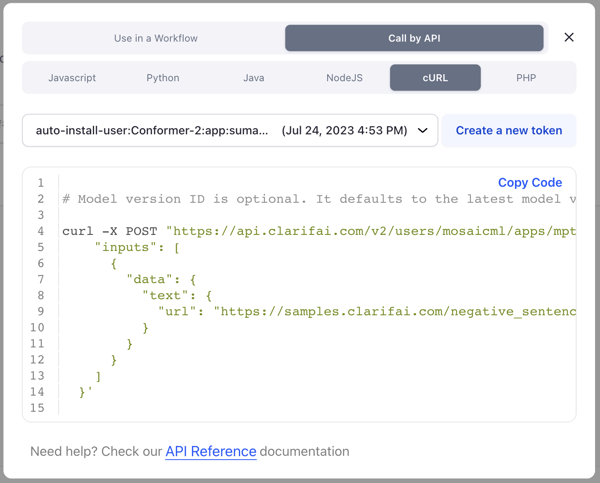

Running the MPT-7B-Instruct model with cURL

Model Demo

More details of the MPT-7B-Instruct model

Use cases of the model

Evaluation

Limitations

You can run the MPT-7B-Instruct model on Clarifai using JavaScript:

You can run the MPT-7B-Instruct model on Clarifai using Python:
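A minimal sketch of such a call, using only the Python standard library against Clarifai's REST endpoint rather than an official client library. The user, app, and model IDs are assumptions read off the model URL (`clarifai.com/mosaicml/mpt/models/mpt-7b-instruct`); the helper names `build_request` and `run_model` are hypothetical, and a valid Clarifai personal access token (PAT) is assumed.

```python
import json
import urllib.request

# Assumption: these IDs are taken from the model's public URL on clarifai.com.
USER_ID = "mosaicml"
APP_ID = "mpt"
MODEL_ID = "mpt-7b-instruct"
API_BASE = "https://api.clarifai.com/v2"


def build_request(pat, prompt, version_id=None):
    """Build (but do not send) a POST request to the model's outputs endpoint.

    The version segment is only added when version_id is given, since the
    model version ID is optional.
    """
    url = f"{API_BASE}/users/{USER_ID}/apps/{APP_ID}/models/{MODEL_ID}"
    if version_id:
        url += f"/versions/{version_id}"
    url += "/outputs"
    body = {"inputs": [{"data": {"text": {"raw": prompt}}}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Key {pat}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_model(pat, prompt, version_id=None):
    """Send the request and return the generated text of the first output."""
    with urllib.request.urlopen(build_request(pat, prompt, version_id)) as resp:
        payload = json.load(resp)
    return payload["outputs"][0]["data"]["text"]["raw"]
```

Calling `run_model("YOUR_PAT", "Write a haiku about autumn.")` would send the prompt and return the model's text, provided the token is valid. Keeping request construction separate from sending makes the URL and payload easy to inspect before any network call is made.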

You can run the MPT-7B-Instruct model on Clarifai using cURL/HTTP:

The model version ID is optional. If it is omitted, the latest model version is used.

You can also run the MPT-7B-Instruct model using other Clarifai client libraries, such as Java, Node.js, and PHP.

Try out the model here: https://clarifai.com/mosaicml/mpt/models/mpt-7b-instruct

MPT-7B-Instruct is a decoder-style transformer with 6.7B parameters. Its base model, MPT-7B, was trained from scratch on 1 trillion tokens of text and code curated by MosaicML's data team; MPT-7B-Instruct was then fine-tuned from that base for instruction following.

MPT-7B-Instruct is designed for short-form instruction-following tasks. It is particularly suited to applications in which the model must accurately process and follow natural-language instructions. Potential use cases for MPT-7B-Instruct include:

- Language Understanding: The model can understand and follow textual instructions for tasks such as converting YAML to JSON.

- Automation: It can be utilized for automated tasks that rely on human-readable instructions, such as data preprocessing, text generation, or content conversion.

- Chatbot and Dialogue Systems: MPT-7B-Instruct can be used as a component in chatbot-like models to process and respond to user instructions effectively.
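As an illustration of the YAML-to-JSON use case, the sketch below wraps an instruction in a Dolly-style prompt template. The template text and the helper name `format_instruction` are assumptions: instruction-tuned models of this kind are commonly prompted this way, but whether the Clarifai-hosted model expects or already applies such a template is not stated here, so treat it as a starting point to experiment with.

```python
# Assumption: a Dolly-style instruction template; not confirmed for the hosted model.
INTRO = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)


def format_instruction(instruction: str) -> str:
    """Wrap a plain instruction in the assumed instruction/response template."""
    return f"{INTRO}\n### Instruction:\n{instruction}\n### Response:\n"


# Example input for the YAML-to-JSON conversion use case.
yaml_snippet = "name: mpt\nsize: 7b"
prompt = format_instruction("Convert the following YAML to JSON:\n" + yaml_snippet)
```

The resulting `prompt` string can be sent as the raw text input of a model request; comparing outputs with and without the template is a quick way to check whether it helps.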

MPT-7B-Instruct's performance was evaluated using a combination of internal benchmarks and industry-standard evaluation methodologies. The model's ability to accurately follow instructions and generate appropriate outputs was assessed on various instruction-following tasks. Additionally, zero-shot performance on standard academic tasks was compared against other open-source models to establish its quality and capabilities.

While MPT-7B-Instruct is a powerful model for instruction-following tasks, it does have certain limitations that users should be aware of:

- Language Dependency: MPT-7B-Instruct's performance may vary across languages; it is strongest on English natural-language text.

- Context Length: Although the model is optimized to handle longer inputs than some other open-source models, there may still be practical limits on the length of instructions it can process effectively.

- Specificity of Instructions: Like any language model, MPT-7B-Instruct may need precise, well-formulated instructions to process requests and generate output accurately.

© 2023 Clarifai, Inc. Terms of Service Content TakedownPrivacy Policy
