In the rapidly evolving landscape of artificial intelligence (AI), open source foundation models are emerging as a driving force for innovation and democratization. While tech giants once held the reins with proprietary models, the collaborative power of the open-source community is now challenging the status quo.

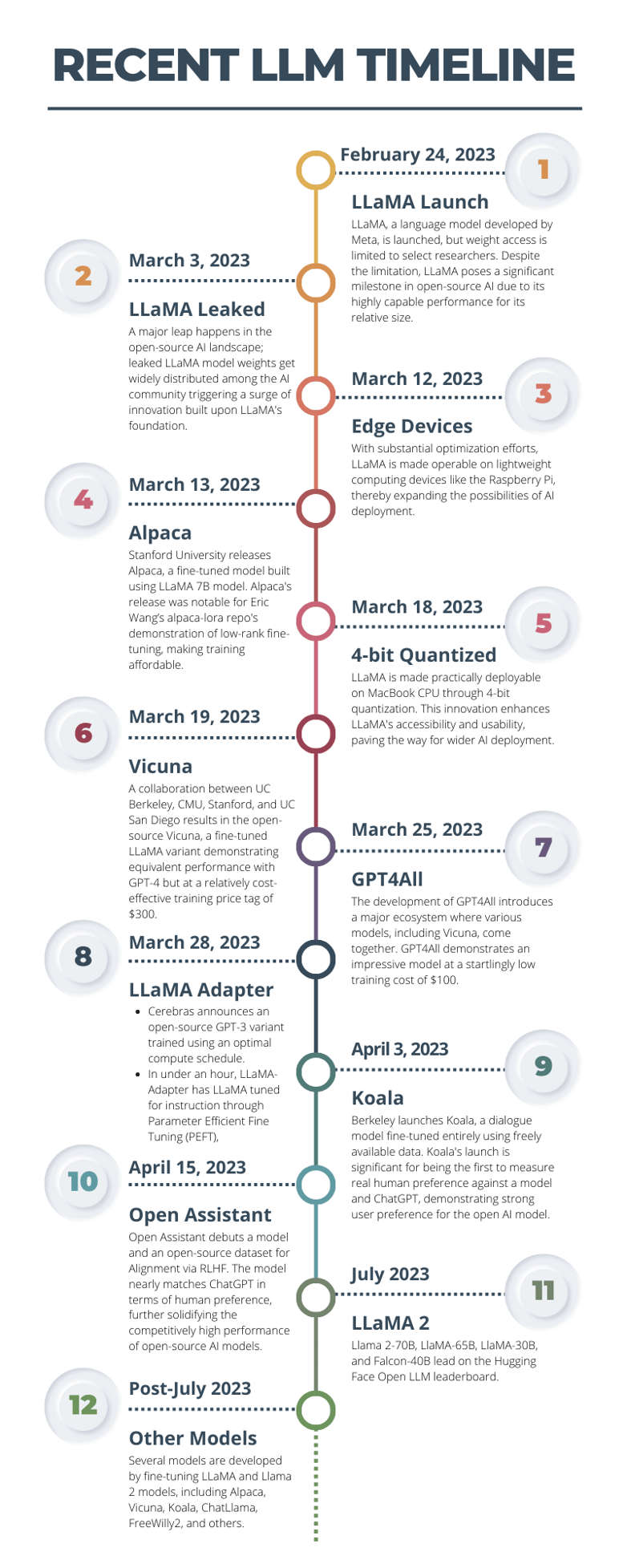

Large Language Models (LLMs), exemplifying this paradigm shift, are breaking free from the confines of a few corporations and unleashing immense potential for customization and widespread adoption. The leak of Meta's LLaMA models shortly after their limited release in February 2023 was a turning point, spurring a wave of creativity and breakthroughs within the open-source community.

In this blog, we explore the significance of open-source foundation models, focusing on the LLaMA journey and the remarkable advancements they bring to the forefront of AI development.

The Rise of Open-Source Foundation Models: Empowering AI Innovation and Democratization

Open-source foundation models become more and more important every day. Proprietary models backed by tech giants such as Google and OpenAI once led the way, but in recent times, they've been increasingly challenged by the collective power of the open-source community.

Foundation models such as Large Language Models (LLMs) embody the themes of this shift in the AI landscape. This change in dynamics, from holding onto tightly controlled proprietary models to embracing the openness of information sharing and community-based innovation, promises a ripple of potential benefits across the board. By unshackling these foundation models from the confines of a few tech corporations, the potential for large-scale innovation, customization, and democratization of AI vastly increases.

In May 2023, a leaked memo from a Google researcher cast doubt on the company’s future in AI, stating that it has “no moat” in the industry. In this memo, a “moat” referred to a competitive advantage that a company has over other companies in the same industry, making it hard for competitors to gain market share. The researcher stated that despite their prominence, neither Google nor OpenAI has such a moat, implying that they lack unique, exclusive features or advantages in their AI models. Open source foundation models, the memo claimed, were about to eat their lunch.

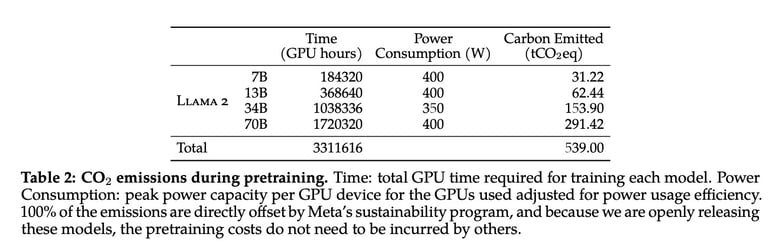

While this argument has merit, it fails to address one important point: the success of open source foundation models has so far rested on models that were created by corporations and then either leaked or intentionally released as open-source. The reason is cost. Leaked, unconfirmed details of the current state-of-the-art LLM, GPT-4, indicate that the model has about 1.8 trillion parameters across 120 layers and cost roughly $63 million to train. The LLaMA 2 models we’re about to discuss used a total of 3.3 million GPU hours to train. One might say that’s at least somewhat of a moat.

Credit: LLaMA 2 paper

One episode that exemplifies how open source builds on commercial models is the LLaMA (Large Language Model Meta AI) series: powerful LLMs developed by Meta that were first released unintentionally and later released officially.

Unintended Impact: The Accidental Release of LLaMA and its Consequences

The eyes of the AI community turned towards Meta in February 2023, when it launched the LLaMA (Large Language Model Meta AI) series. LLaMA was a promising leap in the field of AI models. However, Meta intended to keep the models under its control, granting access only to approved researchers under a noncommercial license. In an unexpected turn of events, the model weights were leaked online about a week after the release.

The initial reactions within the community ranged from shock to disbelief. Not only did the accidental release violate Meta's ownership and control over LLaMA, but it also raised concerns about the potential misuse of such advanced models. Furthermore, it raised questions about legal and ethical challenges related to the unauthorized use of proprietary content.

Yet, the accidental release of LLaMA set in motion a surge of innovation within the open-source community. As developers and researchers worldwide acquired the model, its potential applications began to unfold. Innovations started appearing rapidly, with model improvements, quality upgrades, multimodality, and many more advancements conceived within a remarkably short span after the release.

Meta's Proactive Move: The Evolution and Advancements of LLaMA 2

As the wave of innovation swept through the open-source community following the unintended release of the original LLaMA, Meta decided to harness this sudden boom in creativity and engagement. Instead of fighting to regain control, the company chose to lead the way by officially releasing the second iteration of the model, LLaMA 2.

By making LLaMA 2 freely available for both research and commercial use, Meta unlocked the potential for countless developers and researchers to contribute to its development. The significance of LLaMA 2 was underlined by its impressive parameter count and heightened performance capabilities, rivaling many closed-source models.

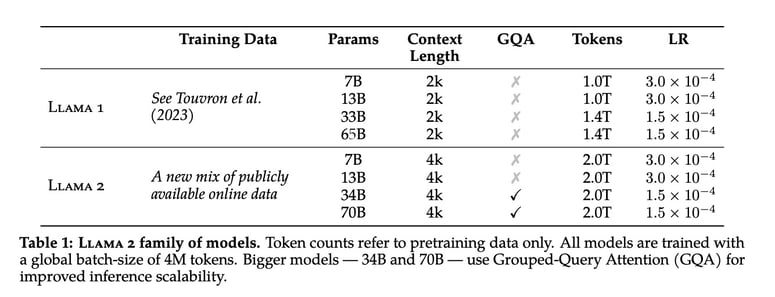

The rollout of LLaMA 2 brings an array of pre-trained and fine-tuned Large Language Models (LLMs) ranging in scale from 7 billion (7B) to 70 billion (70B) parameters. These models are a notable advance over their LLaMA 1 counterparts: they were trained on 40% more tokens, double the context length to 4,096 tokens, and use grouped-query attention to make inference with the 70B model more efficient.
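To see why grouped-query attention helps at inference time, it is useful to look at the key/value cache. In grouped-query attention, several query heads share one key/value head, so fewer key and value tensors have to be kept in memory per token. The sketch below is a back-of-the-envelope calculation only. The function name and the configuration values (80 layers, 64 query heads, 8 key/value heads, head dimension 128, 16-bit values) are illustrative assumptions for a 70B-class model and do not come from this article.

```python
# Rough KV-cache sizing: multi-head attention vs. grouped-query attention.
# All configuration values below are illustrative assumptions.

def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: one cached tensor for keys and one for values.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value

N_LAYERS = 80          # assumed transformer depth
N_QUERY_HEADS = 64     # assumed number of query heads
N_KV_HEADS_GQA = 8     # assumed number of shared key/value heads
HEAD_DIM = 128         # assumed per-head dimension
SEQ_LEN = 4096         # context length discussed above

# Multi-head attention keeps one key/value head per query head.
mha = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# Grouped-query attention keeps only the shared key/value heads.
gqa = kv_cache_bytes(N_LAYERS, N_KV_HEADS_GQA, HEAD_DIM, SEQ_LEN)

print(f"multi-head:    {mha / 2**30:.2f} GiB per sequence")
print(f"grouped-query: {gqa / 2**30:.2f} GiB per sequence")
print(f"reduction:     {mha // gqa}x")
```

Under these assumptions the cache shrinks by the ratio of query heads to key/value heads, which leaves more memory for longer contexts or larger batches.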

Yet what holds real promise in this release is the set of fine-tuned models tailored for dialogue applications, LLaMA 2-Chat. These models have been optimized via Reinforcement Learning from Human Feedback (RLHF), leading to measurable improvements on safety and helpfulness benchmarks. Notably, in human evaluations, LLaMA 2-Chat models outperform numerous open models and are competitive with ChatGPT.

The LLaMA Revolution: Unleashing Open-Source AI Advancements

The transition from the accidental release of the original LLaMA to the official release of LLaMA 2 heralds an unprecedented shift in the AI landscape. First, it has revitalized the culture of open-source development. An influx of new ideas and improvements has emerged now that developers worldwide can experiment with, iterate upon, and contribute to the LLaMA models.

Democratizing access to such advanced AI models has leveled the playing field. The open-source versions of LLaMA models enable individuals, smaller companies, and researchers with limited resources to participate in AI development using state-of-the-art tools, thus erasing the monopoly previously held by giant tech companies.

Finally, the completely open nature of LLaMA models means that any upgrades, improvements, and innovations will be immediately available to all. This cyclic flow of shared knowledge stimulates a healthy feedback loop, propelling the overall pace and breadth of AI advancements to unprecedented levels.

This so-called "LLaMA revolution" thus presents a compelling blueprint for AI's collective, collaborative future. And while questions about ethical and safety implications remain, there is no ignoring the whirlwind of innovation that has been unleashed.

OpenAI and Meta embarked on two unique paths. Initially, OpenAI was deeply rooted in ethical considerations and held lofty aspirations for worldwide change. However, as time went on, they became more inward-focused, shifting away from a spirit of open innovation. This shift towards a more closed approach drew criticism, especially due to their rigid stance on AI development. On the other hand, Meta started with a more restricted approach, which many criticized for its narrow AI practices. Yet, surprisingly, Meta's approach had a significant impact on the AI industry, particularly through its instrumental role in the development of PyTorch.

Much of this innovation has been driven by Low-Rank Adaptation (LoRA), a technique that has been instrumental in fine-tuning sizable AI models cost-effectively. Introduced in 2021 by Edward Hu and colleagues at Microsoft, LoRA freezes the pre-trained model's weights and trains only a small low-rank update that is added to them. This greatly reduces the number of trainable parameters and the computational requirements of fine-tuning, making it more efficient and affordable.

The role of LoRA is vital in the broader development and progression of open-source AI. Stanford's Alpaca, an instruction-following model fine-tuned from LLaMA 7B, showed that a capable model could be built on top of LLaMA at low cost. The community project Alpaca-LoRA, by Eric Wang, then reproduced Alpaca using LoRA, demonstrating that such fine-tuning could be carried out affordably and with great performance.
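The idea behind LoRA can be illustrated with a few lines of plain Python. This is a minimal sketch for intuition, not the reference implementation: the helper names (`matmul`, `lora_forward`, `lora_param_counts`) and the names `down` and `up` for the two low-rank factors are hypothetical, and real fine-tuning would use a tensor library and a training loop. The frozen weight matrix `w` is never modified; only the two small factors would be trained.

```python
# Minimal LoRA sketch in pure Python. All helper names are hypothetical.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def lora_forward(x, w, down, up, alpha, r):
    """Compute x @ w + (alpha / r) * (x @ down @ up).

    x:    (n, d_in) inputs
    w:    (d_in, d_out) frozen pre-trained weights
    down: (d_in, r) trainable factor
    up:   (r, d_out) trainable factor, typically initialized to zeros
    """
    base = matmul(x, w)                    # frozen path
    update = matmul(matmul(x, down), up)   # low-rank path, rank at most r
    scale = alpha / r
    return [[p + scale * q for p, q in zip(base_row, upd_row)]
            for base_row, upd_row in zip(base, update)]

def lora_param_counts(d_in, d_out, r):
    """Trainable parameters: full fine-tuning vs. LoRA for one weight matrix."""
    return d_in * d_out, r * (d_in + d_out)

x = [[1.0, 2.0]]
w = [[1.0, 0.0], [0.0, 1.0]]
down = [[1.0], [1.0]]
up = [[0.5, -0.5]]
print(lora_forward(x, w, down, up, alpha=2, r=1))

full, lora = lora_param_counts(4096, 4096, 8)
print(f"full: {full:,} trainable values, LoRA (r=8): {lora:,}")
```

For a single 4096 by 4096 weight matrix with rank 8, the low-rank factors hold 65,536 values against 16,777,216 for the full matrix, which is why the technique fits on far more modest hardware. The dimensions and rank here are illustrative assumptions.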

How Does Meta Benefit from Making Models Open-Source?

Meta benefits significantly from the innovation that the open-source community can spur. When AI systems are made open-source, developers and researchers worldwide can experiment with and improve them. This collective wisdom and ingenuity of the global AI community can drive the technology forward at a pace and breadth impossible for a single organization to achieve. Open-sourcing their models can also drive economies of scale and effectiveness. It allows for consolidating computational resources, minimizing redundancies in system design, and preventing the proliferation of isolated projects. This potentially reduces computational resource costs and the associated carbon footprint and opens a pathway for extensive safety and performance improvements.

Credit: LLaMA 2 paper

Making LLMs more widely available for commercial use will likely drive product integrations. The increased ability of other businesses to leverage Meta's LLaMA models in numerous applications could also indirectly feed back into Meta's products and services by surfacing new use cases and integration points.

Meta's contribution to open-source AI reinforces its position as a leader in AI advancement. The company is committed to furthering technological progress for the collective benefit rather than limiting advancements to its proprietary use. This can generate goodwill in the developer community towards Meta, strengthening its presence and influence within the ecosystem.

While open-sourcing LLaMA does bring up several challenges, the move also provides substantial benefits and opportunities for Meta. It ensures Meta remains not just a contributor, but a driver, in the ongoing journey of AI advancement. Perhaps most importantly, it strikes a huge blow to the first-mover advantage of OpenAI’s ChatGPT models, which caught the entire industry off guard when ChatGPT appeared in late 2022.

Future Perspectives and Obstacles

As we move towards the widespread use of open source foundation models like LLaMA, one of the most pivotal aspects to consider is the potential consequences and challenges that this shift might introduce. These surges in technological advancement undeniably come with their fair share of obstacles, spanning the arenas of safety, ethics, and potential misuse of information.

In exploring future perspectives, it's essential to note Meta's consistent emphasis on open research and community collaboration. They have a robust history of open-sourcing code, datasets, and tools, facilitating exploratory research and large-scale production deployment for prominent AI labs and companies. Meta's open releases have spurred research in model efficiency, medicine, and conversational safety studies, further amplifying the value of shared resources.

Despite these strides, Meta recognizes the potential risks and societal implications of openly releasing powerful AI models like LLaMA. Although they have put prohibitions against illegal or harmful use cases, misuse of these models remains a significant threat. The democratization of access to advanced LLaMA models will put such technology into more hands, increasing the possibility of unregulated use.

As these models become integrated with an array of commercial products, this responsibility of ethical and safe use also extends to developers leveraging these models. In anticipation of potential privacy- and content-related risks, Meta stresses the need for developers to follow best practices for responsible development. As new products are built and deployed, managing these risks becomes a shared responsibility among all stakeholders in the AI field.

Other logistical and environmental challenges have emerged. The compute costs for pretraining such Large Language Models (LLMs) are prohibitively high for smaller organizations. Also, an unchecked proliferation of large models could increase the sector's carbon footprint.

The silver lining here lies in Meta's apparent belief in collective wisdom and the ingenuity of the AI-practitioner community. Meta contends that adopting an open approach will yield the benefits of this technology and ensure its safety. The shared responsibility within the community can lead to better, safer models while preventing the onus of progress and safety work from falling solely on large companies' shoulders.

Navigating this new terrain of open-source foundation models will undeniably present challenges ranging from ethical boundaries to digital security. Thus, safeguarding against these risks while maximizing the benefits of such models is an undertaking that will shape the future trajectory of AI.

© 2023 Clarifai, Inc. Terms of Service Content TakedownPrivacy Policy