To an outsider looking in, the accomplishments of the machine learning field in recent years can sometimes seem like magic. Of all the technologies that leave people in awe, Generative Adversarial Networks, or GANs, come the closest to being actual magic.

They have gained massive public attention for feats such as inventing new, hyper-realistic faces, making videos of celebrities saying anything you want, and letting computers compose their own music.

What's in a Name?

The simplest way to understand how they accomplish all of this is to break down the name and dig into what each part actually means. First and foremost are the words Generative and Adversarial. Imagine a scenario with two parties: a counterfeiter and the treasury. The counterfeiter wants to make the most convincing fake money possible, so they study examples of real money, try to learn what makes a bill genuine, and then produce a counterfeit. With enough effort and experience, the counterfeiter learns what really makes a dollar bill and can produce very convincing fakes. The treasury, however, has to stay ahead of counterfeiters, so it focuses on finer security details that let it better discriminate between real bills and fakes. In other words, by constantly trying to beat each other, the counterfeiter keeps improving at making fakes, and the treasury keeps getting better at detecting them.

Much like the counterfeiter and the treasury, a GAN has two networks, the generator and the discriminator, which work toward the following objectives:

- The generator network is trained to minimize the probability that the discriminator correctly labels the generator's output as fake.

- The discriminator network is trained to maximize the probability of correctly distinguishing real data from data created by the generator network.
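The two objectives can be written as loss functions. The sketch below follows the binary cross-entropy form of the original GAN formulation (Goodfellow et al., 2014); the function names and the use of plain Python lists of discriminator probabilities are illustrative assumptions, not any particular library's API.

```python
import math

# A minimal sketch of the GAN objectives, assuming the discriminator outputs
# the probability that a sample is real. d_real holds its outputs on real
# samples; d_fake holds its outputs on generated samples. All values must lie
# strictly between 0 and 1 so the logarithms are defined.


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Loss the discriminator minimizes (binary cross-entropy).

    Minimizing it is the same as maximizing
    mean(log D(x)) + mean(log(1 - D(G(z)))), i.e. labeling real data as real
    and generated data as fake.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake: list[float]) -> float:
    """Loss the generator minimizes: mean(log(1 - D(G(z)))).

    It falls as the discriminator assigns a higher "real" probability to
    generated samples, i.e. as it becomes less likely to label them fake.
    """
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)


# A fooled discriminator (0.9 on fakes) gives the generator a lower loss
# than a discriminator that confidently spots the fakes (0.1).
print(generator_loss([0.9]) < generator_loss([0.1]))  # → True
# A discriminator that outputs 0.5 everywhere has loss 2 * log(2).
print(discriminator_loss([0.5], [0.5]))  # ≈ 1.386
```

In practice the generator is often trained with the "non-saturating" variant instead, minimizing -log D(G(z)), because log(1 - D(G(z))) provides weak gradients early in training, when the discriminator easily rejects the fakes; the original GAN paper suggests this alternative.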

Practice Makes Perfect - Training for the Win

With enough training, the theory is that the generator will get better and better until the discriminator can do no better than random guessing: in the ideal case, the generator's output is distributed exactly like the real data, and the discriminator outputs a probability of 1/2 ("real" and "fake" equally likely) for every sample. The practical result is a generator network that is very good at producing convincing fakes of almost any kind of data: images, video, audio, and more.
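The value of 1/2 comes from the optimal discriminator. For a fixed generator, the original GAN paper (Goodfellow et al., 2014) shows that the best possible discriminator outputs p_data(x) / (p_data(x) + p_g(x)), where p_data is the real-data distribution and p_g is the generator's distribution. The sketch below evaluates that formula on a hypothetical two-outcome sample space; the coin-flip distributions are made up purely for illustration.

```python
# Hypothetical distributions over a two-outcome sample space, given as
# {outcome: probability}. Real GANs work with continuous, high-dimensional
# data, but the formula applies pointwise in the same way.


def optimal_discriminator(p_data: dict[str, float], p_gen: dict[str, float]) -> dict[str, float]:
    """Best discriminator for a fixed generator: p_data / (p_data + p_gen)."""
    result = {}
    for x in sorted(set(p_data) | set(p_gen)):
        pd = p_data.get(x, 0.0)
        pg = p_gen.get(x, 0.0)
        if pd + pg > 0:  # outcomes neither distribution produces don't matter
            result[x] = pd / (pd + pg)
    return result


real = {"heads": 0.5, "tails": 0.5}
early_generator = {"heads": 0.9, "tails": 0.1}    # far from the real data
trained_generator = {"heads": 0.5, "tails": 0.5}  # matches the real data

print(optimal_discriminator(real, early_generator))    # heads ≈ 0.357, tails ≈ 0.833
print(optimal_discriminator(real, trained_generator))  # → {'heads': 0.5, 'tails': 0.5}
```

While the generator is poor, the discriminator can exploit the mismatch (here, "tails" is a strong hint of real data). Once the two distributions match, every output is exactly 0.5, so the discriminator can only guess.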

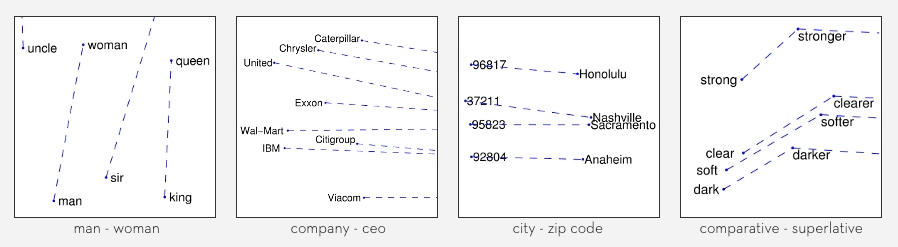

The theoretical implications of this framework are just as remarkable as the practical ones. As with other deep neural networks, the idea is that with enough computation, GANs learn relationships between subtle features and, through training, capture something of the underlying meaning of data categories. Readers familiar with word embeddings such as the GloVe representation will likely recognize the semantic substructures that allow vector arithmetic, as in the following demo:

Source: https://nlp.stanford.edu/projects/glove/

In this example from Stanford's GloVe project, we can perform vector arithmetic: we can take the vector representations of words and add or subtract them in meaningful ways that capture relationships in the underlying data. For example, man - woman is roughly equal to king - queen, and similarly for other analogous word pairs.
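The arithmetic can be sketched with toy vectors. The three-dimensional embeddings below are hand-picked, hypothetical values (real GloVe vectors are learned from data and typically have 50 to 300 dimensions), chosen so that one axis loosely encodes royalty and another gender. The analogy is solved by finding the word whose vector has the highest cosine similarity to king - man + woman, skipping the query words themselves.

```python
import math

# Hypothetical, hand-picked 3-D "embeddings" for illustration only.
# Axes (made up): [royalty, masculine-vs-feminine, person-vs-object]
EMBEDDINGS = {
    "man": [0.0, 1.0, 1.0],
    "woman": [0.0, -1.0, 1.0],
    "king": [1.0, 1.0, 1.0],
    "queen": [1.0, -1.0, 1.0],
    "prince": [0.8, 1.0, 0.9],
    "princess": [0.8, -1.0, 0.9],
    "apple": [0.0, 0.0, -1.0],
}


def add(u, v):
    return [a + b for a, b in zip(u, v)]


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), skipping a, b, c."""
    target = add(sub(EMBEDDINGS[a], EMBEDDINGS[b]), EMBEDDINGS[c])
    candidates = [w for w in EMBEDDINGS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, EMBEDDINGS[w]))


# man - woman equals king - queen in this toy space ...
man_minus_woman = sub(EMBEDDINGS["man"], EMBEDDINGS["woman"])
king_minus_queen = sub(EMBEDDINGS["king"], EMBEDDINGS["queen"])
print(man_minus_woman == king_minus_queen)  # → True
# ... so king - man + woman lands on queen.
print(analogy("king", "man", "woman"))  # → queen
```

The equality is exact here only because the values were chosen that way; with learned embeddings the relationship holds approximately, which is why the nearest neighbor by cosine similarity is used rather than an exact match.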

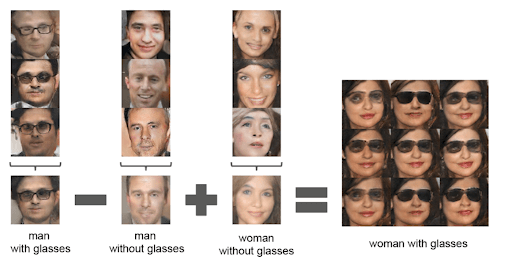

GANs can achieve a similar result, as illustrated by the following image from the seminal paper on deep convolutional GANs (DCGANs):

Source: https://arxiv.org/abs/1511.06434


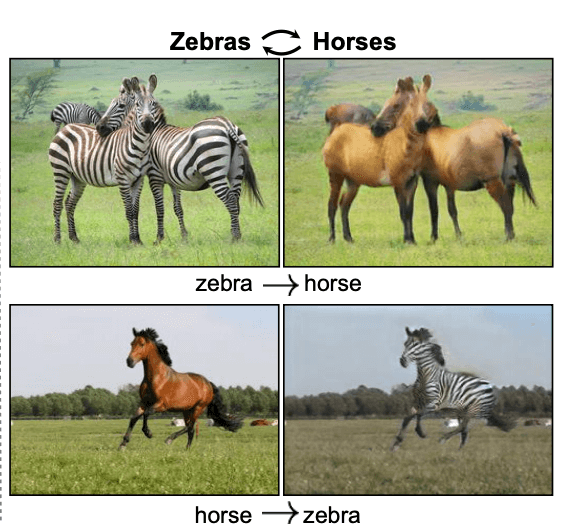

When we perform arithmetic on the generator's input noise vectors, we get outputs suggesting that GANs learn a meaningful underlying representation of the data set. Note that this differs from style transfer models, which are trained specifically to perform a transformation rather than relying on post-hoc vector arithmetic. Those models also produce exciting results, as in the famous zebra-to-horse example:

Source: https://arxiv.org/pdf/1703.10593.pdf

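Returning to the DCGAN example: there, the arithmetic is performed on the generator's input noise vectors rather than on word vectors, and the DCGAN paper averages the noise vectors of several exemplars per concept (for instance, smiling woman - neutral woman + neutral man) before combining them. The sketch below shows only that bookkeeping. The 100-dimensional uniform noise, the randomly drawn placeholder latents, and `toy_generator` (a hypothetical stand-in for a trained generator G) are all assumptions for illustration.

```python
import math
import random

LATENT_DIM = 100  # assumption: size of the generator's noise vector z


def sample_latent(rng: random.Random) -> list[float]:
    """Draw a noise vector with coordinates uniform in [-1, 1]."""
    return [rng.uniform(-1.0, 1.0) for _ in range(LATENT_DIM)]


def mean_vector(vectors: list[list[float]]) -> list[float]:
    """Average several latent vectors coordinate by coordinate."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def latent_arithmetic(a, b, c):
    """Return mean(a) - mean(b) + mean(c) for three groups of latent vectors."""
    ma, mb, mc = mean_vector(a), mean_vector(b), mean_vector(c)
    return [x - y + z for x, y, z in zip(ma, mb, mc)]


def toy_generator(z: list[float]) -> list[float]:
    """Hypothetical stand-in for a trained generator G(z).

    A real generator would turn z into an image; this one just squashes
    each coordinate into (-1, 1) so the example runs end to end.
    """
    return [math.tanh(v) for v in z]


rng = random.Random(0)
# In practice each group would hold latents whose generated images show the
# concept (e.g. "smiling woman"); here they are random placeholders.
smiling_woman = [sample_latent(rng) for _ in range(3)]
neutral_woman = [sample_latent(rng) for _ in range(3)]
neutral_man = [sample_latent(rng) for _ in range(3)]

z_new = latent_arithmetic(smiling_woman, neutral_woman, neutral_man)
image = toy_generator(z_new)  # with a real G, ideally a smiling man
print(len(z_new), len(image))  # → 100 100
```

Averaging several exemplars per concept, rather than using a single latent vector, smooths out the quirks of any one sample; the DCGAN authors report that single-sample arithmetic was unstable while averaged vectors gave consistent results.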

GANs are capable of creating surprising, and at times eerily convincing, machine-generated images. As resolution improves with wider access to hardware such as high-performance GPUs, GANs will continue to produce increasingly realistic results, moving closer to not just fooling discriminator networks but also generating outputs that humans cannot distinguish from real data.
