That Which Does Not Kill the Model Makes It Stronger

As wireless edge devices are pushed toward the limits of power consumption and latency, computer vision researchers are preparing for a paradigm shift in on-device processing, sensing, security, and intelligence. Enormous energy is being directed toward the art and science of visual data. And, astoundingly, this data is now making some art of its own.

Of the many advancements in AI, there is perhaps no technique that has better captured the public imagination than the famous (some would say infamous) Generative Adversarial Network (GAN).

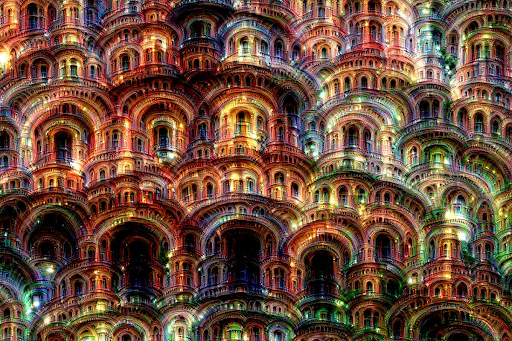

Take a look under the hood of a growing number of generation, simulation and transformation technologies, and you will find the almighty GAN. Examples can be found all over the web. GANs are generating convincing portraits, artificially aging faces, and even transforming videos of horses into videos of zebras.

The results are so convincing that many people believe GANs have leaped over the uncanny valley once and for all, producing original and “intuitively human” portraits of people who never existed.

The technology is nothing short of amazing, and the details of successfully building a GAN are as complex as you might expect. At its core, however, lies an appealingly simple principle: that which does not kill us makes us stronger.

The intelligent image recognition systems used at Clarifai, like our Custom Facial Recognition tools, are largely built on a family of technologies known as convolutional neural networks (CNNs). As the “neural” in the name implies, these networks are inspired by the workings of the brain.

The secret to the success of GANs is that they extend this analogy from a single brain to the interactions between multiple brains. We might say that if CNNs are inspired by neurology, GANs are inspired by evolutionary biology, economics, and game theory: fields where minds compete with each other and only the fittest survive. Like a capitalist economy, this competition drives creativity and weeds out ideas that don’t work.

The critical insight is that it is possible to train two networks that compete against each other in a game of “Generate and Discriminate,” with the output of one fed directly into the other. One network (the “generator”) is trained to produce new samples for you, and a second network (the “discriminator”) is trained to guess whether a given sample is real or fake.

The generator is driven to create more and more convincing samples as it attempts to deceive the discriminator, and the discriminator is driven to get better and better at detecting fake samples.
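To make this tug-of-war concrete, here is a minimal Python sketch of adversarial training on a deliberately tiny, hypothetical problem. It is not how Clarifai or any production system builds GANs. It assumes one-dimensional “real” data drawn from a normal distribution with mean 2, a generator `G(z) = z + b` with a single learnable offset `b`, and a logistic discriminator `D(x) = sigmoid(w*x + c)`; the names `train_toy_gan`, `b`, `w`, and `c` are illustrative only. Each step alternates between the two players: the discriminator takes a gradient step to better separate real from generated samples, then the generator takes a step to raise the discriminator’s score on its own samples.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 3000, batch: int = 64, lr: float = 0.05,
                  real_mean: float = 2.0, seed: int = 0):
    """Hypothetical 1-D GAN; returns (b, w, c) after alternating updates.

    Generator:     G(z) = z + b,            z ~ N(0, 1)
    Discriminator: D(x) = sigmoid(w*x + c)  (probability that x is real)
    Real data:     x ~ N(real_mean, 1)
    """
    rng = random.Random(seed)
    b = 0.0          # generator parameter (toy model, assumption)
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        # Discriminator step: descend binary cross-entropy,
        # labelling real samples 1 and generated samples 0.
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (d - 1.0) * x   # gradient of -log D(x)
            gc += d - 1.0
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x           # gradient of -log(1 - D(x))
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: descend -log D(G(z)) (the "non-saturating" loss),
        # pushing generated samples toward what D currently calls real.
        gb = 0.0
        for _ in range(batch):
            d = sigmoid(w * (rng.gauss(0.0, 1.0) + b) + c)
            gb += (d - 1.0) * w   # chain rule: dL/ds * ds/dx * dx/db
        b -= lr * gb / batch
    return b, w, c


b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real mean 2.0)")
print(f"D(2.0) = {sigmoid(w * 2.0 + c):.2f}")
```

Since real and generated samples in this toy setup differ only in their mean, a single learnable offset is enough for the generator to fool this discriminator. If training behaves, `b` should drift toward 2.0 and the discriminator’s output should settle near 0.5, its best guess once the two kinds of samples are indistinguishable. Practical GANs replace both players with deep neural networks (often CNNs for images) but keep the same alternating scheme.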

If everything goes according to plan, the two networks will push against each other in a virtuous cycle until they reach a Nash equilibrium (named after the Nobel laureate John Nash, of “A Beautiful Mind” fame), a point where neither network can improve by changing its strategy on its own. You will then have a generative system on your hands that is capable of producing convincing new images, original works of art, or any other kind of data you hope to emulate.
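For readers who want the game in symbols, the standard GAN formulation writes it as a single minimax objective. The discriminator D tries to maximize V while the generator G tries to minimize it, with real samples x drawn from the data distribution p_data and random noise z drawn from a prior p_z:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

For a fixed generator whose samples follow a distribution p_g, the best possible discriminator is

$$
D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
$$

When the generator matches the data exactly (p_g = p_data), this optimal discriminator outputs 1/2 everywhere, so it can do no better than a coin flip, and the objective takes its equilibrium value:

$$
V(D^*, G^*) = \log\tfrac{1}{2} + \log\tfrac{1}{2} = -\log 4
$$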
