Computer Vision (CV) is a field of computer science that focuses on creating digital models to process, analyze, and understand image and video data. Computers don’t have the subjective “experience” of vision the way that people do, but computers can explore and extract meaningful information from images, and this information can be used to drive actions and automate systems.

In this article, we will take a look at:

Artificial intelligence (AI) has revolutionized the field of computer vision. Computers can now classify, identify, and analyze image data more effectively than ever before. How did this happen?

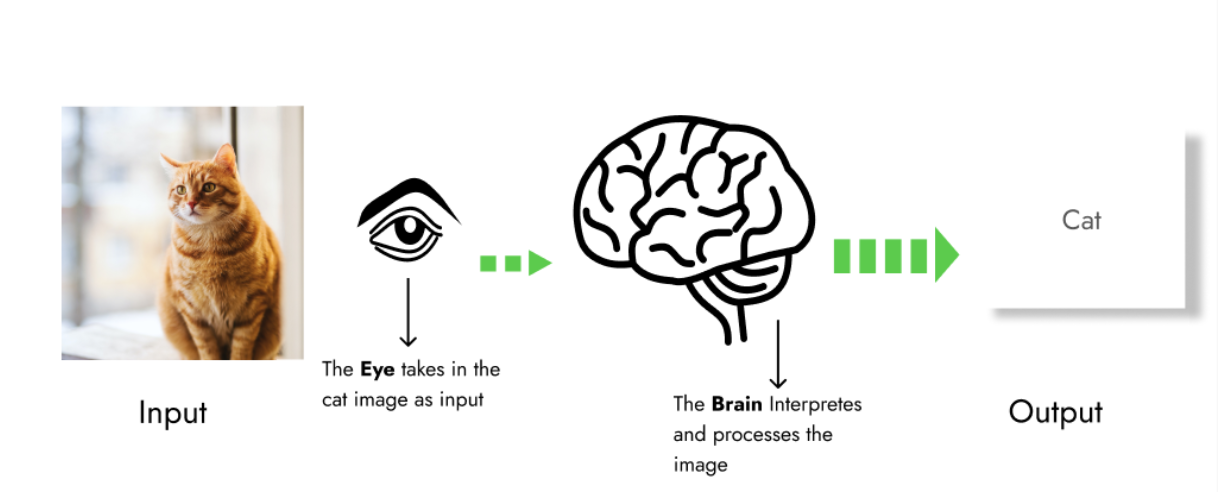

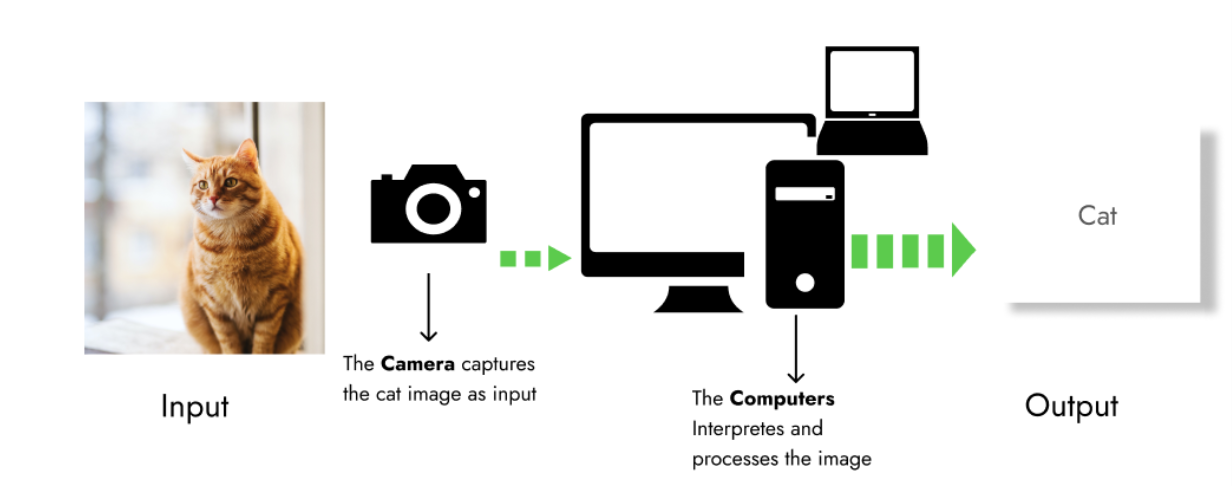

Computer vision technology is largely modeled on vision in the natural world. A camera acts as the eye, capturing images that a computer then processes.

Computer vision technology got its start in the late 1950s, when Hubel and Wiesel carried out research on visual perception in cats. They traced the visual pathway from the retina to the visual cortex by studying the receptive fields of cells in the striate cortex.

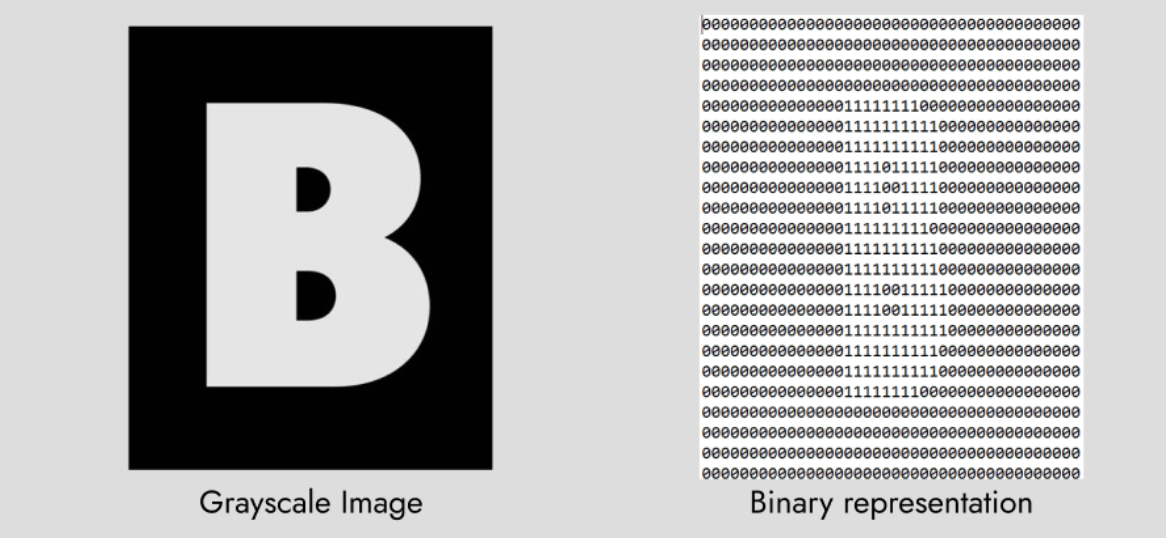

The receptive field is the region within which visual stimuli can influence the activity of a sensory cell (such as the firing of a neuron). The research categorized regions of these receptive fields as ‘excitatory’ and ‘inhibitory.’ For a simple black-and-white image, we can loosely interpret these as ‘1s’ and ‘0s’.
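To make the excitatory/inhibitory idea concrete, here is a loose analogy in Python, not a model taken from Hubel and Wiesel's work. A hypothetical 3×3 "center-surround" kernel gives the center pixel a positive (excitatory) weight and its eight neighbors negative (inhibitory) weights. The kernel values and the tiny binary images are assumptions chosen for illustration.

```python
# Loose analogy for an "on-center, off-surround" receptive field:
# the center weight is excitatory (+8), the eight neighbors are inhibitory (-1 each).
# Illustrative values only; this is not a biological model.
KERNEL = [
    [-1, -1, -1],
    [-1,  8, -1],
    [-1, -1, -1],
]

def receptive_field_response(image, row, col):
    """Weighted sum of the 3x3 neighborhood centered on (row, col)."""
    total = 0
    for dr in range(-1, 2):
        for dc in range(-1, 2):
            total += KERNEL[dr + 1][dc + 1] * image[row + dr][col + dc]
    return total

# Binary images: 1 = bright pixel, 0 = dark pixel.
uniform = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]  # bright everywhere
spot = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]     # bright center, dark surround

print(receptive_field_response(uniform, 1, 1))  # 0: excitation and inhibition cancel
print(receptive_field_response(spot, 1, 1))     # 8: strongest response
```

A uniformly lit patch produces no response, while a bright spot on a dark surround produces the strongest one. This kernel is the negated 8-neighbor discrete Laplacian, a standard image-processing filter.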

This work on perception led to further research on how humans see and how computers can interpret images.

In their most basic form, computer vision models take an image as input and return a word (a label) as output. In this example, the input is an image of a cat, and the output is the word “cat”. At no point in this process does the computer have a conscious experience of “seeing” a cat.

The image below shows a picture of the letter ‘B’ and its corresponding binary representation. In general, pixel values represent light intensity in one spectral band (grayscale images) or in several (color images).

Machines interpret this data as a grid of pixels, each with its own color value(s). In this simplified example, each pixel is stored as a single bit (0 or 1), where 0 corresponds to the black background and 1 corresponds to the white letter.
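The one-bit-per-pixel representation described above can be sketched as a Python list of lists. The 7×5 bitmap is a hypothetical stand-in for the figure, not its actual pixel values, and `render` is an illustrative helper that prints 1s as `#` and 0s as `.`.

```python
# Hypothetical 7x5 bitmap of the letter "B":
# 0 = black background, 1 = white letter (one bit per pixel).
LETTER_B = [
    [1, 1, 1, 1, 0],
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 1, 1, 1, 0],
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 1, 1, 1, 0],
]

def render(bitmap):
    """Turn the pixel grid back into text: '#' for 1, '.' for 0."""
    return "\n".join("".join("#" if px else "." for px in row) for row in bitmap)

print(render(LETTER_B))
height, width = len(LETTER_B), len(LETTER_B[0])
print(height, width)  # 7 5
```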

Computers “see” the world in terms of the values stored in each pixel. Machine learning algorithms learn to identify patterns and relationships in these pixel values. This pixel-by-pixel view is very different from human vision, but it is still very effective: computers can process far more images than people can and, on some tasks, do so more accurately. Using machine learning models and algorithms, computers can identify individual objects in an image, locate them, and much more.
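As a minimal sketch of learning patterns in pixel values, and of the image-in, word-out idea, the code below uses a nearest-centroid classifier. This is a deliberately simple technique swapped in for illustration; modern CV systems typically use neural networks. The 3×3 training images and the labels `plus` and `x` are hypothetical.

```python
# Hypothetical training set: 3x3 binary images, flattened row by row.
TRAINING_DATA = {
    "plus": [
        [0, 1, 0, 1, 1, 1, 0, 1, 0],
        [0, 1, 0, 1, 1, 1, 0, 0, 0],
        [0, 1, 0, 1, 1, 0, 0, 1, 0],
    ],
    "x": [
        [1, 0, 1, 0, 1, 0, 1, 0, 1],
        [1, 0, 1, 0, 1, 0, 0, 0, 1],
        [1, 0, 0, 0, 1, 0, 1, 0, 1],
    ],
}

def centroid(examples):
    """Average each pixel position across all examples of one class."""
    n = len(examples)
    return [sum(pixels) / n for pixels in zip(*examples)]

def train(data):
    """'Learning' here is just computing one mean image per label."""
    return {label: centroid(examples) for label, examples in data.items()}

def classify(model, image):
    """Return the label whose mean image is closest (squared Euclidean distance)."""
    def distance(center):
        return sum((p - c) ** 2 for p, c in zip(image, center))
    return min(model, key=lambda label: distance(model[label]))

model = train(TRAINING_DATA)
# A "plus" with its left arm missing, not seen during training.
test_image = [0, 1, 0, 0, 1, 1, 0, 1, 0]
print(classify(model, test_image))  # plus
```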

A computer vision application can be a standalone program that solves a specific recognition or detection task, or it can be a subsystem within a larger design.

Computer vision systems can acquire digital images from one or more image sensors or devices, such as cameras, radar, tomography devices, and range sensors. The resulting image data can be an ordinary 2D image, a 3D volume, or an image sequence. The computer then analyzes this data as arrays of pixel values.
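Libraries such as NumPy usually store these kinds of image data as n-dimensional arrays. To stay dependency-free, this sketch uses nested Python lists and a hypothetical `dims` helper to show the typical shape of each kind of data; the sizes are arbitrary assumptions.

```python
def dims(data):
    """Return the length of each nesting level of a nested list, e.g. (rows, cols)."""
    shape = []
    while isinstance(data, list):
        shape.append(len(data))
        data = data[0] if data else None
    return tuple(shape)

H, W = 4, 6  # hypothetical image height and width in pixels
gray_image = [[0] * W for _ in range(H)]                         # 2D: H x W
color_image = [[[0, 0, 0] for _ in range(W)] for _ in range(H)]  # H x W x 3 (RGB)
volume = [[[0] * W for _ in range(H)] for _ in range(5)]          # 3D: 5 slices, e.g. a CT scan
video = [[[0] * W for _ in range(H)] for _ in range(10)]          # sequence: 10 frames

for name, data in [("gray", gray_image), ("color", color_image),
                   ("volume", volume), ("video", video)]:
    print(name, dims(data))
```

A 3D volume and an image sequence have the same shape here; what differs is whether the first axis means depth (slices) or time (frames).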

Before an algorithm is applied, computers process, augment, and prepare the image data. Pre-processing ensures that the image data satisfies the requirements of the model. Common pre-processing steps include noise reduction to remove irrelevant information, standardization and compression to increase processing speed, and image augmentation to improve model performance.
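Three of the pre-processing steps mentioned above can be sketched with the standard library alone: a 3×3 median filter for noise reduction, per-image standardization to zero mean and unit variance, and a horizontal flip as a simple augmentation. These are common choices assumed for illustration, not the only way to perform each step, and compression is omitted.

```python
from statistics import mean, median, pstdev

def median_filter(image):
    """Noise reduction: replace each interior pixel with the median of its 3x3
    neighborhood. Border pixels are copied unchanged to keep the sketch short."""
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = [image[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            out[r][c] = median(window)
    return out

def standardize(image):
    """Standardization: shift and scale pixel values to zero mean, unit variance."""
    flat = [p for row in image for p in row]
    mu, sigma = mean(flat), pstdev(flat)
    sigma = sigma or 1.0  # avoid dividing by zero on a constant image
    return [[(p - mu) / sigma for p in row] for row in image]

def flip_horizontal(image):
    """Augmentation: a mirrored copy gives the model an extra training example."""
    return [row[::-1] for row in image]

noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]  # one "salt" noise pixel
print(median_filter(noisy))  # [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
```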

Computer vision models extract features at different levels of complexity from image data. These include “low-level” features such as lines, edges, corners, points, or blobs; “high-level” features such as a car or a person’s face; and many “in-between” features that a computer can recognize but that might not be intuitive for a person to recognize.
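Edges are a classic low-level feature. A common way to find them, assumed here for illustration, is the Sobel operator: two 3×3 kernels estimate the horizontal and vertical intensity gradients, and the edge strength at a pixel is the magnitude of the two. The test image is a hypothetical dark-to-bright step.

```python
import math

# Sobel kernels estimate horizontal (x) and vertical (y) intensity gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def apply_kernel(image, kernel, r, c):
    """Weighted sum of the 3x3 neighborhood centered on (r, c)."""
    return sum(kernel[i][j] * image[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))

def edge_magnitude(image):
    """Gradient magnitude at each interior pixel; large values mark edges."""
    h, w = len(image), len(image[0])
    result = []
    for r in range(1, h - 1):
        row = []
        for c in range(1, w - 1):
            gx = apply_kernel(image, SOBEL_X, r, c)
            gy = apply_kernel(image, SOBEL_Y, r, c)
            row.append(math.hypot(gx, gy))
        result.append(row)
    return result

# Left half dark (0), right half bright (1): a vertical edge in the middle.
step = [[0, 0, 0, 1, 1, 1] for _ in range(4)]
print(edge_magnitude(step))  # [[0.0, 4.0, 4.0, 0.0], [0.0, 4.0, 4.0, 0.0]]
```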

Once an image’s features have been extracted, CV algorithms can accomplish a variety of high-level processing tasks. They can classify images, identify specific objects in them, and even estimate model parameters such as object pose or size.
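As a small illustration of locating an object and estimating its size, the sketch below assumes the object has already been segmented into a binary mask (a hypothetical input where 1 marks object pixels) and computes its bounding box. Estimating pose is more involved and is not shown.

```python
def bounding_box(mask):
    """Return (top, left, bottom, right) of the 1-pixels, or None if there are none."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        return None
    return rows[0], cols[0], rows[-1], cols[-1]

def box_size(box):
    """Height and width of the box in pixels (bounds are inclusive)."""
    top, left, bottom, right = box
    return bottom - top + 1, right - left + 1

mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
box = bounding_box(mask)
print(box, box_size(box))  # (1, 1, 2, 3) (2, 3)
```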

Computer vision technologies have been adopted across many industries, with significant growth and diversification of use cases over the last few decades. You have probably seen or used some of them: if you use Instagram or Snapchat, you will recognize the face filters, which rely on computer vision to recognize faces.

Image recognition is the core goal of almost all computer vision technology. It lets you identify what your image is a picture “of”, or what objects are “in” it: people, vehicles, clothing, and essentially any other visually distinct object.

The following describes some of the tasks we attempt to solve with CV:

- Classification: identifies the category or class that the image belongs to.

Motion recognition is a subdiscipline of computer vision that focuses on understanding the relative positions of various body parts over time. Motions or gestures of your hands, face, or legs can be used to control or interact with devices without any physical contact. Gesture recognition also extends to posture, proxemics, gait, and other human behaviors. With this research, computers can better understand the human body and its movement, giving us a closer relationship with our machines.

Other applications of gesture recognition include:

Given one or more images of a particular scene, or a video, you can reconstruct the objects in it as a 3D model. Scene reconstruction involves capturing the shape and appearance of real-world objects from pictures or scans of them. The resulting model can be a set of 3D points or a complete 3D surface model.
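One common way to recover 3D points, assumed here rather than taken from the text, is stereo triangulation. With two rectified pinhole cameras a known baseline apart, a point's depth is `Z = f * B / d`, where `f` is the focal length in pixels, `B` the baseline, and `d` the disparity (the horizontal pixel shift of the point between the two views). The function name and camera parameters below are hypothetical.

```python
def triangulate(u_left, u_right, v, focal_px, baseline_m, cx, cy):
    """Recover a 3D point (X, Y, Z) in meters, in the left camera's frame,
    from one matched pixel in a rectified stereo pair.

    (u_left, v) and (u_right, v) are the pixel coordinates of the same scene
    point in the left and right images; (cx, cy) is the principal point."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline_m / disparity  # depth from similar triangles
    x = (u_left - cx) * z / focal_px       # back-project through the pinhole model
    y = (v - cy) * z / focal_px
    return x, y, z

point = triangulate(u_left=390, u_right=355, v=240,
                    focal_px=700, baseline_m=0.1, cx=320, cy=240)
print(point)  # (0.2, 0.0, 2.0)
```

Repeating this for many matched pixels yields the "set of 3D points" form of the model; building a surface from those points is a separate step.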

Some applications of 3D reconstruction include:

Computer Vision and many other forms of “artificial intelligence” have been inspired and influenced by the study of “natural intelligence” in humans and animals.

Machine learning is a branch of artificial intelligence focused on building applications that learn from data and improve their accuracy over time without being explicitly programmed to do so. Before machine learning, we had to make do with hand-written, top-down descriptions of the features that make up a digital image. Now we can give machine learning algorithms examples of the things we want to recognize, apply the necessary processing power and training cycles, and obtain a computer “model” of what an image of those things looks like.

This is similar to how we learn. We do not just wake up one day, look at a dog, and say “this is a dog”. We know how to recognize dogs because of our experience with them: by looking at examples of dogs in the real world, our brains have learned the essential features of “dogness”.

Machine learning has contributed immensely to the growth of computer vision, giving us more effective algorithms to process and understand digital images. It also provides insight into other unstructured digital data, such as video and text.

Over the last century, there has been extensive research on the eyes, neurons, and brain structures that process visual stimuli in humans and animals. These studies have given us an understanding of how biological vision solves visual tasks.

Neurobiology has played a central role in inspiring the technology behind computer vision systems. Artificial neural networks (ANNs) are learning algorithms inspired by the structure and function of biological neural networks.

Convolutional neural networks (CNNs) are a type of ANN in widespread use for image and video processing. A CNN typically combines three kinds of processing “layers”: convolutional layers, pooling layers, and fully connected layers. The convolutional and pooling layers handle feature extraction, whereas the fully connected layers map the extracted features to an output.
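The three layer types can be sketched as a forward pass in plain Python. Everything here is a toy assumption: a single hand-set 2×2 filter instead of learned ones, a ReLU activation (common in CNNs but not mentioned above), 2×2 max pooling, and a fully connected layer with made-up weights that scores two hypothetical classes. A real CNN learns its filters and weights during training.

```python
def conv2d(image, kernel):
    """Convolutional layer (one filter, stride 1, no padding). Like most CNN
    libraries, this computes cross-correlation: the kernel is not flipped."""
    k = len(kernel)
    h, w = len(image) - k + 1, len(image[0]) - k + 1
    return [[sum(kernel[i][j] * image[r + i][c + j] for i in range(k) for j in range(k))
             for c in range(w)] for r in range(h)]

def relu(fmap):
    """Activation: clip negative responses to zero."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Pooling layer: keep the largest value in each non-overlapping size x size window."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]

def fully_connected(features, weights, biases):
    """Fully connected layer: one weighted sum (score) per output class."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]

image = [[0, 1, 0, 0, 0] for _ in range(5)]  # a vertical bar in column 1
kernel = [[1, -1], [1, -1]]                   # hand-set "bright-then-dark" filter
features = max_pool(relu(conv2d(image, kernel)))  # feature extraction
flat = [v for row in features for v in row]       # flatten to a vector
scores = fully_connected(flat, weights=[[1, 0, 1, 0], [0, 1, 0, 1]], biases=[0, 0])
labels = ["bar on left", "bar on right"]
print(features, scores, labels[scores.index(max(scores))])
```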

Medical image processing is one of the most prominent uses of computer vision. It lets you extract information from medical image data to help diagnose patients.

Medical image data comes in forms such as ultrasound, X-ray, mammography, microscopy, and angiography images. A recent application is the automated detection of COVID-19 cases by applying deep neural networks to X-ray images.

Facial recognition lets you match people’s faces to their identities. Several applications, such as Snapchat, Instagram, and Facebook, have integrated computer vision to identify people in pictures. Another application of facial recognition is biometric authentication: many mobile devices today let users unlock them with their faces.

Computer vision is the central element of augmented reality (AR) applications. With CV, AR apps can detect objects in real time and use this information to place virtual objects or elements in the physical space.

Self-driving cars use computer vision to see and understand their surroundings. An automated vehicle uses cameras mounted at different angles to feed video into its CV software. The processing involves detecting road markings, nearby objects, traffic lights, and more.

We live in a time when more image and video data is produced than any other kind of data, and we have more computational power than ever to process it. CV is essential to how we interact with and understand this data, and it will continue to drive major advances across a wide range of fields and industries.

© 2023 Clarifai, Inc. Terms of Service Content TakedownPrivacy Policy
