AI models represent the set of rules or guidelines applied to data for analytical, predictive, and automation purposes. Using them, developers can create an astonishing array of useful real-world applications.

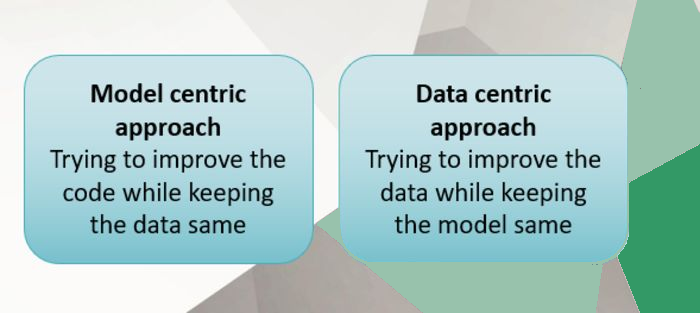

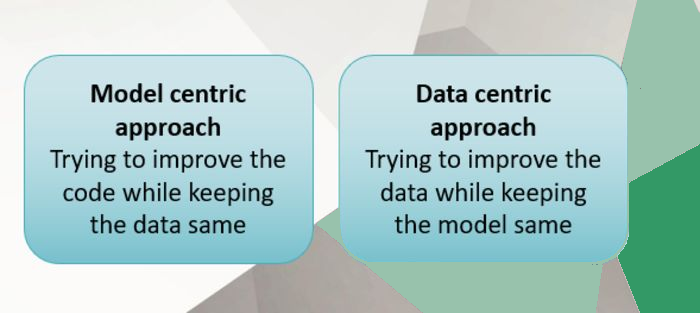

The AI community has long prioritized the development of models, putting most of its effort into optimizing them. However, recent developments have shown that this is not always the best approach (Fekete, Kay, & Röhm, 2021). AI projects are not simply application development projects: they rely on training data, models, and configurations that control how a particular model is applied to a specific AI problem, whereas application code only implements the algorithms used in AI models. Focusing solely on optimizing the code therefore misses the bigger picture of the AI problem as a whole. The new mantra in the AI community is "if you want to be AI-first, you need to have a data-first perspective".

The data-centric approach consists of systematically optimizing datasets to improve the accuracy of AI systems. Machine learning scientists find this approach promising because refined data generates better results than unrefined data. The objective of a data-centric approach is to shift the focus from fiddling with model parameters to ensuring quality data input.

Figure 1: Two approaches to solving machine learning problems
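A minimal sketch of the data-centric idea, under stated assumptions: the dataset is a hypothetical one-dimensional toy set, and the model is a deliberately simple nearest-centroid classifier (the helper names `train_centroids`, `predict`, and `accuracy` are illustrative, not taken from any library). The model code stays fixed; only the quality of the training labels changes.

```python
from statistics import mean

def train_centroids(xs, ys):
    """Fit a nearest-centroid model: one mean feature value per class label."""
    classes = sorted(set(ys))
    return {c: mean(x for x, y in zip(xs, ys) if y == c) for c in classes}

def predict(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda c: abs(x - centroids[c]))

def accuracy(centroids, xs, ys):
    """Fraction of examples whose predicted label matches the true label."""
    correct = sum(predict(centroids, x) == y for x, y in zip(xs, ys))
    return correct / len(ys)

# Hypothetical training inputs: class 0 clusters near 1.0, class 1 near 5.0.
train_x = [0.8, 1.0, 1.2, 1.4, 4.6, 4.8, 5.0, 5.2]
clean_y = [0, 0, 0, 0, 1, 1, 1, 1]
# Same inputs, but three class-1 examples were mislabeled as class 0.
noisy_y = [0, 0, 0, 0, 0, 0, 0, 1]

# A clean, held-out test set.
test_x = [1.1, 2.0, 2.5, 3.5, 4.0, 4.9]
test_y = [0, 0, 0, 1, 1, 1]

clean_model = train_centroids(train_x, clean_y)
noisy_model = train_centroids(train_x, noisy_y)
print(accuracy(clean_model, test_x, test_y))            # 1.0
print(round(accuracy(noisy_model, test_x, test_y), 3))  # 0.833
```

Because the model is identical in both runs, the drop in accuracy comes entirely from the three mislabeled training examples, which drag the class-0 centroid toward class 1.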

Why is high-quality data important in a data-centric approach?

In machine learning, training data consists of labeled images, phrases, audio, video, and other data sources. If this training data is poor, the resulting model will perform poorly no matter how well it is optimized. As the saying goes, garbage in, garbage out. In an AI-based chatbot, this might produce poor customer experiences; in a biomedical algorithm or an autonomous vehicle, it can be life-threatening. Therefore, scientists are shifting towards a data-centric approach to significantly improve AI (Sambasivan et al., 2021).

What is high-quality data?

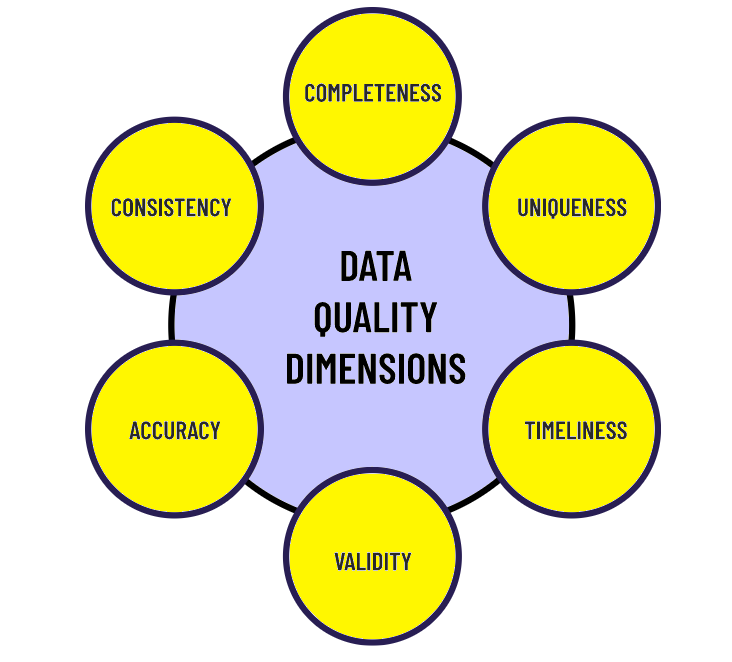

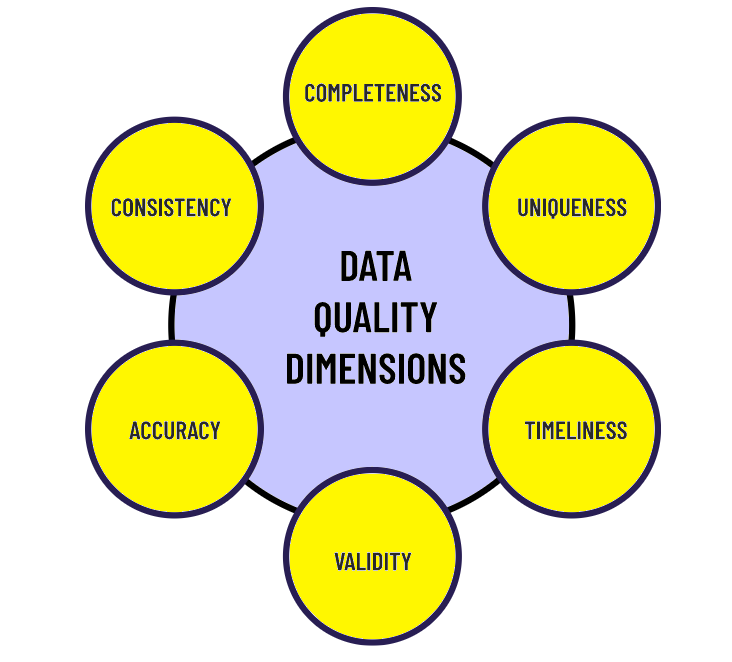

To take a data-centric approach, one should be aware of what defines high-quality data. It should be complete, unique, accurate, ethically sourced, secure, and free from clutter that degrades the intelligence of the algorithm. Ideally, high-quality data is also free from bias. Data bias occurs when certain elements of the dataset influence algorithm decisions more strongly than others. For example, a facial recognition system trained only on faces of white men will have considerably lower accuracy for women and people of other ethnicities. This is known as “sample bias”. Other types of bias can also be hidden in a dataset, such as exclusion bias, measurement bias, recall bias, association bias, racial bias, and observer bias (Bertossi & Geerts, 2020).

Figure 2: Key features which distinguish high-quality training data
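One practical way to surface sample bias like the facial recognition example is to break evaluation accuracy down by subgroup instead of reporting a single overall number. The sketch below assumes each evaluation record carries a hypothetical `group` attribute alongside the true and predicted labels; the records are made up for illustration.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return {group: accuracy} from (group, true_label, predicted_label) tuples."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, true_label, predicted in records:
        totals[group] += 1
        correct[group] += int(true_label == predicted)
    return {g: correct[g] / totals[g] for g in totals}

def max_accuracy_gap(per_group):
    """Difference between the best-served and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())

# Hypothetical evaluation results: (group, true identity, predicted identity).
records = [
    ("group_a", "id1", "id1"),
    ("group_a", "id2", "id2"),
    ("group_a", "id3", "id3"),
    ("group_a", "id4", "id4"),
    ("group_b", "id5", "id5"),
    ("group_b", "id6", "id9"),
    ("group_b", "id7", "id2"),
    ("group_b", "id8", "id8"),
]

per_group = accuracy_by_group(records)
print(per_group)                    # {'group_a': 1.0, 'group_b': 0.5}
print(max_accuracy_gap(per_group))  # 0.5
```

Here the overall accuracy of 0.75 hides the fact that one group is served far worse; a large gap is a hint that the underrepresented group needs more or better training data.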

Apart from biases, high-quality data is also free from poor or inconsistent data labeling. Sometimes the labels misrepresent the problem a particular AI model is trying to solve; at other times, labeling is inconsistent because the same object receives different labels during the labeling process.
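Inconsistent labeling of the same object can be caught mechanically when every item has a stable identifier. A minimal sketch, assuming annotations are stored as hypothetical `(item_id, label)` pairs: group the labels by item and flag any item that received more than one distinct label.

```python
from collections import defaultdict

def find_conflicting_labels(annotations):
    """Map each item_id that received more than one distinct label to its sorted labels."""
    labels_per_item = defaultdict(set)
    for item_id, label in annotations:
        labels_per_item[item_id].add(label)
    return {
        item_id: sorted(labels)
        for item_id, labels in labels_per_item.items()
        if len(labels) > 1
    }

# Hypothetical annotations: img_02 was labeled twice, inconsistently.
annotations = [
    ("img_01", "cat"),
    ("img_02", "cat"),
    ("img_02", "dog"),
    ("img_03", "dog"),
    ("img_03", "dog"),
]

print(find_conflicting_labels(annotations))  # {'img_02': ['cat', 'dog']}
```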

Important Aspects of the Data-Centric Approach

Andrew Ng, an AI pioneer, recently elaborated on why the AI ecosystem should shift its focus from a model-centric approach to a data-centric one. He pointed out that 80% of the machine learning process is actually sourcing and preparing high-quality data for AI algorithms, while training the model represents only 20% of the effort. Despite this, training receives most of the attention. This trend is slowly changing, as evidenced by Gartner’s Hype Cycle for AI placing data labeling and annotation at the “Innovation Trigger” stage.

Quality of Data Labeling: Different labelers may well assign different labels to the same kind of data. In such cases, human error causes the quality of data labeling to drop significantly. These noisy labels affect AI model performance, and their impact varies by industry. Sectors such as healthcare and agriculture, which often work with smaller datasets, are most affected, whereas in larger datasets with millions of data points the noise can average out.
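When several labelers annotate each item, a common first step is majority-vote aggregation together with an agreement score, so that low-agreement items can be sent back for review. A minimal sketch, assuming three hypothetical labelers per item and an illustrative review threshold of 0.6 (both are assumptions, not recommendations from the source):

```python
from collections import Counter

def aggregate_labels(labels_by_item):
    """Return {item_id: (majority_label, agreement)}.

    agreement is the fraction of labelers who chose the majority label.
    When labels tie, the chosen label is not meaningful; the low
    agreement value is the signal to act on.
    """
    result = {}
    for item_id, labels in labels_by_item.items():
        label, count = Counter(labels).most_common(1)[0]
        result[item_id] = (label, count / len(labels))
    return result

# Hypothetical labels from three labelers per item.
labels_by_item = {
    "img_01": ["cat", "cat", "cat"],
    "img_02": ["cat", "dog", "cat"],
    "img_03": ["cat", "dog", "fox"],
}

aggregated = aggregate_labels(labels_by_item)
needs_review = [item for item, (_, agreement) in aggregated.items() if agreement < 0.6]
print(needs_review)  # ['img_03']
```

Majority voting only reduces random noise; if labelers share a systematic misunderstanding, they will agree on the wrong label, which is why clearer guidelines still matter.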

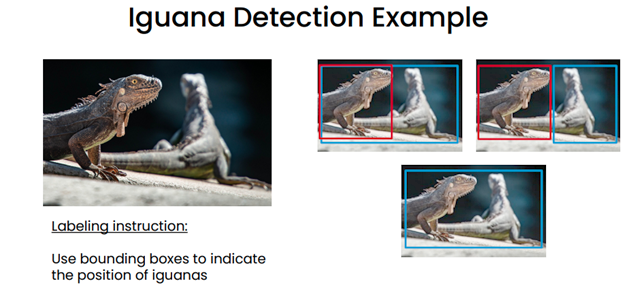

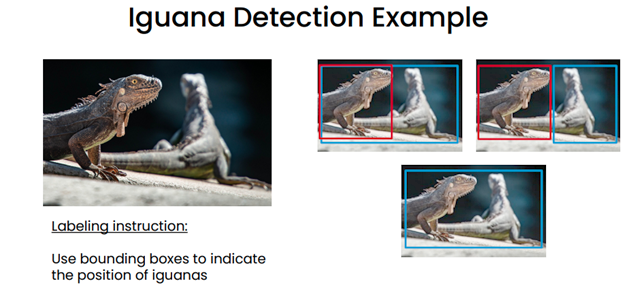

Figure 3 shows some examples of noisy labels. All labelers were given the same instructions for labeling the data.

Figure 3: Labeling noise in iguana detection example

As you can imagine, AI models perform poorly when labeled data is noisy. One way to mitigate the problem is to refine the labeling guidelines iteratively: two independent labelers label the same sample of images, any inconsistencies between their labels are identified, and the guidelines are updated and the process repeated until the inconsistencies are resolved.
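The two-labeler check can be quantified with Cohen's kappa, a standard chance-corrected agreement statistic: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each labeler's label frequencies. The source does not name a metric, so kappa is our choice here, and the labels below are hypothetical. The disagreeing items are the ones to discuss when updating the guidelines.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two labelers on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Probability both labelers pick the same label by chance.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both labelers used one identical label for every item
    return (observed - expected) / (1 - expected)

def disagreements(item_ids, labels_a, labels_b):
    """Items the two labelers labeled differently: candidates for guideline review."""
    return [i for i, a, b in zip(item_ids, labels_a, labels_b) if a != b]

item_ids = ["img_01", "img_02", "img_03", "img_04", "img_05", "img_06"]
labeler_a = ["iguana", "iguana", "none", "iguana", "none", "iguana"]
labeler_b = ["iguana", "none", "none", "iguana", "none", "none"]

print(round(cohens_kappa(labeler_a, labeler_b), 2))    # 0.4
print(disagreements(item_ids, labeler_a, labeler_b))   # ['img_02', 'img_06']
```

After each guideline revision, the same sample can be relabeled and kappa recomputed; a rising value indicates that the guidelines are removing the inconsistency.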

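Data Augmentation: Data augmentation, which generates additional training examples by applying transformations to existing ones, reduces overfitting when training a machine learning model. Beyond adding more data, keeping the data clean and free from noise is important because it improves the model's ability to generalize to new, unseen data.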
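A minimal sketch of image augmentation, assuming images are represented as plain lists of pixel rows (a simplification; real pipelines use image libraries). Each original example is kept and joined by a horizontally flipped copy and a copy shifted one pixel to the right. Keeping the label unchanged assumes these transforms do not alter the class, which holds for many object-detection tasks but not, for example, for text or digit recognition where mirroring changes meaning.

```python
def horizontal_flip(image):
    """Mirror each row of a 2-D image given as a list of pixel rows."""
    return [list(reversed(row)) for row in image]

def shift_right(image, pixels=1, fill=0):
    """Shift content right by `pixels` (0 <= pixels <= width), padding with `fill`."""
    return [[fill] * pixels + row[: len(row) - pixels] for row in image]

def augment(dataset):
    """Return each (image, label) pair plus flipped and shifted copies with the same label."""
    augmented = []
    for image, label in dataset:
        augmented.append((image, label))
        augmented.append((horizontal_flip(image), label))
        augmented.append((shift_right(image), label))
    return augmented

image = [
    [1, 2, 3],
    [4, 5, 6],
]
augmented = augment([(image, "iguana")])
for img, label in augmented:
    print(label, img)
# iguana [[1, 2, 3], [4, 5, 6]]
# iguana [[3, 2, 1], [6, 5, 4]]
# iguana [[0, 1, 2], [0, 4, 5]]
```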

Data Sources: Finding sources with the right kind of data may involve discussions with subject matter experts and identifying businesses with a rigorous understanding of the subject matter. While sourcing the data, scientists need to consider how the data is accumulated and stored, as well as the business logic required to make the data coherent.
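Some of the quality properties listed earlier (completeness, uniqueness) and the business logic mentioned here can be encoded as automated checks on incoming records. A minimal sketch, assuming a hypothetical customer schema, a rule that each `customer_id` should appear only once, and an illustrative age-range rule:

```python
def validate_records(records, required_fields, key_field, rules):
    """Return (record_index, problem) tuples describing data-quality issues.

    Checks per record:
      * completeness: every required field is present and not None
      * uniqueness: the key_field value was not seen in an earlier record
      * business rules: each (description, predicate) pair must hold
    Rules run only on complete records, so predicates may assume the
    required fields exist.
    """
    problems = []
    seen_keys = set()
    for index, record in enumerate(records):
        missing = [f for f in required_fields if record.get(f) is None]
        for field in missing:
            problems.append((index, f"missing {field}"))
        key = record.get(key_field)
        if key is not None:
            if key in seen_keys:
                problems.append((index, f"duplicate {key_field} {key}"))
            seen_keys.add(key)
        if not missing:
            for description, predicate in rules:
                if not predicate(record):
                    problems.append((index, description))
    return problems

records = [
    {"customer_id": "c1", "age": 34, "country": "DE"},
    {"customer_id": "c2", "age": None, "country": "FR"},
    {"customer_id": "c1", "age": 51, "country": "DE"},
    {"customer_id": "c3", "age": -4, "country": "IT"},
]
rules = [("age must be between 0 and 120", lambda r: 0 <= r["age"] <= 120)]

for problem in validate_records(records, ["customer_id", "age", "country"], "customer_id", rules):
    print(problem)
# (1, 'missing age')
# (2, 'duplicate customer_id c1')
# (3, 'age must be between 0 and 120')
```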

Feature Engineering: Feature engineering introduces variables that may not be present in the original raw data by deriving them from existing features, which can increase the model’s efficiency and accuracy.
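As an illustration, a raw timestamp says little to many models directly, but the hour, weekday, and weekend flag derived from it often do; likewise, a per-item price can be derived from a total and a quantity. The transaction schema below (`timestamp`, `total_price`, `quantity`) is hypothetical.

```python
from datetime import datetime

def engineer_features(record):
    """Return a copy of a raw transaction record with derived features added."""
    ts = datetime.fromisoformat(record["timestamp"])
    return {
        **record,
        "hour": ts.hour,                  # time-of-day signal
        "day_of_week": ts.weekday(),      # Monday is 0, Sunday is 6
        "is_weekend": ts.weekday() >= 5,  # Saturday or Sunday
        "price_per_item": record["total_price"] / record["quantity"],
    }

raw = {"timestamp": "2021-06-05T14:30:00", "total_price": 30.0, "quantity": 4}
features = engineer_features(raw)
print(features["hour"], features["day_of_week"], features["is_weekend"], features["price_per_item"])
# 14 5 True 7.5
```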

Data Volume: Data volume may not be as important as it seems. While the general consensus today is that more data is better for training, this is not always true. Data quality also matters: training a model on a huge volume of data containing noise and bias can be slow and error-prone, whereas a smaller dataset with high-quality labels can yield better model performance.
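One way to trade volume for quality is to curate: drop exact duplicates and examples whose labels the labelers largely disagreed on. A minimal sketch, assuming each example already carries a hypothetical agreement score in [0, 1] and using an illustrative threshold of 0.8:

```python
def curate(examples, min_agreement=0.8):
    """Keep one copy of each distinct (features, label) example whose labeler
    agreement meets the threshold. `examples` holds (features, label, agreement)
    tuples, where features must be hashable (e.g. a tuple)."""
    seen = set()
    curated = []
    for features, label, agreement in examples:
        key = (features, label)
        if agreement < min_agreement or key in seen:
            continue
        seen.add(key)
        curated.append((features, label))
    return curated

examples = [
    ((0.9, 1.1), "cat", 1.0),
    ((0.9, 1.1), "cat", 1.0),   # exact duplicate
    ((2.0, 0.4), "dog", 0.9),
    ((1.5, 1.5), "dog", 0.4),   # labelers mostly disagreed
    ((3.1, 0.2), "dog", 0.8),
]

curated = curate(examples)
print(len(examples), "->", len(curated))  # 5 -> 3
```

The curated set is smaller, but every remaining example is unique and consistently labeled, which is the trade-off described above.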

Key Takeaways

1) An AI system comprises code and data, and both are equally needed to build and operate an efficient AI model.

2) Model development and its training are equally important.

3) High-quality data sourcing and preparation is an extremely important factor in the development of efficient and accurate AI models.

4) The data-centric approach aims to improve the consistency of labeled data and to remove biases.

References

Bertossi, L., & Geerts, F. (2020). Data quality and explainable AI. Journal of Data and Information Quality (JDIQ), 12(2), 1–9.

Fekete, A., Kay, J., & Röhm, U. (2021). A data-centric computing curriculum for a data science major. In Proceedings of the 52nd ACM Technical Symposium on Computer Science Education (pp. 865–871).

Sambasivan, N., Kapania, S., Highfill, H., Akrong, D., Paritosh, P., & Aroyo, L. M. (2021). “Everyone wants to do the model work, not the data work”: Data Cascades in High-Stakes AI. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1–15).
