In the beginning, AI engineers toiled in obscurity, spending years hand-crafting equations to solve even the smallest Computer Vision problems. But the world has changed quickly over the last few years. With the advent of Machine Learning, the pace of innovation is accelerating, and the potential for new business applications is almost limitless. But building cutting-edge solutions often requires vast amounts of resources, which has meant that the companies with the deepest pockets could build the best solutions. Today, that has changed, and we can now welcome you to the world of Data Efficiency with Clarifai.

Let's start with some good news. Just about every aspect of Computer Vision is getting better with Machine Learning compared with the old “rule-based” approach, in which researchers crafted equations by hand. Images are more easily classified, objects are quickly detected, poses are accurately estimated, and faces are recognized. Not only are the end results better, but the path to getting these results is predictable and repeatable, which is good news for anyone trying to incorporate this technology into their business.

Once a problem has been clearly defined and meaningful metrics identified, a data scientist simply needs to provide examples for a model to learn from. Machine Learning algorithms can then automatically detect patterns in data that once had to be discovered by a human, measured, and explicitly incorporated into code. Many challenging “vision” problems have now been solved using "machine-learned Computer Vision." The best solutions tend to be those where extremely large datasets have been collected and months or even years of GPU time have been spent searching for the model that best fits the labeled data. This pattern has in turn led to the belief that solving an AI problem takes three steps: first collect as much data as possible, then label it, and finally train for as long as possible. Unfortunately, this technique usually leads to failure because of an incorrect assumption in the data collection process: that the data gathered up front will resemble the data the model encounters once it is deployed. Even when this approach succeeds, it takes longer and costs more than necessary. In fact, organizations can spend 90% of their entire AI modeling budget following this approach.

Because data collection and labeling are not free, a better strategy is to “deploy first”: start with as little data as possible and use the deployed system to collect the best possible data cheaply. As more data is obtained, the initial models can be used to reduce the cost of labeling it.

Here are two questions to consider:

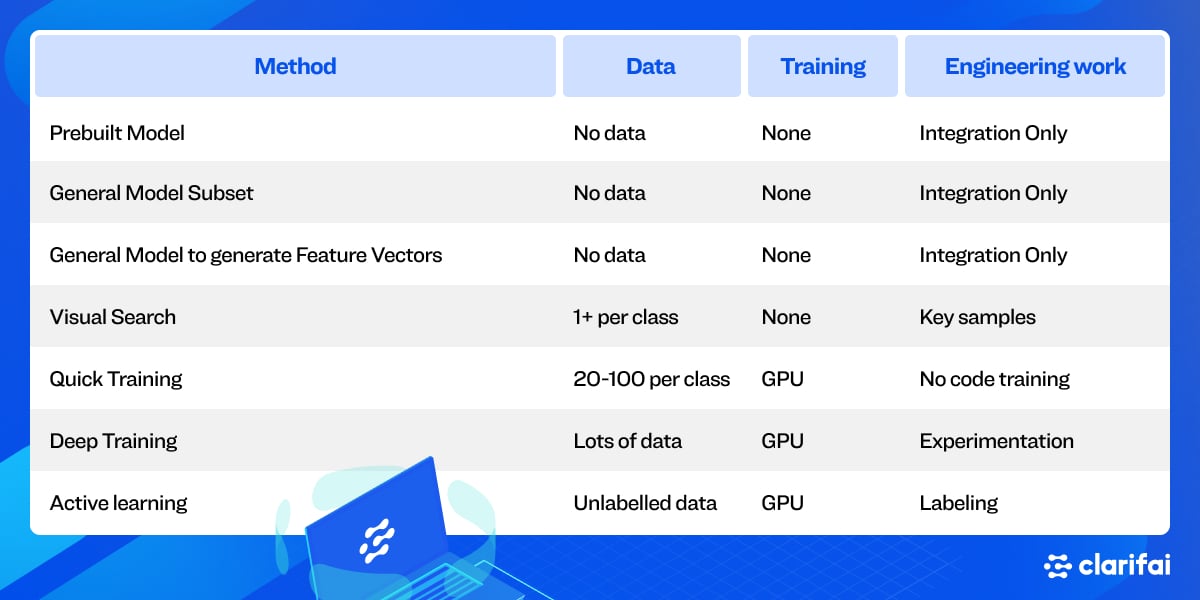

We will start with zero data and show how to deploy models with no data. Then we will work our way up to one sample per category, and then a few samples, and then many samples. Slightly different techniques may be used at each point, but all are supported in the Clarifai Platform.

The fact is that most of us do not have access to unlimited training data. We also don’t have access to unlimited processing power or unlimited time to train our models. Most of us are trying to get the best possible result with the least amount of effort. The feasibility of ML applications in business is limited by the usual considerations of cost, risk, and ROI.

Helping companies build AI-powered business applications that are practical to implement in the real world is one of the reasons Clarifai Research is so focused on data efficiency.

We have some customers that have lots of data but no way to label it. We have other customers that have little or no training data and want to get a solution going quickly. We hope to help our customers do the best they can with the data and resources they have right now.

Most Machine Learning models follow a similar pattern and respond to data in a similar way. It is typically relatively easy to get to some medium-high level of accuracy, and much, much harder to get to very high levels of accuracy. We might get to 60-80% accuracy with relatively few training samples, and might need thousands or even millions more training samples to achieve accuracy above 90%.
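A rough way to see why the last few points of accuracy are so expensive is to assume, purely for illustration, that error falls as a power law in the number of training samples, error(n) = a·n^(-b). The exponent `b` and the starting point below are hypothetical values, not Clarifai measurements; real learning curves depend heavily on the task and the data.

```python
def samples_needed(n0: int, err0: float, err_target: float, b: float) -> float:
    """Samples required to reach err_target, ASSUMING error(n) = a * n**(-b).

    n0, err0: one known point on the learning curve (e.g. 1,000 samples at 20% error).
    b: hypothetical power-law exponent; a smaller b means slower improvement.
    """
    if not (0 < err_target < err0):
        raise ValueError("target error must be positive and below the current error")
    # From err0 = a * n0**(-b) and err_target = a * n**(-b):
    #   n / n0 = (err0 / err_target) ** (1 / b)
    return n0 * (err0 / err_target) ** (1.0 / b)


if __name__ == "__main__":
    # Going from 80% to 90% accuracy halves the error (20% -> 10%).
    for b in (0.5, 0.3, 0.1):
        n = samples_needed(1_000, 0.20, 0.10, b)
        print(f"b={b}: ~{n:,.0f} samples")
```

Under these assumptions, halving the error needs 4x the data when b = 0.5 but roughly 1,000x when b = 0.1, which is the same diminishing-returns shape described above.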

But the pattern is clear: as we provide more data or more computational power (as long as we have a consistently labeled dataset and an appropriately selected algorithm), we are able to build models that make more accurate predictions (Figure 1).

Figure 1: Accuracy vs Error rate. Accuracy increases quickly in the beginning. As training data and computation increase on the horizontal axis, accuracy increases on the vertical axis and error decreases.

Given this learning pattern, a couple of questions might come to mind:

What if there was a way to get to some level of acceptable accuracy right away? We could deploy a solution right now, and then acquire more training data from the real world. We could begin to work with a model in production and we could provide a model with the best possible examples to learn from.

Clarifai offers dozens of prebuilt models, with millions of parameters, that have already been trained on millions of samples. There are a wide variety of use cases where one of the models in Clarifai’s Model Gallery will provide the accuracy and functionality we need right out of the box.

If we have no data and one of these models matches what we need, we should use it. Models can be configured and managed easily through Clarifai Portal, and we can integrate them into our own software using Clarifai’s API. Clarifai provides clients and examples that make this trivial in Python, Java, and NodeJS. Any language that can generate an HTTP request can call inference, even the command line with cURL.
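For illustration, the same kind of inference call can be sketched in Python using only the standard library. The endpoint path, the `Authorization: Key <API key>` header, and the JSON body shape below are assumptions based on Clarifai's v2 REST API as we understand it (newer versions scope the path under `users/{user_id}/apps/{app_id}`); the model ID and key are placeholders, so check the current API reference before relying on any of it.

```python
import json
import urllib.request

# ASSUMPTION: base URL and path shape follow Clarifai's v2 REST API; verify
# against the current API reference. Model ID and key are placeholders.
API_BASE = "https://api.clarifai.com/v2"


def build_predict_request(model_id: str, api_key: str, image_url: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking a model to predict on one image URL."""
    body = {"inputs": [{"data": {"image": {"url": image_url}}}]}
    return urllib.request.Request(
        url=f"{API_BASE}/models/{model_id}/outputs",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Key {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def predict(model_id: str, api_key: str, image_url: str) -> dict:
    """Send the request and return the decoded JSON response (requires network access)."""
    request = build_predict_request(model_id, api_key, image_url)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


if __name__ == "__main__":
    # Hypothetical values: replace with a real model ID, key, and image URL.
    req = build_predict_request("YOUR_MODEL_ID", "YOUR_API_KEY", "https://example.com/dog.jpg")
    print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending makes the payload easy to inspect or test without network access.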

Clarifai's general model covers over 9,000 visual concepts, all evaluated in a single call, and returns the most significant ones. If Clarifai hasn't built an explicit model for a particular problem, the general model can be used and its results filtered to include only the concepts of interest.
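Filtering the general model's output down to the concepts we care about takes only a few lines. The response layout assumed here (`outputs[0].data.concepts`, each concept carrying a `name` and a confidence `value`) reflects Clarifai's v2 JSON responses as we understand them; the sample response, the concept names, and the 0.5 threshold are hypothetical.

```python
from typing import Iterable, Mapping


def filter_concepts(concepts: Iterable[Mapping], wanted: set, min_score: float = 0.5) -> dict:
    """Keep only the concepts of interest whose confidence is at least min_score."""
    return {
        c["name"]: c["value"]
        for c in concepts
        if c["name"] in wanted and c["value"] >= min_score
    }


# Hypothetical response trimmed to the fields used above (ASSUMED layout).
sample_response = {
    "outputs": [
        {
            "data": {
                "concepts": [
                    {"name": "dog", "value": 0.97},
                    {"name": "grass", "value": 0.91},
                    {"name": "cat", "value": 0.12},
                ]
            }
        }
    ]
}

if __name__ == "__main__":
    concepts = sample_response["outputs"][0]["data"]["concepts"]
    print(filter_concepts(concepts, wanted={"dog", "cat"}))
```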

With a single image per class, classification can be done by searching for the nearest match. That is to say, if we have one example of what we are looking for, we can use our prebuilt models to index a completely unstructured dataset and then sort our data by “visual similarity”.
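The nearest-match idea can be sketched as follows. It assumes each image has already been turned into an embedding vector by a pretrained model (the short vectors here are hypothetical stand-ins) and uses cosine similarity as the measure of “visual similarity”; Clarifai's own similarity measure may differ.

```python
import math
from typing import Dict, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify_one_shot(query: Sequence[float], exemplars: Dict[str, Sequence[float]]) -> Tuple[str, float]:
    """Label the query with the class whose single exemplar embedding is most similar."""
    return max(
        ((label, cosine_similarity(query, emb)) for label, emb in exemplars.items()),
        key=lambda pair: pair[1],
    )


if __name__ == "__main__":
    # One hypothetical embedding per class.
    exemplars = {"sneaker": [0.9, 0.1, 0.0], "boot": [0.1, 0.9, 0.2], "sandal": [0.2, 0.1, 0.9]}
    print(classify_one_shot([0.8, 0.2, 0.1], exemplars))
```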

With Visual Search we can use just one example of every type of image that we want to recognize, and instantly structure our data around the visual characteristics of this one sample.

This sort of technique has wide-ranging applications across many fields, and it ends up as part of end solutions and data preparation pipelines alike. Sometimes called “one-shot” learning, visual search works well when the query image resembles the sample images and all the images differ in the same ways (e.g., catalog images matched against other catalog images). This technique can be used to rapidly find other examples in an unlabeled dataset.
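Finding more examples in an unlabeled pool is the same comparison run in the other direction: score every pool item against the one query and keep the top matches. This is a brute-force sketch over hypothetical, precomputed embeddings; a large-scale visual search index would typically use an approximate nearest-neighbor structure instead of scanning everything, and `k` and `min_similarity` are illustrative parameters.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def unit(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else [0.0] * len(v)


def find_similar(
    query: Sequence[float],
    pool: Dict[str, Sequence[float]],
    k: int = 5,
    min_similarity: float = 0.0,
) -> List[Tuple[str, float]]:
    """Return up to k (item_id, similarity) pairs from the pool, most similar first."""
    q = unit(query)
    scored = (
        (item_id, sum(a * b for a, b in zip(q, unit(emb))))
        for item_id, emb in pool.items()
    )
    candidates = (pair for pair in scored if pair[1] >= min_similarity)
    return heapq.nlargest(k, candidates, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Hypothetical embeddings for an unlabeled pool of catalog images.
    pool = {"img_001": [1.0, 0.0], "img_002": [0.7, 0.7], "img_003": [0.0, 1.0]}
    for item_id, sim in find_similar([1.0, 0.1], pool, k=2):
        print(item_id, round(sim, 3))
```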

This one-shot learning approach does not work as well if there are lots of differences besides the "main" characteristic that we are looking for. For these cases it can be preferable to gather more example images and train a custom model.

The Clarifai platform enables us to perform transfer learning from any available pretrained model. Unlike training an entire network from scratch on a full dataset, which can take days of compute time, Clarifai's quick training can be done in seconds or minutes. We can then easily evaluate the trained model on the validation set that is held out during training.
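The core idea of quick transfer learning can be sketched without any deep learning framework: keep the pretrained model frozen, treat its embeddings as features, fit only a small classifier on top, and score it on a held-out validation split. A nearest-centroid classifier is used here purely because it is simple; it is a stand-in, not a description of how Clarifai trains custom models, and the data is synthetic.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[List[float], str]  # (frozen embedding, label)


def train_val_split(samples: Sequence[Sample], val_fraction: float = 0.2, seed: int = 0):
    """Shuffle deterministically and hold out a fraction of the samples for validation."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n_val = max(1, int(len(items) * val_fraction))
    return items[n_val:], items[:n_val]


def fit_centroids(train: Sequence[Sample]) -> Dict[str, List[float]]:
    """'Train' the head: average the frozen embeddings of each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for emb, label in train:
        if label not in sums:
            sums[label] = [0.0] * len(emb)
        sums[label] = [s + x for s, x in zip(sums[label], emb)]
        counts[label] += 1
    return {label: [s / counts[label] for s in vec] for label, vec in sums.items()}


def predict_label(centroids: Dict[str, List[float]], emb: Sequence[float]) -> str:
    """Choose the label whose centroid is nearest in squared Euclidean distance."""
    return min(centroids, key=lambda lbl: sum((c - x) ** 2 for c, x in zip(centroids[lbl], emb)))


def accuracy(centroids: Dict[str, List[float]], val: Sequence[Sample]) -> float:
    """Fraction of validation samples whose predicted label matches the true label."""
    return sum(predict_label(centroids, emb) == label for emb, label in val) / len(val)


if __name__ == "__main__":
    # Synthetic, well-separated "embeddings" for two hypothetical classes.
    data = [([i * 0.1, 0.0], "ok") for i in range(10)] + [([10 + i * 0.1, 10.0], "defect") for i in range(10)]
    train, val = train_val_split(data, val_fraction=0.2, seed=1)
    head = fit_centroids(train)
    print(f"validation accuracy: {accuracy(head, val):.2f}")
```

Because only the small head is fit while the pretrained network stays fixed, retraining is cheap, which is the intuition behind training in seconds or minutes rather than days.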

To put it another way, we can create new models that can understand which differences matter and which ones to ignore with just a small number of examples. Once trained, these new custom models behave just like Clarifai’s pretrained models and can be used to classify images.

Clarifai's custom training works well for a variety of scenarios and provides a "no code", "no data changes" way to train. The platform also handles many common issues automatically such as class imbalances, data augmentation, and exclusivity constraints.
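As an illustration of what handling class imbalance can involve (not a description of Clarifai's internals), one common technique is to weight each class inversely to its frequency so that rare classes contribute as much to the training loss as common ones. The formula below matches the “balanced” heuristic used by scikit-learn; the label counts are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class).

    Each class's weights then sum to the same total (n_samples / n_classes),
    and a perfectly balanced dataset gets a weight of 1.0 for every class.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


if __name__ == "__main__":
    # Hypothetical dataset: 90 "ok" images and 10 "defect" images.
    labels = ["ok"] * 90 + ["defect"] * 10
    print(balanced_class_weights(labels))
```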

Clarifai offers the tools and resources to get up and running quickly with very little data. In many cases, this means that we can build models that understand our dataset with mid-to-high levels of accuracy relatively easily. But what about cases that require higher levels of accuracy and performance than the prebuilt models can provide?

For such cases, Clarifai supports deep training, where users have direct control over deep network layers, model architectures, and model parameters. Deep training allows us to train the entire model graph to represent our unique data pipeline. Deep-trained models can deliver the most accurate and performant results, but they require much more data than the other techniques.

Since Clarifai gives us so many ways to quickly build a solution to a problem, we can and should deploy this solution right away. This is because a model in production can be used to collect, analyze and label the data that represents a unique business problem. This data can then be used to help us build a better model.

Active learning is the process by which we train our models to learn from the data in our unique use case. Once a model is deployed, we can use its predictions to help label our training data. This training data can then be used to build our own deep-trained model from the ground up.
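One widely used way to choose which production images deserve a human labeler's attention is uncertainty sampling. The sketch below uses the margin variant: images where the model's top two scores are closest are the ones it is least sure about, so they go to the front of the labeling queue, pre-filled with the model's best guess to speed up review. The prediction format and the `budget` parameter are assumptions for illustration, not a Clarifai API.

```python
from typing import Dict, List, Tuple


def margin(scores: Dict[str, float]) -> float:
    """Gap between the two highest class scores (a small gap means an uncertain prediction)."""
    top = sorted(scores.values(), reverse=True) + [0.0]  # pad so single-class dicts work
    return top[0] - top[1]


def select_for_labeling(predictions: Dict[str, Dict[str, float]], budget: int) -> List[Tuple[str, str]]:
    """Return (item_id, suggested_label) for the `budget` most uncertain items."""
    ranked = sorted(predictions.items(), key=lambda item: margin(item[1]))
    return [(item_id, max(scores, key=scores.get)) for item_id, scores in ranked[:budget]]


if __name__ == "__main__":
    # Hypothetical model outputs collected from production traffic.
    predictions = {
        "img_1": {"safe": 0.98, "nsfw": 0.02},
        "img_2": {"safe": 0.51, "nsfw": 0.49},
        "img_3": {"safe": 0.30, "nsfw": 0.70},
    }
    print(select_for_labeling(predictions, budget=2))
```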

The figure below outlines the procedure used to improve a Not Safe For Work (NSFW) model and avoid inappropriate content. If Clarifai didn't already have an NSFW model, the general model could be deployed and images tagged with terms like "drugs" or "gore" could be collected. For a given use case, some level of gore might be acceptable and some not, so images with that label could be relabeled and a new model trained on the new data. This new model will likely be much better than any model trained on data collected from a source other than the product stream. But even this model can be improved over time by collecting more data from real users. This is especially useful if the type of imagery changes over time, e.g., if images with sepia tones start to be used more frequently.

Machine learning AI has driven advances across diverse application domains by following a straightforward formula: search for improved model architectures, create large training data sets, and scale computation.

Given this pattern it is advantageous to deploy first. Clarifai automatically scales computation for us, offers a curated selection of the best model architectures, and makes the creation of large training data sets easier than ever before. Clarifai supports us through the full AI lifecycle so that we can build the best possible models for the data that our businesses are actually working with.

© 2023 Clarifai, Inc. Terms of Service Content TakedownPrivacy Policy
