Recently, deep convolutional neural networks (DCNNs) have attracted much attention in remote sensing. Deep learning-based methods have been adopted for a variety of synthetic aperture radar (SAR) image tasks, including object detection (automated target recognition), land cover classification, change detection, and data augmentation. To transfer CNN experience and expertise from optical imagery to SAR, we need to understand the differences between optical imagery and SAR data [2].

SAR imagery provides information about what’s on the ground, but distortions and speckle make these images look very different from optical images. Because SAR data is slightly less intuitive than optical data, it poses a challenge for both human labelers and models trying to classify features correctly. As a result, compared with the large-scale annotated datasets available for natural images, the lack of labeled data in remote sensing is an obstacle to training deep networks, especially for SAR image interpretation. Transfer learning offers an effective way around this problem by borrowing knowledge from a source task for use in a target task, but the general conclusions about transfer learning drawn from natural images do not fully carry over to SAR targets. Analyzing what and where to transfer in SAR target recognition therefore helps decide whether transfer learning should be used and how to transfer more effectively [3].

SAR Imagery Classification

Transfer Learning

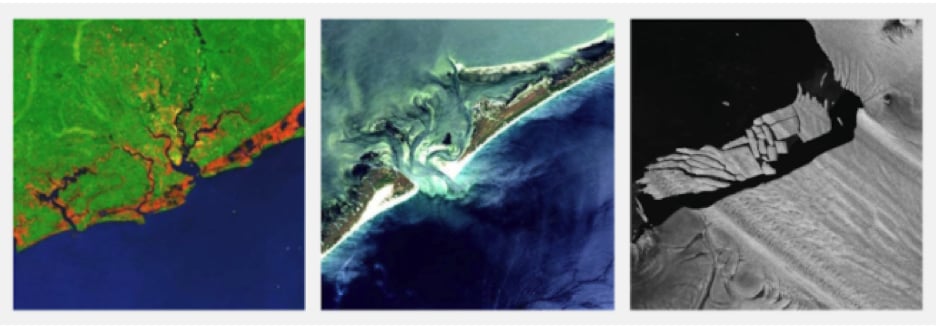

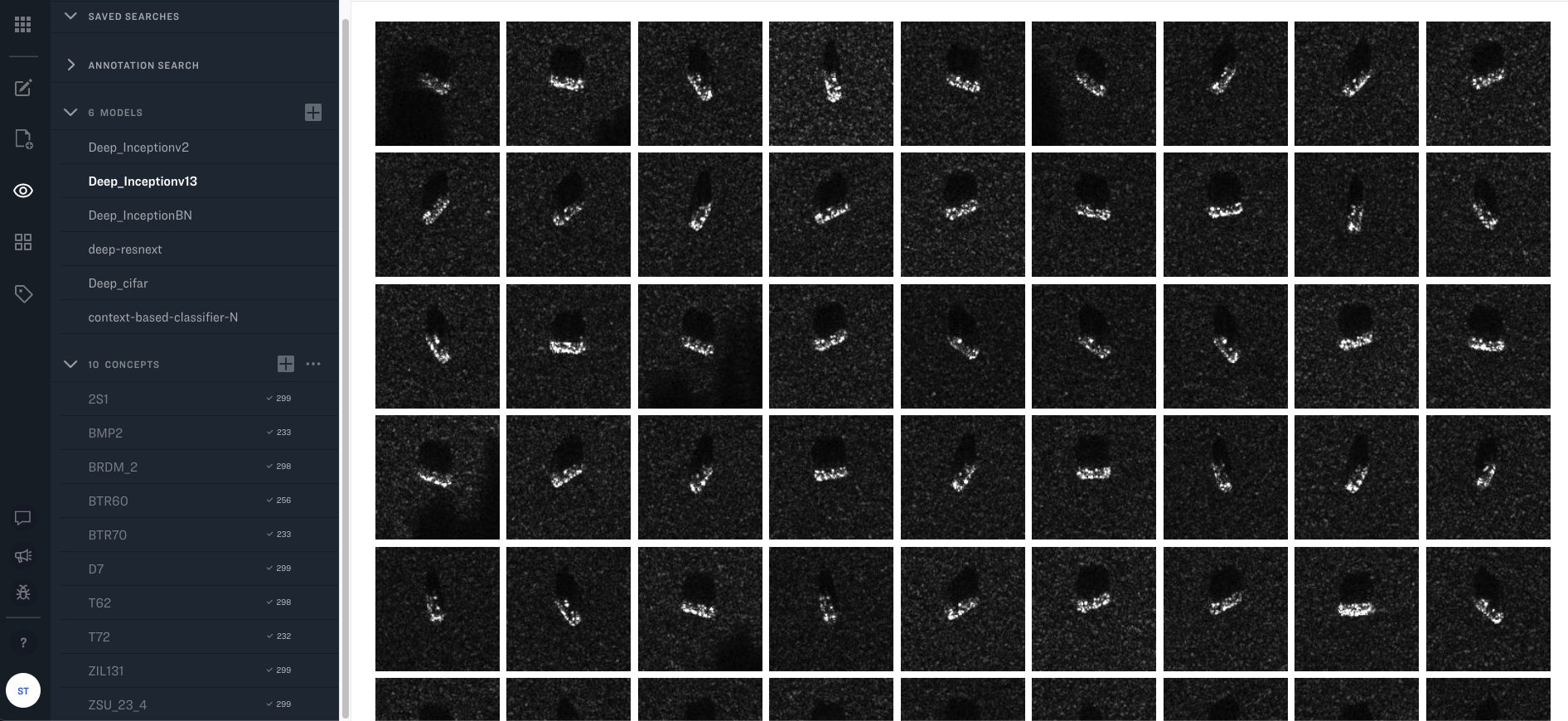

We use the Clarifai platform for SAR imagery classification. The platform lets us run multiple tests simultaneously and compare the results quickly: we can upload any available SAR dataset and then try different classification schemes. We run tests on the Moving and Stationary Target Acquisition and Recognition (MSTAR10) dataset [4], which contains SAR image chips of 10 different vehicle types and is used in many SAR classification publications. We also upload TenGeoP-SARwv [5], which contains labeled SAR images of ten geophysical phenomena acquired in Sentinel-1 wave mode.

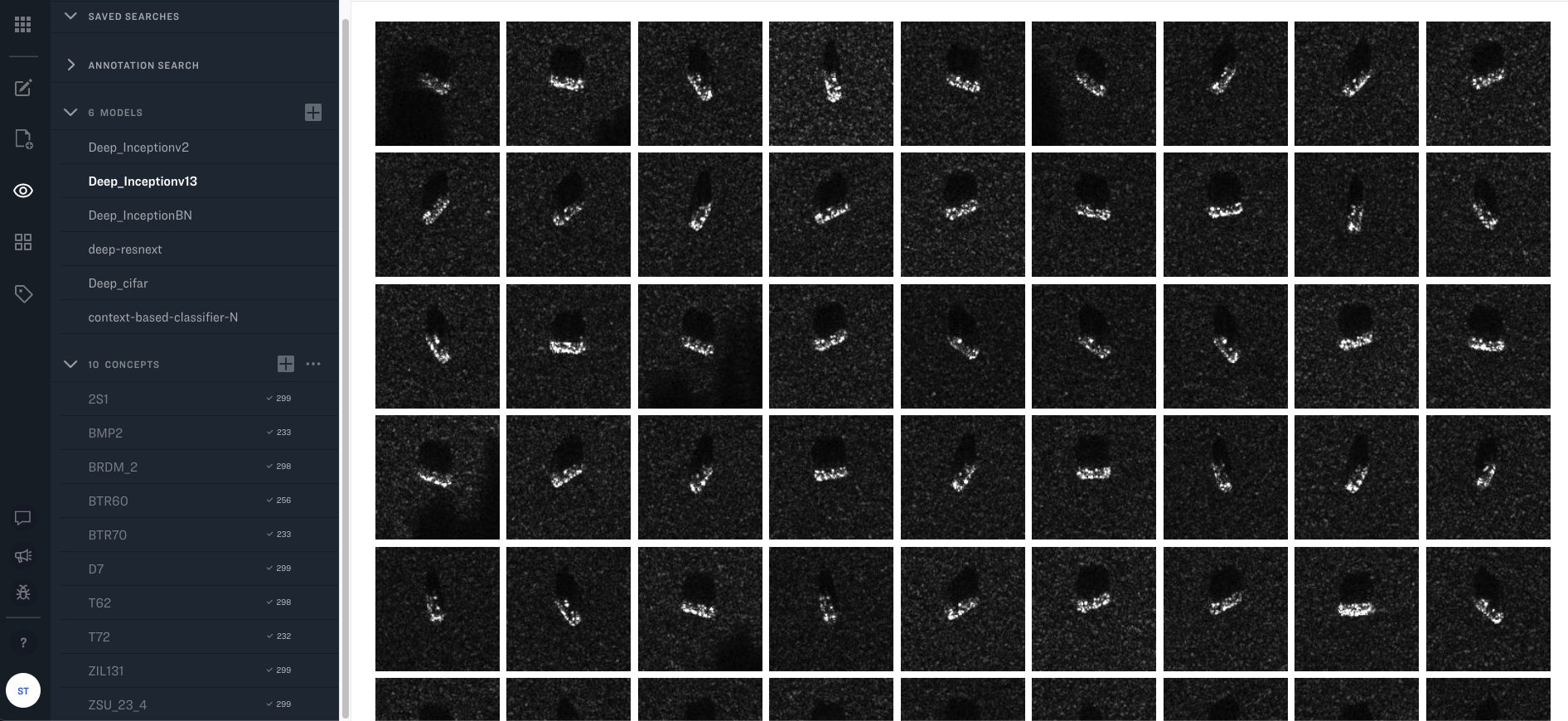

Fig. 1. MSTAR10 dataset exploration in the Clarifai Portal

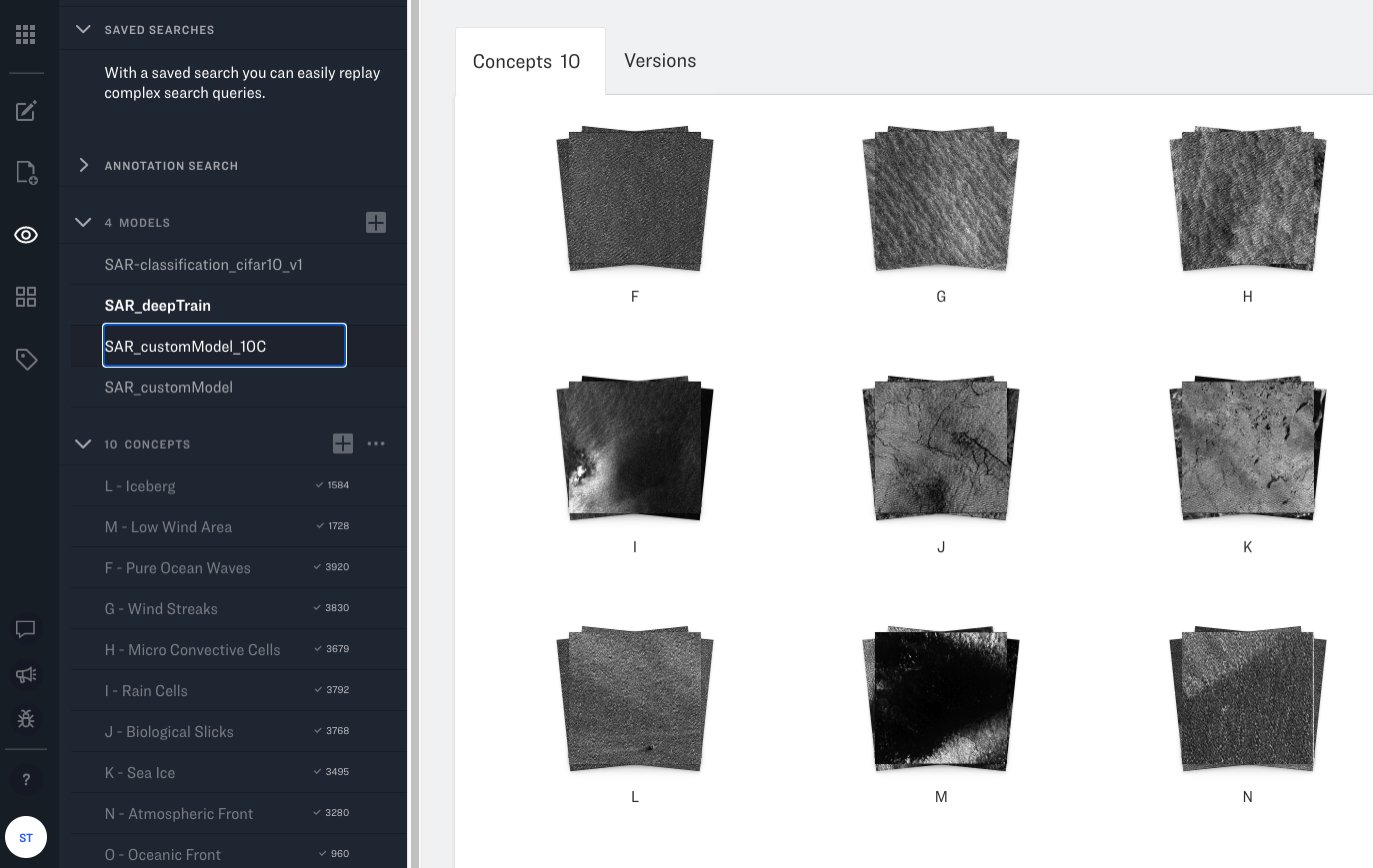

Fig. 2. Different classes of the TenGeoP-SARwv dataset

We start with transfer learning from Clarifai's General model. The Clarifai platform lets us perform transfer learning from any available pretrained model. Whereas training a whole network from scratch on the entire dataset can take days of compute time, Clarifai's quick training finishes in seconds or minutes. We can then evaluate the trained model on the validation set that is held out during training.
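This post does not describe how Clarifai's quick training works internally, so the following is only a sketch of one common form of transfer learning, a linear probe: the pretrained network is frozen and used as a feature extractor, and only a small softmax classifier is fit on its embeddings. The embeddings here are random stand-ins for what a pretrained model such as the General model might output, and `train_linear_head` and `predict_proba` are hypothetical helper names, not part of any Clarifai API.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def train_linear_head(embeddings, labels, num_classes, lr=0.1, epochs=200, seed=0):
    """Fit a softmax classifier on frozen embeddings with full-batch gradient descent.

    Assumes roughly standardized embeddings. The backbone that produced them is
    never updated; only the head's weights W and bias b are learned.
    """
    rng = np.random.default_rng(seed)
    n, dim = embeddings.shape
    W = rng.normal(scale=0.01, size=(dim, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(epochs):
        probs = softmax(embeddings @ W + b)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        W -= lr * embeddings.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b


def predict_proba(embeddings, W, b):
    """Class probabilities for new embeddings under the trained head."""
    return softmax(embeddings @ W + b)


if __name__ == "__main__":
    # Hypothetical stand-in for embeddings from a frozen pretrained backbone:
    # three well-separated clusters in a 16-dimensional feature space.
    rng = np.random.default_rng(42)
    centers = rng.normal(scale=3.0, size=(3, 16))
    labels = rng.integers(0, 3, size=300)
    feats = centers[labels] + rng.normal(size=(300, 16))
    feats = (feats - feats.mean(axis=0)) / feats.std(axis=0)
    # Hold out the last 60 samples as a validation set.
    W, b = train_linear_head(feats[:240], labels[:240], num_classes=3)
    val_acc = (predict_proba(feats[240:], W, b).argmax(axis=1) == labels[240:]).mean()
    print(f"validation accuracy: {val_acc:.3f}")
```

Because only the small head is optimized, training takes a fraction of the time needed to train the full network, which is the trade-off the paragraph above describes.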

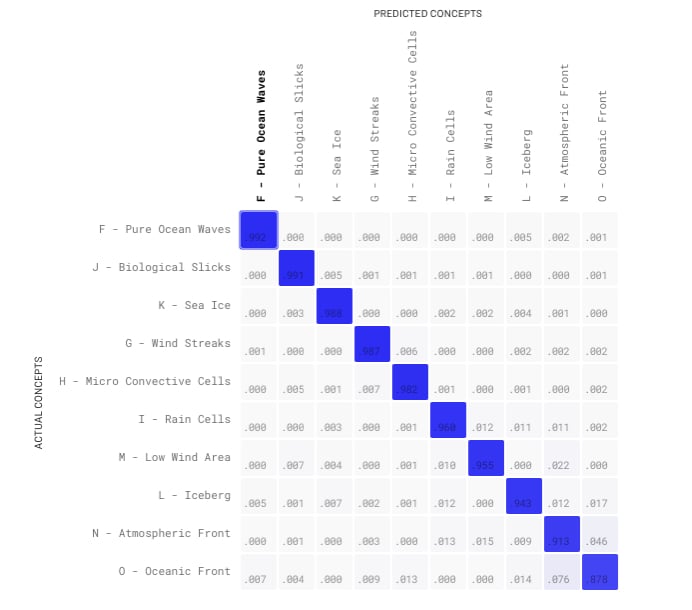

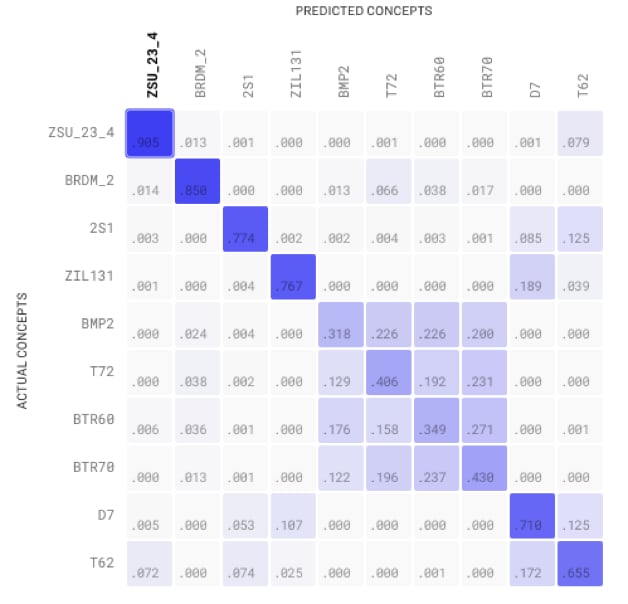

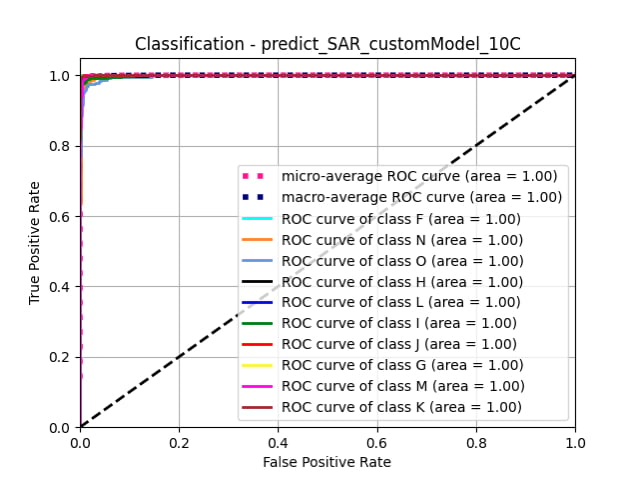

We upload each dataset, along with its ground-truth labels, into an App on the Clarifai platform. The App lets us explore each dataset and its classes in explorer mode, as shown in Fig. 1 and Fig. 2. For each dataset, we train a custom model by transferring knowledge from Clarifai’s General model. After training, we evaluate the model using the statistics provided in the App, including the confusion matrix and the area under the ROC curve (AUC). The evaluation is done on the holdout validation set.
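The App computes these statistics for us. To make the confusion matrix concrete, here is a minimal NumPy sketch with our own hypothetical helper names (not Clarifai's API). We assume rows index ground-truth classes and columns index predicted classes; the App's display may orient or normalize the matrix differently.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Count matrix: rows are ground-truth classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    # np.add.at accumulates correctly even when the same (row, col) pair repeats.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def row_normalize(cm):
    """Divide each row by its total; the diagonal then holds per-class recall."""
    totals = cm.sum(axis=1, keepdims=True)
    # Rows with no samples stay at zero instead of dividing by zero.
    return np.divide(cm, totals, out=np.zeros(cm.shape), where=totals > 0)


if __name__ == "__main__":
    y_true = [0, 0, 1, 1, 2, 2]
    y_pred = [0, 1, 1, 1, 2, 0]
    cm = confusion_matrix(y_true, y_pred, num_classes=3)
    print(cm)
    print(row_normalize(cm))
```

In this toy example, class 1 is always predicted correctly, while half of class 0 is mistaken for class 1 and half of class 2 for class 0.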

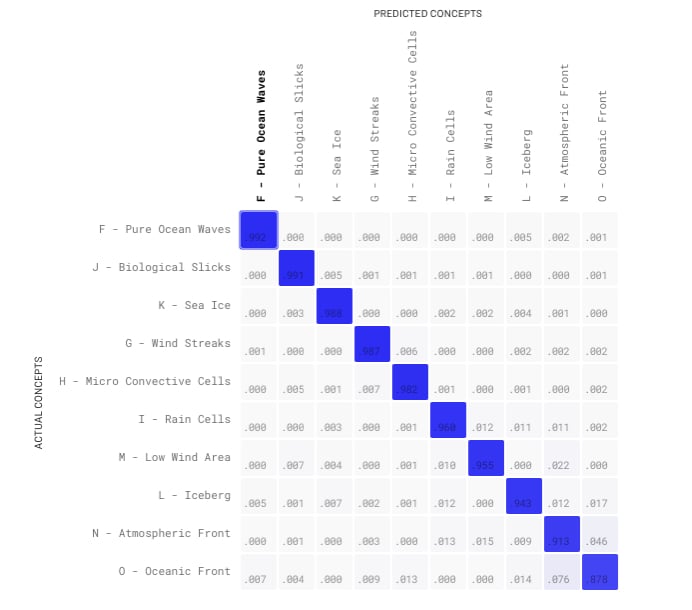

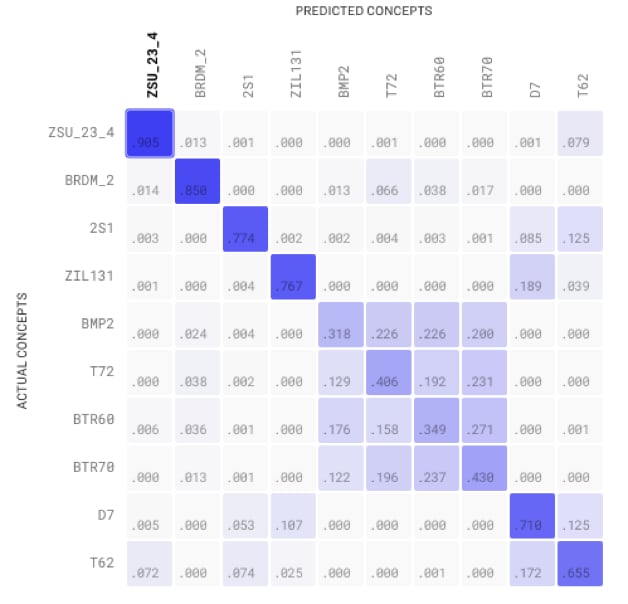

Table 1 shows the confusion matrices and AUC values for both datasets. Based on the AUC values alone, we can quickly conclude that transfer learning from a model trained on optical imagery works better for the TenGeoP-SARwv dataset (AUC 0.9994) than for the MSTAR10 dataset (AUC 0.9691).
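The post does not say how the multiclass AUC in Table 1 is averaged. A common convention, assumed here, is to compute a one-vs-rest AUC for each class and average them (macro average). The sketch below uses the rank interpretation of AUC: the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one. The helper names are hypothetical.

```python
import numpy as np


def binary_auc(scores, is_positive):
    """P(random positive outscores random negative); ties count as one half."""
    scores = np.asarray(scores, dtype=float)
    is_positive = np.asarray(is_positive, dtype=bool)
    pos, neg = scores[is_positive], scores[~is_positive]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("need at least one positive and one negative sample")
    # Compare every positive with every negative; fine for validation-sized sets.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


def macro_ovr_auc(probs, y_true):
    """Average of the one-vs-rest AUCs of all classes."""
    probs = np.asarray(probs, dtype=float)
    y_true = np.asarray(y_true)
    aucs = [binary_auc(probs[:, k], y_true == k) for k in range(probs.shape[1])]
    return float(np.mean(aucs))


if __name__ == "__main__":
    probs = [[0.8, 0.2], [0.6, 0.4], [0.3, 0.7], [0.1, 0.9]]
    print(macro_ovr_auc(probs, [0, 0, 1, 1]))
```

An AUC near 1, as for TenGeoP-SARwv, means that for almost every class the model ranks its true samples above the others.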

The confusion matrices give a better idea of which classes perform well and which do not. For the TenGeoP-SARwv dataset, the trained model performs almost perfectly on all classes. For the MSTAR10 dataset, some classes, e.g. the anti-aircraft weapon system (ZSU_23_4), are easy for the trained model to distinguish from the others, but there is substantial confusion among other classes, such as the different armored vehicles (BMP2, T72, BTR60 and BTR70).
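To find confused class pairs like these without reading the matrix cell by cell, one option is to rank the off-diagonal entries. This is a minimal sketch with a hypothetical helper name; the matrix in the usage example is invented for illustration and is not one of our results.

```python
import numpy as np


def top_confusions(cm, class_names, k=3):
    """Return up to k largest off-diagonal cells as (true class, predicted class, count)."""
    off_diag = np.asarray(cm).copy()
    np.fill_diagonal(off_diag, 0)  # ignore correct predictions
    order = np.argsort(off_diag, axis=None)[::-1]  # flat indices, largest count first
    pairs = []
    for idx in order[:k]:
        i, j = np.unravel_index(idx, off_diag.shape)
        if off_diag[i, j] == 0:
            break  # no remaining confusions
        pairs.append((class_names[i], class_names[j], int(off_diag[i, j])))
    return pairs


if __name__ == "__main__":
    toy_cm = [[50, 7, 0], [2, 45, 1], [0, 0, 60]]  # invented counts
    for true_cls, pred_cls, count in top_confusions(toy_cm, ["A", "B", "C"]):
        print(f"{true_cls} predicted as {pred_cls}: {count}")
```

Summing `cm[i, j] + cm[j, i]` instead would rank unordered pairs, which is closer to the idea of two classes being "confused with each other".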

Table 1. Custom training by transfer learning from the General model

| Dataset | AUC | Confusion Matrices on Validation Data |
|---|---|---|
| TenGeoP-SARwv | 0.9994 | |
| MSTAR10 | 0.9691 | |

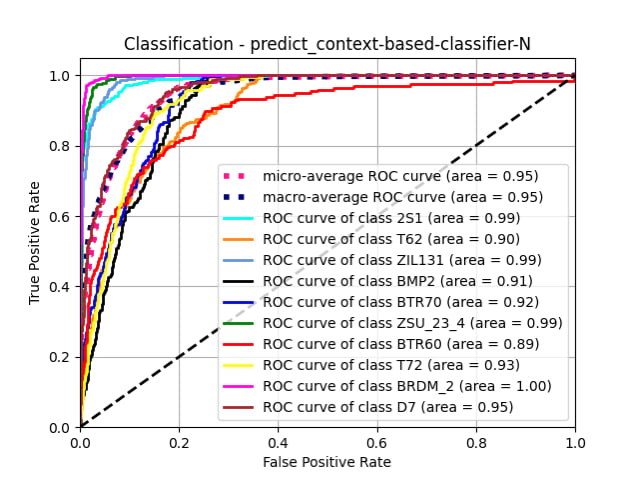

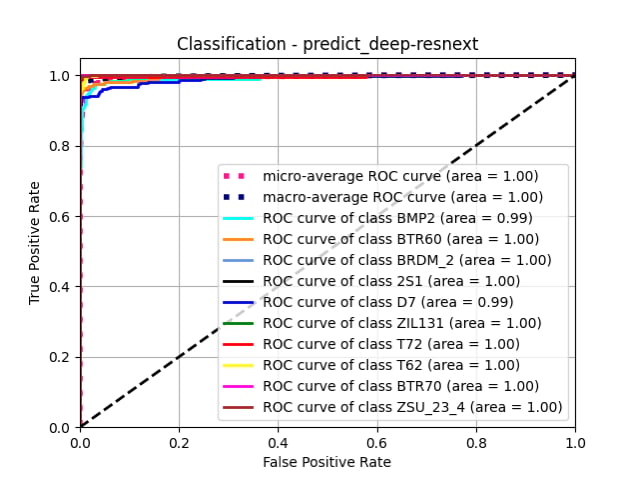

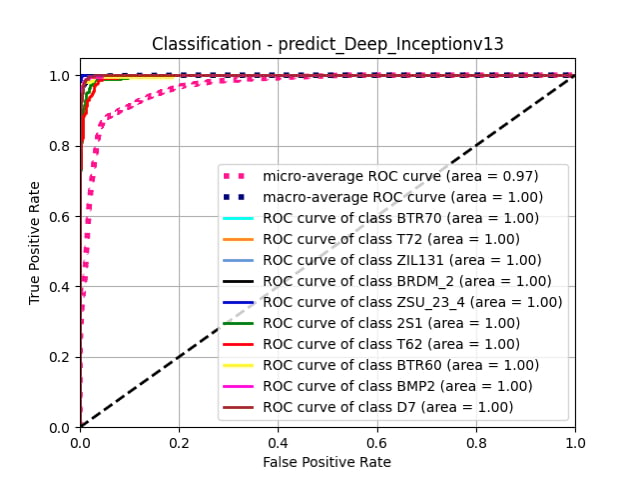

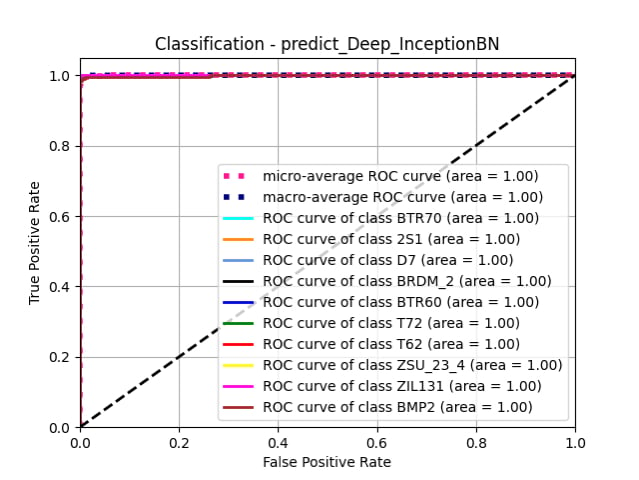

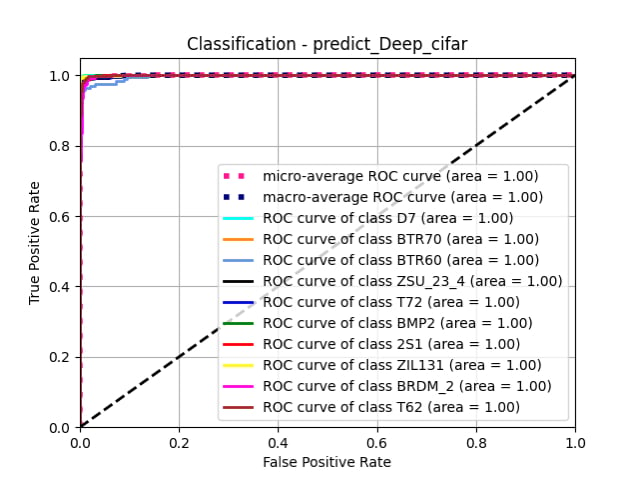

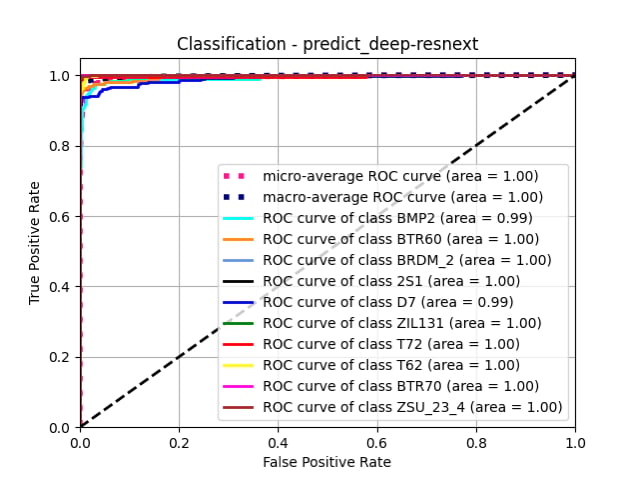

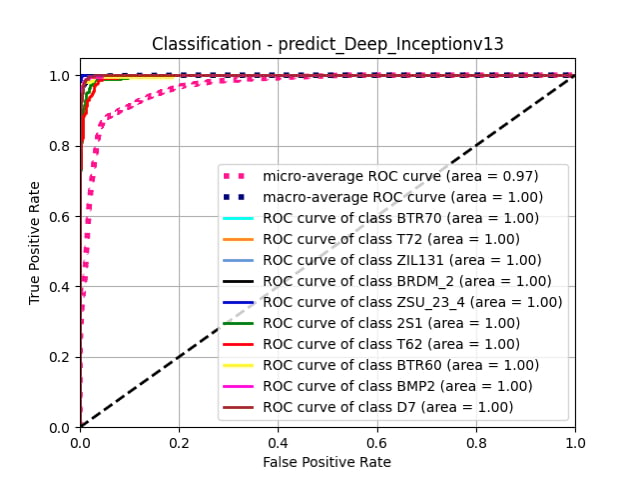

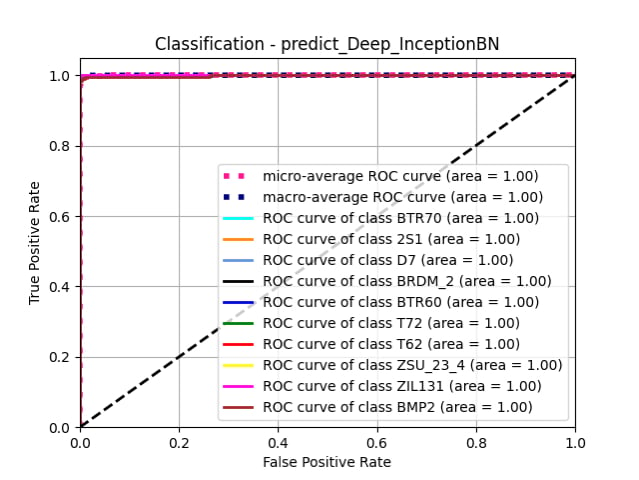

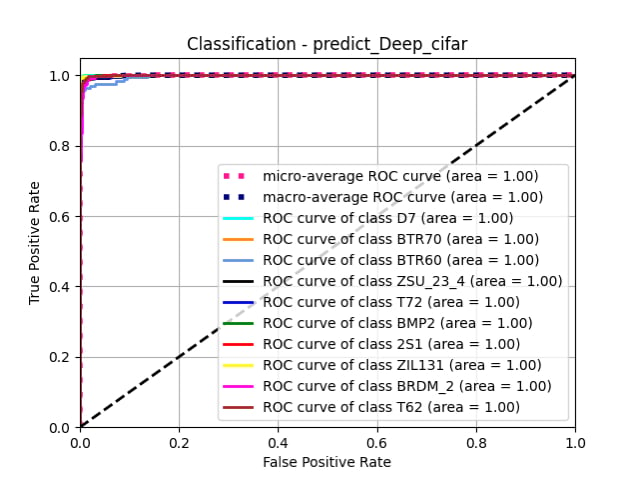

To compare with results reported in the literature, we also evaluate the trained models on the test sets by running inference on each image. The ROC curves are shown in Fig. 3, and the results agree with the validation results.
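Assuming the per-image scores for one class have been collected from inference on a test set, a one-vs-rest ROC curve like those in Fig. 3 can be traced by sweeping a decision threshold from the highest score to the lowest. This sketch uses hypothetical helper names; tied scores are merged into a single point, and the area under the curve is computed with the trapezoidal rule.

```python
import numpy as np


def roc_curve_points(scores, is_positive):
    """Return (fpr, tpr) arrays for thresholds swept from high to low score."""
    scores = np.asarray(scores, dtype=float)
    is_positive = np.asarray(is_positive, dtype=bool)
    order = np.argsort(-scores, kind="mergesort")  # descending, stable
    sorted_scores, sorted_pos = scores[order], is_positive[order]
    tps = np.cumsum(sorted_pos)   # true positives above each threshold
    fps = np.cumsum(~sorted_pos)  # false positives above each threshold
    # Keep the last index of each run of tied scores so ties share one point.
    last_of_run = np.r_[np.nonzero(np.diff(sorted_scores))[0], len(scores) - 1]
    tpr = np.r_[0.0, tps[last_of_run] / sorted_pos.sum()]
    fpr = np.r_[0.0, fps[last_of_run] / (~sorted_pos).sum()]
    return fpr, tpr


def auc_from_roc(fpr, tpr):
    """Trapezoidal area under the ROC curve."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))


if __name__ == "__main__":
    fpr, tpr = roc_curve_points([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])
    print(fpr, tpr, auc_from_roc(fpr, tpr))
```

For a multiclass test set, this would be repeated once per class, treating that class as positive and all others as negative.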

Fig. 3. Transfer-learned models on MSTAR10 (left) and TenGeoP-SARwv (right). The ROC curves are computed on the test sets.

Deep Model Training

The next step is to train classification models on SAR datasets from scratch, without transferring any knowledge from other datasets. The questions now are which neural network architecture to pick, and whether the best choice varies across datasets. Again, the Clarifai platform makes it easy to deep-train various model architectures and compare their performance on different datasets. We ran this experiment on the MSTAR10 dataset only, because quick training already worked quite well on TenGeoP-SARwv. Regardless of the architecture, deep training improves on transfer learning for this dataset.
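The architectures themselves are trained on the Clarifai platform. The sketch below only illustrates the comparison step under a simple assumption: every candidate is scored with the same metric (plain accuracy here) on the same held-out split, so the ranking reflects the architecture rather than the evaluation setup. `rank_models` and the predictor functions are hypothetical placeholders for trained models.

```python
import numpy as np


def rank_models(predict_fns, x_val, y_val):
    """Score every model on the same held-out set; return (name, accuracy), best first."""
    y_val = np.asarray(y_val)
    results = []
    for name, predict in predict_fns.items():
        preds = np.asarray(predict(x_val))
        results.append((name, float((preds == y_val).mean())))
    return sorted(results, key=lambda r: r[1], reverse=True)


if __name__ == "__main__":
    # Placeholder "models": simple functions standing in for trained networks.
    x_val = np.arange(4)
    y_val = [0, 1, 0, 1]
    candidates = {
        "always_zero": lambda x: np.zeros(len(x), dtype=int),
        "parity": lambda x: x % 2,
    }
    print(rank_models(candidates, x_val, y_val))
```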

Deep-trained models (trained from scratch with no knowledge transfer) on MSTAR10. Panels: ResNeXt architecture, Inception Net, Inception Net with Batch Norm., Cifar10 architecture.

What’s next

Despite some successful preliminary research, and in contrast to optical data, the huge potential of deep learning for SAR is still largely untapped. Our goal at Clarifai is to add more SAR datasets to our repository and make more pretrained models available to the public, an important step toward advancing research in this area.

References

[1] Deep Learning Meets SAR. https://arxiv.org/pdf/2006.10027.pdf

[2] An Introduction to Synthetic Aperture Radar: a High-Resolution Alternative to Optical Imaging. https://digitalcommons.usu.edu/cgi/viewcontent.cgi?article=1012&context=spacegrant

[3] What, Where and How to Transfer in SAR Target Recognition Based on Deep CNNs. https://arxiv.org/pdf/1906.01379.pdf

[4] MSTAR. https://www.sdms.afrl.af.mil/index.php?collection=mstar

[5] Labeled SAR imagery dataset of ten geophysical phenomena from Sentinel-1 wave mode (TenGeoP-SARwv). https://www.seanoe.org/data/00456/56796/